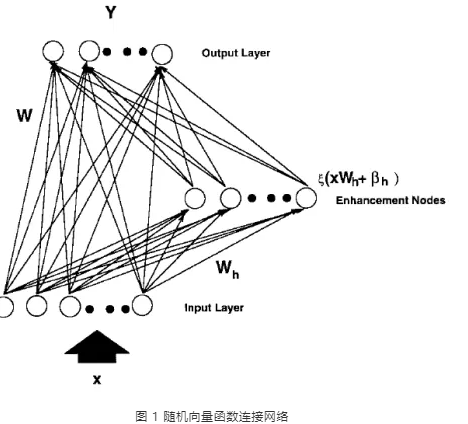

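To address these problems, some researchers have proposed an alternative to deep structures: the "flat" neural network, which grows horizontally by adding neuron nodes. Typical examples are the single-layer feedforward network (SLFN) and the random vector functional-link neural network (RVFL). However, SLFNs are sensitive to parameter settings, tend to get trapped in local optima, and converge slowly. A typical RVFL structure is shown in Figure 1. Its drawback is that the raw data is fed directly into the network, so the network becomes impractical when the data dimensionality is very high.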
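As a rough illustration of the RVFL idea, the sketch below builds random, untrained hidden nodes, concatenates them with the raw input (the direct link), and solves only the output weights in closed form. The function names `rvfl_fit` and `rvfl_predict`, the `tanh` activation, the Gaussian weight initialization, and the ridge parameter `reg` are assumptions made for this example, not details taken from the text above.

```python
import numpy as np

def rvfl_fit(X, Y, n_hidden=100, reg=1e-3, seed=0):
    """Fit a minimal RVFL: random hidden layer plus direct input-output link."""
    rng = np.random.default_rng(seed)
    # Hidden weights and biases are drawn once and never trained.
    W = rng.standard_normal((X.shape[1], n_hidden))
    b = rng.standard_normal(n_hidden)
    H = np.tanh(X @ W + b)
    # Direct link: the raw input is concatenated with the hidden nodes,
    # so the width of D grows with the input dimension.
    D = np.hstack([X, H])
    # Only the output weights are learned, via ridge regression.
    beta = np.linalg.solve(D.T @ D + reg * np.eye(D.shape[1]), D.T @ Y)
    return W, b, beta

def rvfl_predict(X, W, b, beta):
    """Apply the same random expansion and the learned output weights."""
    H = np.tanh(X @ W + b)
    return np.hstack([X, H]) @ beta
```

Because `D` contains the raw input columns, the linear system being solved grows with the input dimension, which is one way to see why feeding high-dimensional data directly into the network becomes costly.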

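Building on the RVFL, Prof. C. L. Philip Chen of South China University of Technology and colleagues proposed the Broad Learning System (BLS). Unlike the RVFL, BLS first maps the raw input to a feature space, and it provides three incremental learning algorithms for efficiently retraining the network in different situations.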
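The following is a minimal sketch of these two ideas, under simplifying assumptions. `bls_nodes` maps the input to random feature nodes `Z` and then expands them into enhancement nodes `H`. It uses plain random projections and omits any fine-tuning of the feature mapping. `add_columns` illustrates one incremental case, appending new enhancement nodes, by updating the pseudoinverse with a Greville-style block formula instead of recomputing it. The function names, the `tanh` activation, the node counts, and the use of an exact pseudoinverse (rather than a ridge-regularized approximation) are choices made for this example.

```python
import numpy as np

def bls_nodes(X, n_feature=10, n_enhance=20, seed=0):
    """Random feature nodes Z and enhancement nodes H (simplified)."""
    rng = np.random.default_rng(seed)
    # Feature nodes: random linear map of the raw input.
    Wf = rng.standard_normal((X.shape[1], n_feature)) / np.sqrt(X.shape[1])
    Z = X @ Wf
    # Enhancement nodes: nonlinear expansion of the feature nodes,
    # not of the raw input.
    We = rng.standard_normal((n_feature, n_enhance))
    H = np.tanh(Z @ We)
    return Z, H

def add_columns(A, A_pinv, W, H_new, Y):
    """Update pinv(A) and output weights W after appending columns H_new.

    Assumes the part of H_new outside the column space of A (matrix C)
    has full column rank.
    """
    D = A_pinv @ H_new
    C = H_new - A @ D
    B_T = np.linalg.pinv(C)
    A_new = np.hstack([A, H_new])
    A_new_pinv = np.vstack([A_pinv - D @ B_T, B_T])
    # Old weights are corrected; the new rows are the weights of the new nodes.
    W_new = np.vstack([W - D @ (B_T @ Y), B_T @ Y])
    return A_new, A_new_pinv, W_new

# Usage: train once, then widen the network without a full retrain.
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, (300, 30))
Y = rng.standard_normal((300, 2))
Z, H = bls_nodes(X)
A = np.hstack([Z, H])
A_pinv = np.linalg.pinv(A)
W = A_pinv @ Y
H_new = np.tanh(Z @ rng.standard_normal((Z.shape[1], 10)))
A2, A2_pinv, W2 = add_columns(A, A_pinv, W, H_new, Y)
```

The update only needs a pseudoinverse of the small residual matrix `C`, which is why widening the network this way can be cheaper than refitting all output weights from scratch.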

The references are listed below:

[1] C. L. P. Chen and Z. Liu, "Broad Learning System: An Effective and Efficient Incremental Learning System Without the Need for Deep Architecture," in IEEE Transactions on Neural Networks and Learning Systems, vol. 29, no. 1, pp. 10-24, Jan. 2018, doi: 10.1109/TNNLS.2017.2716952.

[2] J. Du, C.-M. Vong and C. L. P. Chen, "Novel Efficient RNN and LSTM-Like Architectures: Recurrent and Gated Broad Learning Systems and Their Applications for Text Classification," in IEEE Transactions on Cybernetics, vol. 51, no. 3, pp. 1586-1597, March 2021, doi: 10.1109/TCYB.2020.2969705.

[3] C. L. P. Chen, Z. Liu and S. Feng, "Universal Approximation Capability of Broad Learning System and Its Structural Variations," in IEEE Transactions on Neural Networks and Learning Systems, vol. 30, no. 4, pp. 1191-1204, April 2019, doi: 10.1109/TNNLS.2018.2866622.

[4] H. Ye, H. Li and C. L. P. Chen, "Adaptive Deep Cascade Broad Learning System and Its Application in Image Denoising," in IEEE Transactions on Cybernetics, vol. 51, no. 9, pp. 4450-4463, Sept. 2021, doi: 10.1109/TCYB.2020.2978500.

[5] Z. Liu, C. L. P. Chen, S. Feng, Q. Feng and T. Zhang, "Stacked Broad Learning System: From Incremental Flatted Structure to Deep Model," in IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 51, no. 1, pp. 209-222, Jan. 2021, doi: 10.1109/TSMC.2020.3043147.
