论文地址:https://arxiv.org/pdf/2207.10397.pdf

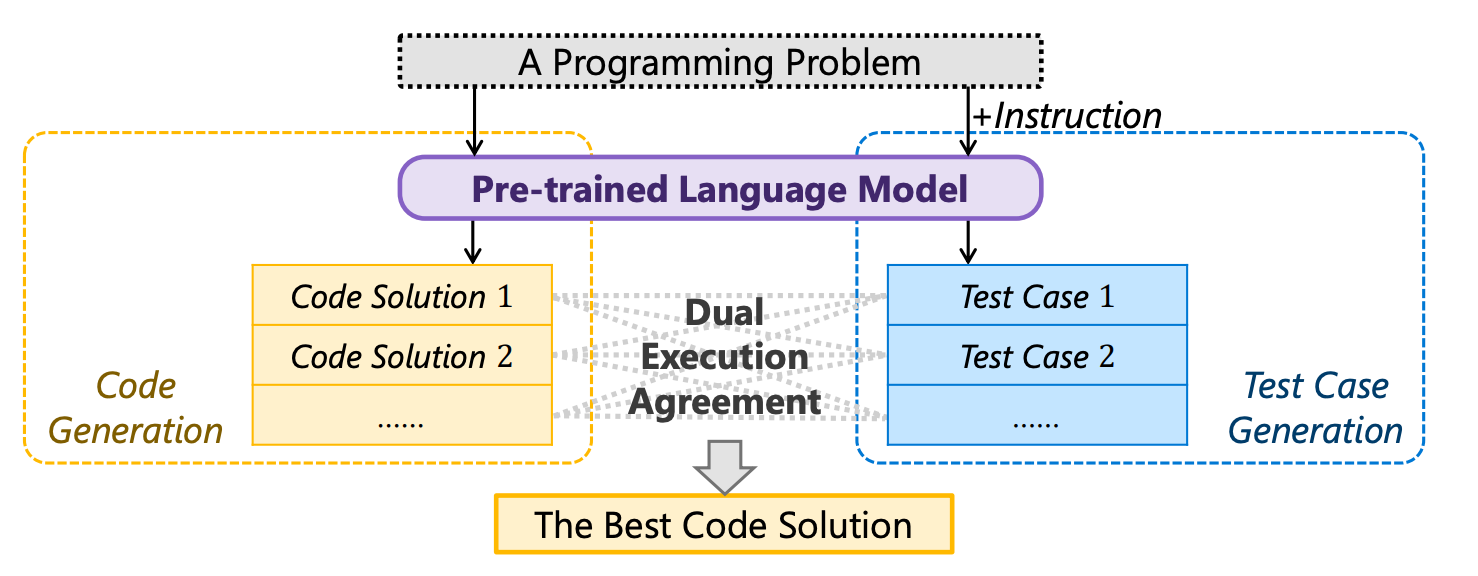

摘要:对于一个编程问题,预训练的语言模型(例如 Codex)已经展示了通过采样生成多种不同代码解决方案的能力。然而,从这些样本中选择正确或最佳的解决方案仍然是一个挑战。虽然验证代码解决方案正确性的一种简单方法是执行测试用例,但生成高质量的测试用例成本高得令人望而却步。在本文中,我们探索使用预训练的语言模型来自动生成测试用例,调用我们的方法 CodeT: Code generation with generated Tests。 CodeT 使用生成的测试用例执行代码解决方案,然后根据与生成的测试用例和其他生成的解决方案的双重执行协议选择最佳解决方案。我们使用 HumanEval 和 MBPP 基准在五个不同的预训练模型上评估 CodeT。大量的实验结果表明,CodeT 可以比以前的方法实现显着、一致和令人惊讶的改进。例如,CodeT 将 HumanEval 上的 pass@1 提高到 65.8%,在 code-davinci-002 模型上绝对提高了 18.8%,并且比之前最先进的结果绝对提高了 20+%。

Given a programming problem, pre-trained language models such as Codex have demonstrated the ability to generate multiple different code solutions via sampling. However, selecting a correct or best solution from those samples still remains a challenge. While an easy way to verify the correctness of a code solution is through executing test cases, producing high-quality test cases is prohibitively expensive. In this paper, we explore the use of pre-trained language models to automatically generate test cases, calling our method CodeT: Code generation with generated Tests. CodeT executes the code solutions using the generated test cases, and then chooses the best solution based on a dual execution agreement with both the generated test cases and other generated solutions. We evaluate CodeT on five different pre-trained models with both HumanEval and MBPP benchmarks. Extensive experimental results demonstrate CodeT can achieve significant, consistent, and surprising improvements over previous methods. For example, CodeT improves the pass@1 on HumanEval to 65.8%, an increase of absolute 18.8% on the code-davinci-002 model, and an absolute 20+% improvement over previous state-of-the-art results.

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢