From today's 爱可可 AI frontier picks.

[LG] The Forward-Forward Algorithm: Some Preliminary Investigations

G Hinton

[Google Brain]

Recommended reading: Geoffrey Hinton's latest talk: the Forward-Forward neural network training algorithm (NeurIPS 2022 paper)


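Summary: This paper proposes the Forward-Forward algorithm, a new learning procedure for neural networks that uses two forward passes, one on real (positive) data and one on negative data. The objective is to raise the "goodness" of real data and lower the "goodness" of negative data. The paper suggests that the negative passes could be done offline, which would let video and other data be pipelined through the network without storing activities or stopping to propagate derivatives.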

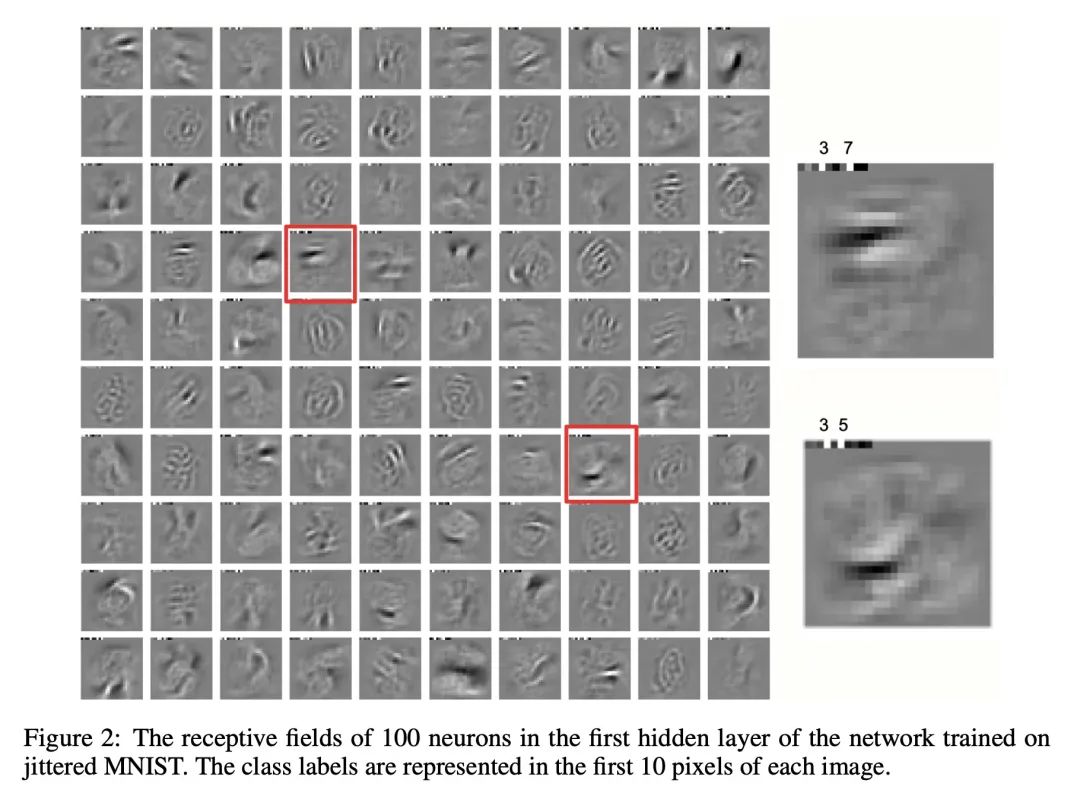

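Abstract: This paper introduces a new learning procedure for neural networks and shows that it works well enough on a few small problems to merit serious investigation. The Forward-Forward algorithm replaces the forward and backward passes of backpropagation with two forward passes: one on positive (i.e. real) data, and one on negative data, which the network itself could generate. Each layer has its own objective function: high "goodness" for positive data and low "goodness" for negative data. The sum of the squared activities in a layer can serve as the goodness, but there are many other options, including the negative of that sum. If the positive and negative passes can be separated in time, the negative passes can be done offline. This makes learning in the positive pass much simpler and lets video be pipelined through the network without storing activities or stopping to propagate derivatives.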
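For reference, the two goodness choices named in the abstract can be written out explicitly. The notation here is an assumption made for illustration: $y_j$ is the activity of hidden unit $j$ in a given layer, and $G$ is that layer's goodness.

$$
G = \sum_j y_j^2 \qquad \text{or} \qquad G = -\sum_j y_j^2
$$

Under the first choice, the layer learns to make its squared activity large on positive data and small on negative data. Under the second, the roles flip: squared activity is kept small on positive data and large on negative data.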

Link: https://cs.toronto.edu/~hinton/FFA13.pdf

The aim of this paper is to introduce a new learning procedure for neural networks and to demonstrate that it works well enough on a few small problems to be worth serious investigation. The Forward-Forward algorithm replaces the forward and backward passes of backpropagation by two forward passes, one with positive (i.e. real) data and the other with negative data which could be generated by the network itself. Each layer has its own objective function which is simply to have high goodness for positive data and low goodness for negative data. The sum of the squared activities in a layer can be used as the goodness but there are many other possibilities, including minus the sum of the squared activities. If the positive and negative passes can be separated in time, the negative passes can be done offline, which makes the learning much simpler in the positive pass and allows video to be pipelined through the network without ever storing activities or stopping to propagate derivatives.
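To make the per-layer objective concrete, here is a minimal NumPy sketch of one layer trained with a positive pass and a negative pass. It is an illustration under stated assumptions, not the paper's implementation. The logistic loss on `goodness - theta`, the threshold `theta`, the learning rate, the ReLU layer, and the toy Gaussian data standing in for positive and negative examples are all choices made for this sketch. The helper names `goodness` and `ff_layer_step` are hypothetical.

```python
import numpy as np

def goodness(h):
    # Goodness as named in the abstract: sum of squared activities, per example.
    return np.sum(h ** 2, axis=1)

def ff_layer_step(W, x_pos, x_neg, theta=2.0, lr=0.03):
    # One local update for a single ReLU layer. theta and lr are assumed values.
    grad = np.zeros_like(W)
    for x, sign in ((x_pos, 1.0), (x_neg, -1.0)):
        h = np.maximum(x @ W, 0.0)  # forward pass through this layer only
        # Assumed loss: log(1 + exp(-z)) with z = sign * (goodness - theta),
        # so positive data is pushed above theta and negative data below it.
        z = np.clip(sign * (goodness(h) - theta), -50.0, 50.0)
        dloss_dz = -1.0 / (1.0 + np.exp(z))
        # Chain rule: dz/dgoodness = sign, dgoodness/dh = 2h (zero where the
        # ReLU is inactive), and the pre-activation is x @ W.
        grad += x.T @ (2.0 * h * (sign * dloss_dz)[:, None]) / len(x)
    return W - lr * grad

rng = np.random.default_rng(0)
# Toy stand-ins (assumptions): "positive" data varies mostly in the first 5
# input dimensions, "negative" data mostly in the last 5.
scale_pos = np.array([1.0] * 5 + [0.1] * 5)
scale_neg = scale_pos[::-1]
x_pos = rng.normal(size=(256, 10)) * scale_pos
x_neg = rng.normal(size=(256, 10)) * scale_neg

W = rng.normal(scale=0.1, size=(10, 16))
for _ in range(200):
    W = ff_layer_step(W, x_pos, x_neg)

g_pos = goodness(np.maximum(x_pos @ W, 0.0)).mean()
g_neg = goodness(np.maximum(x_neg @ W, 0.0)).mean()
print(f"mean goodness: positive={g_pos:.2f}, negative={g_neg:.2f}")
```

The update uses only this layer's input and output, so no derivatives are passed between layers. In a multi-layer version, each layer would run the same local step on the output of the layer below.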

