From today's 爱可可AI前沿推介 (Aikeke AI frontier picks)

[CL] Language Models as Agent Models

J Andreas

[MIT CSAIL]

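Summary: This paper examines the view that language models, trained on collections of documents produced by individual agents, can infer information about the internal states of those agents. Recent results in the literature show that, despite their limited training data, language models can infer representations of fine-grained communicative intentions and goals, which allows them to serve as building blocks for systems that communicate and act intentionally.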

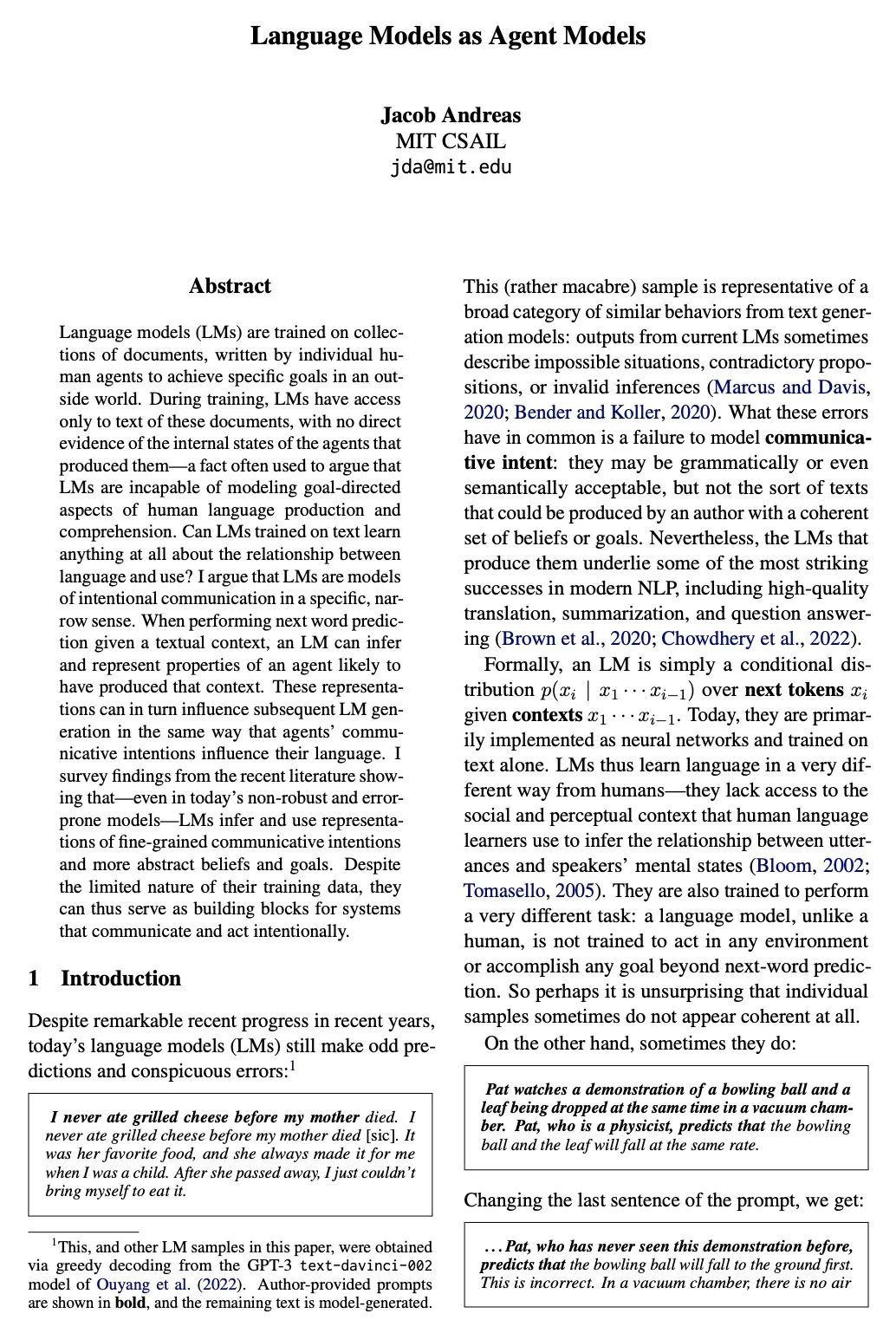

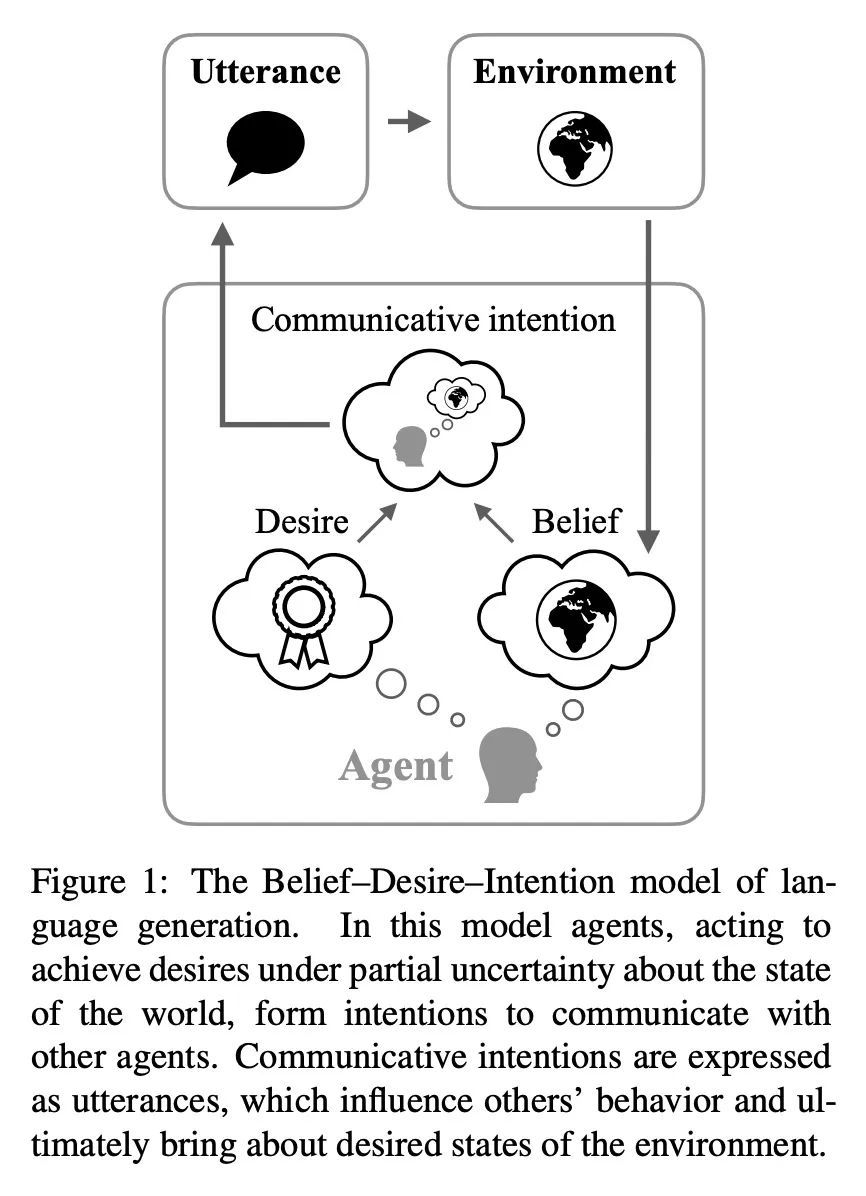

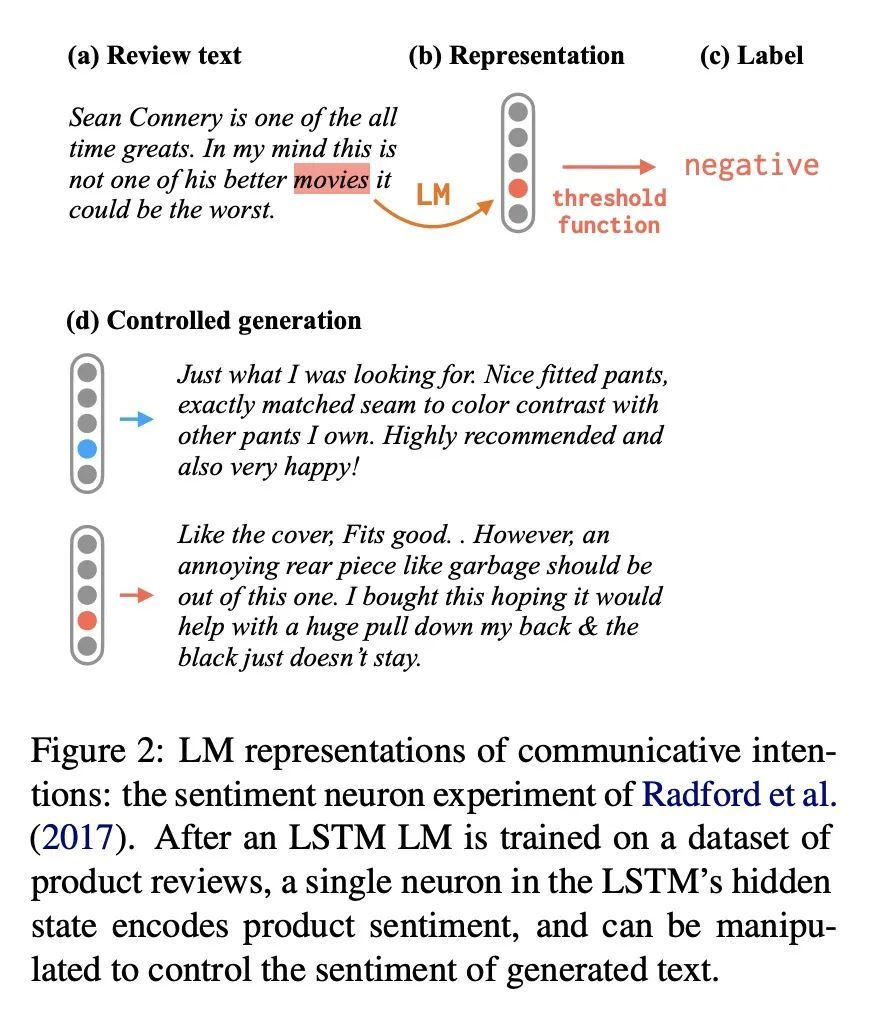

Abstract:

Language models (LMs) are trained on collections of documents, written by individual human agents to achieve specific goals in an outside world. During training, LMs have access only to text of these documents, with no direct evidence of the internal states of the agents that produced them -- a fact often used to argue that LMs are incapable of modeling goal-directed aspects of human language production and comprehension. Can LMs trained on text learn anything at all about the relationship between language and use? I argue that LMs are models of intentional communication in a specific, narrow sense. When performing next word prediction given a textual context, an LM can infer and represent properties of an agent likely to have produced that context. These representations can in turn influence subsequent LM generation in the same way that agents' communicative intentions influence their language. I survey findings from the recent literature showing that -- even in today's non-robust and error-prone models -- LMs infer and use representations of fine-grained communicative intentions and more abstract beliefs and goals. Despite the limited nature of their training data, they can thus serve as building blocks for systems that communicate and act intentionally.

Paper: https://arxiv.org/abs/2212.01681
