[CL] Prompting Is Programming: A Query Language For Large Language Models

L Beurer-Kellner, M Fischer, M Vechev

[ETH Zurich]

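Key points:

1. Proposes Language Model Programming (LMP), a generalization of language model prompting;

2. Proposes a new language and runtime, the Language Model Query Language (LMQL), for efficient language model prompting.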

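Summary:

Large language models have shown excellent performance on a wide range of tasks such as question answering and code generation. At a high level, given an input, a language model can be used to automatically complete a sequence in a statistically likely way. Building on this, users prompt these models with natural-language instructions or examples to carry out various downstream tasks. Advanced prompting methods can even involve interaction between the language model, the user, and external tools such as calculators. However, achieving state-of-the-art performance or adapting a language model to a specific task requires implementing complex task- and model-specific programs, which may still need ad hoc interaction. To address this, the paper proposes Language Model Programming (LMP), which generalizes language model prompting from pure text prompts to an intuitive combination of text prompting and scripting. LMP also allows constraints to be placed on the language model's output. This makes it easy to adapt to many tasks while abstracting away language model internals and providing high-level semantics. To realize LMP, the authors implement LMQL (Language Model Query Language), which uses the constraints and control flow of an LMP prompt to generate an efficient inference procedure that minimizes the number of expensive calls to the underlying language model. LMQL can express a wide range of state-of-the-art prompting methods intuitively, and in particular facilitates interactive flows that are challenging to implement with existing high-level APIs. The evaluation shows that the approach retains or improves accuracy on several downstream tasks while substantially reducing the required computation, or the cost when using pay-to-use APIs (13-85% cost savings).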

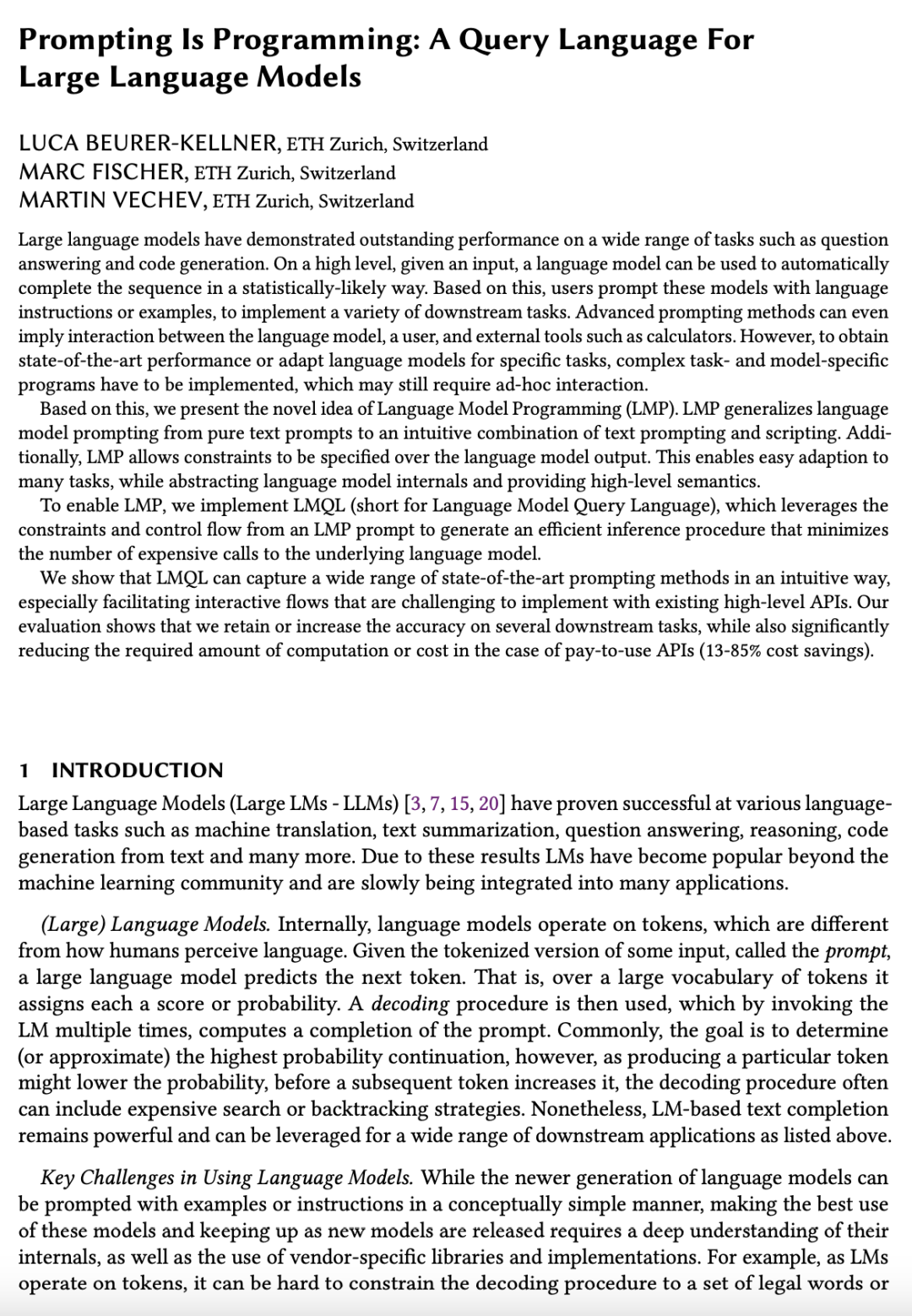

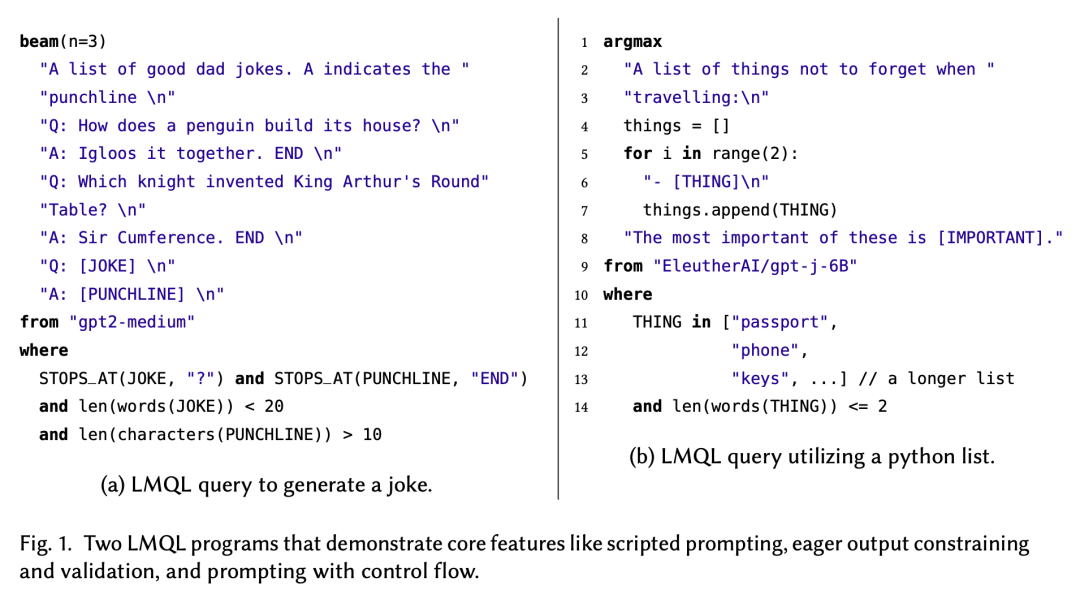

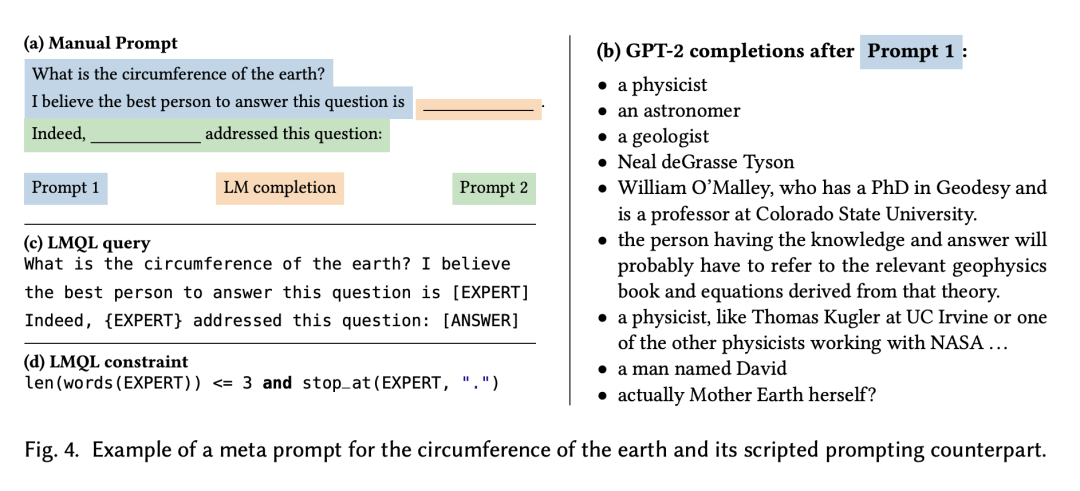

Large language models have demonstrated outstanding performance on a wide range of tasks such as question answering and code generation. On a high level, given an input, a language model can be used to automatically complete the sequence in a statistically-likely way. Based on this, users prompt these models with language instructions or examples, to implement a variety of downstream tasks. Advanced prompting methods can even imply interaction between the language model, a user, and external tools such as calculators. However, to obtain state-of-the-art performance or adapt language models for specific tasks, complex task- and model-specific programs have to be implemented, which may still require ad-hoc interaction. Based on this, we present the novel idea of Language Model Programming (LMP). LMP generalizes language model prompting from pure text prompts to an intuitive combination of text prompting and scripting. Additionally, LMP allows constraints to be specified over the language model output. This enables easy adaption to many tasks, while abstracting language model internals and providing high-level semantics. To enable LMP, we implement LMQL (short for Language Model Query Language), which leverages the constraints and control flow from an LMP prompt to generate an efficient inference procedure that minimizes the number of expensive calls to the underlying language model. We show that LMQL can capture a wide range of state-of-the-art prompting methods in an intuitive way, especially facilitating interactive flows that are challenging to implement with existing high-level APIs. Our evaluation shows that we retain or increase the accuracy on several downstream tasks, while also significantly reducing the required amount of computation or cost in the case of pay-to-use APIs (13-85% cost savings).
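To make the idea of output constraints more concrete, here is a minimal Python sketch. It is not LMQL syntax and not the authors' implementation; the abstract does not describe the mechanism. It only illustrates one common way such constraints can be enforced: masking out tokens that would violate the constraint before choosing the next token, and skipping the model call entirely when only one token is still valid. The vocabulary, the scoring function `toy_scores`, and the helpers `allowed_tokens` and `constrained_decode` are all hypothetical names invented for this example.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical toy vocabulary; a real tokenizer has tens of thousands of entries.
VOCAB: List[str] = ["pos", "neg", "itive", "ative", "maybe", "<eos>"]


def toy_scores(prefix: str) -> Dict[str, float]:
    """Stand-in for one language-model call: returns a score per token.

    The scores are made up and deliberately favour the unwanted token "maybe".
    """
    return {"pos": 1.0, "neg": 2.0, "itive": 0.5,
            "ative": 0.4, "maybe": 3.0, "<eos>": 0.1}


def allowed_tokens(prefix: str, options: List[str]) -> List[str]:
    """Tokens that keep the output a prefix of at least one allowed option."""
    ok = []
    for tok in VOCAB:
        if tok == "<eos>":
            # Stopping is only valid once a complete option has been produced.
            if prefix in options:
                ok.append(tok)
        elif any(opt.startswith(prefix + tok) for opt in options):
            ok.append(tok)
    return ok


def constrained_decode(
    score_fn: Callable[[str], Dict[str, float]],
    options: List[str],
    max_steps: int = 8,
) -> Tuple[str, int]:
    """Greedy decoding restricted to `options`; returns (text, model_calls)."""
    text, calls = "", 0
    for _ in range(max_steps):
        mask = allowed_tokens(text, options)
        if not mask:
            raise ValueError(f"no valid continuation after {text!r}")
        if mask == ["<eos>"]:
            break  # only stopping is valid: no model call needed
        if len(mask) == 1:
            text += mask[0]  # forced token: no model call needed
            continue
        scores = score_fn(text)  # the expensive step in a real system
        calls += 1
        best = max(mask, key=lambda tok: scores[tok])
        if best == "<eos>":
            break
        text += best
    return text, calls


if __name__ == "__main__":
    print(constrained_decode(toy_scores, ["positive", "negative"]))
```

In this toy setup, unconstrained greedy decoding would pick "maybe" first because it has the highest score. With the constraint, only "pos" and "neg" are valid at the first step, and after "neg" is chosen the remaining token is forced, so the mock model is called once rather than once per token. This is in the spirit of the reduction in model calls that the abstract reports, although the savings in the paper come from the authors' own inference procedure.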

Paper link: https://arxiv.org/abs/2212.06094
