From today's 爱可可 AI frontier recommendations

[LG] Feature Dropout: Revisiting the Role of Augmentations in Contrastive Learning

A Tamkin, M Glasgow, X He, N Goodman

[Stanford University]

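Key points:

- Label-destroying augmentations can be useful in the foundation model setting, where the goal is to learn diverse, general-purpose representations for multiple downstream tasks;
- Label-destroying augmentations act as a form of feature dropout: they prevent any single feature from becoming a shortcut feature that suppresses the learning of other features;
- When the goal is to avoid learning one feature at the expense of the others, label-destroying views can benefit contrastive learning.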

Abstract:

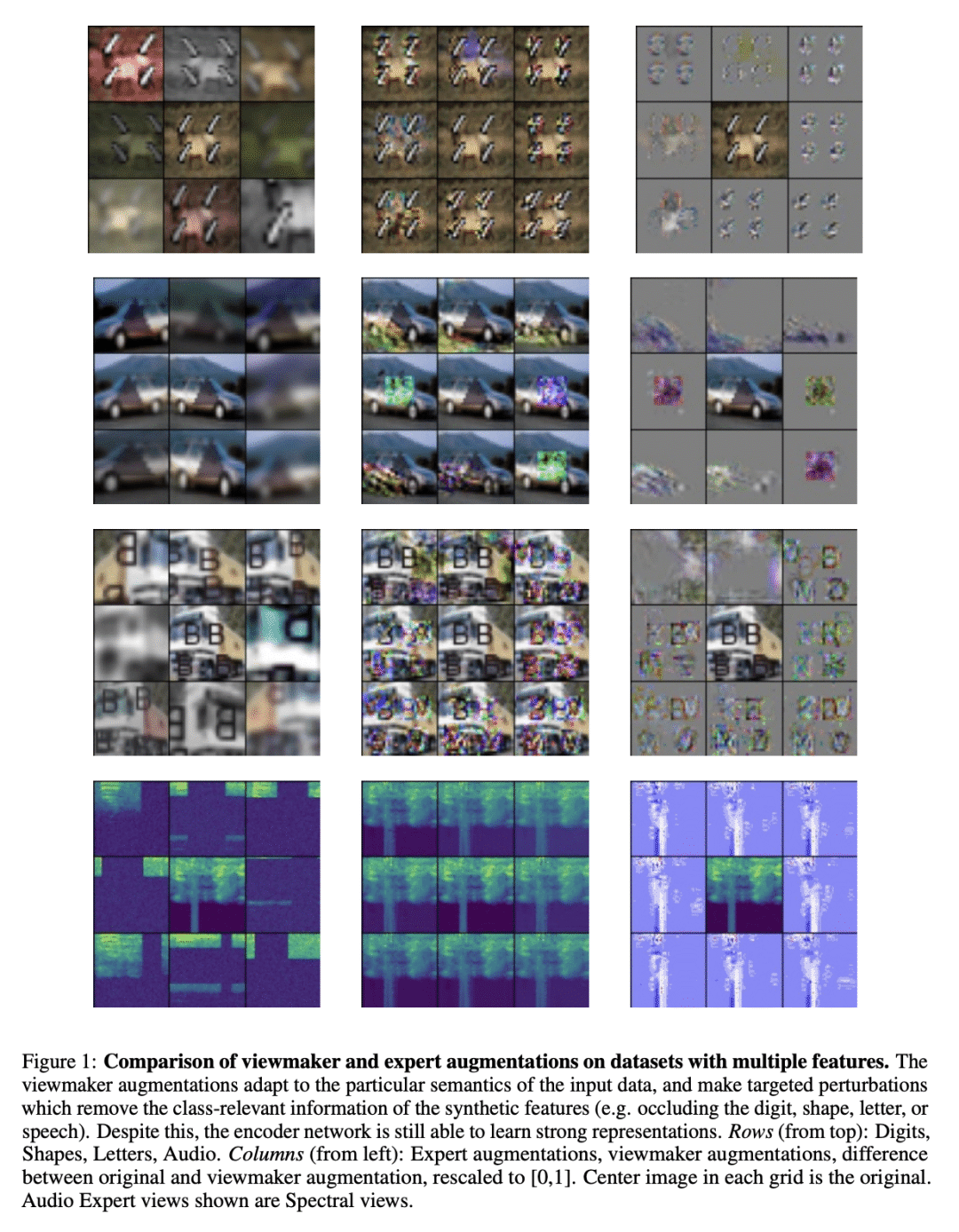

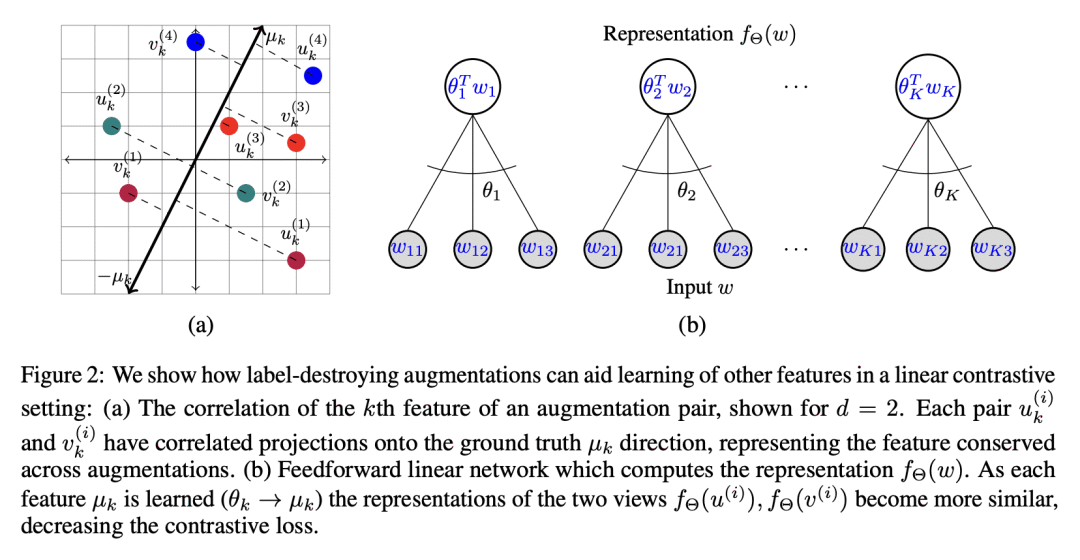

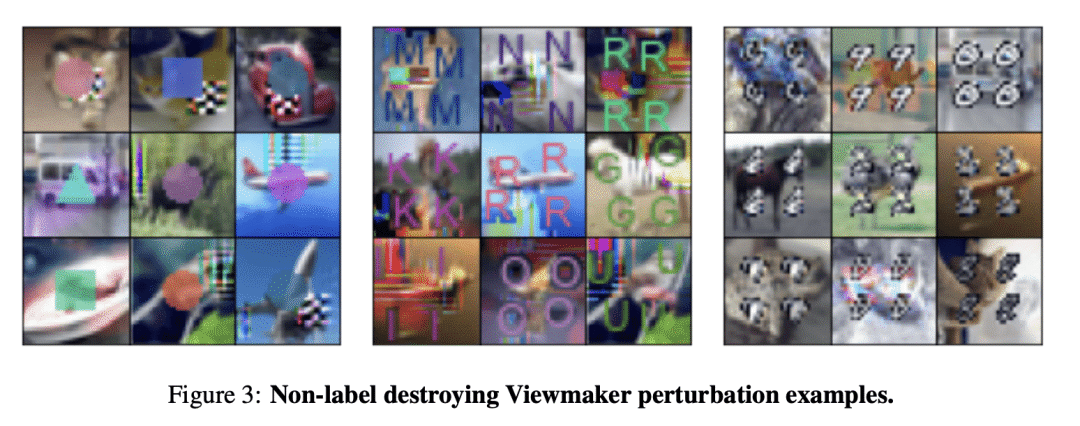

What role do augmentations play in contrastive learning? Recent work suggests that good augmentations are label-preserving with respect to a specific downstream task. We complicate this picture by showing that label-destroying augmentations can be useful in the foundation model setting, where the goal is to learn diverse, general-purpose representations for multiple downstream tasks. We perform contrastive learning experiments on a range of image and audio datasets with multiple downstream tasks (e.g. for digits superimposed on photographs, predicting the class of one vs. the other). We find that Viewmaker Networks, a recently proposed model for learning augmentations for contrastive learning, produce label-destroying augmentations that stochastically destroy features needed for different downstream tasks. These augmentations are interpretable (e.g. altering shapes, digits, or letters added to images) and surprisingly often result in better performance compared to expert-designed augmentations, despite not preserving label information. To support our empirical results, we theoretically analyze a simple contrastive learning setting with a linear model. In this setting, label-destroying augmentations are crucial for preventing one set of features from suppressing the learning of features useful for another downstream task. Our results highlight the need for analyzing the interaction between multiple downstream tasks when trying to explain the success of foundation models.
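To make the "feature dropout" reading of label-destroying augmentations concrete, here is a minimal NumPy sketch. It is not the authors' Viewmaker network or their theoretical setup; it assumes a toy setting where each input is a vector whose columns fall into known feature groups (one group per downstream task). The names `feature_dropout_view`, `info_nce`, `groups`, and `drop_prob` are hypothetical and chosen only for illustration.

```python
import numpy as np


def feature_dropout_view(x, groups, rng, drop_prob=0.5):
    """Return a copy of x (shape [n, d]) in which each feature group is
    zeroed independently per sample with probability drop_prob.

    Illustrative assumption: `groups` lists the column indices that carry
    the information for each downstream task.
    """
    view = x.copy()
    for idx in groups:
        # Per-sample decision: does this view destroy this feature group?
        drop = rng.random(x.shape[0]) < drop_prob
        col_mask = np.zeros(x.shape[1], dtype=bool)
        col_mask[idx] = True
        view[drop[:, None] & col_mask[None, :]] = 0.0
    return view


def info_nce(z1, z2, temperature=0.5, eps=1e-8):
    """Standard InfoNCE loss between two batches of embeddings, where row i
    of z1 and row i of z2 form the positive pair."""
    z1 = z1 / (np.linalg.norm(z1, axis=1, keepdims=True) + eps)
    z2 = z2 / (np.linalg.norm(z2, axis=1, keepdims=True) + eps)
    logits = z1 @ z2.T / temperature
    # Numerically stable row-wise log-softmax.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_prob)))


rng = np.random.default_rng(0)
x = rng.normal(size=(16, 6))
groups = [[0, 1, 2], [3, 4, 5]]  # e.g. "digit" features vs. "photo" features

# Two views of the same batch, each destroying a random subset of groups.
view_a = feature_dropout_view(x, groups, rng)
view_b = feature_dropout_view(x, groups, rng)

# Identity "encoder" for brevity; a real encoder would be trained on this loss.
loss = info_nce(view_a, view_b)
```

Because either group may be missing from a given view, an encoder trained on such pairs cannot match positives using one group alone, which is the intuition behind the abstract's claim that label-destroying augmentations keep one set of features from suppressing another.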

Paper link: https://arxiv.org/abs/2212.08378
