From today's 爱可可 AI frontier picks

[CV] Scalable Diffusion Models with Transformers

W Peebles, S Xie

[UC Berkeley & New York University]


Key points:

-

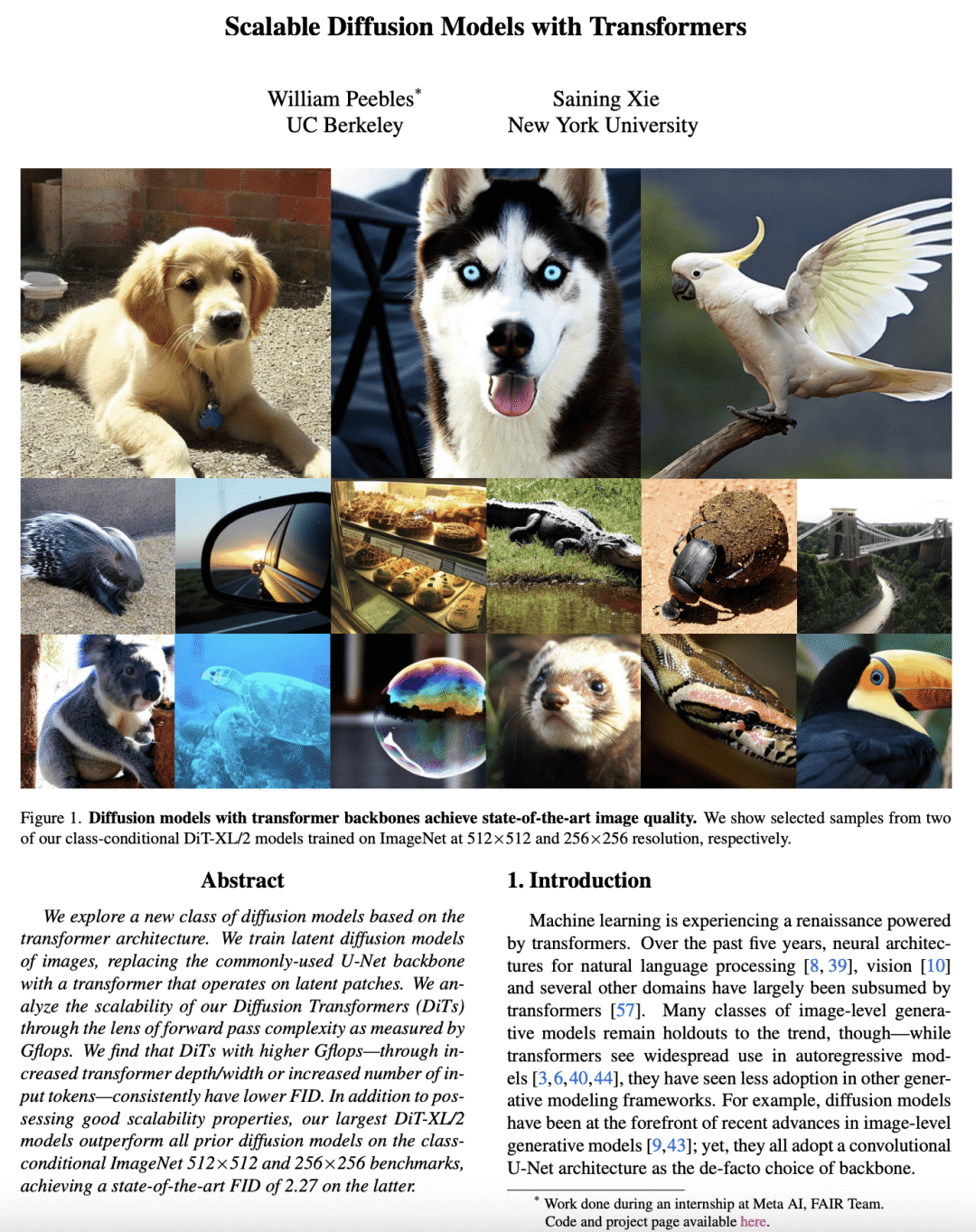

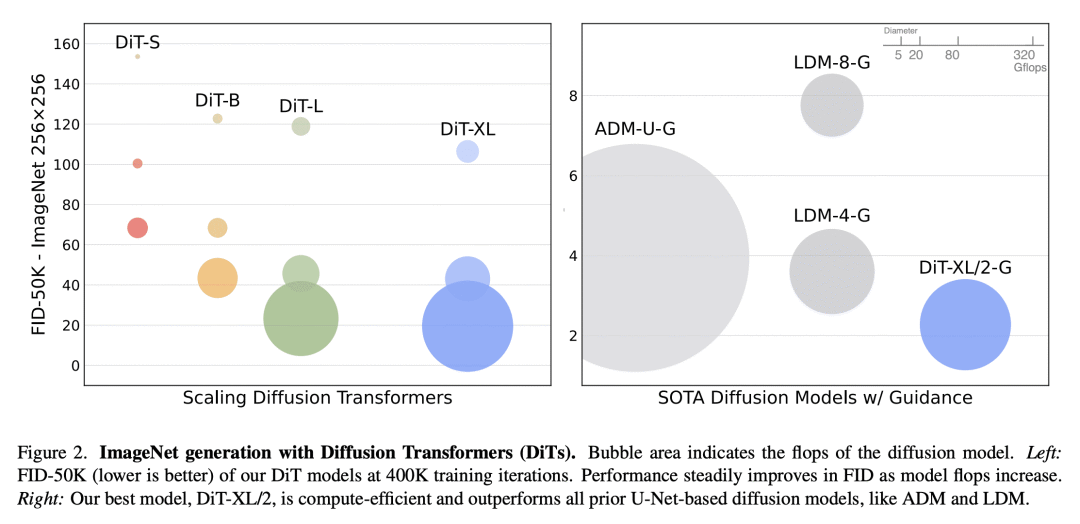

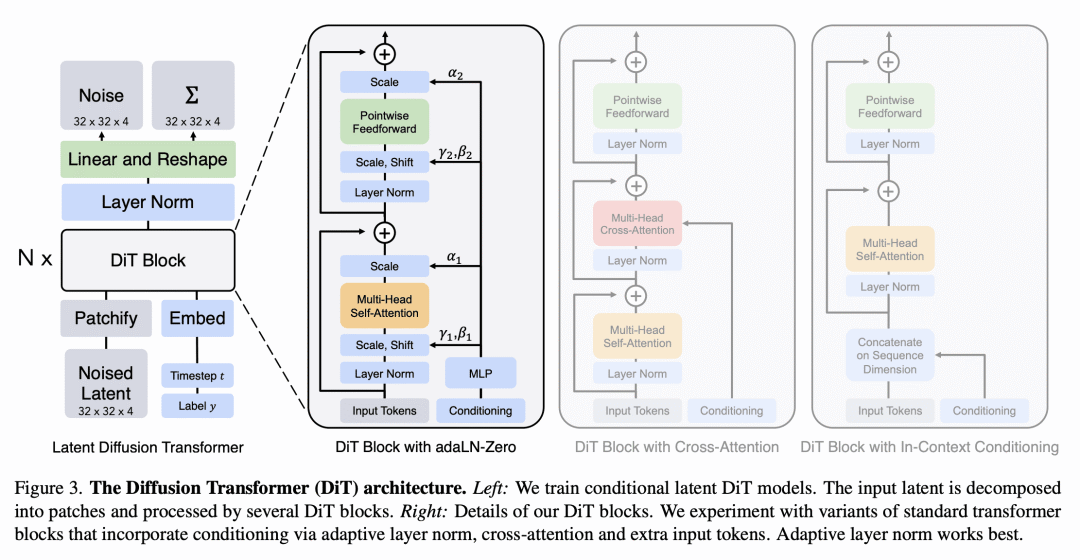

提出Diffusion Transformers(DiT),一种基于Transformer的扩散模型骨干,优于之前的U-Net模型,并具有可扩展的架构; -

DiT可以扩展到更大的模型和Token数量,并用作文本到图像模型的即用型骨干; -

DiT可以从架构统一的趋势中受益,并拥有可扩展性,鲁棒性和效率等特性。

Abstract:

We explore a new class of diffusion models based on the transformer architecture. We train latent diffusion models of images, replacing the commonly-used U-Net backbone with a transformer that operates on latent patches. We analyze the scalability of our Diffusion Transformers (DiTs) through the lens of forward pass complexity as measured by Gflops. We find that DiTs with higher Gflops -- through increased transformer depth/width or increased number of input tokens -- consistently have lower FID. In addition to possessing good scalability properties, our largest DiT-XL/2 models outperform all prior diffusion models on the class-conditional ImageNet 512x512 and 256x256 benchmarks, achieving a state-of-the-art FID of 2.27 on the latter.
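
The abstract names two ways to raise Gflops: a deeper or wider transformer, or more input tokens. The second can be illustrated with a little arithmetic. This is a minimal sketch, not the authors' code. It assumes an autoencoder that downsamples images by a factor of 8 and non-overlapping square patches, with the "/2" in DiT-XL/2 read as the patch size; none of this is stated in the abstract. The names `num_tokens` and `vae_downsample` are hypothetical.

```python
def num_tokens(image_size: int, patch_size: int, vae_downsample: int = 8) -> int:
    """Number of transformer input tokens for a square image.

    Assumption (not from the abstract): the image is first encoded to a
    latent grid of side image_size / vae_downsample, which is then split
    into non-overlapping patch_size x patch_size patches, one token each.
    """
    if image_size % vae_downsample != 0:
        raise ValueError("image_size must be divisible by vae_downsample")
    latent_side = image_size // vae_downsample
    if latent_side % patch_size != 0:
        raise ValueError("latent side must be divisible by patch_size")
    return (latent_side // patch_size) ** 2


# Token counts for the two ImageNet resolutions mentioned in the abstract.
token_counts = {
    (size, p): num_tokens(size, p)
    for size in (256, 512)
    for p in (2, 4, 8)
}

for (size, p), t in token_counts.items():
    print(f"image {size}x{size}, patch size {p}: {t} tokens")
```

Under these assumptions, halving the patch size quadruples the token count. That raises forward-pass compute while leaving the transformer's depth and width unchanged.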

Paper link: https://arxiv.org/abs/2212.09748
