来自今天的爱可可AI前沿推介

[CL] Character-Aware Models Improve Visual Text Rendering

R Liu, D Garrette, C Saharia, W Chan, A Roberts, S Narang, I Blok, R Mical, M Norouzi, N Constant

[Google Research]

用字符感知模型改进视觉文本渲染

要点:

-

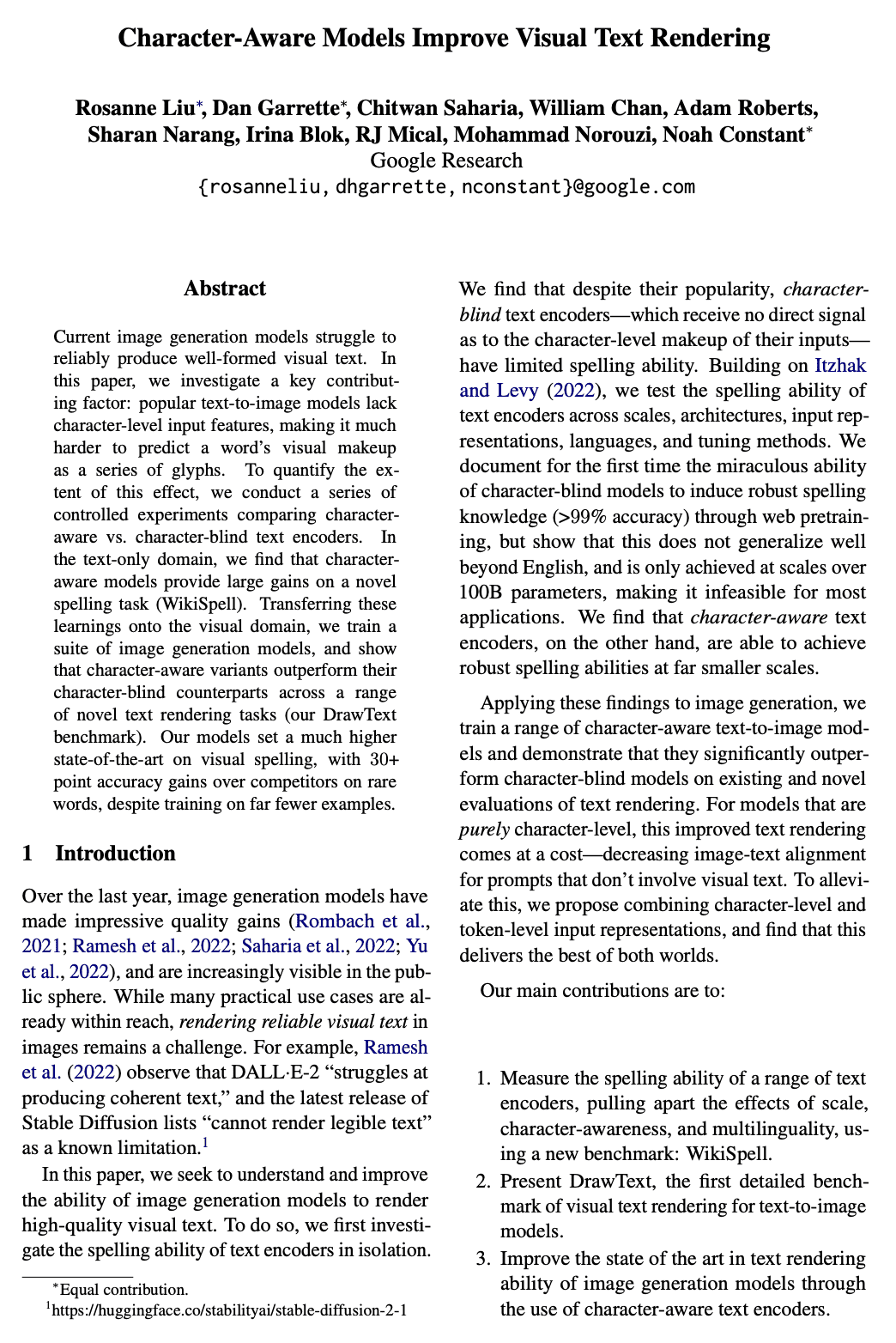

字符感知文本编码器提供了拼写的大幅改善,用于图像生成模型时,可直接转化为改进的视觉文本渲染; -

结合token级和字符级信号的混合模型提供了最佳的双赢方案:在不显著影响整体对齐的情况下,大大改善了视觉文本质量; -

在足够大的尺度下,即使没有直接的字符级视图,也可以通过来自Web预训练的知识来推断出强大的拼写信息 - “拼写奇迹”。

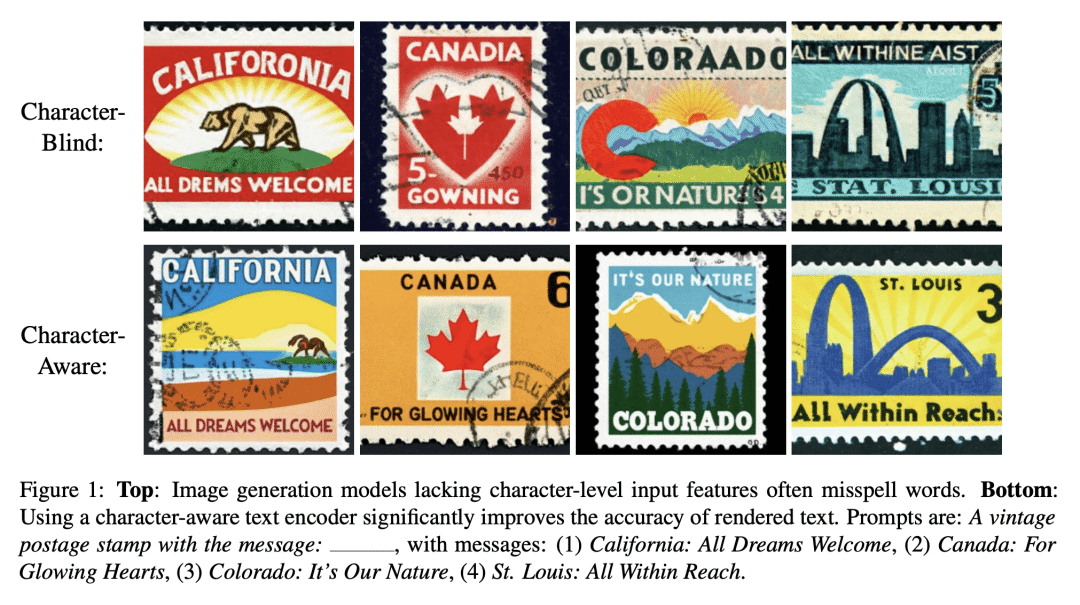

摘要: 目前的图像生成模型很难可靠地生成格式良好的视觉文本。本文研究了一个关键因素:流行的文本到图像模型缺乏字符级输入功能,因此很难将单词的视觉构成预测为一系列字形。为了量化这种效应的程度,本文进行了一系列受控实验,比较了字符感知和字符盲文本编码器。在纯文本领域,发现字符感知模型为新的拼写任务(WikiSpell)提供了巨大的收益。将这些学习迁移到视觉域,训练一套图像生成模型,并表明在一系列新的文本渲染任务(DrawText基准测试)中,字符感知变体的表现优于字符盲变体。所提出的模型在视觉拼写方面达到了更高的最新水平,尽管对少得多的样本进行训练,但在稀有词上比竞争对手提高了30多点。

Current image generation models struggle to reliably produce well-formed visual text. In this paper, we investigate a key contributing factor: popular text-to-image models lack character-level input features, making it much harder to predict a word's visual makeup as a series of glyphs. To quantify the extent of this effect, we conduct a series of controlled experiments comparing character-aware vs. character-blind text encoders. In the text-only domain, we find that character-aware models provide large gains on a novel spelling task (WikiSpell). Transferring these learnings onto the visual domain, we train a suite of image generation models, and show that character-aware variants outperform their character-blind counterparts across a range of novel text rendering tasks (our DrawText benchmark). Our models set a much higher state-of-the-art on visual spelling, with 30+ point accuracy gains over competitors on rare words, despite training on far fewer examples.

论文链接:https://arxiv.org/abs/2212.10562

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢