来自今天的爱可可AI前沿推介

[CV] Do DALL-E and Flamingo Understand Each Other?

H Li, J Gu, R Koner, S Sharifzadeh, V Tresp

[LMU Munich & University of Oxford]

文本到图像和图像到文本的统一改进框架

要点:

-

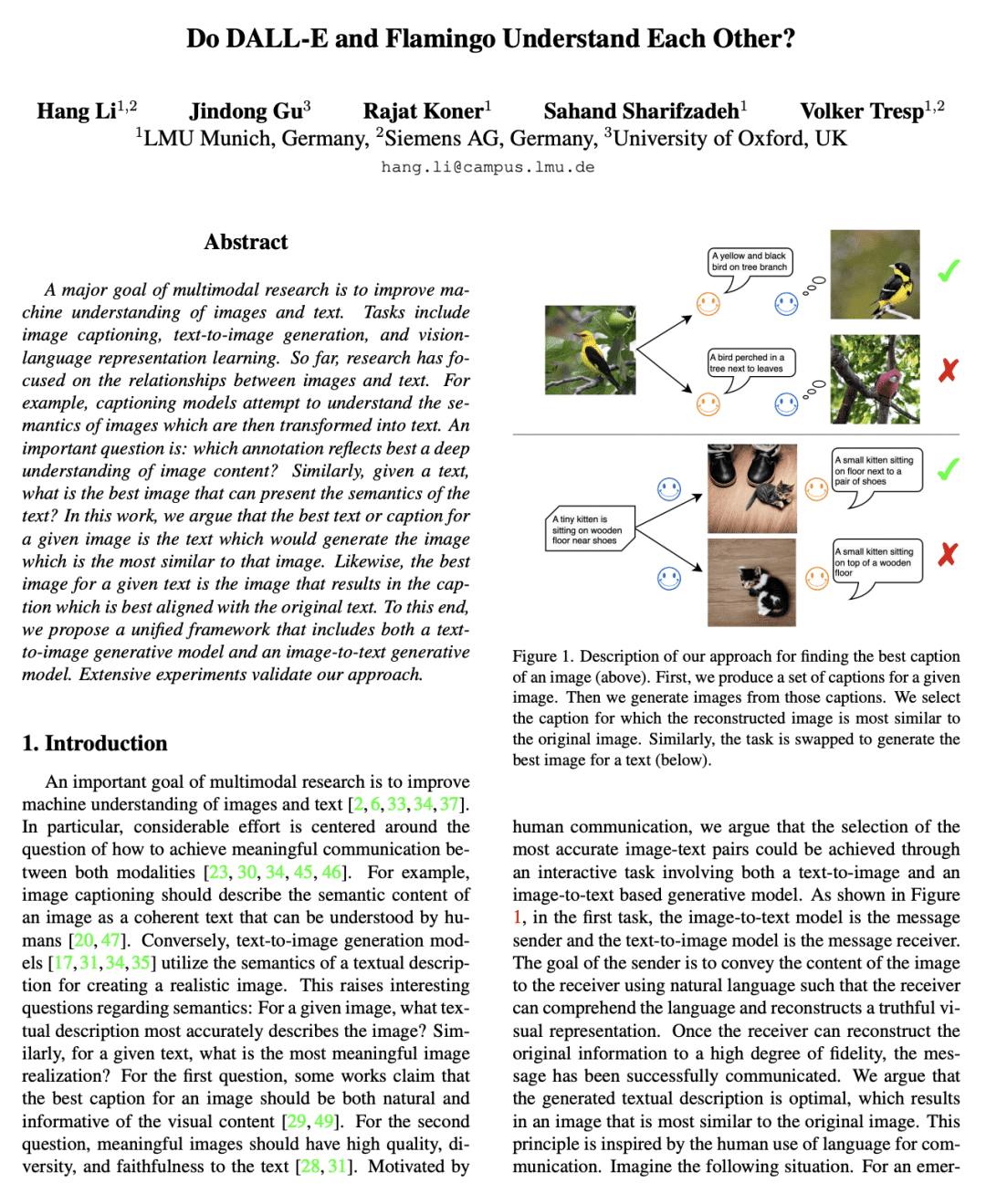

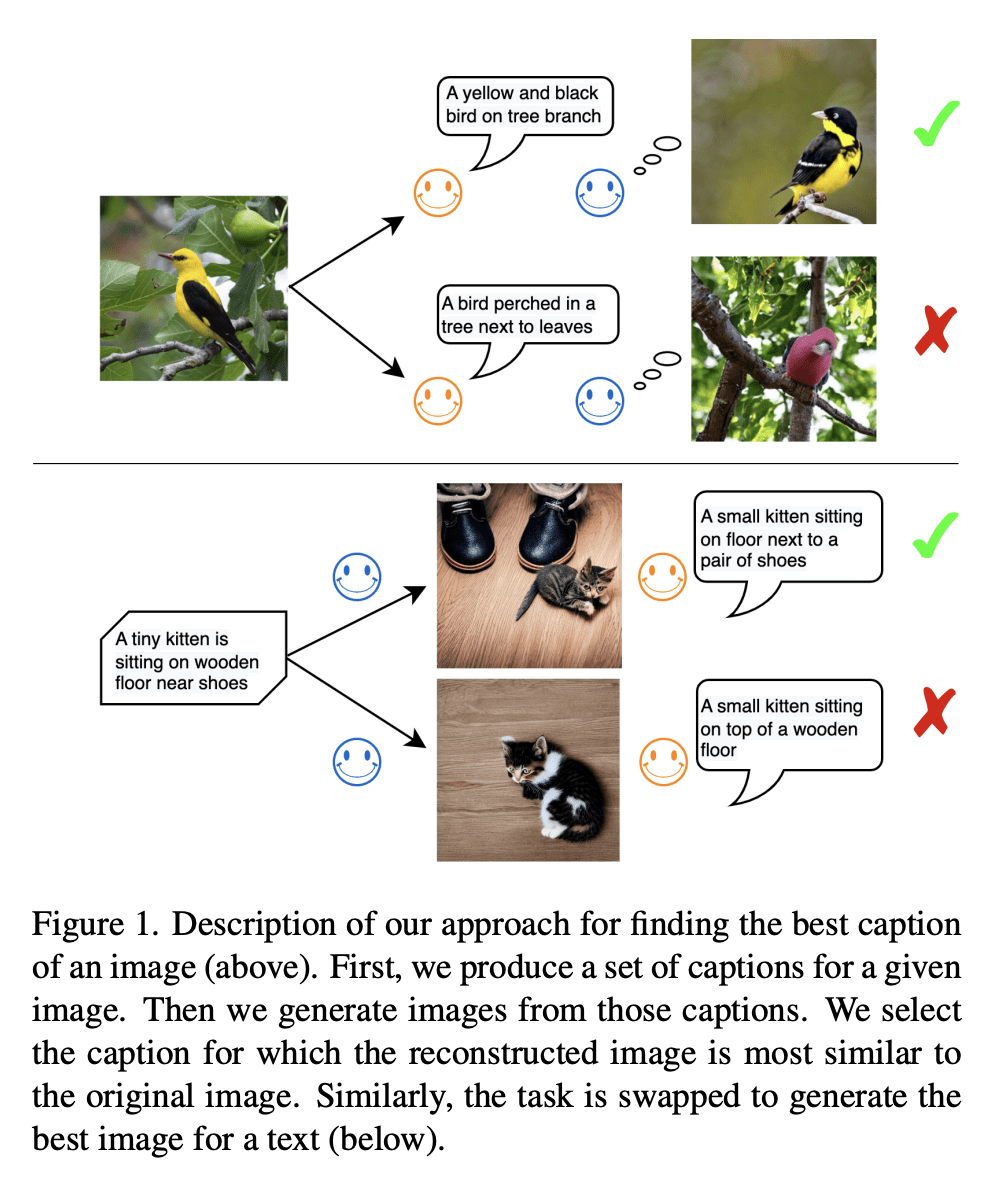

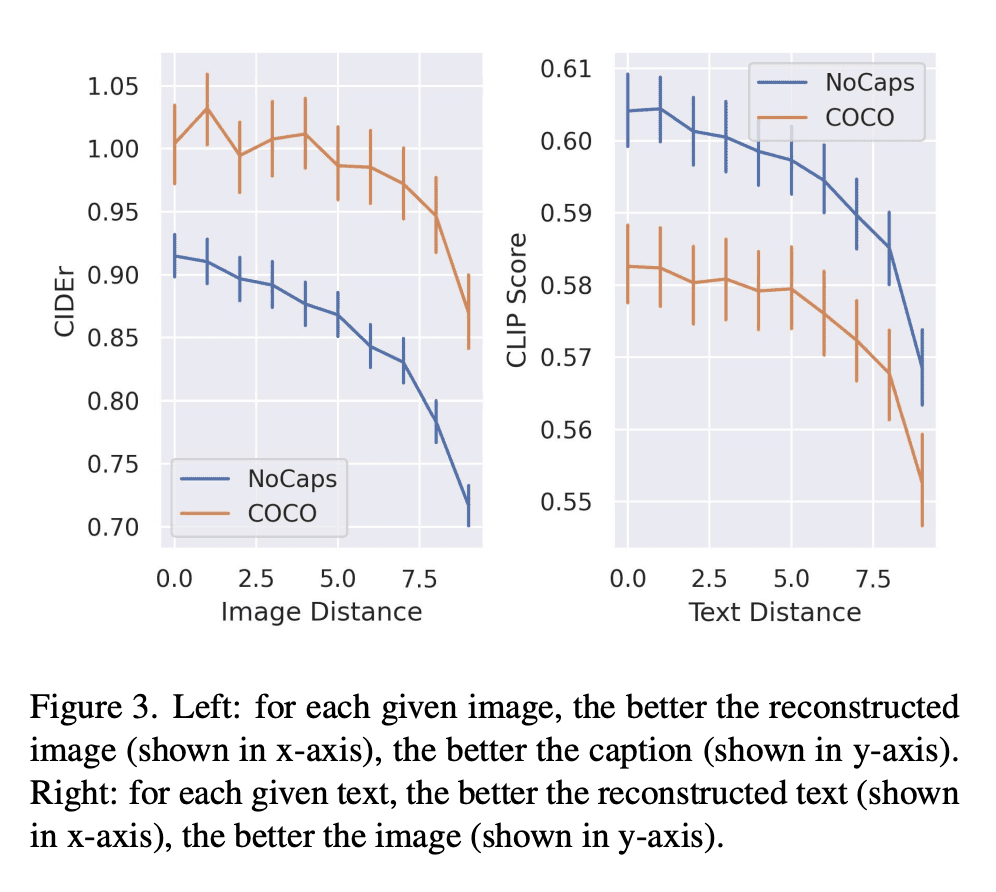

最佳的图像描述是导致最佳重建原图像的描述; -

最佳的图像是导致最佳重建原文本的图像; -

提出一种有效的方法来改进基于文本到图像模型知识的核采样。

摘要: 多模态研究的一个主要目标是提高机器对图像和文本的理解。任务包括图像描述、文本到图像生成和视觉语言表示学习。到目前为止,研究的重点是图像和文本间的关系。例如,图像描述模型试图理解图像的语义,然后将其转换为文本。一个重要的问题是:哪种描述最能反映对图像内容的深刻理解?同样,给定一个文本,可以呈现文本语义的最佳图像是什么?本文认为给定图像的最佳文本或描述是能生成与该图像最相似的图像的文本。同样,给定文本的最佳图像是导致该文本重建的图像,生成文本最好与原始文本对齐。为此,本文提出了一个统一的框架,其中包括文本到图像生成模型和图像到文本生成模型。广泛的实验验证了该方法。

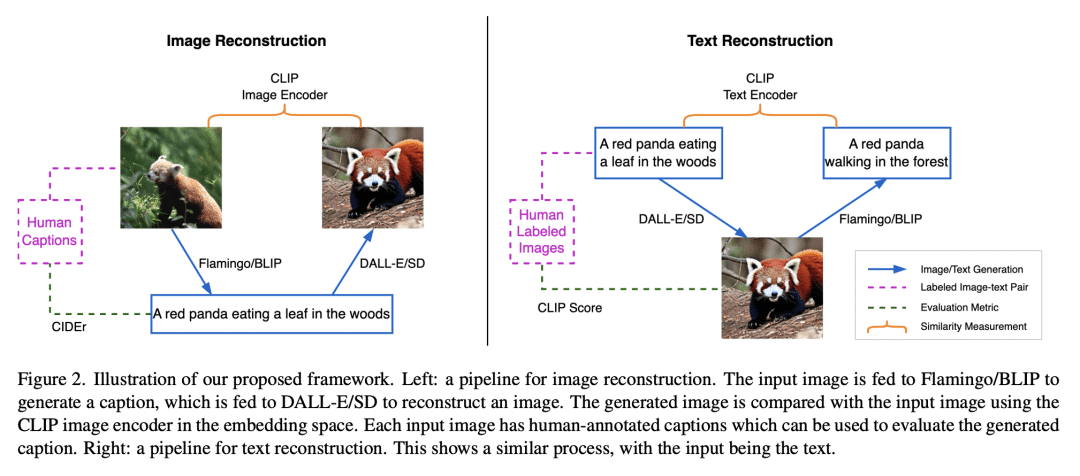

A major goal of multimodal research is to improve machine understanding of images and text. Tasks include image captioning, text-to-image generation, and vision-language representation learning. So far, research has focused on the relationships between images and text. For example, captioning models attempt to understand the semantics of images which are then transformed into text. An important question is: which annotation reflects best a deep understanding of image content? Similarly, given a text, what is the best image that can present the semantics of the text? In this work, we argue that the best text or caption for a given image is the text which would generate the image which is the most similar to that image. Likewise, the best image for a given text is the image that results in the caption which is best aligned with the original text. To this end, we propose a unified framework that includes both a text-to-image generative model and an image-to-text generative model. Extensive experiments validate our approach.

论文链接:https://arxiv.org/abs/2212.12249

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢