From today's 爱可可 AI frontier picks

[CV] Single Image Super-Resolution via a Dual Interactive Implicit Neural Network

Q H. Nguyen, W J. Beksi

[The University of Texas at Arlington]

要点:

-

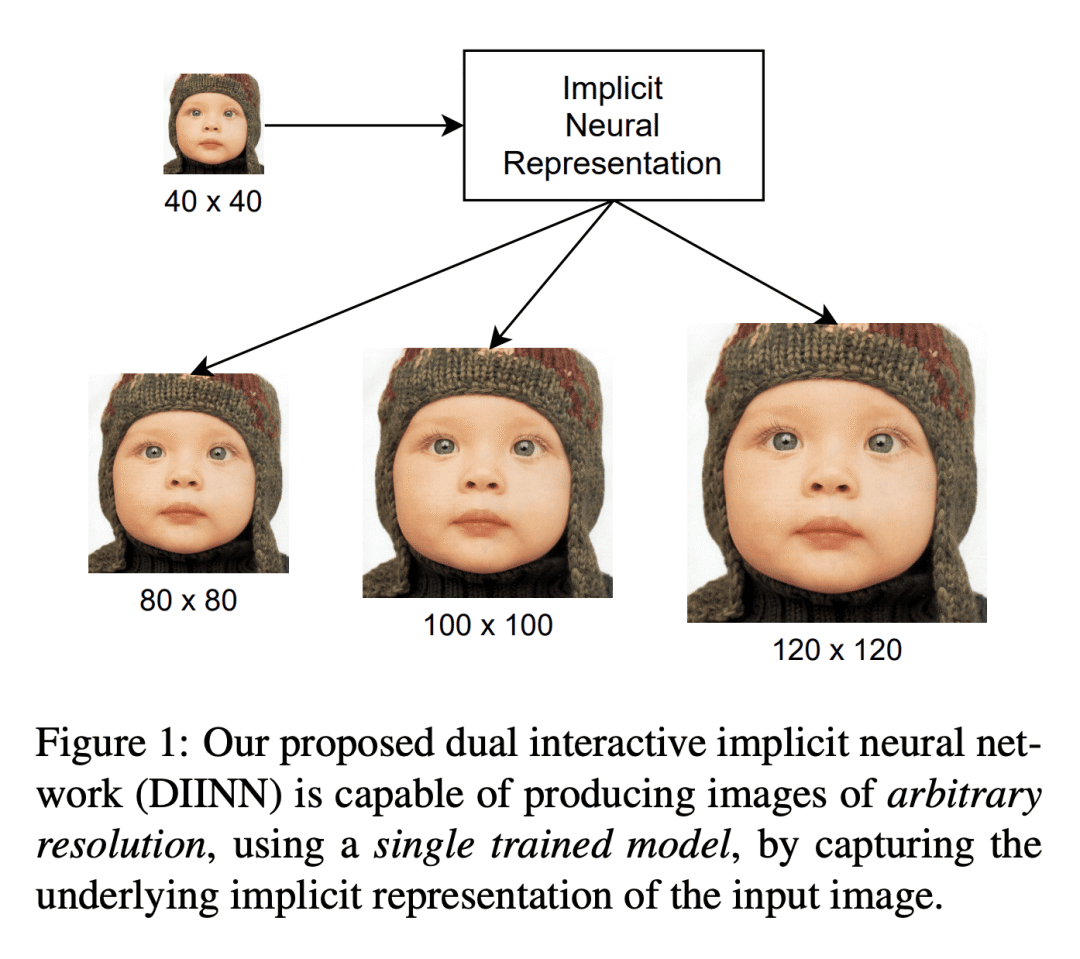

提出一种新的隐神经网络,用于任意比例因子的单图像超分辨率任务; -

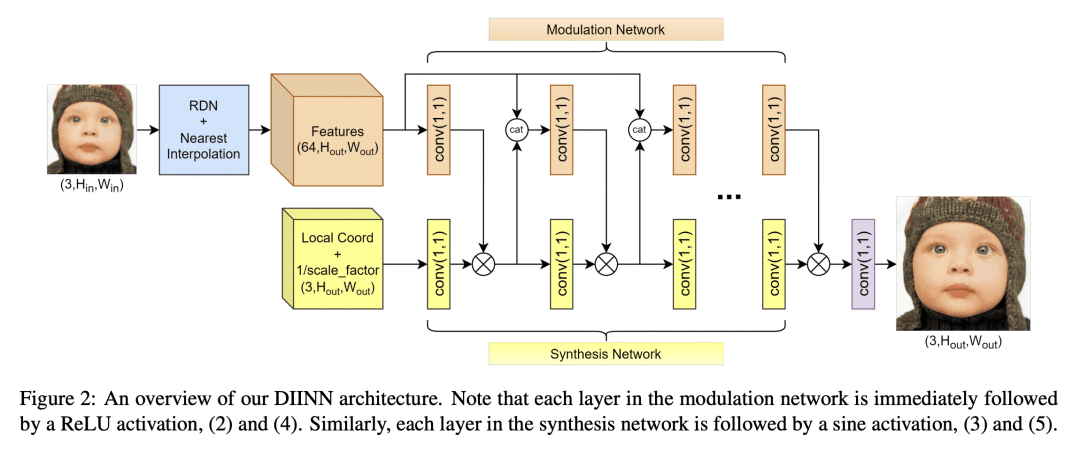

该双交互隐式神经网络分离内容特征和定位特征,同时允许两者之间的交互,从而实现图像的完全隐性表示; -

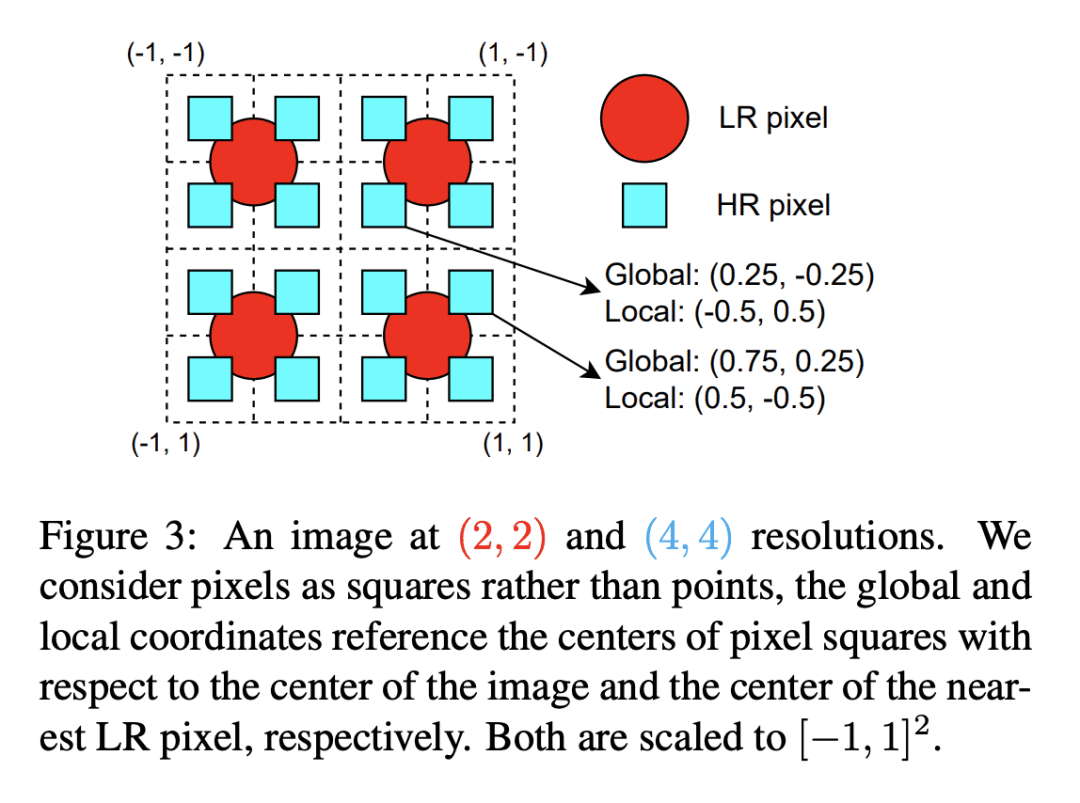

学习了一个具有像素级表示的隐式神经网络,允许对最近的LR像素进行局部连续超分辨率合成。

Abstract:

In this paper, we introduce a novel implicit neural network for the task of single image super-resolution at arbitrary scale factors. To do this, we represent an image as a decoding function that maps locations in the image along with their associated features to their reciprocal pixel attributes. Since the pixel locations are continuous in this representation, our method can refer to any location in an image of varying resolution. To retrieve an image of a particular resolution, we apply a decoding function to a grid of locations each of which refers to the center of a pixel in the output image. In contrast to other techniques, our dual interactive neural network decouples content and positional features. As a result, we obtain a fully implicit representation of the image that solves the super-resolution problem at (real-valued) elective scales using a single model. We demonstrate the efficacy and flexibility of our approach against the state of the art on publicly available benchmark datasets.
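To make the "grid of locations" idea concrete, here is a minimal pure-Python sketch, not the authors' implementation. It assumes coordinates normalized to [0, 1] along each axis, and the names `pixel_centers`, `nearest_lr_queries`, `upsample`, and `toy_decode` are hypothetical. The learned decoding function is replaced by a placeholder that simply copies the nearest LR value, so only the coordinate handling for a real-valued scale is illustrated.

```python
def pixel_centers(n):
    """Centers of n equally sized pixels on the normalized interval [0, 1]."""
    return [(i + 0.5) / n for i in range(n)]


def nearest_lr_queries(lr_size, scale):
    """For each output pixel center along one axis, return
    (index of the nearest LR pixel, offset from that LR pixel's center).

    lr_size: number of LR pixels along the axis.
    scale:   real-valued scale factor, e.g. 2.5 (assumed > 0).
    """
    hr_size = max(1, round(lr_size * scale))
    queries = []
    for x in pixel_centers(hr_size):
        # The LR pixel whose cell contains x is also the one with the nearest center.
        idx = min(int(x * lr_size), lr_size - 1)
        offset = x - (idx + 0.5) / lr_size
        queries.append((idx, offset))
    return queries


def toy_decode(feature, offset):
    """Placeholder for the learned decoder (an assumption of this sketch).

    A real model would map (feature, offset) to pixel attributes;
    this stand-in ignores the offset and returns the feature unchanged.
    """
    return feature


def upsample(lr_image, scale, decode=toy_decode):
    """Apply `decode` at every output pixel center of the rescaled image."""
    rows = nearest_lr_queries(len(lr_image), scale)
    cols = nearest_lr_queries(len(lr_image[0]), scale)
    return [[decode(lr_image[i][j], (dy, dx)) for j, dx in cols]
            for i, dy in rows]


if __name__ == "__main__":
    lr = [[1, 2], [3, 4]]
    for row in upsample(lr, 2.5):
        print(row)
```

Because the output size is derived from the scale only when the grid is built, the same `decode` function serves any scale factor, which is the property the abstract attributes to using a single model.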

Paper link: https://arxiv.org/abs/2210.12593
