From today's 爱可可 AI frontier picks

[LG] LAMBADA: Backward Chaining for Automated Reasoning in Natural Language

S M Kazemi, N Kim, D Bhatia, X Xu, D Ramachandran

[Google Research]

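Key points:

- Large language models have enabled remarkable progress on automated reasoning over knowledge specified as unstructured natural text;
- The classical literature shows that backward reasoning (from the intended conclusion to the set of axioms that support it) is more efficient at proof-finding;
- The authors develop a backward chaining algorithm called LAMBADA, which can be implemented simply through few-shot prompted language model inference.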

Abstract:

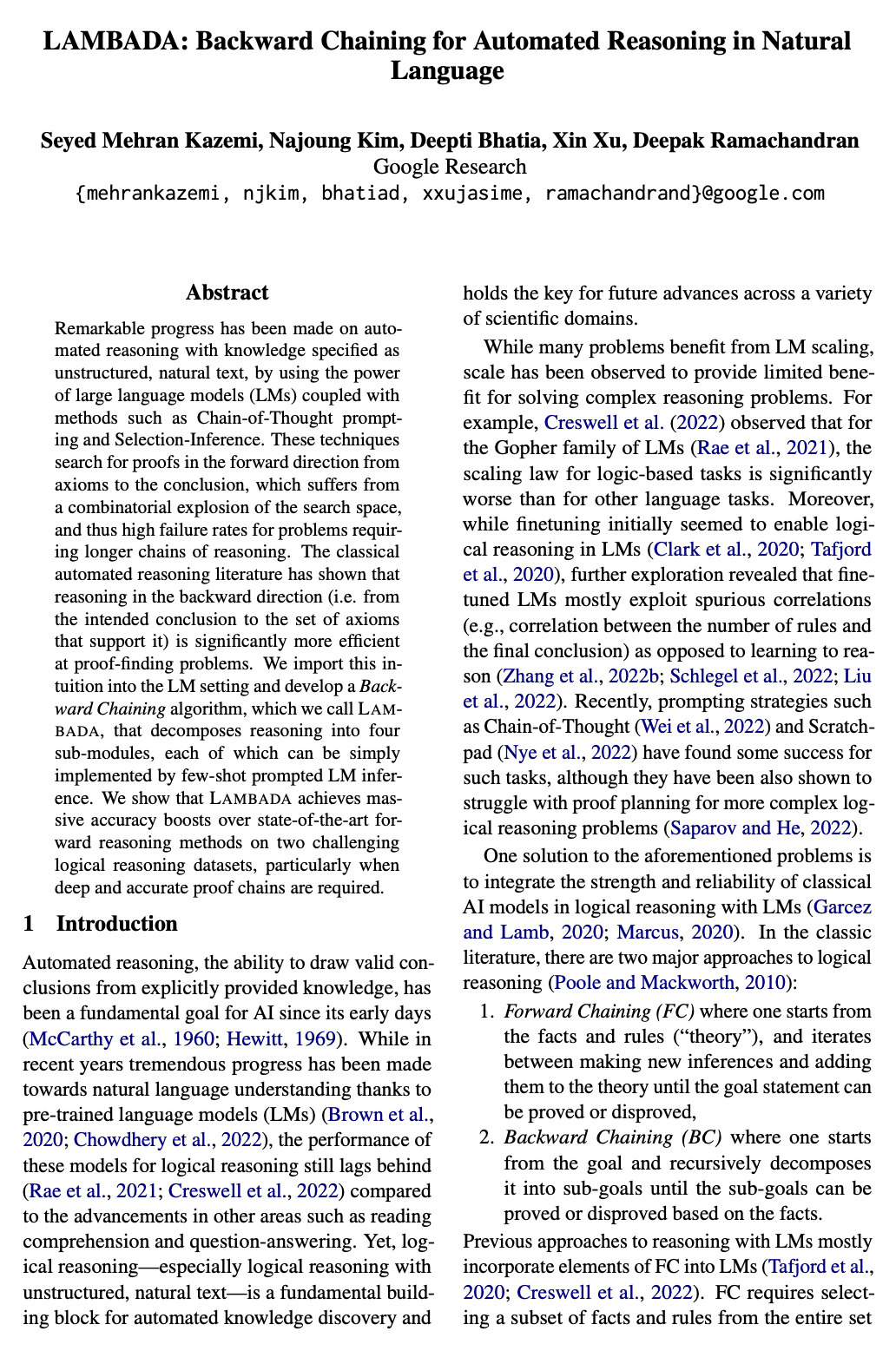

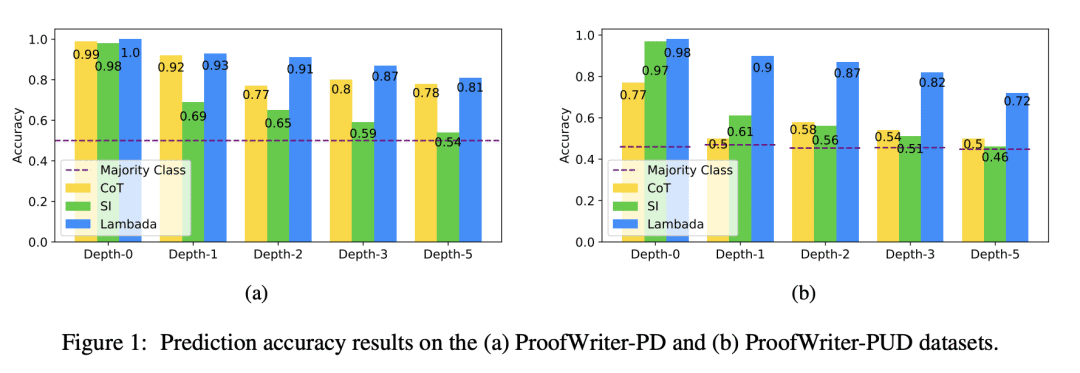

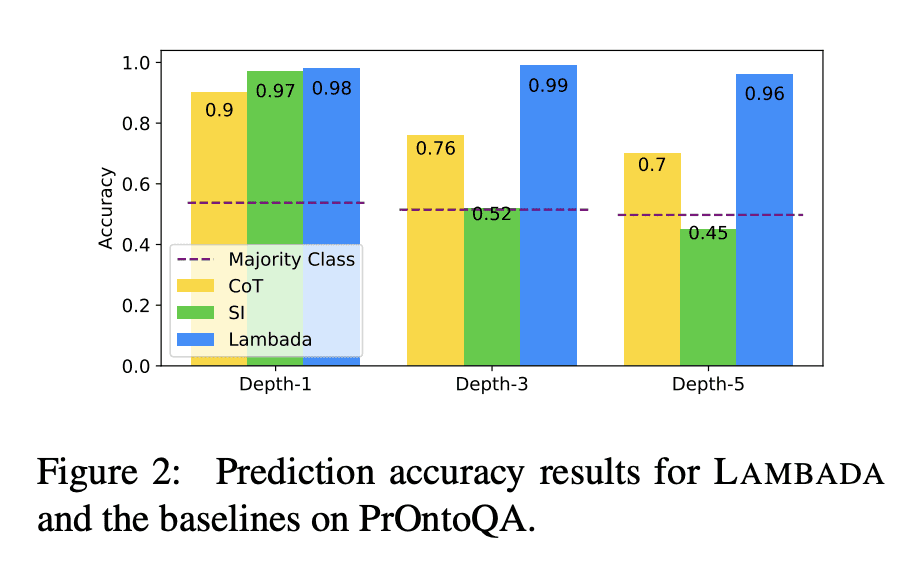

Remarkable progress has been made on automated reasoning with knowledge specified as unstructured, natural text, by using the power of large language models (LMs) coupled with methods such as Chain-of-Thought prompting and Selection-Inference. These techniques search for proofs in the forward direction from axioms to the conclusion, which suffers from a combinatorial explosion of the search space, and thus high failure rates for problems requiring longer chains of reasoning. The classical automated reasoning literature has shown that reasoning in the backward direction (i.e. from the intended conclusion to the set of axioms that support it) is significantly more efficient at proof-finding problems. We import this intuition into the LM setting and develop a Backward Chaining algorithm, which we call LAMBADA, that decomposes reasoning into four sub-modules, each of which can be simply implemented by few-shot prompted LM inference. We show that LAMBADA achieves massive accuracy boosts over state-of-the-art forward reasoning methods on two challenging logical reasoning datasets, particularly when deep and accurate proof chains are required.
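To make the backward direction concrete, here is a minimal symbolic sketch of backward chaining in Python. It is not the authors' implementation: in the paper each sub-module is a few-shot prompted LM call, whereas here each is replaced by a plain Python function over hand-written facts and rules. The four helper names (`fact_check`, `select_rules`, `decompose_goal`, `signs_agree`), the `Literal` and `Rule` types, the `depth` cutoff, and the toy knowledge base are all assumptions made for illustration.

```python
from typing import List, NamedTuple, Optional, Tuple


class Literal(NamedTuple):
    atom: str              # a statement such as "it rains"
    positive: bool = True  # False means the statement is negated


class Rule(NamedTuple):
    premises: Tuple[Literal, ...]  # all premises must hold
    conclusion: Literal            # then the conclusion holds


def fact_check(goal: Literal, facts: List[Literal]) -> Optional[bool]:
    """True/False if a stored fact proves/disproves the goal, else None."""
    for fact in facts:
        if fact.atom == goal.atom:
            return fact.positive == goal.positive
    return None


def select_rules(goal: Literal, rules: List[Rule]) -> List[Rule]:
    """Keep only rules whose conclusion is about the goal's statement."""
    return [r for r in rules if r.conclusion.atom == goal.atom]


def decompose_goal(rule: Rule) -> List[Literal]:
    """Turn a selected rule into the sub-goals that must be proved."""
    return list(rule.premises)


def signs_agree(goal: Literal, rule: Rule) -> bool:
    """Does the rule conclude the goal itself (True) or its negation (False)?"""
    return goal.positive == rule.conclusion.positive


def prove(goal: Literal, facts: List[Literal], rules: List[Rule],
          depth: int = 5) -> Optional[bool]:
    """Return True (proved), False (disproved), or None (unknown)."""
    checked = fact_check(goal, facts)
    if checked is not None:
        return checked
    if depth == 0:  # hypothetical cutoff so cyclic rules cannot recurse forever
        return None
    for rule in select_rules(goal, rules):
        subgoals = decompose_goal(rule)
        if all(prove(s, facts, rules, depth - 1) is True for s in subgoals):
            return signs_agree(goal, rule)
    return None


# Toy knowledge base, invented for this example.
facts = [Literal("it rains")]
rules = [
    Rule((Literal("it rains"),), Literal("ground is wet")),
    Rule((Literal("ground is wet"),), Literal("shoes stay clean", positive=False)),
]

print(prove(Literal("ground is wet"), facts, rules))     # True
print(prove(Literal("shoes stay clean"), facts, rules))  # False
print(prove(Literal("sky is green"), facts, rules))      # None
```

The search only ever visits rules whose conclusion matches the current goal, which is the efficiency argument the abstract makes for reasoning backward: a forward search would have to consider every rule that any known fact could trigger, relevant to the query or not.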

Paper link: https://arxiv.org/abs/2212.13894
