From today's 爱可可 AI frontier picks

[CL] BLOOM+1: Adding Language Support to BLOOM for Zero-Shot Prompting

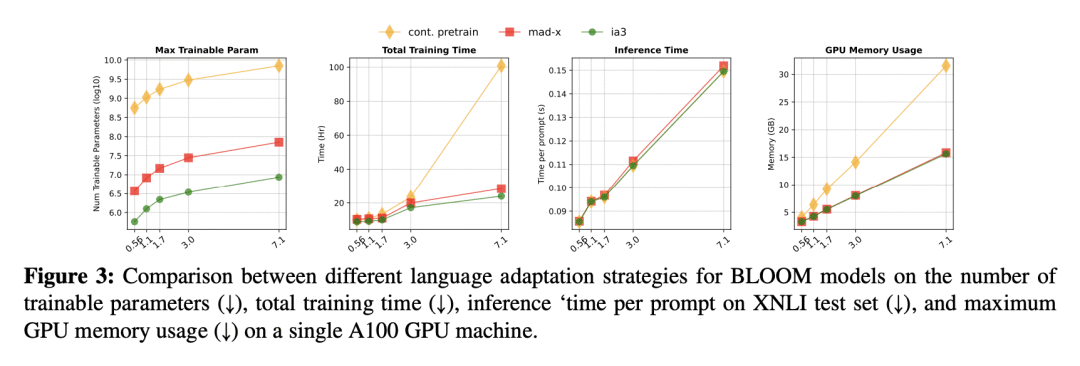

Z Yong, H Schoelkopf, N Muennighoff, A F Aji, D I Adelani...

[Brown University & EleutherAI & Hugging Face]

要点:

-

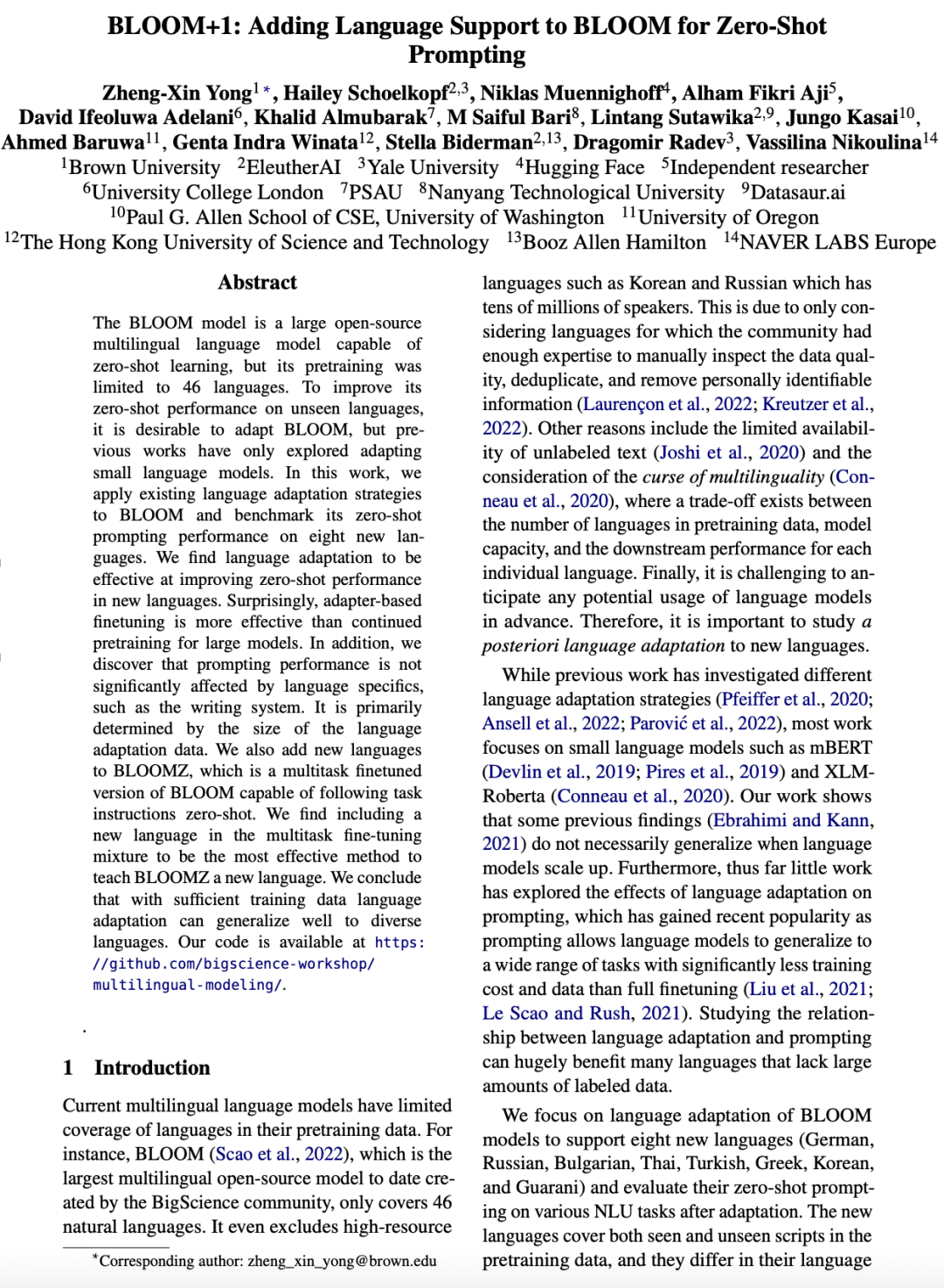

研究语言自适应对零样本提示和指令微调的影响; -

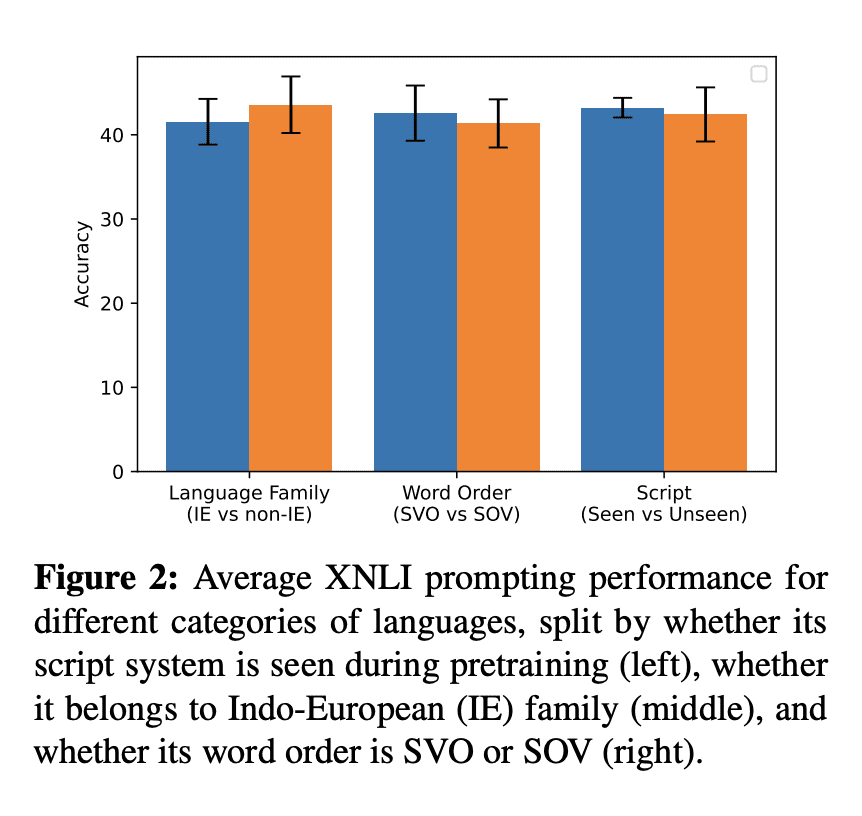

对不同规模的BLOOM模型进行参数高效自适应,并在所需计算量与零样本提示性能之间进行权衡。 -

量化语言自适应数据多少对语言自适应的影响。
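
The compute/performance trade-off in the second point depends largely on how many parameters are actually trained. Below is a rough back-of-the-envelope sketch, assuming one residual bottleneck adapter per Transformer layer (a down-projection from d to d/r and an up-projection back to d, each with a bias). The hidden size 1024, the 24 layers and the ~560M total parameters are illustrative assumptions, roughly at the scale of the smallest BLOOM checkpoint, and not the paper's reported configuration; the function names are hypothetical.

```python
def adapter_params(hidden: int, reduction: int) -> int:
    """Trainable parameters of one bottleneck adapter (assumed design)."""
    bottleneck = hidden // reduction
    down = hidden * bottleneck + bottleneck  # W_down (d x d/r) plus b_down
    up = bottleneck * hidden + hidden        # W_up (d/r x d) plus b_up
    return down + up


def trainable_fraction(hidden: int, layers: int, reduction: int,
                       total_params: int) -> float:
    """Share of all weights that is trained when only one adapter per layer is updated."""
    return layers * adapter_params(hidden, reduction) / total_params


if __name__ == "__main__":
    # Hypothetical configuration: d = 1024, 24 layers, ~560M parameters in total.
    for r in (16, 8, 2):
        n = 24 * adapter_params(1024, r)
        frac = trainable_fraction(1024, 24, r, 560_000_000)
        print(f"reduction={r:2d}: {n:,} adapter params ({frac:.2%} of the model)")
```

Under these assumptions the adapters amount to roughly 0.6% (reduction 16) to 4.5% (reduction 2) of the weights, which is why a smaller reduction factor buys capacity at the cost of more training compute and memory.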

Abstract:

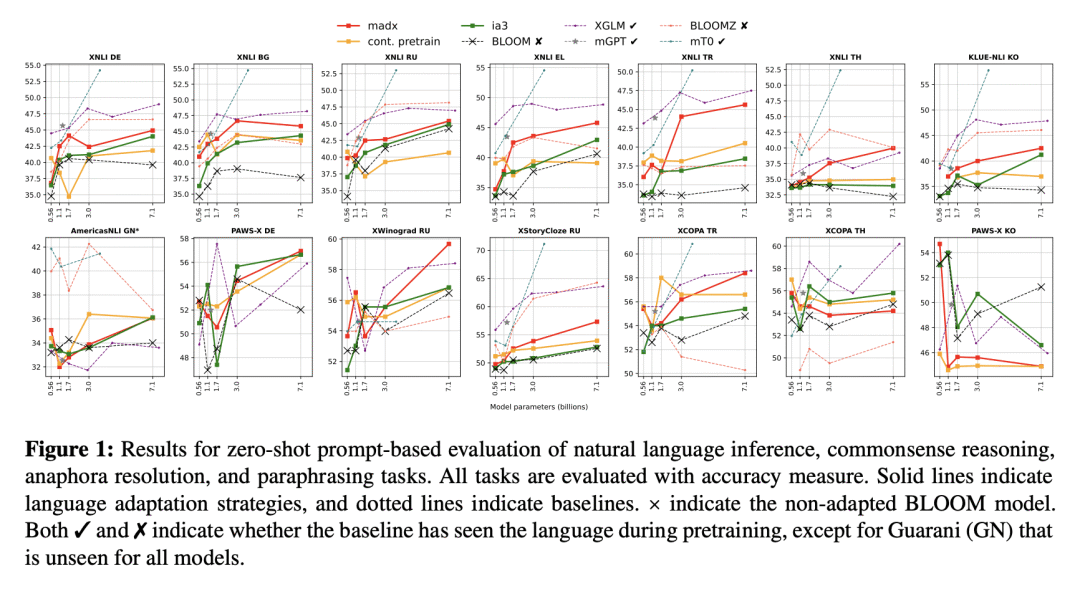

The BLOOM model is a large open-source multilingual language model capable of zero-shot learning, but its pretraining was limited to 46 languages. To improve its zero-shot performance on unseen languages, it is desirable to adapt BLOOM, but previous works have only explored adapting small language models. In this work, we apply existing language adaptation strategies to BLOOM and benchmark its zero-shot prompting performance on eight new languages. We find language adaptation to be effective at improving zero-shot performance in new languages. Surprisingly, adapter-based finetuning is more effective than continued pretraining for large models. In addition, we discover that prompting performance is not significantly affected by language specifics, such as the writing system. It is primarily determined by the size of the language adaptation data. We also add new languages to BLOOMZ, which is a multitask finetuned version of BLOOM capable of following task instructions zero-shot. We find including a new language in the multitask fine-tuning mixture to be the most effective method to teach BLOOMZ a new language. We conclude that with sufficient training data language adaptation can generalize well to diverse languages.
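
The abstract contrasts adapter-based finetuning with continued pretraining. Continued pretraining typically updates all of the model's weights on text in the new language; with adapters, the pretrained weights stay frozen and only small modules inserted into each layer are trained. The sketch below shows one common adapter design, a residual bottleneck adapter, in plain Python with toy dimensions. It is an illustration under assumptions: the function names, the ReLU activation and the zero-initialized up-projection are choices made here, not details taken from the paper.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector, b: Vector) -> Vector:
    """Compute w @ x + b for a row-major weight matrix."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


def bottleneck_adapter(h: Vector, w_down: Matrix, b_down: Vector,
                       w_up: Matrix, b_up: Vector) -> Vector:
    """Residual bottleneck adapter: h + (W_up relu(W_down h + b_down) + b_up).

    Only w_down, b_down, w_up and b_up would be trained; the hidden state h
    coming from the frozen model is carried through by the residual connection.
    """
    z = relu(matvec(w_down, h, b_down))   # project d -> r (r much smaller than d)
    delta = matvec(w_up, z, b_up)         # project r -> d
    return [hi + di for hi, di in zip(h, delta)]


if __name__ == "__main__":
    h = [1.0, -2.0, 0.5, 3.0]             # toy hidden state, d = 4
    w_down = [[0.5, 0.0, 1.0, 0.0],       # bottleneck size r = 2
              [0.0, -0.5, 0.0, 1.0]]
    b_down = [0.0, 0.0]
    # Zero-initialized up-projection: the adapter starts out as the identity map.
    w_up = [[0.0, 0.0] for _ in range(4)]
    b_up = [0.0] * 4
    print(bottleneck_adapter(h, w_down, b_down, w_up, b_up))  # [1.0, -2.0, 0.5, 3.0]
    w_up[0] = [1.0, 0.0]                  # pretend training changed one row
    print(bottleneck_adapter(h, w_down, b_down, w_up, b_up))  # [2.0, -2.0, 0.5, 3.0]
```

Starting from the identity map means inserting the adapters does not disturb the pretrained model before training, and only the small adapter matrices have to be stored per new language.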

Paper link: https://arxiv.org/abs/2212.09535

If any images in this post raise copyright concerns, please contact us promptly and we will remove them.