From today's frontier paper picks by 爱可可

GENIE: Large Scale Pre-training for Text Generation with Diffusion Model

Z Lin, Y Gong, Y Shen, T Wu, Z Fan...

[Microsoft Research Asia & Xiamen University & Tsinghua University & Fudan University]


Key points:

-

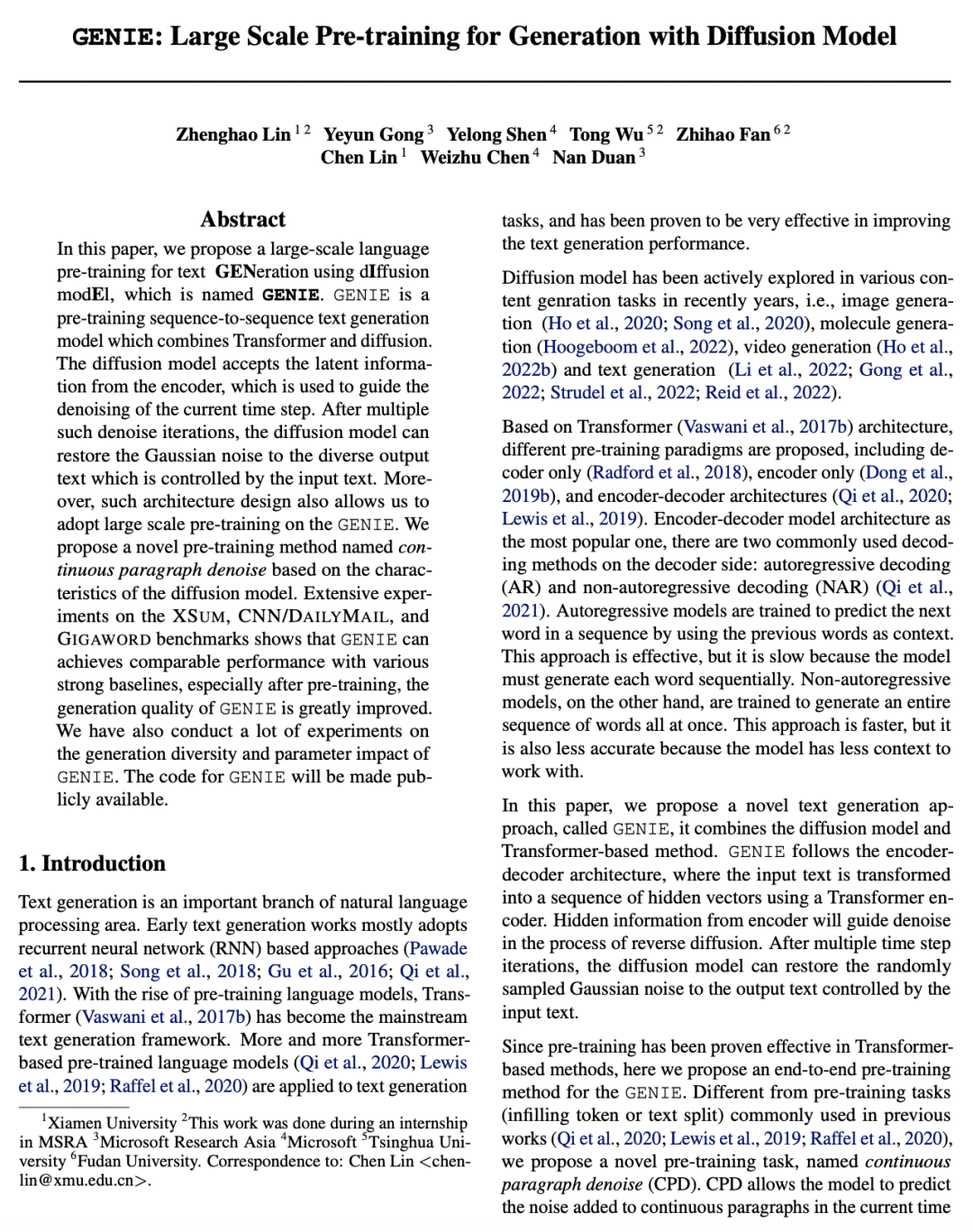

提出一种大规模预训练序列到序列文本生成模型GENIE,结合了Transformer和扩散模型; -

提出一种连续段落去噪的预训练方法,可以在大规模语料上预训练GENIE; -

实验结果表明,GENIE能生成高质量、多样化的文本,证明了对GENIE的大规模预训练的有效性。
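The key points name a new pre-training objective, continuous paragraph denoise, without describing how it works. The following is a minimal sketch of how one training pair for such an objective might be built. It assumes (our reading, not something this summary states) that a contiguous run of sentences is cut out of a document, replaced by a mask token in the encoder input, and used as the target that the diffusion decoder learns to reconstruct from noise. `make_cpd_pair`, `MASK` and `span_ratio` are hypothetical names.

```python
import random
from typing import List, Optional, Tuple

MASK = "<mask>"  # hypothetical placeholder token, not GENIE's actual vocabulary

def make_cpd_pair(sentences: List[str], span_ratio: float = 0.2,
                  rng: Optional[random.Random] = None) -> Tuple[str, str]:
    """Build one (source, target) pair by removing a contiguous span of sentences.

    source: the document with the span replaced by a single MASK token (encoder input).
    target: the removed span (what the diffusion decoder would learn to denoise).
    """
    if not sentences:
        raise ValueError("document has no sentences")
    rng = rng or random.Random()
    n = len(sentences)
    span = min(n, max(1, round(n * span_ratio)))  # at least one sentence, at most all
    start = rng.randrange(0, n - span + 1)        # any start that keeps the span in range
    target = sentences[start:start + span]
    source = sentences[:start] + [MASK] + sentences[start + span:]
    return " ".join(source), " ".join(target)

doc = ["A.", "B.", "C.", "D.", "E."]
src, tgt = make_cpd_pair(doc, span_ratio=0.4, rng=random.Random(0))
print(src, "|", tgt)
```

Putting the mask back with the target restores the original document. This is what lets such pairs be generated from unlabeled text at scale.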

Abstract:

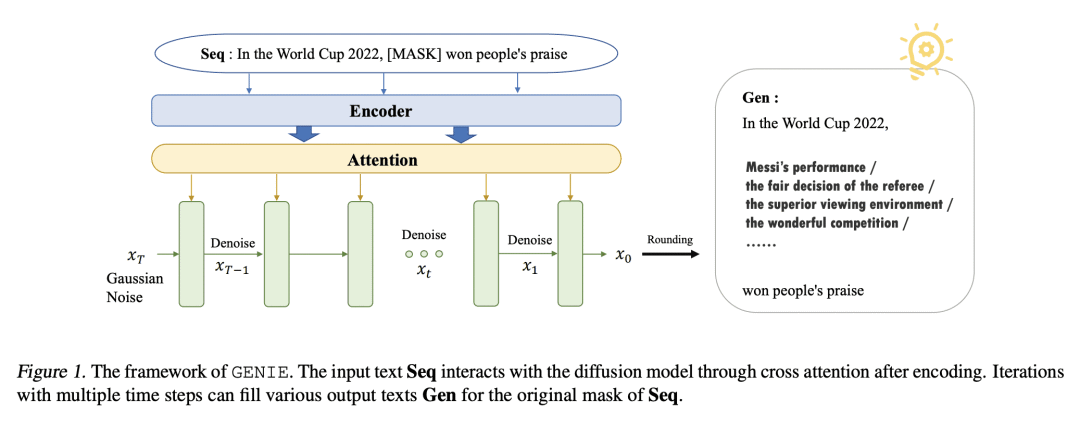

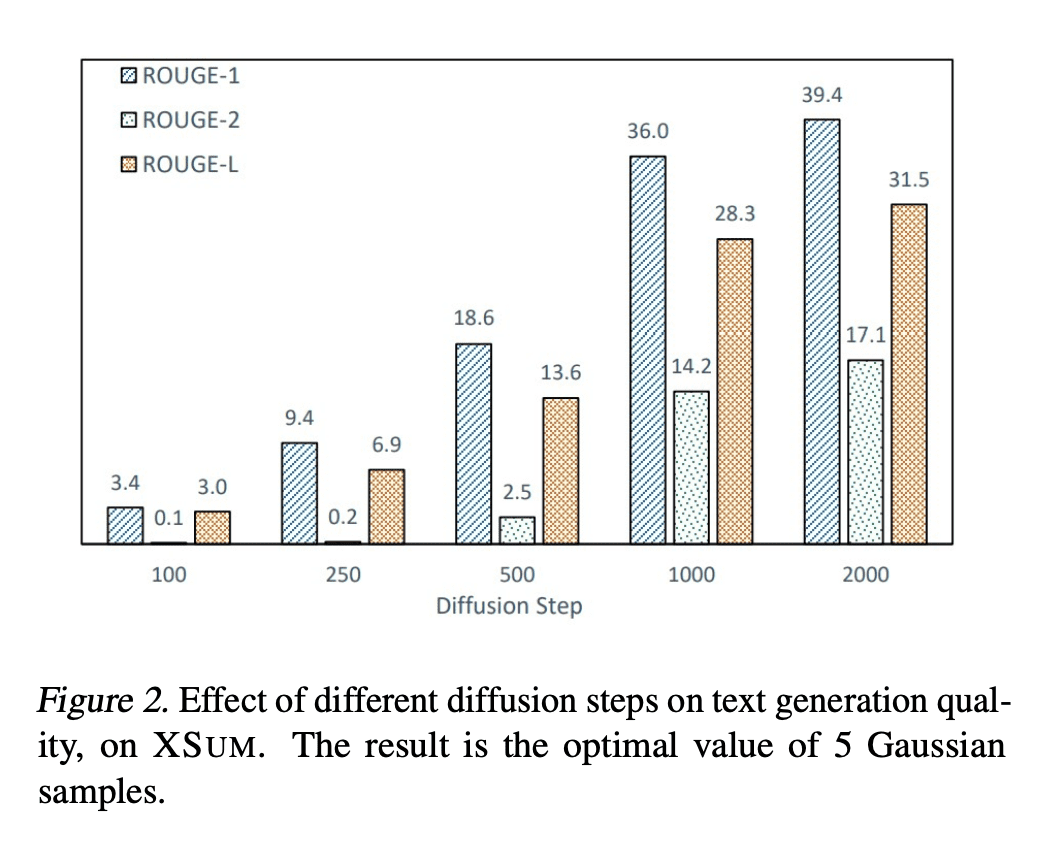


In this paper, we propose GENIE, a large-scale language pre-training approach for text GENeration using a dIffusion modEl. GENIE is a pre-trained sequence-to-sequence text generation model that combines a Transformer with a diffusion model. The diffusion model receives latent information from the encoder, which guides the denoising at the current time step. After multiple such denoising iterations, the diffusion model recovers diverse output text, controlled by the input text, from Gaussian noise. This architecture also allows us to apply large-scale pre-training to GENIE. Based on the characteristics of the diffusion model, we propose a novel pre-training method named continuous paragraph denoise. Extensive experiments on the XSum, CNN/DailyMail, and Gigaword benchmarks show that GENIE achieves performance comparable to various strong baselines; in particular, pre-training greatly improves GENIE's generation quality. We have also conducted extensive experiments on the generation diversity of GENIE and the impact of its parameters. The code for GENIE will be made publicly available.
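The abstract describes generation as repeated denoising: start from Gaussian noise and, at each time step, let the decoder use the encoder's latent information to remove some of the noise. Below is a minimal plain-Python sketch of that loop. Its assumptions are ours, not the paper's:

- a linear beta schedule;
- a denoiser that predicts the clean embeddings x0 (as in other continuous text-diffusion models), plugged into the standard DDPM posterior;
- a final rounding of each position to its nearest token embedding.

`denoise` is a hypothetical stand-in for GENIE's Transformer decoder. In the toy usage it is an oracle that simply returns the conditioning.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

Vec = List[float]
Seq = List[Vec]  # one embedding vector per output position

def linear_betas(T: int, lo: float = 1e-4, hi: float = 0.02) -> List[float]:
    """Assumed linear noise schedule beta_1..beta_T (illustrative, not GENIE's)."""
    return [lo + (hi - lo) * i / max(T - 1, 1) for i in range(T)]

def q_sample(x0: Seq, abar: float, rng: random.Random) -> Seq:
    """Forward (training-time) corruption: x_t = sqrt(abar)*x0 + sqrt(1-abar)*eps."""
    a, s = math.sqrt(abar), math.sqrt(1.0 - abar)
    return [[a * v + s * rng.gauss(0.0, 1.0) for v in row] for row in x0]

def reverse_diffusion(shape: Tuple[int, int], cond: Seq,
                      denoise: Callable[[Seq, int, Seq], Seq],
                      T: int = 50, seed: int = 0) -> Seq:
    """Iteratively denoise Gaussian noise, guided by `cond` at every step.

    `denoise(x_t, t, cond)` predicts x0; each step samples x_{t-1} from the
    DDPM posterior q(x_{t-1} | x_t, x0_hat).
    """
    rng = random.Random(seed)
    betas = linear_betas(T)
    alphas = [1.0 - b for b in betas]
    abars, prod = [], 1.0
    for a in alphas:
        prod *= a
        abars.append(prod)
    n, d = shape
    x = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]  # x_T ~ N(0, I)
    for t in reversed(range(T)):
        x0_hat = denoise(x, t, cond)
        abar_prev = abars[t - 1] if t > 0 else 1.0
        denom = 1.0 - abars[t]
        c0 = math.sqrt(abar_prev) * betas[t] / denom            # weight on predicted x0
        ct = math.sqrt(alphas[t]) * (1.0 - abar_prev) / denom   # weight on current x_t
        sigma = math.sqrt(betas[t] * (1.0 - abar_prev) / denom)  # 0 at the last step
        x = [[c0 * p + ct * q + sigma * rng.gauss(0.0, 1.0)
              for p, q in zip(prow, xrow)]
             for prow, xrow in zip(x0_hat, x)]
    return x

def round_to_tokens(x: Seq, emb: Dict[str, Vec]) -> List[str]:
    """Map each position to the vocabulary item with the nearest embedding (L2)."""
    def dist(u: Vec, v: Vec) -> float:
        return sum((a - b) ** 2 for a, b in zip(u, v))
    return [min(emb, key=lambda tok: dist(row, emb[tok])) for row in x]

# Toy usage: a 4-word vocabulary with random 8-d embeddings.
vocab_rng = random.Random(1)
emb = {w: [vocab_rng.gauss(0.0, 1.0) for _ in range(8)] for w in ["the", "cat", "sat", "down"]}
cond = [emb[w] for w in ["the", "cat", "sat"]]  # stands in for encoder states
oracle = lambda x_t, t, c: c  # toy denoiser: always predicts x0 = cond
result = round_to_tokens(reverse_diffusion((3, 8), cond, oracle), emb)
print(result)  # ['the', 'cat', 'sat']
```

With a trained model, `denoise` would be the decoder attending to the encoder outputs, and `q_sample` would produce its noisy training inputs. Different seeds give different starting noise, which is one plausible source of the output diversity the abstract reports.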

https://arxiv.org/abs/2212.11685
