From today's 爱可可 AI frontier picks

[CV] NaQ: Leveraging Narrations as Queries to Supervise Episodic Memory

S K Ramakrishnan, Z Al-Halah, K Grauman

[UT Austin]

Key points:

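- Proposes Narrations-as-Queries (NaQ), a data augmentation method for training video query localization models;
- NaQ substantially improves multiple top models on the Ego4D NLQ benchmark (even doubling their accuracy);
- On the Ego4D NLQ challenge, NaQ outperforms all previous challenge winners;
- NaQ shows unique properties, such as gains on long-tail object queries and the ability to perform zero-shot and few-shot NLQ.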

Abstract:

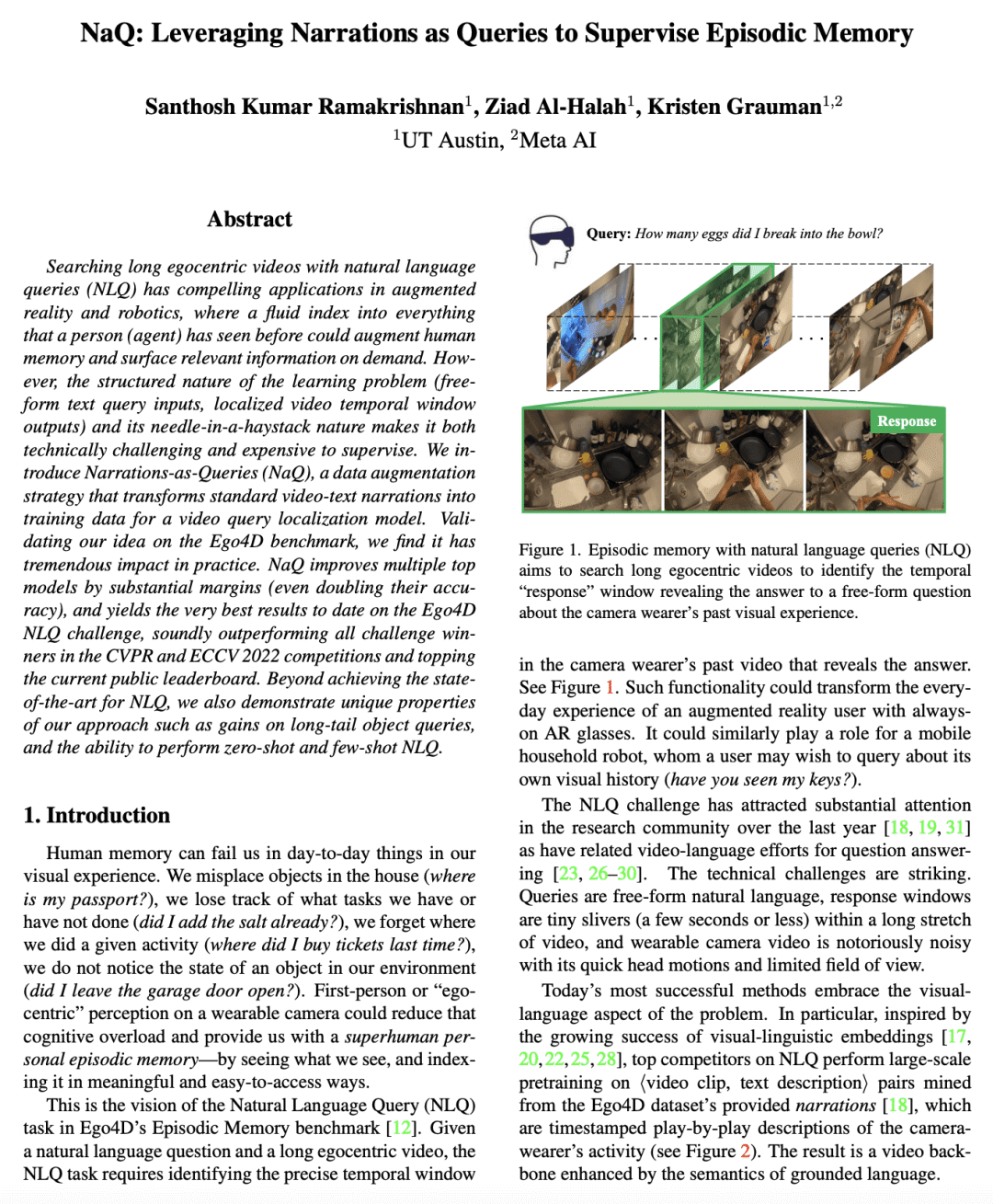

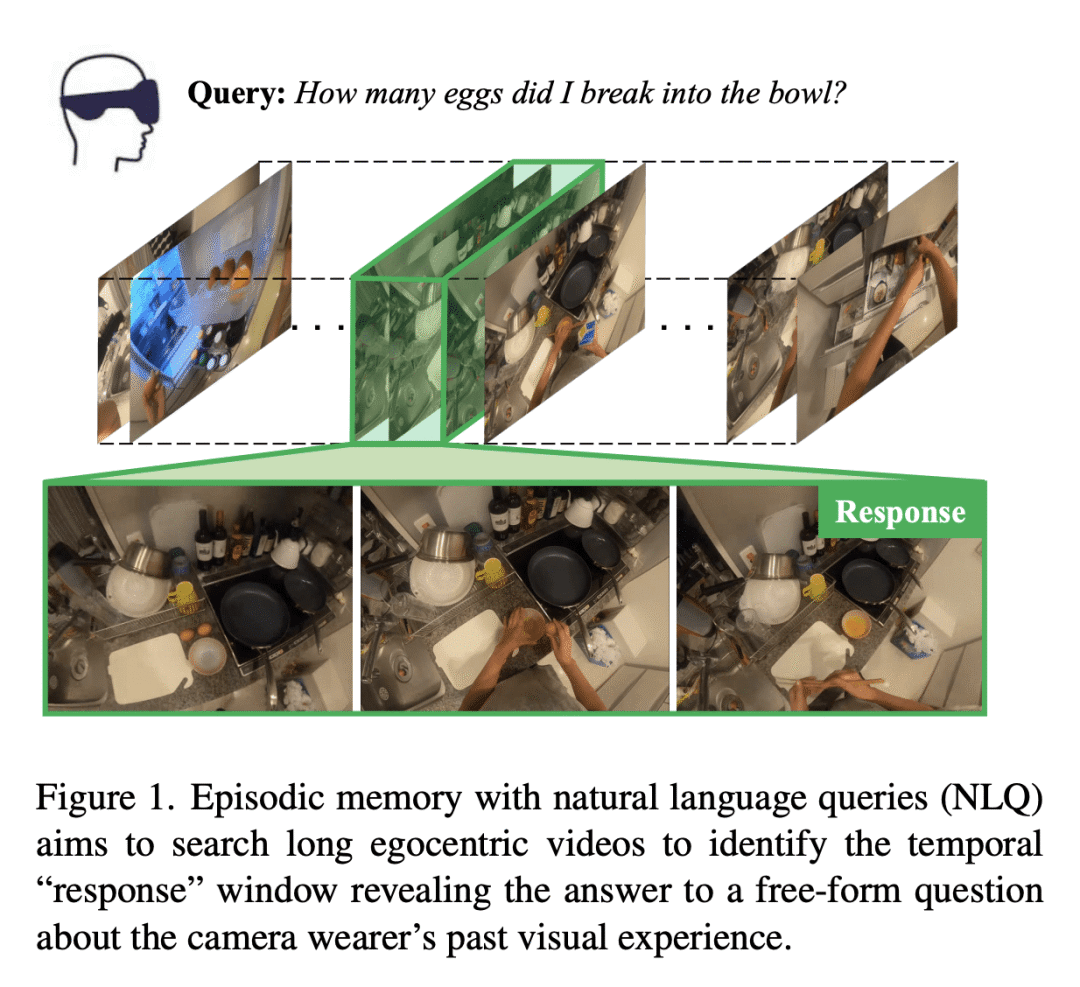

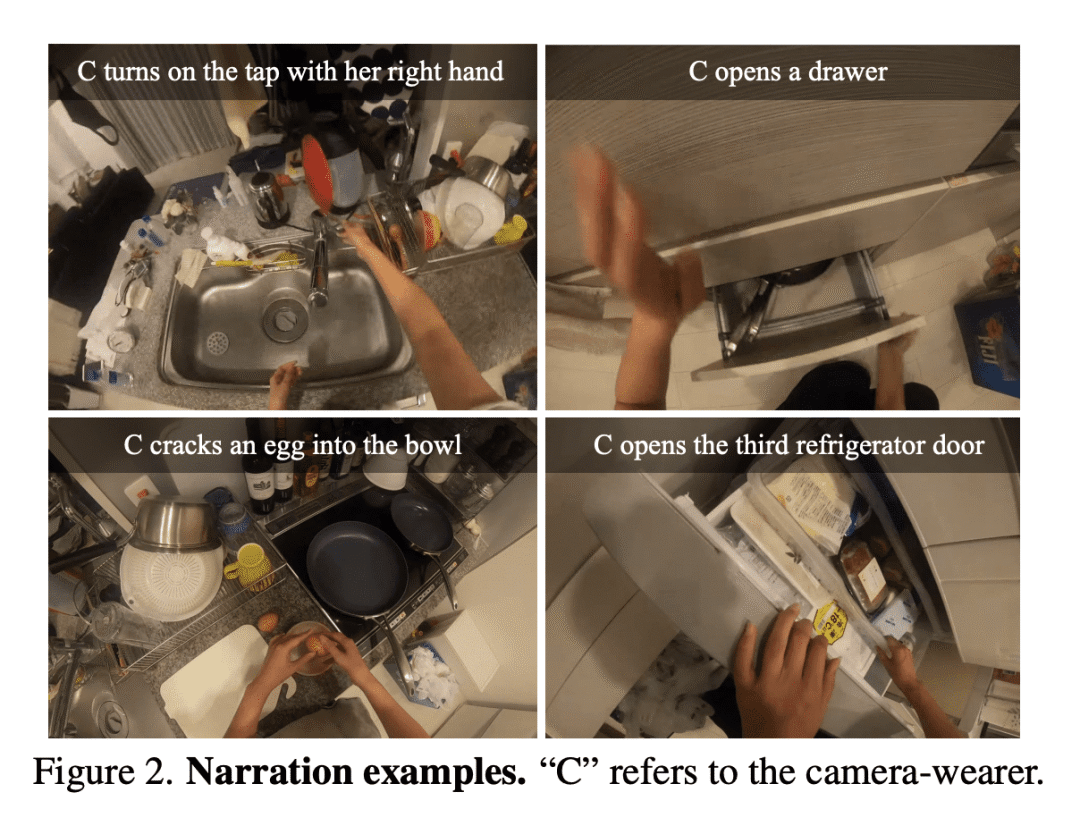

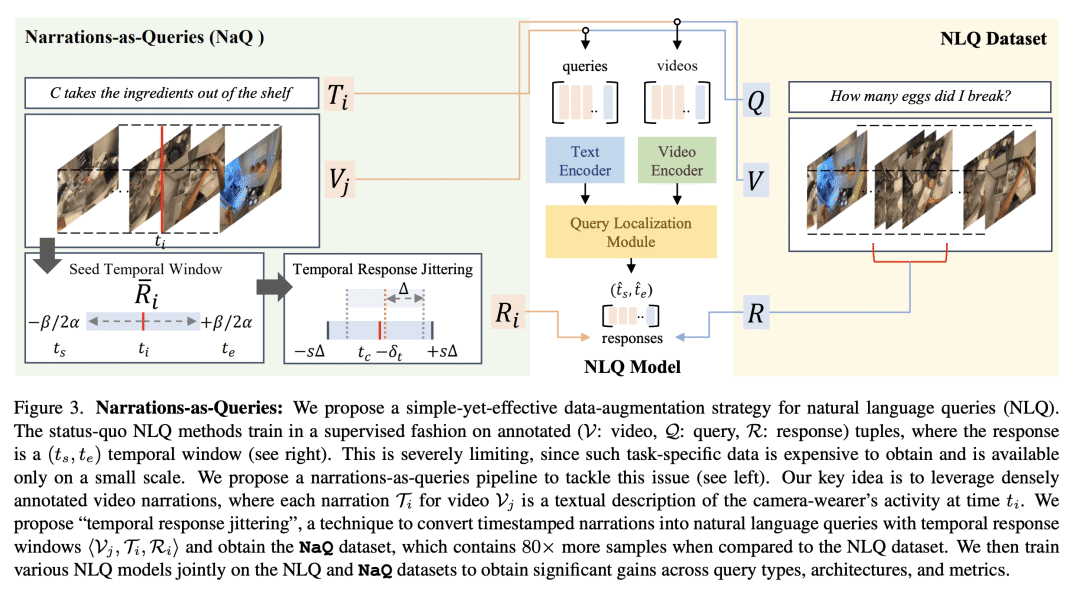

Searching long egocentric videos with natural language queries (NLQ) has compelling applications in augmented reality and robotics, where a fluid index into everything that a person (agent) has seen before could augment human memory and surface relevant information on demand. However, the structured nature of the learning problem (free-form text query inputs, localized video temporal window outputs) and its needle-in-a-haystack nature make it both technically challenging and expensive to supervise. We introduce Narrations-as-Queries (NaQ), a data augmentation strategy that transforms standard video-text narrations into training data for a video query localization model. Validating our idea on the Ego4D benchmark, we find it has tremendous impact in practice. NaQ improves multiple top models by substantial margins (even doubling their accuracy), and yields the very best results to date on the Ego4D NLQ challenge, soundly outperforming all challenge winners in the CVPR and ECCV 2022 competitions and topping the current public leaderboard. Beyond achieving the state-of-the-art for NLQ, we also demonstrate unique properties of our approach such as gains on long-tail object queries, and the ability to perform zero-shot and few-shot NLQ.
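
As a rough illustration of the idea in the abstract, narrations can be turned into extra (query, temporal window) training pairs for a localization model. The sketch below is not the authors' implementation. It assumes each narration carries a single timestamp, and it uses a fixed `half_width` to build the window, which is a placeholder heuristic. The names `Narration`, `QuerySample`, `narrations_to_queries`, and `augment` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Narration:
    video_id: str
    timestamp: float  # seconds; assumption: one timestamp per narration
    text: str


@dataclass
class QuerySample:
    video_id: str
    query: str    # free-form text query
    start: float  # start of the target temporal window, in seconds
    end: float    # end of the target temporal window, in seconds


def narrations_to_queries(
    narrations: List[Narration],
    video_duration: float,
    half_width: float = 2.0,  # assumption: fixed window half-width, not from the paper
) -> List[QuerySample]:
    """Treat each narration as a query whose answer is a window around its timestamp."""
    samples = []
    for n in narrations:
        # Clamp the window to the video boundaries.
        start = max(0.0, n.timestamp - half_width)
        end = min(video_duration, n.timestamp + half_width)
        if end <= start:
            continue  # skip narrations that fall outside the video
        samples.append(QuerySample(n.video_id, n.text.strip(), start, end))
    return samples


def augment(
    nlq_samples: List[QuerySample],
    narrations: List[Narration],
    video_duration: float,
) -> List[QuerySample]:
    """Combine annotated NLQ samples with narration-derived samples."""
    return list(nlq_samples) + narrations_to_queries(narrations, video_duration)
```

For example, a narration "C picks up a cup" at 50.0 s in a 60 s video would become a query with target window [48.0, 52.0] under these assumptions, and the combined list could then be fed to any query localization model in place of the annotated set alone.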

Paper link: https://arxiv.org/abs/2301.00746
