From today's 爱可可 AI frontier recommendations

[CV] Learning by Sorting: Self-supervised Learning with Group Ordering Constraints

N Shvetsova, F Petersen, A Kukleva...

[Goethe University Frankfurt & Stanford University & Max-Planck-Institute for Informatics]

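Key points:

- Proposes a new approach to self-supervised contrastive learning using Group Ordering Constraints (GroCo), which compares groups of positive and negative images rather than pairs of images;
- Uses differentiable sorting algorithms to enforce that the distances of all positive samples are smaller than the distances of all negative images, which gives a more holistic optimization of local neighborhoods;
- Shows competitive linear separability of the embeddings and improved k-NN performance across benchmarks, outperforming current state-of-the-art frameworks on a range of nearest-neighbor tasks.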

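One-sentence summary:

Proposes a new self-supervised contrastive learning method based on Group Ordering Constraints (GroCo) that improves performance across benchmarks and outperforms current state-of-the-art frameworks on nearest-neighbor tasks.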

Abstract:

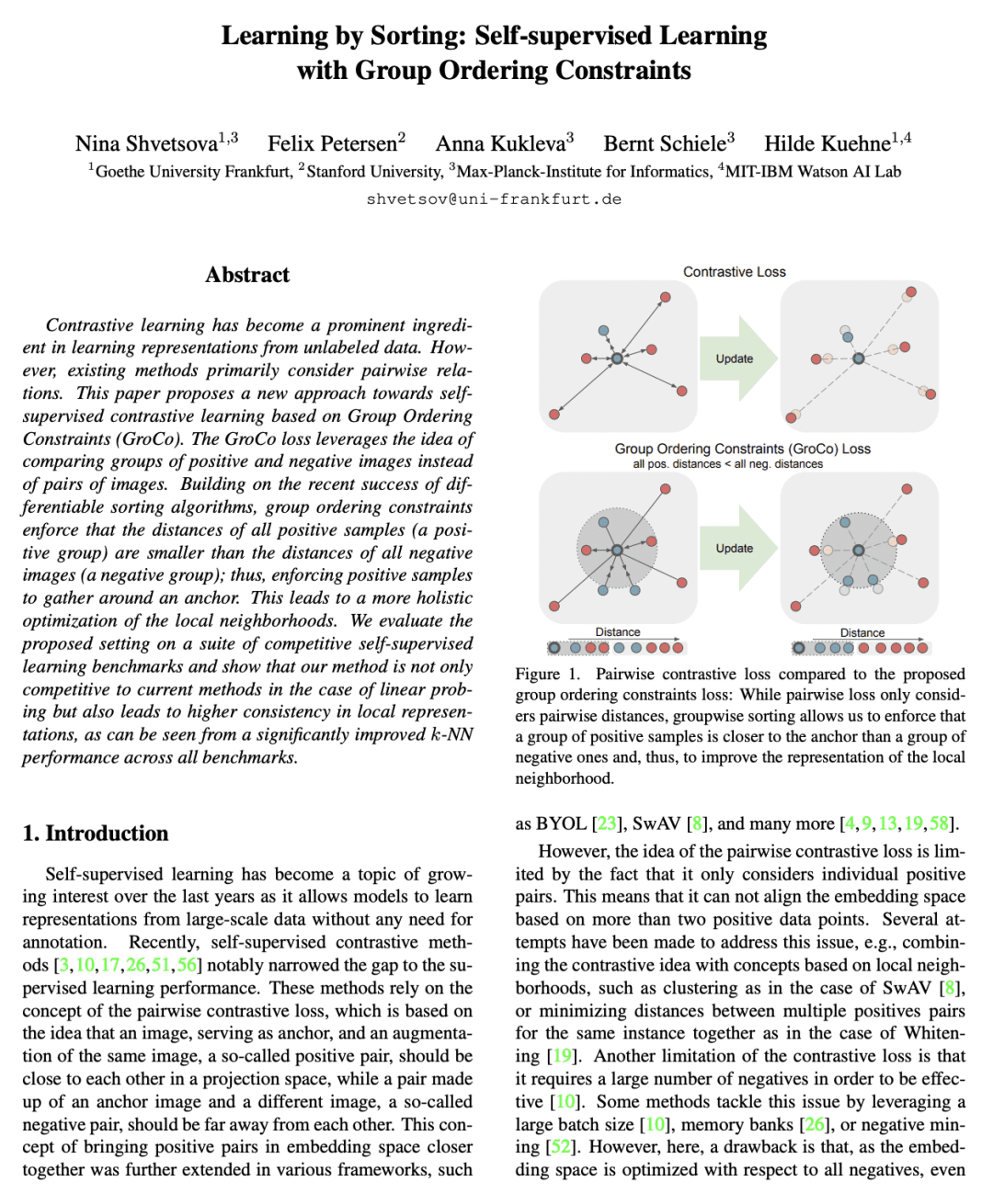

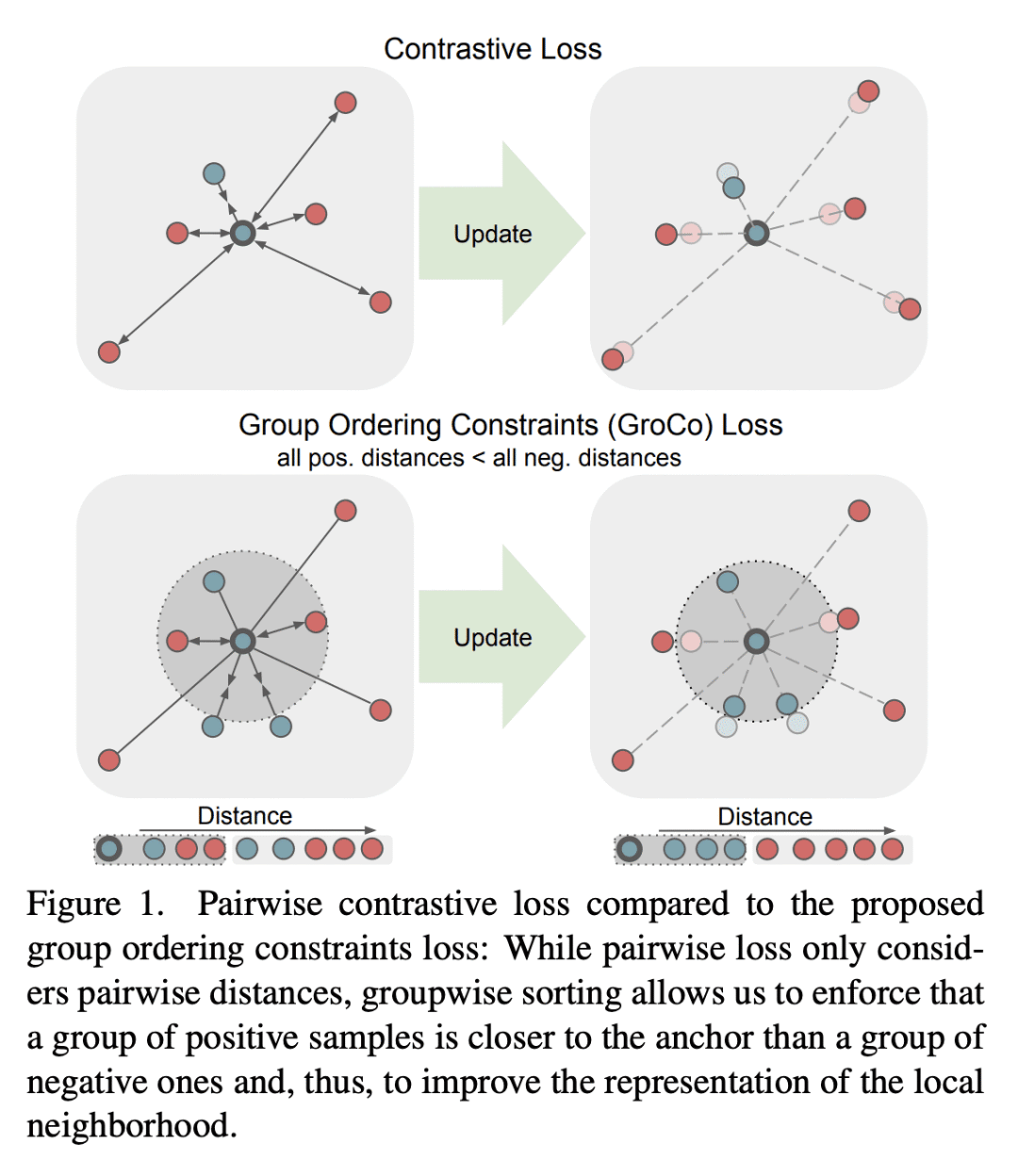

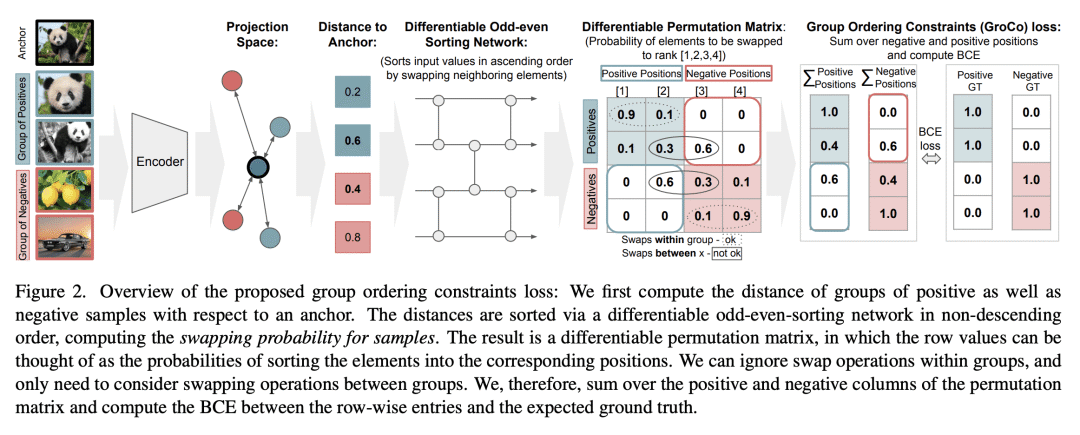

Contrastive learning has become a prominent ingredient in learning representations from unlabeled data. However, existing methods primarily consider pairwise relations. This paper proposes a new approach towards self-supervised contrastive learning based on Group Ordering Constraints (GroCo). The GroCo loss leverages the idea of comparing groups of positive and negative images instead of pairs of images. Building on the recent success of differentiable sorting algorithms, group ordering constraints enforce that the distances of all positive samples (a positive group) are smaller than the distances of all negative images (a negative group); thus, enforcing positive samples to gather around an anchor. This leads to a more holistic optimization of the local neighborhoods. We evaluate the proposed setting on a suite of competitive self-supervised learning benchmarks and show that our method is not only competitive to current methods in the case of linear probing but also leads to higher consistency in local representations, as can be seen from a significantly improved k-NN performance across all benchmarks.
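
To make the group ordering constraint concrete, here is a minimal sketch in plain Python. It is not the paper's GroCo loss, which builds on differentiable sorting algorithms; as a simplification it swaps in a pairwise softplus penalty over every (positive, negative) pair. The function names, the use of cosine distance, and the `temperature` parameter are assumptions made for illustration only.

```python
import math


def cosine_distance(u, v):
    """Return 1 - cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def group_ordering_violations(anchor, positives, negatives):
    """Count (positive, negative) pairs that break the group ordering.

    The constraint holds when every positive is strictly closer to the
    anchor than every negative, i.e. when this count is zero.
    """
    d_pos = [cosine_distance(anchor, p) for p in positives]
    d_neg = [cosine_distance(anchor, n) for n in negatives]
    return sum(1 for dp in d_pos for dn in d_neg if dp >= dn)


def soft_group_ordering_loss(anchor, positives, negatives, temperature=0.1):
    """Smooth stand-in for the constraint (NOT the paper's loss).

    Averages softplus((d_pos - d_neg) / temperature) over all pairs, so the
    value approaches zero once all positives are well inside all negatives.
    """
    d_pos = [cosine_distance(anchor, p) for p in positives]
    d_neg = [cosine_distance(anchor, n) for n in negatives]
    total = 0.0
    for dp in d_pos:
        for dn in d_neg:
            z = (dp - dn) / temperature
            # Numerically stable softplus: log(1 + exp(z)).
            total += max(z, 0.0) + math.log1p(math.exp(-abs(z)))
    return total / (len(d_pos) * len(d_neg))


# Toy 2-D embeddings: positives point roughly along the anchor,
# negatives point elsewhere.
anchor = [1.0, 0.0]
positives = [[0.9, 0.1], [0.8, 0.2]]
negatives = [[0.0, 1.0], [-1.0, 0.0]]

violations = group_ordering_violations(anchor, positives, negatives)
loss = soft_group_ordering_loss(anchor, positives, negatives)
```

The hard count shows what the constraint asks for, while the soft loss is the kind of quantity one could minimize with gradients. A sorting-based formulation, as described in the abstract, treats the positive and negative groups jointly rather than summing independent pairwise terms.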
