From today's 爱可可 AI frontier picks

[CV] Box2Mask: Box-supervised Instance Segmentation via Level-set Evolution

W Li, W Liu, J Zhu, M Cui, R Yu, X Hua, L Zhang

[Zhejiang University & Alibaba Group & The Hong Kong Polytechnic University]

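Key points:

- Proposes Box2Mask, a new single-shot instance segmentation method trained with bounding box annotations instead of pixel-level mask labels;
- Box2Mask integrates the classical level-set evolution model into deep neural network learning, so that the network learns the level-set curves and predicts accurate masks;
- A local consistency module based on a pixel affinity kernel mines local context and spatial relations.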
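
To give a feel for what a pixel affinity kernel does, here is a minimal NumPy sketch. It is not the paper's module: the Gaussian color kernel, the 3x3 neighborhood, and the names `local_affinity_smooth`, `sigma`, and `radius` are all assumptions chosen for illustration. Each pixel's value is replaced by a weighted average of its neighbors, where neighbors with similar color get higher weight, so smoothing stays within regions and does not leak across edges.

```python
import numpy as np

def local_affinity_smooth(feat, image, sigma=0.1, radius=1):
    """Smooth `feat` (H, W) using affinities computed from `image` (H, W, C).

    Hypothetical helper: the affinity between a pixel and a neighbor is
    exp(-||color difference||^2 / (2 * sigma^2)), an assumed kernel form.
    """
    h, w = feat.shape
    pad_f = np.pad(feat, radius, mode="edge")
    pad_i = np.pad(image, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    num = np.zeros_like(feat, dtype=np.float64)
    den = np.zeros_like(feat, dtype=np.float64)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Shifted views give every pixel's neighbor at offset (dy, dx).
            ys = slice(radius + dy, radius + dy + h)
            xs = slice(radius + dx, radius + dx + w)
            diff2 = np.sum((image - pad_i[ys, xs]) ** 2, axis=-1)
            weight = np.exp(-diff2 / (2.0 * sigma ** 2))
            num += weight * pad_f[ys, xs]
            den += weight
    # The center pixel always contributes weight 1, so `den` is never zero.
    return num / den
```

On an image with a sharp color edge, a map that follows the edge passes through almost unchanged, while noise inside a uniform region is averaged out.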

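One-sentence summary:

Box2Mask is a new single-shot instance segmentation method that trains from bounding box annotations by integrating level-set evolution with deep neural networks; it predicts accurate masks across a range of datasets and, with a Swin-Transformer large backbone, reaches 42.4% mask AP on COCO, on par with recent fully mask-supervised methods.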

Abstract:

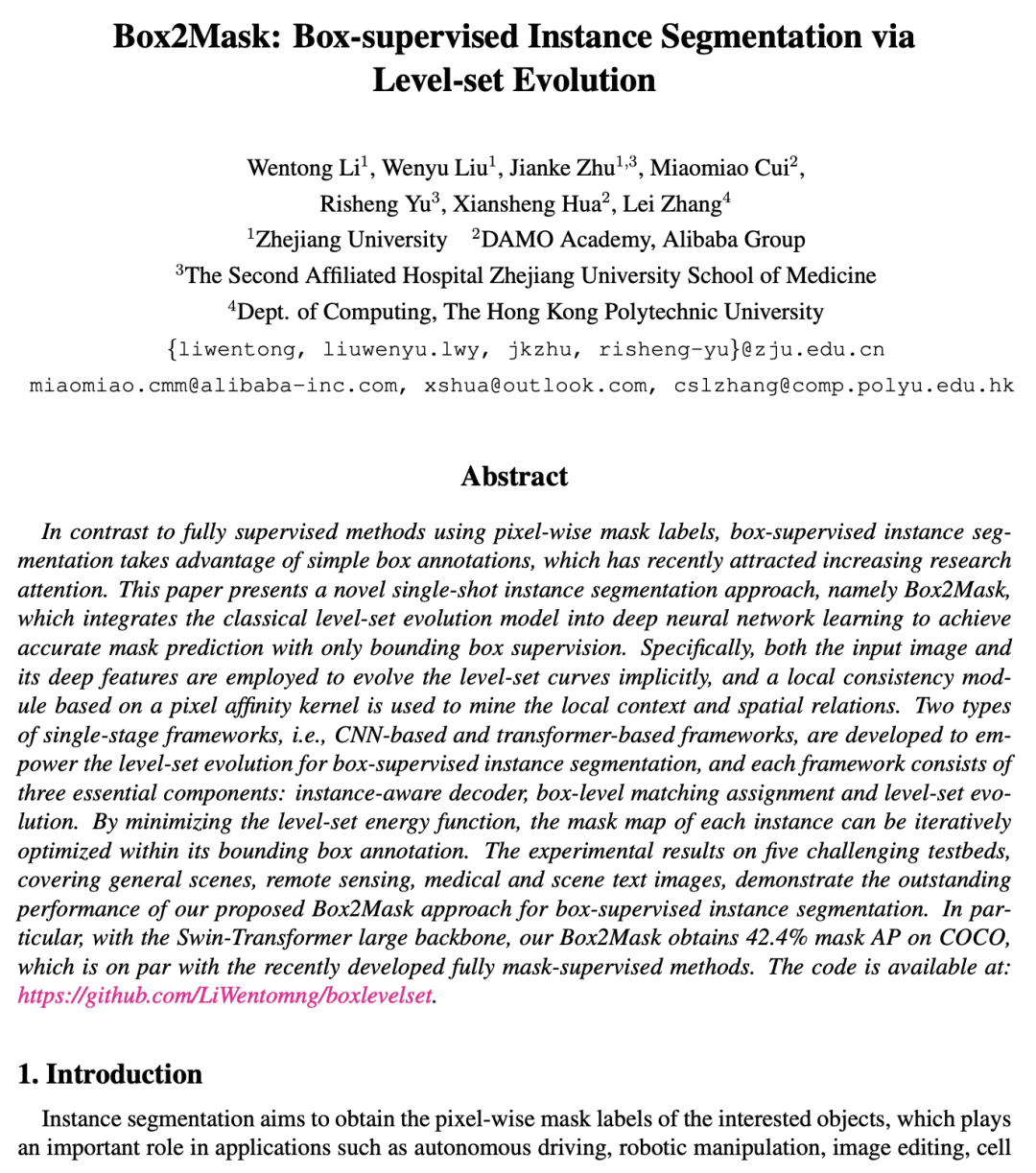

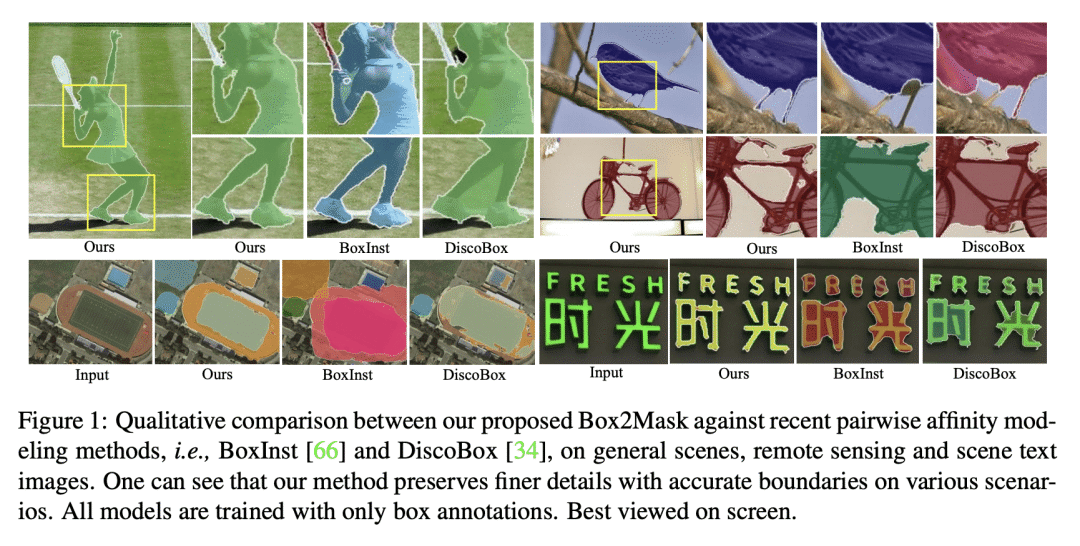

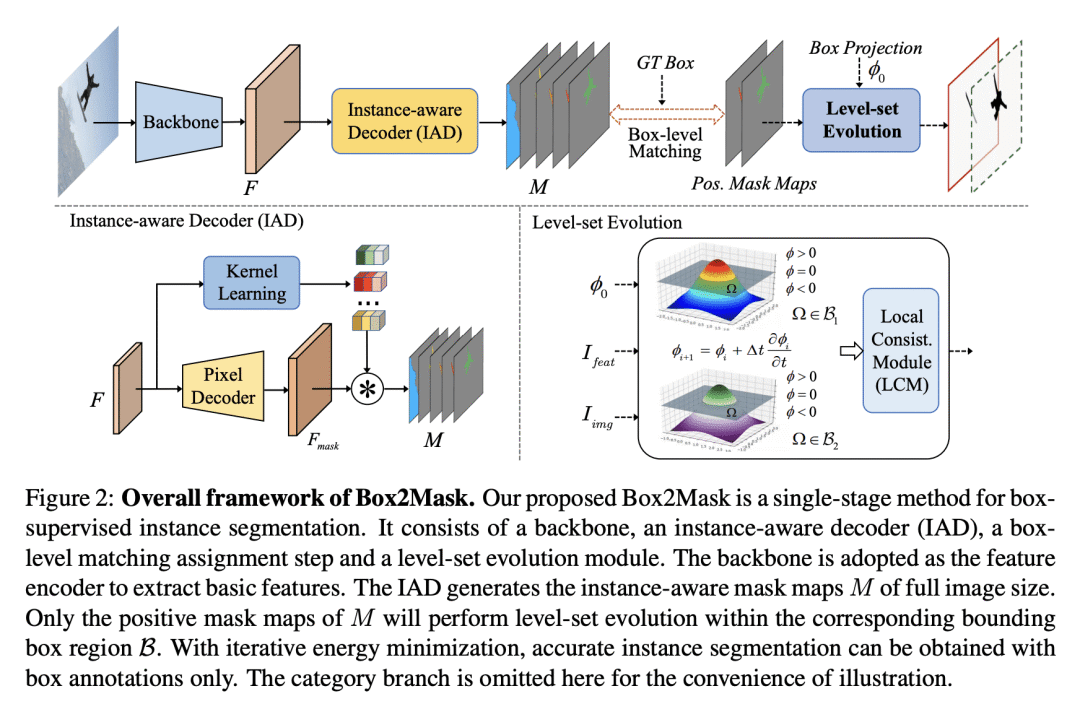

In contrast to fully supervised methods using pixel-wise mask labels, box-supervised instance segmentation takes advantage of simple box annotations, which has recently attracted increasing research attention. This paper presents a novel single-shot instance segmentation approach, namely Box2Mask, which integrates the classical level-set evolution model into deep neural network learning to achieve accurate mask prediction with only bounding box supervision. Specifically, both the input image and its deep features are employed to evolve the level-set curves implicitly, and a local consistency module based on a pixel affinity kernel is used to mine the local context and spatial relations. Two types of single-stage frameworks, i.e., CNN-based and transformer-based frameworks, are developed to empower the level-set evolution for box-supervised instance segmentation, and each framework consists of three essential components: instance-aware decoder, box-level matching assignment and level-set evolution. By minimizing the level-set energy function, the mask map of each instance can be iteratively optimized within its bounding box annotation. The experimental results on five challenging testbeds, covering general scenes, remote sensing, medical and scene text images, demonstrate the outstanding performance of our proposed Box2Mask approach for box-supervised instance segmentation. In particular, with the Swin-Transformer large backbone, our Box2Mask obtains 42.4% mask AP on COCO, which is on par with the recently developed fully mask-supervised methods.
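
The idea of minimizing a level-set energy inside a box can be sketched with a classical Chan-Vese-style region energy. This is a rough illustration under stated assumptions, not the paper's implementation: it uses only grayscale intensity (no deep features), drops any curve-length regularizer, optimizes the level-set values directly by gradient descent instead of training a network, and starts from a hypothetical center-biased initialization. The names `evolve_in_box`, `region_means`, and `region_energy` are made up for this sketch.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def region_means(u, m, eps=1e-8):
    """Soft-mask-weighted mean of u inside (m) and outside (1 - m)."""
    c_in = (u * m).sum() / (m.sum() + eps)
    c_out = (u * (1.0 - m)).sum() / ((1.0 - m).sum() + eps)
    return c_in, c_out

def region_energy(u, m):
    """Chan-Vese-style region energy of soft mask m on input u."""
    c_in, c_out = region_means(u, m)
    return float(((u - c_in) ** 2 * m + (u - c_out) ** 2 * (1.0 - m)).sum())

def evolve_in_box(image, box, steps=200, lr=0.5):
    """Evolve a soft mask inside box = (y0, x0, y1, x1) on a 2D image."""
    y0, x0, y1, x1 = box
    u = image[y0:y1, x0:x1].astype(np.float64)
    h, w = u.shape
    # Assumed initialization: positive near the box center, negative at its border.
    ys = np.abs(np.arange(h) - (h - 1) / 2.0) / (h / 2.0)
    xs = np.abs(np.arange(w) - (w - 1) / 2.0) / (w / 2.0)
    phi = 1.0 - 2.0 * np.maximum(ys[:, None], xs[None, :])
    for _ in range(steps):
        m = sigmoid(phi)
        c_in, c_out = region_means(u, m)
        # Energy gradient w.r.t. m with the region means held at their optimum.
        grad_m = (u - c_in) ** 2 - (u - c_out) ** 2
        phi -= lr * grad_m * m * (1.0 - m)  # chain rule through the sigmoid
    mask = np.zeros(image.shape, dtype=np.float64)
    mask[y0:y1, x0:x1] = sigmoid(phi)  # pixels outside the box stay background
    return mask
```

Calling `evolve_in_box(img, (4, 4, 16, 16))` on a grayscale array returns a soft mask of the same shape; thresholding it at 0.5 gives a binary mask confined to the box.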

Paper link: https://arxiv.org/abs/2212.01579
