From today's 爱可可 AI Frontier Picks

[LG] A Comprehensive Survey to Dataset Distillation

S Lei, D Tao

[The University of Sydney & JD Explore Academy]

A Survey of Dataset Distillation

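Key points:

- Provides a comprehensive survey of dataset distillation, a family of techniques for reducing the amount of data that deep learning requires;
- Divides existing dataset distillation methods into two categories: meta-learning frameworks and data matching frameworks;
- Assesses the limitations of current dataset distillation methods, such as the difficulty of distilling high-resolution data;
- Discusses the challenges and future directions of dataset distillation research.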
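For orientation, the two families can be written roughly as below. This is a generic formulation under assumed notation (synthetic set $\mathcal{S}$, real/target set $\mathcal{T}$, model parameters $\theta$, a distance $D$, and a matched quantity $\phi$), not necessarily the survey's own notation. Meta-learning methods optimise $\mathcal{S}$ through the model trained on it. Data matching methods instead make some quantity $\phi$ computed on $\mathcal{S}$ mimic the same quantity on $\mathcal{T}$, commonly gradients, training trajectories, or feature distributions.

```latex
% Meta-learning framework: bilevel optimisation
\mathcal{S}^{*} = \arg\min_{\mathcal{S}} \; \mathcal{L}_{\mathcal{T}}\big(\theta^{*}(\mathcal{S})\big)
\quad \text{s.t.} \quad
\theta^{*}(\mathcal{S}) = \arg\min_{\theta} \; \mathcal{L}_{\mathcal{S}}(\theta)

% Data matching framework: explicitly mimic a quantity of the target data
\mathcal{S}^{*} = \arg\min_{\mathcal{S}} \; D\big(\phi(\mathcal{S}),\, \phi(\mathcal{T})\big)
```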

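One-sentence summary:

Provides a comprehensive survey of dataset distillation techniques, classifies existing methods into meta-learning and data matching frameworks, evaluates their current limitations, and discusses challenges and future research directions.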

Abstract:

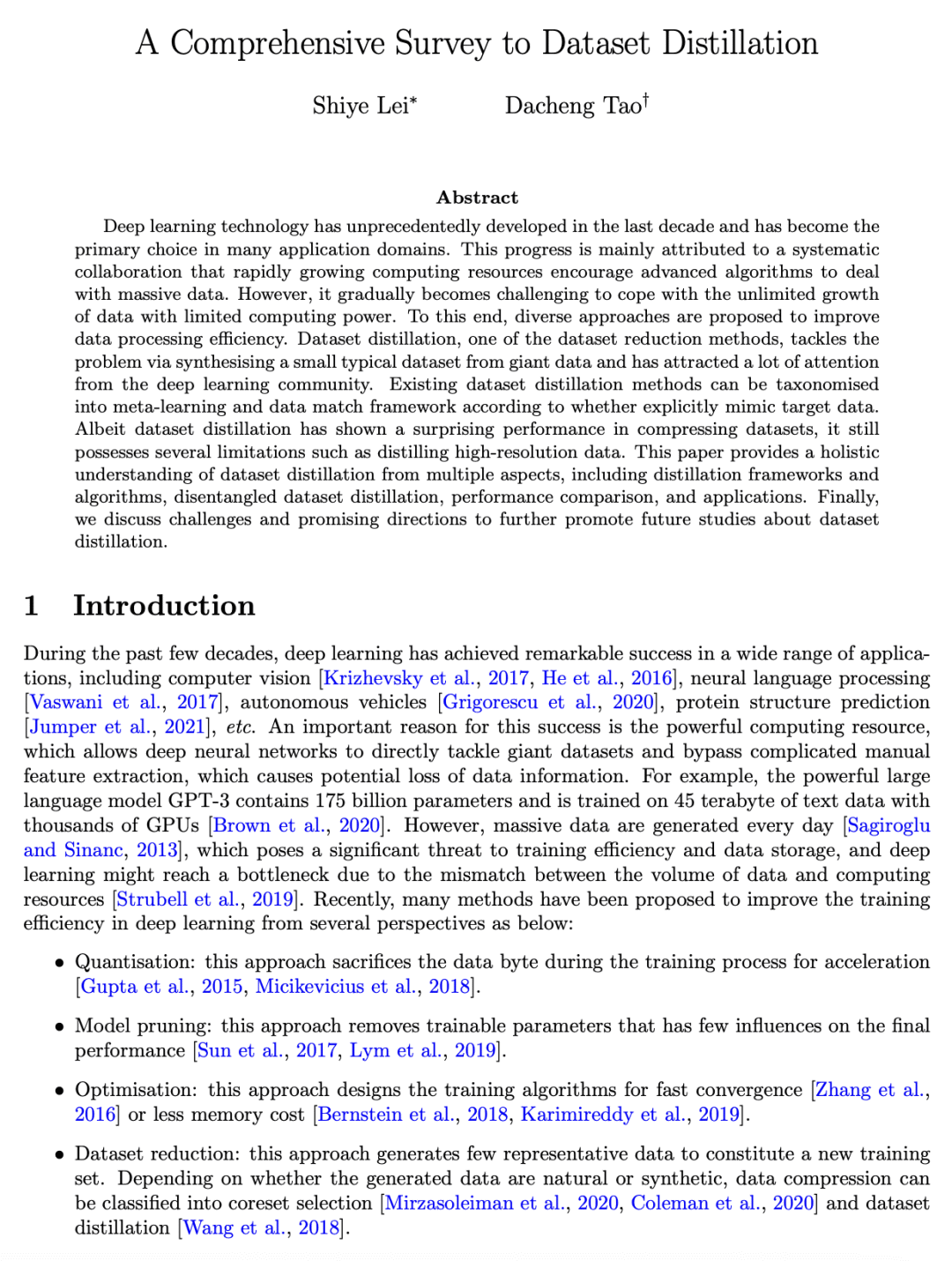

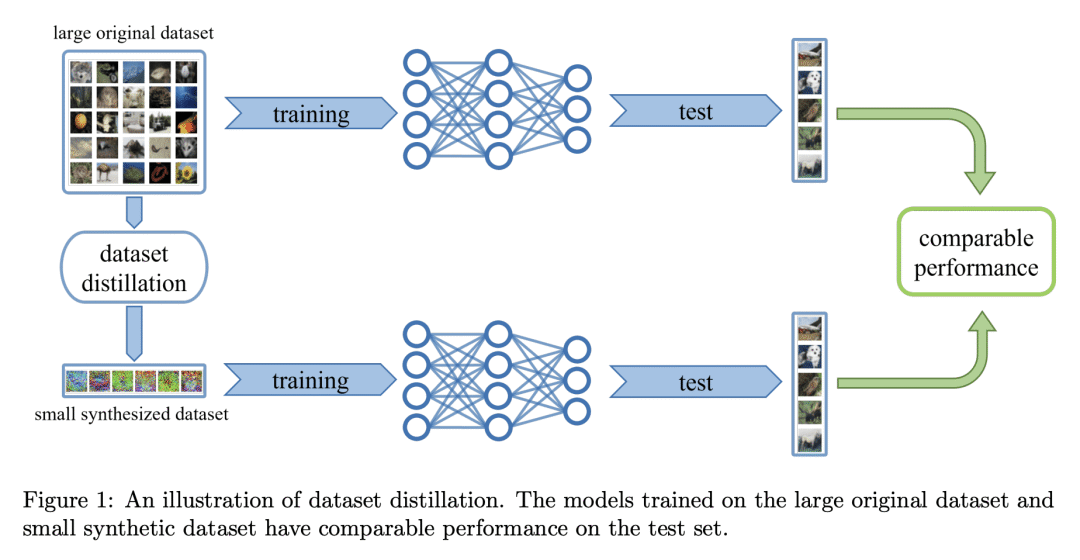

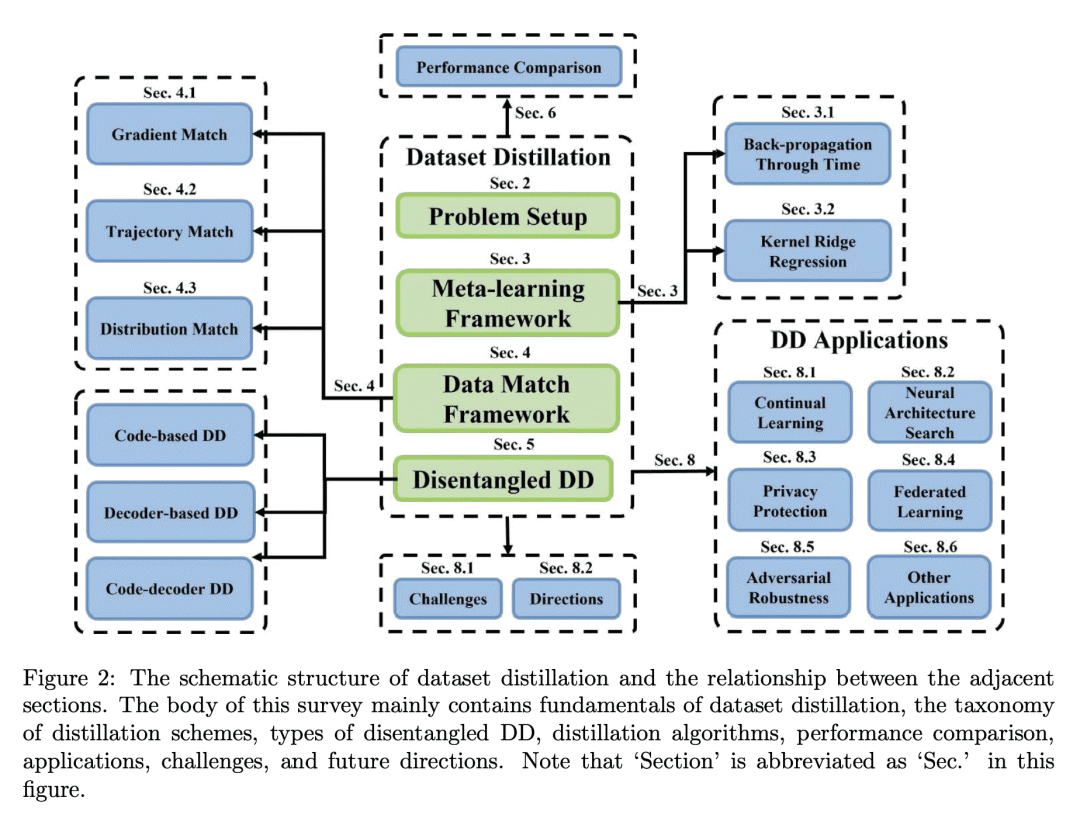


Deep learning technology has developed at an unprecedented pace over the last decade and has become the primary choice in many application domains. This progress is mainly attributed to a systematic collaboration in which rapidly growing computing resources encourage advanced algorithms to deal with massive data. However, it is gradually becoming challenging to cope with the unlimited growth of data with limited computing power. To this end, diverse approaches have been proposed to improve data processing efficiency. Dataset distillation, one of the dataset reduction methods, tackles the problem by synthesising a small, typical dataset from giant data and has attracted a lot of attention from the deep learning community. Existing dataset distillation methods can be taxonomised into meta-learning and data matching frameworks according to whether they explicitly mimic the target data. Although dataset distillation has shown surprising performance in compressing datasets, it still has several limitations, such as distilling high-resolution data. This paper provides a holistic understanding of dataset distillation from multiple aspects, including distillation frameworks and algorithms, disentangled dataset distillation, performance comparison, and applications. Finally, we discuss challenges and promising directions to further promote future studies on dataset distillation.
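To make the meta-learning (bilevel) idea concrete, here is a minimal, stdlib-only Python sketch under toy assumptions that do not come from the paper: a one-parameter linear model `w * x`, squared loss, a fixed initialisation `W0`, and a single unrolled inner gradient step. One synthetic pair `(xs, ys)` is learned so that a model trained on it alone does well on the "real" data. All names (`REAL_X`, `inner_step`, `outer_loss_grad`, `distill`) are hypothetical. Practical methods unroll many steps through deep networks and use automatic differentiation instead of the hand-derived chain rule below.

```python
# Toy meta-learning dataset distillation (illustrative sketch, not the survey's code).
# Assumptions: 1-D linear model y_hat = w * x, squared loss, model initialised at W0,
# and ONE unrolled inner gradient step on the synthetic data.

REAL_X = [-1.0, -0.5, 0.5, 1.0]
REAL_Y = [3.0 * x for x in REAL_X]  # stand-in "large" real dataset, true slope 3
W0 = 0.0     # fixed initialisation of the model trained on synthetic data
ALPHA = 1.0  # inner-loop learning rate


def inner_step(xs: float, ys: float) -> float:
    """Train on the single synthetic pair (xs, ys) for one gradient step from W0."""
    grad = (W0 * xs - ys) * xs  # d/dw of 0.5 * (w*xs - ys)^2, evaluated at W0
    return W0 - ALPHA * grad


def outer_loss_grad(w: float) -> tuple[float, float]:
    """Mean squared loss of model w on the real data, and its derivative in w."""
    n = len(REAL_X)
    loss = sum(0.5 * (w * x - y) ** 2 for x, y in zip(REAL_X, REAL_Y)) / n
    dloss_dw = sum((w * x - y) * x for x, y in zip(REAL_X, REAL_Y)) / n
    return loss, dloss_dw


def distill(steps: int = 500, lr: float = 0.1) -> tuple[float, float]:
    """Outer loop: gradient descent on the synthetic pair itself."""
    xs, ys = 1.0, 0.0  # synthetic sample to be learned
    for _ in range(steps):
        w1 = inner_step(xs, ys)
        _, dl_dw = outer_loss_grad(w1)
        # Chain rule through w1 = W0 - ALPHA * (W0*xs - ys) * xs
        dw_dxs = -ALPHA * (2.0 * W0 * xs - ys)
        dw_dys = ALPHA * xs
        # Simultaneous update: both partials were computed from the old (xs, ys)
        xs -= lr * dl_dw * dw_dxs
        ys -= lr * dl_dw * dw_dys
    return xs, ys


if __name__ == "__main__":
    xs, ys = distill()
    w = inner_step(xs, ys)
    print(f"synthetic pair: ({xs:.3f}, {ys:.3f})  model trained on it: w = {w:.3f}")
    print(f"real-data loss: {outer_loss_grad(w)[0]:.2e}")
```

A model trained only on the distilled pair recovers a slope close to the real data's slope of 3. The gradients are derived by hand here because the single unrolled step is a small polynomial in `xs` and `ys`; with a neural network one would backpropagate through the inner training loop instead.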

Paper link: https://arxiv.org/abs/2301.05603
