From today's 爱可可 AI frontier recommendations

[LG] Human-Timescale Adaptation in an Open-Ended Task Space

Adaptive Agent Team, J Bauer, K Baumli, S Baveja, F Behbahani...

[DeepMind]

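Key points:

- Proposes AdA, an agent capable of human-timescale adaptation across a range of challenging tasks;
- Trains AdA at scale with meta-reinforcement learning in an open-ended task space with an automated curriculum;
- Shows that adaptation is influenced by the memory architecture, the curriculum, and the size and complexity of the training task distribution.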

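One-sentence summary:

Proposes AdA, a reinforcement learning agent that combines meta-reinforcement learning, an automated curriculum, and an attention-based memory architecture to achieve rapid in-context adaptation, on a human-like timescale, across a vast open-ended task space.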

Abstract:

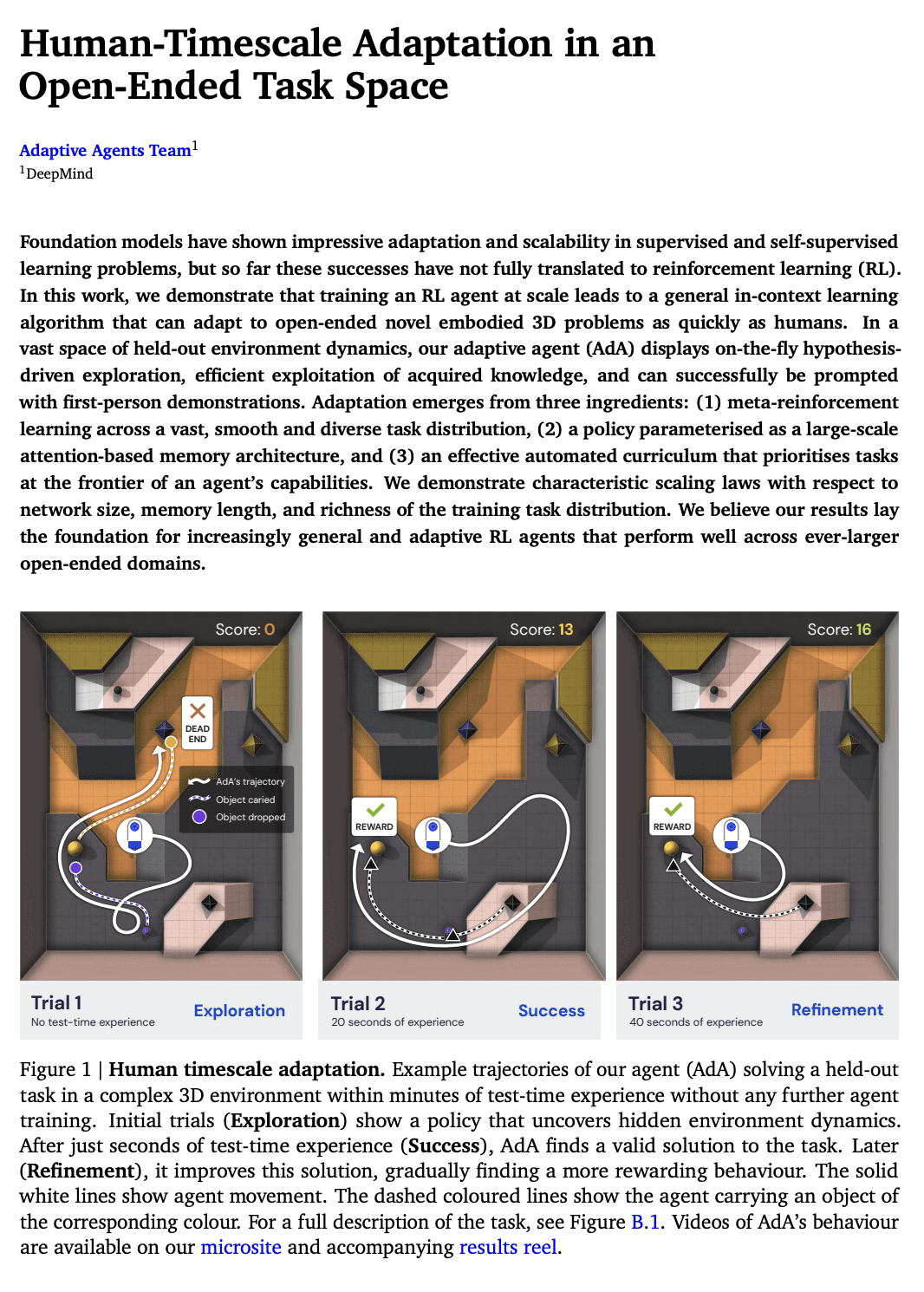

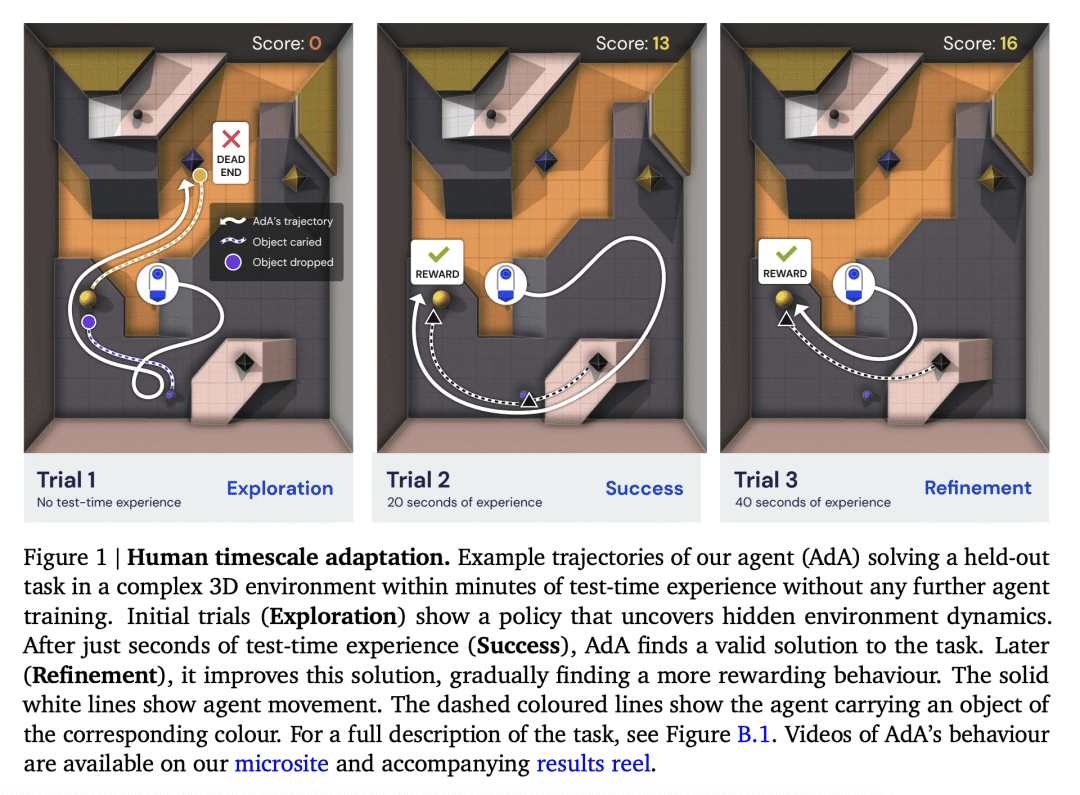

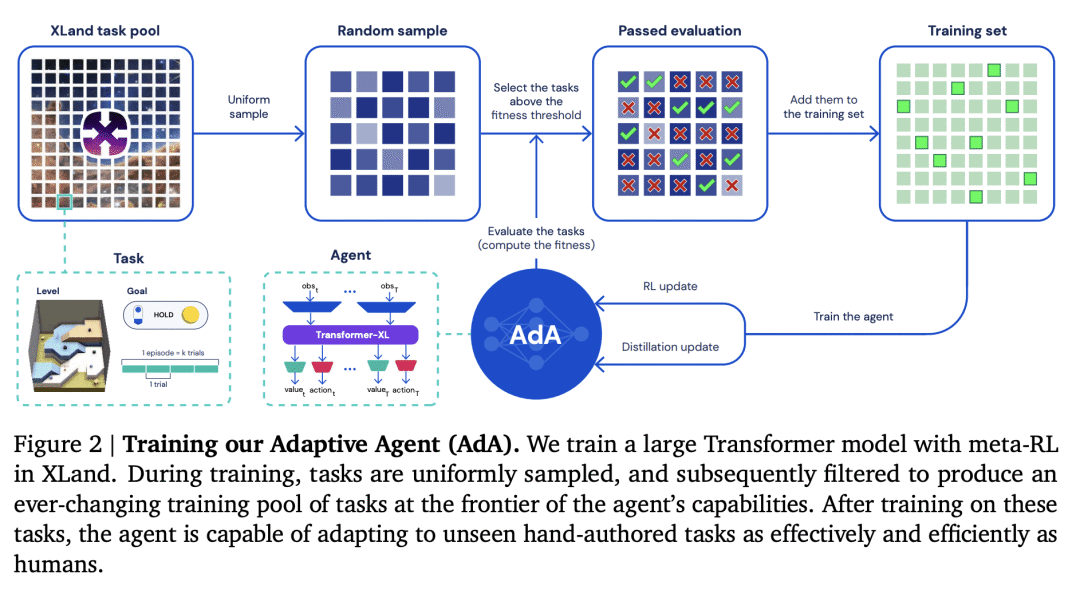

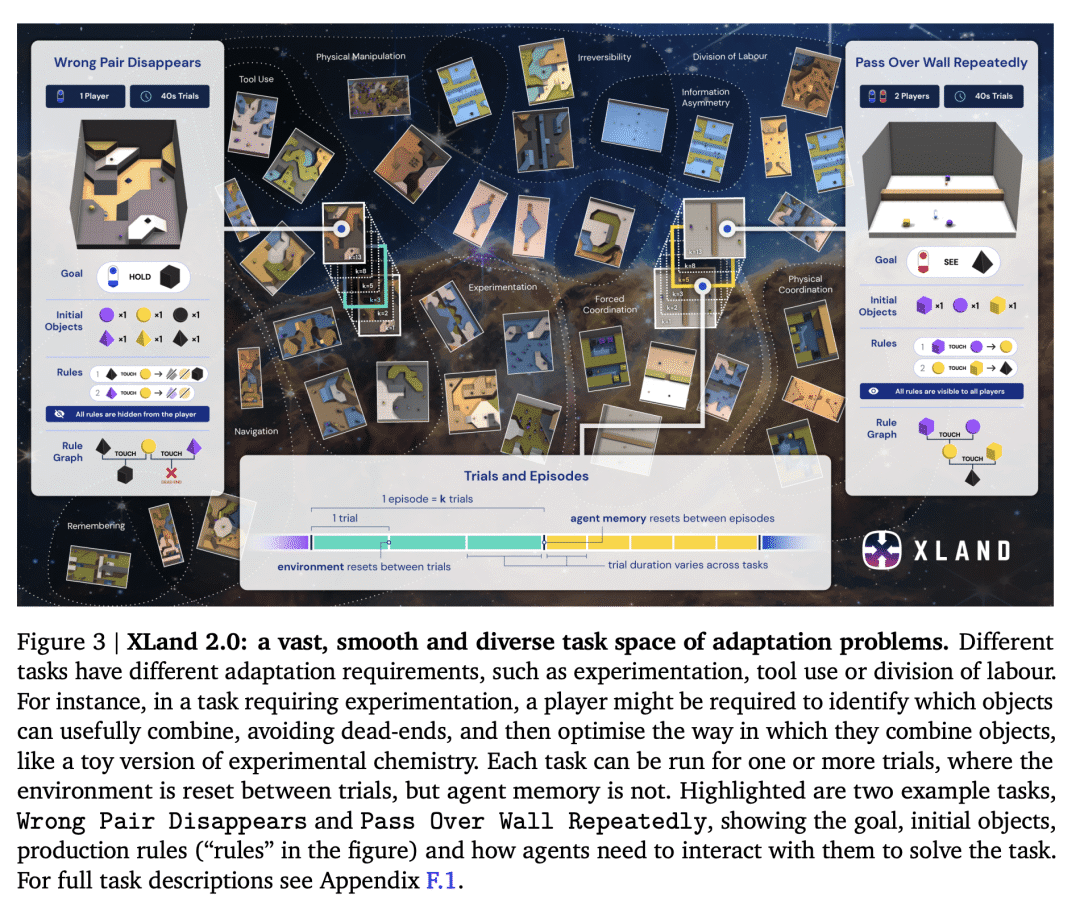

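Foundation models have shown impressive adaptability and scalability on supervised and self-supervised learning problems, but these successes have not yet fully carried over to reinforcement learning (RL). The paper shows that training an RL agent at scale yields a general in-context learning algorithm that adapts to open-ended, novel embodied 3D problems as quickly as humans do. Across a vast space of held-out environment dynamics, the proposed adaptive agent (AdA) exhibits on-the-fly hypothesis-driven exploration, exploits acquired knowledge efficiently, and can be successfully prompted with first-person demonstrations. Its adaptation arises from three ingredients: (1) meta-reinforcement learning across a vast, smooth and diverse task distribution, (2) a policy parameterised as a large-scale attention-based memory architecture, and (3) an effective automated curriculum that prioritises tasks at the frontier of the agent's capabilities. The authors demonstrate characteristic scaling laws with respect to network size, memory length, and the richness of the training task distribution. The results lay a foundation for increasingly general and adaptive RL agents that perform well across ever-larger open-ended domains.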

Foundation models have shown impressive adaptation and scalability in supervised and self-supervised learning problems, but so far these successes have not fully translated to reinforcement learning (RL). In this work, we demonstrate that training an RL agent at scale leads to a general in-context learning algorithm that can adapt to open-ended novel embodied 3D problems as quickly as humans. In a vast space of held-out environment dynamics, our adaptive agent (AdA) displays on-the-fly hypothesis-driven exploration, efficient exploitation of acquired knowledge, and can successfully be prompted with first-person demonstrations. Adaptation emerges from three ingredients: (1) meta-reinforcement learning across a vast, smooth and diverse task distribution, (2) a policy parameterised as a large-scale attention-based memory architecture, and (3) an effective automated curriculum that prioritises tasks at the frontier of an agent's capabilities. We demonstrate characteristic scaling laws with respect to network size, memory length, and richness of the training task distribution. We believe our results lay the foundation for increasingly general and adaptive RL agents that perform well across ever-larger open-ended domains.
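
To make ingredient (3) more concrete, here is a minimal sketch of what "prioritising tasks at the frontier of an agent's capabilities" could look like. It is an illustration only and is not taken from the paper: the weighting rule `p * (1 - p)`, the function names, and the task ids are all assumptions chosen for clarity. The idea is simply that tasks the agent always solves or never solves carry little learning signal, so sampling favours tasks with intermediate success rates.

```python
import random
from typing import Dict


def frontier_weight(success_rate: float) -> float:
    """Hypothetical weighting: peaks at 0.5 and is zero at 0.0 and 1.0."""
    return success_rate * (1.0 - success_rate)


def sample_task(success_rates: Dict[str, float], rng: random.Random) -> str:
    """Sample a task id with probability proportional to its frontier weight."""
    tasks = list(success_rates)
    weights = [frontier_weight(success_rates[t]) for t in tasks]
    if sum(weights) == 0.0:
        # No task is at the frontier; fall back to uniform sampling.
        return rng.choice(tasks)
    return rng.choices(tasks, weights=weights, k=1)[0]


# Hypothetical task ids and success estimates, for illustration only.
rates = {"too_easy": 1.0, "frontier": 0.5, "too_hard": 0.0}
rng = random.Random(0)
picks = [sample_task(rates, rng) for _ in range(5)]
```

In this toy setup only the task with a 50% success estimate has non-zero weight, so every sample is that task. A real curriculum would also need to estimate success rates online and keep exploring unseen tasks, which this sketch leaves out.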

Paper link: https://arxiv.org/abs/2301.07608
