[LG] An SDE for Modeling SAM: Theory and Insights

E M Compagnoni, A Orvieto, L Biggio, H Kersting, F N Proske...

[University of Basel & ETH Zurich & Ecole Normale Superieure PSL Research & University of Oslo]

Modeling SAM in SDE form: theory and insights

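Key points:

- Derives continuous-time models (in the form of SDEs) for SAM and its unnormalized variant USAM, in both the full-batch and mini-batch settings;
- Shows that these SDEs are rigorous approximations of the real discrete-time algorithms;
- Proves that SAM minimizes an implicitly regularized loss with a Hessian-dependent noise structure, which explains why SAM prefers flat minima over sharp ones.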
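The phrase "implicitly regularized loss" can be motivated by a first-order Taylor expansion of the perturbed gradient. The following is only a heuristic full-batch sketch, not the paper's theorem statement; the symbols $f$ (loss), $\rho$ (perturbation radius) and $x$ (parameters) are notation assumed here, and the mini-batch case adds the noise structure that the SDEs describe.

```latex
\begin{align*}
\text{USAM:}\quad
\nabla f\bigl(x + \rho \nabla f(x)\bigr)
  &\approx \nabla f(x) + \rho\, \nabla^2 f(x)\, \nabla f(x)
   = \nabla\Bigl( f(x) + \tfrac{\rho}{2} \lVert \nabla f(x) \rVert^2 \Bigr), \\
\text{SAM:}\quad
\nabla f\Bigl(x + \rho\, \tfrac{\nabla f(x)}{\lVert \nabla f(x) \rVert}\Bigr)
  &\approx \nabla f(x) + \rho\, \frac{\nabla^2 f(x)\, \nabla f(x)}{\lVert \nabla f(x) \rVert}
   = \nabla\bigl( f(x) + \rho \lVert \nabla f(x) \rVert \bigr).
\end{align*}
```

To first order in $\rho$, both methods therefore follow the gradient of the original loss plus a penalty on the gradient norm, and the Hessian enters through the correction term $\nabla^2 f(x)\,\nabla f(x)$.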

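One-sentence summary:

Proposes new continuous-time models (SDEs) for the SAM optimizer and its unnormalized variant USAM; these models are proven to approximate the real discrete-time optimizers, and they reveal why SAM prefers flat minima and, under some realistic conditions, is attracted to saddle points.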

Abstract:

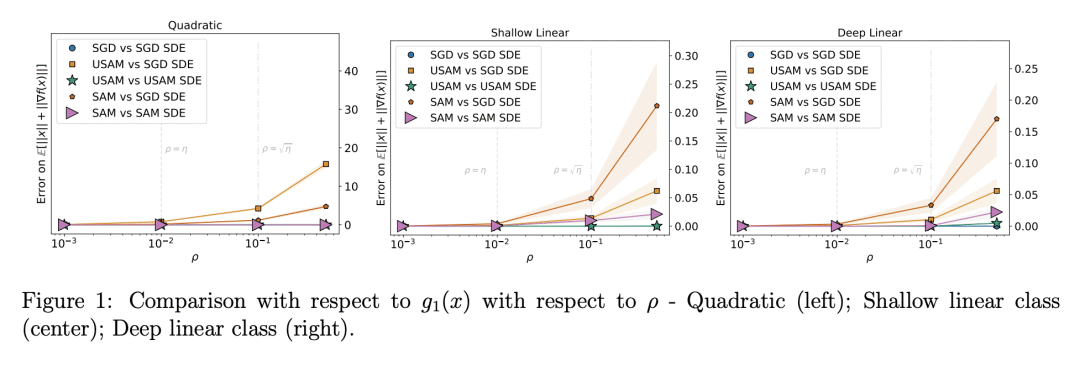

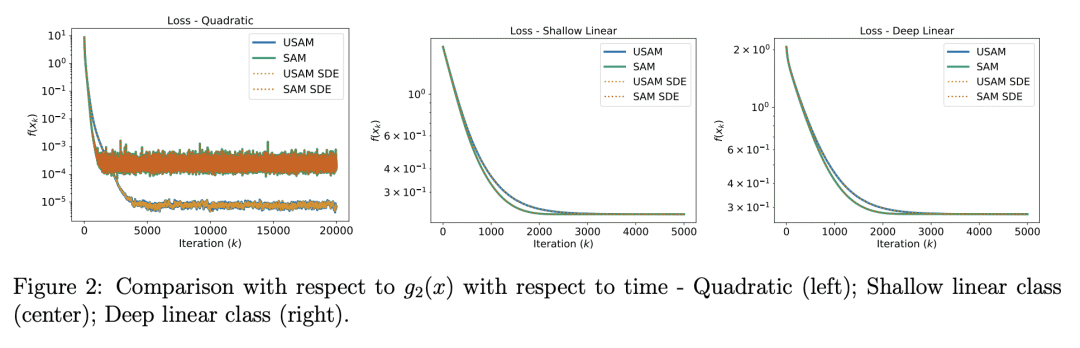

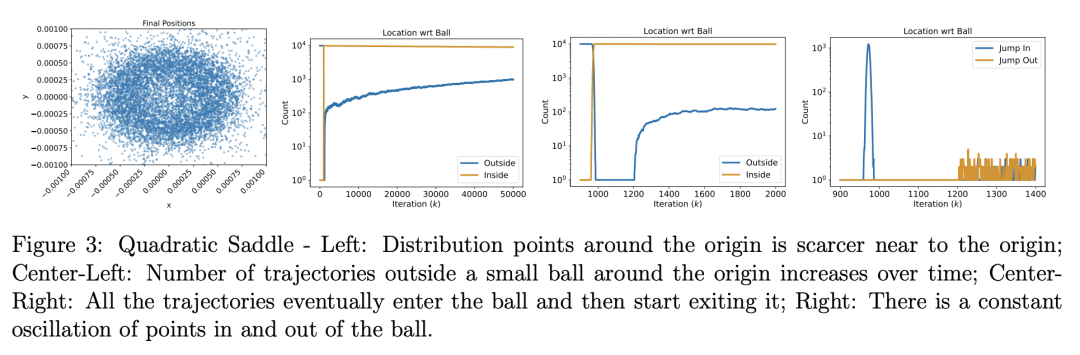

We study the SAM (Sharpness-Aware Minimization) optimizer which has recently attracted a lot of interest due to its increased performance over more classical variants of stochastic gradient descent. Our main contribution is the derivation of continuous-time models (in the form of SDEs) for SAM and its unnormalized variant USAM, both for the full-batch and mini-batch settings. We demonstrate that these SDEs are rigorous approximations of the real discrete-time algorithms (in a weak sense, scaling linearly with the step size). Using these models, we then offer an explanation of why SAM prefers flat minima over sharp ones - by showing that it minimizes an implicitly regularized loss with a Hessian-dependent noise structure. Finally, we prove that, perhaps unexpectedly, SAM is attracted to saddle points under some realistic conditions. Our theoretical results are supported by detailed experiments.
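To make the difference between SAM and USAM concrete, here is a minimal NumPy sketch of the two full-batch update rules as they are commonly written, run on a toy quadratic saddle. The function names, the matrix `H`, and the values of `eta` and `rho` are illustrative assumptions and are not taken from the paper's experiments.

```python
import numpy as np


def usam_step(x, grad, eta, rho):
    """One USAM step: the gradient is evaluated at the unnormalized ascent point."""
    g = grad(x)
    return x - eta * grad(x + rho * g)


def sam_step(x, grad, eta, rho, eps=1e-12):
    """One SAM step: the ascent direction is rescaled to have length rho."""
    g = grad(x)
    return x - eta * grad(x + rho * g / (np.linalg.norm(g) + eps))


# Hypothetical toy problem: f(x) = 0.5 * x^T H x, which has a saddle at the origin.
H = np.diag([1.0, -1.0])


def grad(x):
    return H @ x


def run(step, x0, n_steps, eta, rho):
    """Iterate a step function from x0 and return the final point."""
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        x = step(x, grad, eta, rho)
    return x


x0 = [1.0, 1.0]
x_gd = run(usam_step, x0, 50, eta=0.1, rho=0.0)    # rho = 0 recovers plain gradient descent
x_usam = run(usam_step, x0, 50, eta=0.1, rho=2.0)  # large rho: iterates shrink toward the saddle

print("GD   distance from saddle:", np.linalg.norm(x_gd))
print("USAM distance from saddle:", np.linalg.norm(x_usam))
```

On this toy problem each eigen-direction of `H` with eigenvalue `lam` is multiplied by `1 - eta * lam * (1 + rho * lam)` per USAM step, so a negative-curvature direction contracts once `rho * |lam| > 1` (for small enough `eta`). Plain gradient descent escapes along that direction, while USAM with a large `rho` is pulled into the saddle, which is a simple illustration of the saddle-attraction phenomenon the abstract mentions.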

Paper link: https://arxiv.org/abs/2301.08203
