From today's 爱可可 AI Frontier Picks

[LG] AtMan: Understanding Transformer Predictions Through Memory Efficient Attention Manipulation

M Deb, B Deiseroth, S Weinbach, P Schramowski, K Kersting

[Aleph Alpha GmbH & TU Darmstadt]

要点:

-

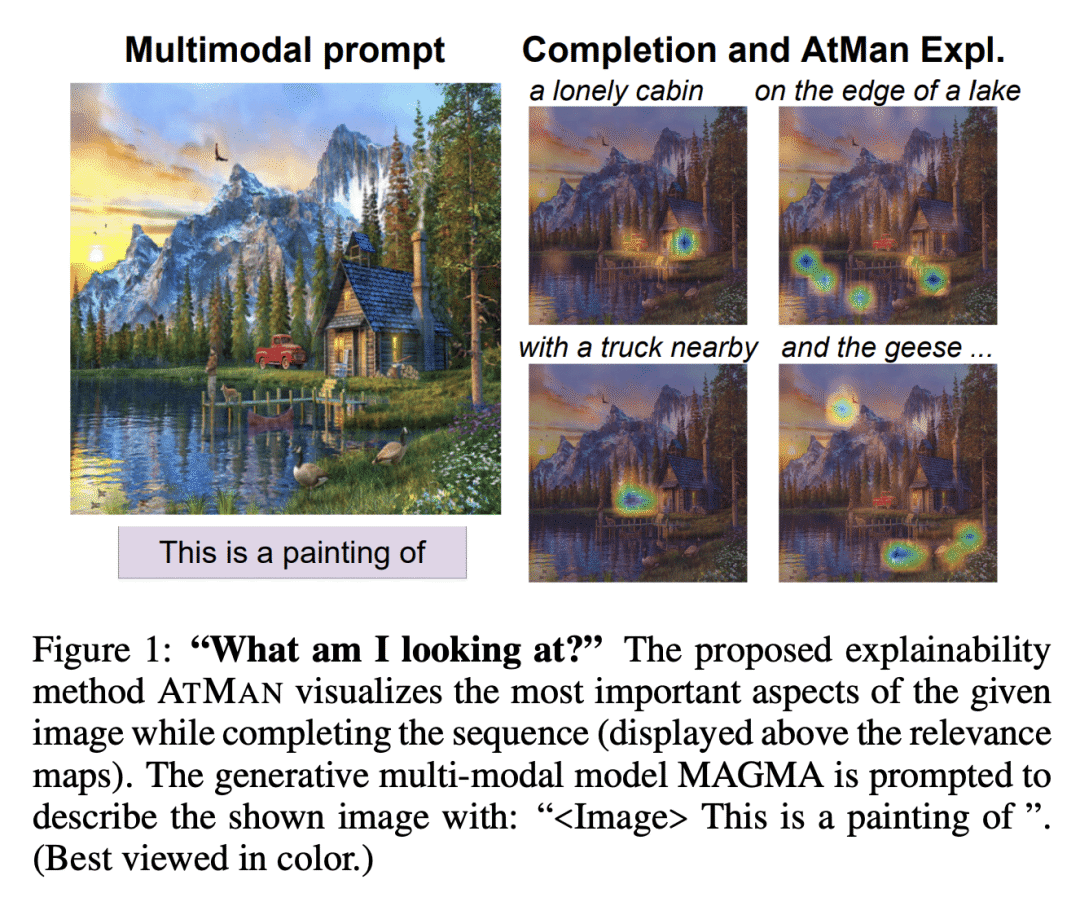

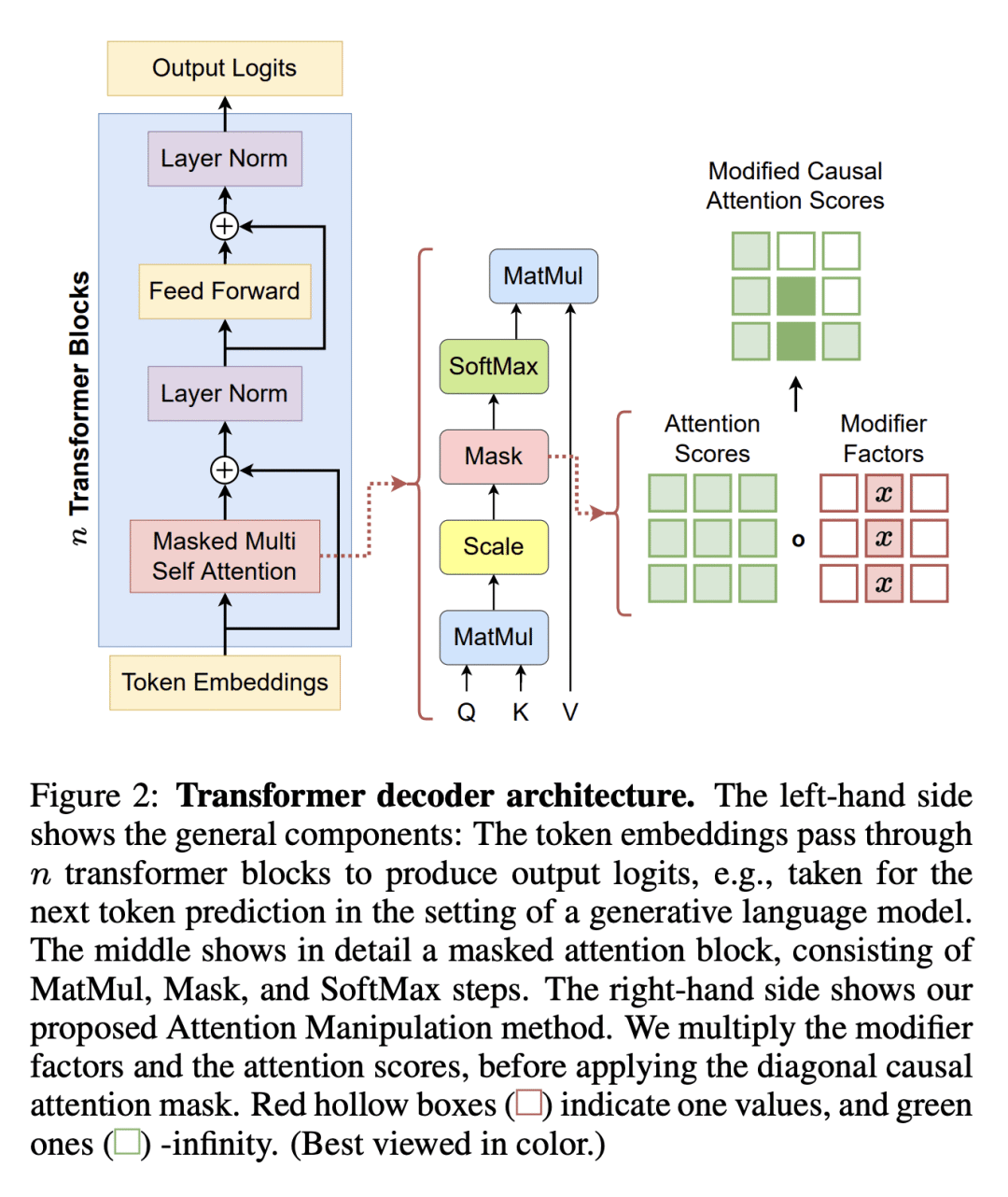

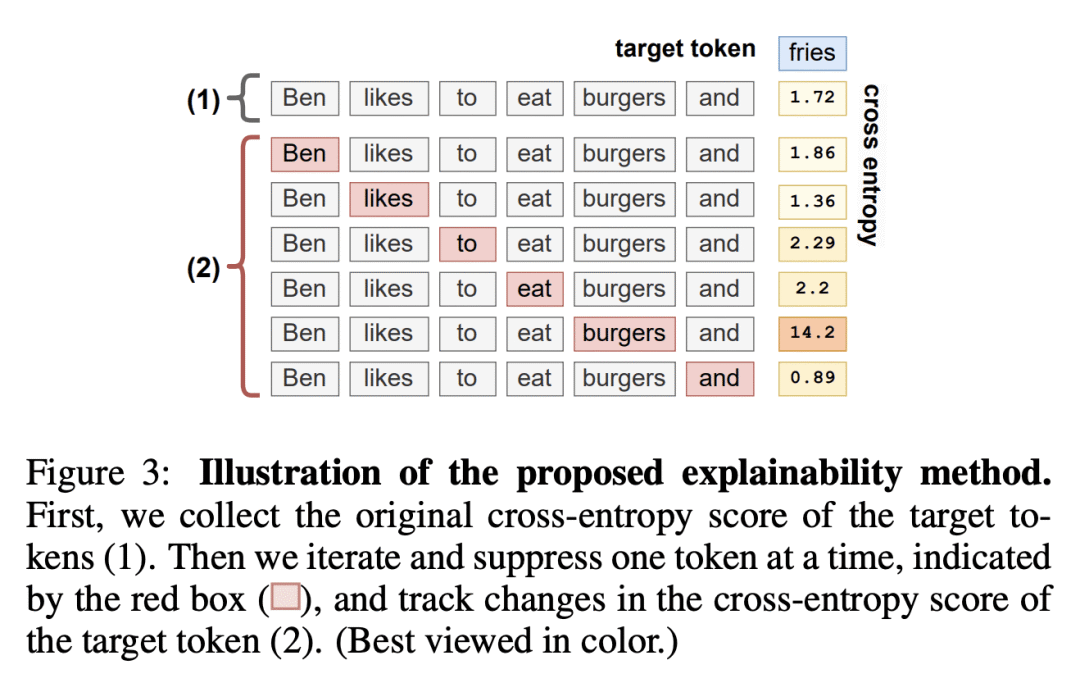

提出名为 AtMan 的生成式 Transformer 模型基于模式态无关的摄动解释方法,操纵 Transformer 注意力机制,面向输出预测生成输入的相关性映射; -

是一种节省内存的方法,使用基于嵌入空间中余弦相似性邻域的可并行化 token 搜索方法,而不是反向传播,反向传播分配的GPU内存几乎是正向传递的两倍; -

在几个文本和图像文本基准以及自回归(AR) Transformer 上对 AtMan 方法进行了详尽的多模态评估,表明其在几个指标上的表现优于当前最先进的基于梯度的方法。

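One-sentence summary:

AtMan is a memory-efficient, modality-agnostic perturbation method for explaining generative Transformer models that uses a token-based search to produce relevance maps for the input with respect to the output prediction, outperforms current state-of-the-art gradient-based methods on several metrics, and is suitable for large-model inference deployments.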
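To make the idea of a perturbation-based relevance map concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the toy single-head model (`toy_forward`), the suppression rule (adding `log(1 - factor)` to one token's pre-softmax scores), and the `factor` parameter are all assumptions chosen for illustration. What it shows is the general pattern: suppress attention to one input token at a time, rerun the forward pass, and record how much the loss on the target token changes. No gradients are computed.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def toy_forward(emb, w_out, suppress=None, factor=0.9):
    """Hypothetical toy model: one causal self-attention head plus a linear readout.

    If `suppress` is a token index, attention paid to that token is scaled down
    by (1 - factor). This rule is an illustrative assumption, not the paper's.
    Requires 0 <= factor < 1.
    """
    n, d = emb.shape
    scores = emb @ emb.T / np.sqrt(d)
    if suppress is not None:
        # Adding log(1 - factor) multiplies the unnormalized weight by (1 - factor).
        scores[:, suppress] += np.log(1.0 - factor)
    causal_mask = np.triu(np.ones((n, n), dtype=bool), k=1)
    scores = np.where(causal_mask, -np.inf, scores)
    attn = softmax(scores, axis=-1)
    hidden = attn @ emb
    # Next-token distribution read off the last position.
    return softmax(hidden[-1] @ w_out)


def relevance_map(emb, w_out, target, factor=0.9):
    """Relevance of each input token = increase in target loss when it is suppressed."""
    base_loss = -np.log(toy_forward(emb, w_out)[target])
    rel = np.empty(len(emb))
    for i in range(len(emb)):  # each pass is independent, so this loop could be batched
        probs = toy_forward(emb, w_out, suppress=i, factor=factor)
        rel[i] = -np.log(probs[target]) - base_loss
    return rel


rng = np.random.default_rng(0)
emb = rng.normal(size=(5, 8))     # 5 input tokens, embedding size 8 (made-up data)
w_out = rng.normal(size=(8, 10))  # readout to a 10-token vocabulary (made-up data)
rel = relevance_map(emb, w_out, target=3)
print(rel.round(3))
```

A positive entry means that suppressing the token made the target less likely, so the token supported the prediction; a negative entry means the opposite. Only forward passes are needed, which is where the memory saving relative to backpropagation comes from.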

Abstract:

Generative transformer models have become increasingly complex, with large numbers of parameters and the ability to process multiple input modalities. Current methods for explaining their predictions are resource-intensive. Most crucially, they require prohibitively large amounts of extra memory, since they rely on backpropagation which allocates almost twice as much GPU memory as the forward pass. This makes it difficult, if not impossible, to use them in production. We present AtMan that provides explanations of generative transformer models at almost no extra cost. Specifically, AtMan is a modality-agnostic perturbation method that manipulates the attention mechanisms of transformers to produce relevance maps for the input with respect to the output prediction. Instead of using backpropagation, AtMan applies a parallelizable token-based search method based on cosine similarity neighborhood in the embedding space. Our exhaustive experiments on text and image-text benchmarks demonstrate that AtMan outperforms current state-of-the-art gradient-based methods on several metrics while being computationally efficient. As such, AtMan is suitable for use in large model inference deployments.
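The "cosine similarity neighborhood in the embedding space" mentioned in the abstract can be illustrated with a short sketch. The function name `cosine_neighborhoods`, the `threshold` parameter and its value, and the toy embeddings are assumptions made for this example, not details taken from the paper. The sketch groups each token with every token whose embedding is at least `threshold`-similar, so that correlated tokens could be perturbed together instead of one at a time.

```python
import numpy as np


def cosine_neighborhoods(emb, threshold=0.7):
    """For each token, return the indices of tokens whose cosine similarity
    to it is at least `threshold` (the token itself is always included)."""
    norms = np.linalg.norm(emb, axis=1, keepdims=True)
    unit = emb / np.maximum(norms, 1e-12)  # guard against zero-length vectors
    sim = unit @ unit.T                    # pairwise cosine similarities
    return [np.flatnonzero(row >= threshold) for row in sim]


# Toy 2-D embeddings: tokens 0 and 1 point in nearly the same direction.
emb = np.array([
    [1.0, 0.0],
    [0.9, 0.1],
    [0.0, 1.0],
    [-1.0, 0.0],
])
neighborhoods = cosine_neighborhoods(emb, threshold=0.7)
for i, nbrs in enumerate(neighborhoods):
    print(i, nbrs.tolist())
```

Here tokens 0 and 1 end up in each other's neighborhood, while tokens 2 and 3 are grouped only with themselves. The similarity matrix is a single matrix product, so the search parallelizes naturally.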

Paper link: https://arxiv.org/abs/2301.08110
