From today's 爱可可 AI frontier picks

[LG] Regeneration Learning: A Learning Paradigm for Data Generation

X Tan, T Qin, J Bian, T Liu, Y Bengio

[Microsoft Research & University of Montreal]

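Key points:

- Proposes a new learning paradigm for data generation tasks, called "regeneration learning";
- Regeneration learning first generates an intermediate representation Y' (an abstraction/representation of Y) from X, and then learns to generate Y from Y';
- The approach extends the concept of representation learning to data generation tasks and can be regarded as a counterpart of traditional representation learning.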
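
One way to read the two-step procedure is as a pair of training objectives. This is only a sketch under assumed notation, not the paper's stated formulation: the analyzer $f$, the parameters $\theta$ and $\phi$, and the log-likelihood losses are all introduced here for illustration.

```latex
% Assumed notation: f maps Y to its abstraction Y' (handcrafted rules or a
% self-supervised model); \theta parameterizes X -> Y'; \phi parameterizes Y' -> Y.
\begin{aligned}
Y' &= f(Y) \\
\mathcal{L}_{X \to Y'}(\theta) &= -\,\mathbb{E}_{(X,Y)}\big[\log p_\theta\big(f(Y) \mid X\big)\big] \\
\mathcal{L}_{Y' \to Y}(\phi) &= -\,\mathbb{E}_{Y}\big[\log p_\phi\big(Y \mid f(Y)\big)\big] \\
\text{inference:}\quad \hat{Y}' &\sim p_\theta(\cdot \mid X), \qquad \hat{Y} \sim p_\phi(\cdot \mid \hat{Y}')
\end{aligned}
```

Under this reading, the second objective depends only on Y, which is why the Y' to Y step can be trained in a self-supervised way on unpaired target data.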

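One-sentence summary:

Proposes a new learning paradigm for data generation tasks, called "regeneration learning", which generates an intermediate representation of the target data and uses it to train the model; it can be regarded as a counterpart of traditional representation learning, offers valuable insights, and is broadly applicable to data generation tasks.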

Abstract:

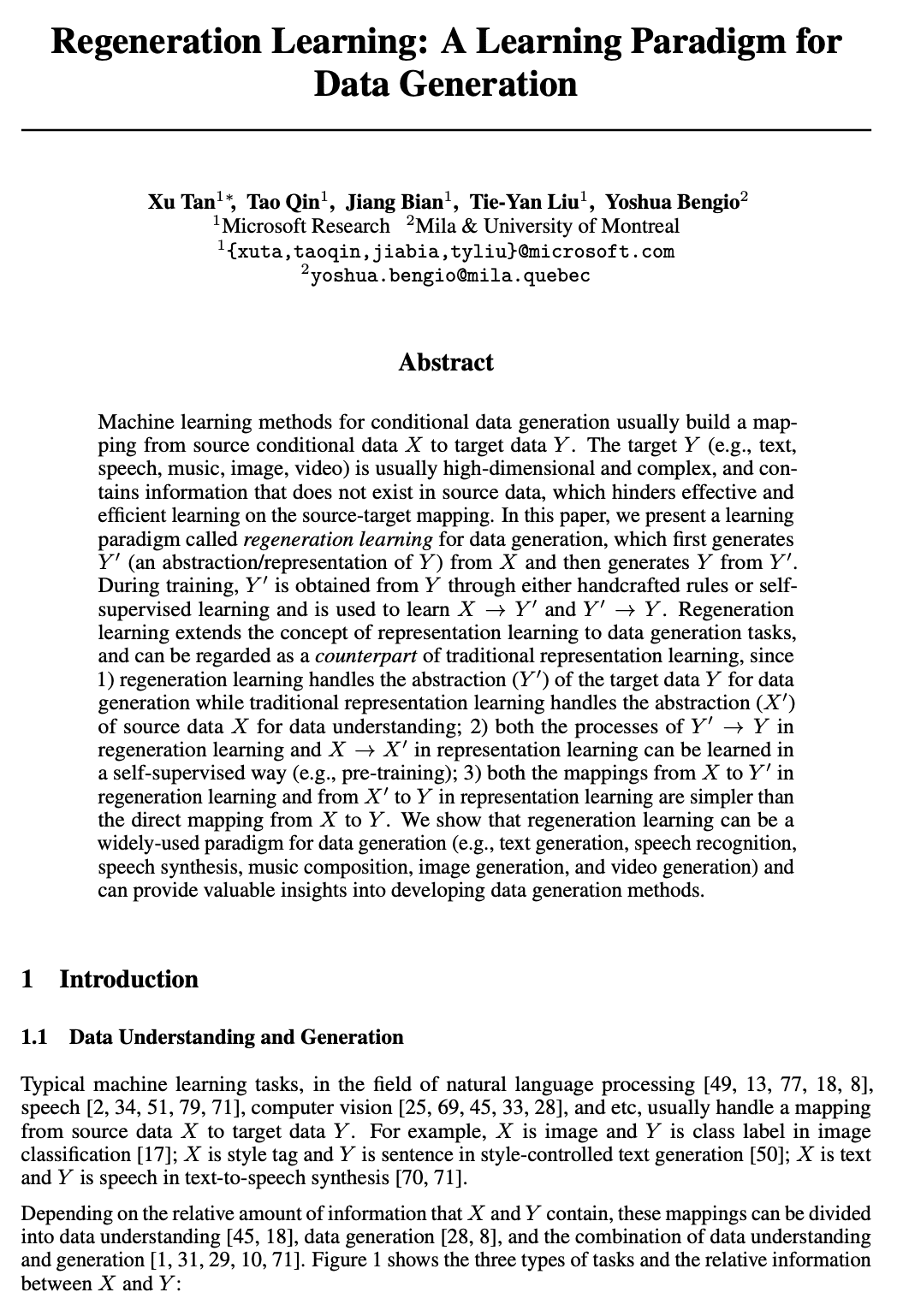

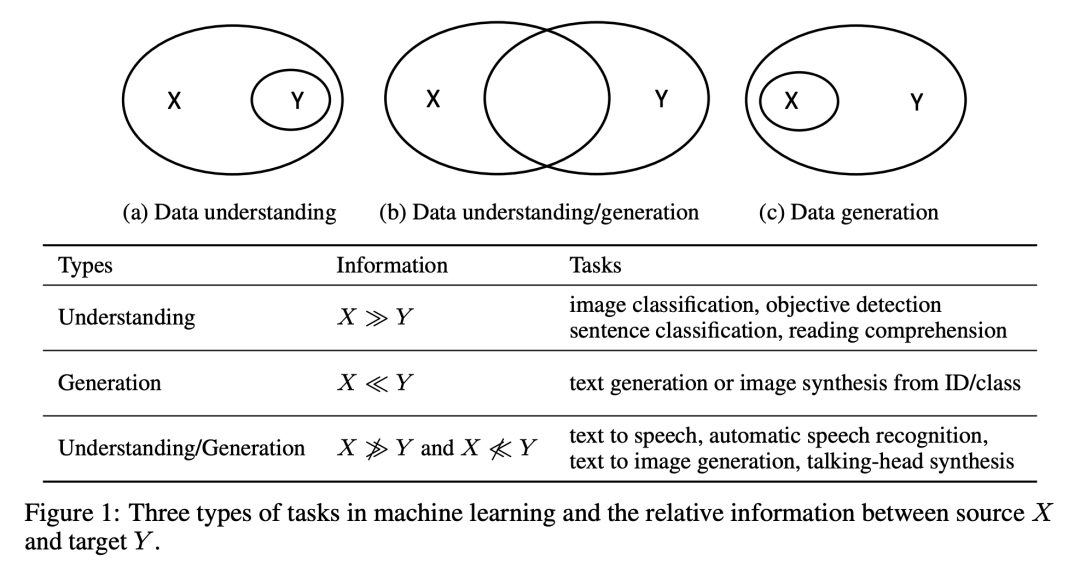

Machine learning methods for conditional data generation usually build a mapping from source conditional data X to target data Y. The target Y (e.g., text, speech, music, image, video) is usually high-dimensional and complex, and contains information that does not exist in source data, which hinders effective and efficient learning on the source-target mapping. In this paper, we present a learning paradigm called regeneration learning for data generation, which first generates Y' (an abstraction/representation of Y) from X and then generates Y from Y'. During training, Y' is obtained from Y through either handcrafted rules or self-supervised learning and is used to learn X-->Y' and Y'-->Y. Regeneration learning extends the concept of representation learning to data generation tasks, and can be regarded as a counterpart of traditional representation learning, since 1) regeneration learning handles the abstraction (Y') of the target data Y for data generation while traditional representation learning handles the abstraction (X') of source data X for data understanding; 2) both the processes of Y'-->Y in regeneration learning and X-->X' in representation learning can be learned in a self-supervised way (e.g., pre-training); 3) both the mappings from X to Y' in regeneration learning and from X' to Y in representation learning are simpler than the direct mapping from X to Y. We show that regeneration learning can be a widely-used paradigm for data generation (e.g., text generation, speech recognition, speech synthesis, music composition, image generation, and video generation) and can provide valuable insights into developing data generation methods.
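
The two-stage pipeline described in the abstract can be illustrated with a toy example. This is a minimal sketch under loud assumptions, not the authors' implementation: the data is synthetic, the abstraction Y' is a hypothetical handcrafted rule (block averaging), and both mappings are plain least-squares linear models standing in for whatever generative models a real system would use.

```python
import numpy as np


def analyze(y, factor=4):
    """Hypothetical handcrafted rule Y -> Y': average each block of `factor` values."""
    n, d = y.shape
    return y.reshape(n, d // factor, factor).mean(axis=2)


def fit_linear(inputs, targets):
    """Fit a least-squares linear map with a bias term; returns the weight matrix."""
    design = np.hstack([inputs, np.ones((inputs.shape[0], 1))])
    weights, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return weights


def apply_linear(weights, inputs):
    """Apply a map produced by fit_linear to new inputs."""
    design = np.hstack([inputs, np.ones((inputs.shape[0], 1))])
    return design @ weights


rng = np.random.default_rng(0)
n, dx, dy = 200, 3, 16

# Synthetic data (assumption): Y depends on X plus detail that X does not contain.
x = rng.normal(size=(n, dx))
y = x @ rng.normal(size=(dx, dy)) + 0.1 * rng.normal(size=(n, dy))

# Training: Y' is obtained from Y, then used to learn both mappings.
y_abs = analyze(y)                    # Y' = abstraction of Y
w_x_to_abs = fit_linear(x, y_abs)     # step 1: X -> Y' (needs paired data)
w_abs_to_y = fit_linear(y_abs, y)     # step 2: Y' -> Y (needs only Y)


def generate(x_new):
    """Inference: first predict Y' from X, then regenerate Y from the predicted Y'."""
    y_abs_hat = apply_linear(w_x_to_abs, x_new)
    return apply_linear(w_abs_to_y, y_abs_hat)


y_hat = generate(x[:5])
print(y_hat.shape)
```

Note that `w_abs_to_y` is fit from `y` alone, so in this sketch the second stage could be trained on target data that has no paired source.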

Paper link: https://arxiv.org/abs/2301.08846
