From today's 爱可可 AI frontier picks

[LG] Mathematical Capabilities of ChatGPT

S Frieder, L Pinchetti, R Griffiths, T Salvatori, T Lukasiewicz, P C Petersen, A Chevalier, J Berner

[University of Oxford & University of Cambridge & University of Vienna]


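Key points:

- Introduces a new dataset, GHOSTS, that covers graduate-level mathematics and gives a holistic overview of the mathematical capabilities of language models;
- Benchmarks ChatGPT on GHOSTS and evaluates its performance against fine-grained criteria;
- Identifies ChatGPT's failure modes and the limits of its capabilities.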

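One-sentence summary:

The paper studies ChatGPT's mathematical capabilities, introduces the new dataset GHOSTS, benchmarks ChatGPT against fine-grained criteria, and concludes that ChatGPT's mathematical abilities are significantly below those of an average mathematics graduate student.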

Abstract:

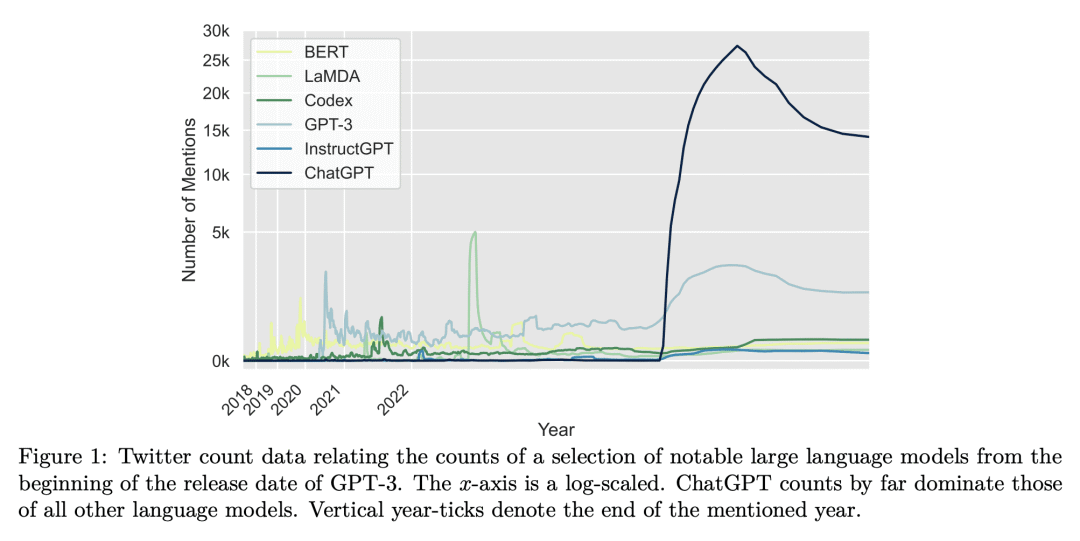

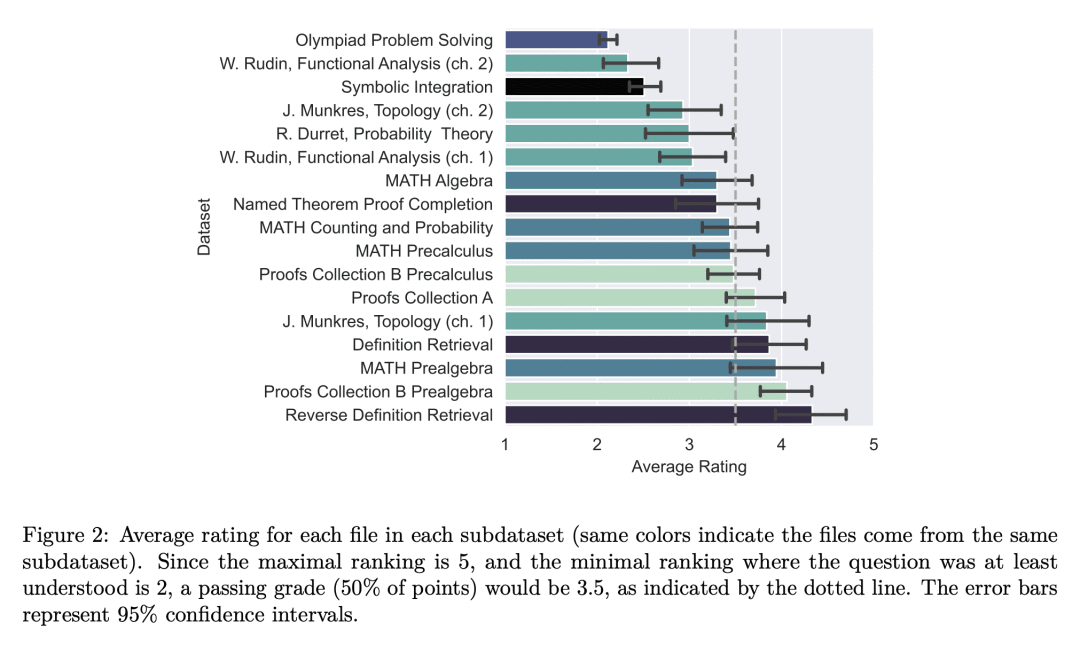

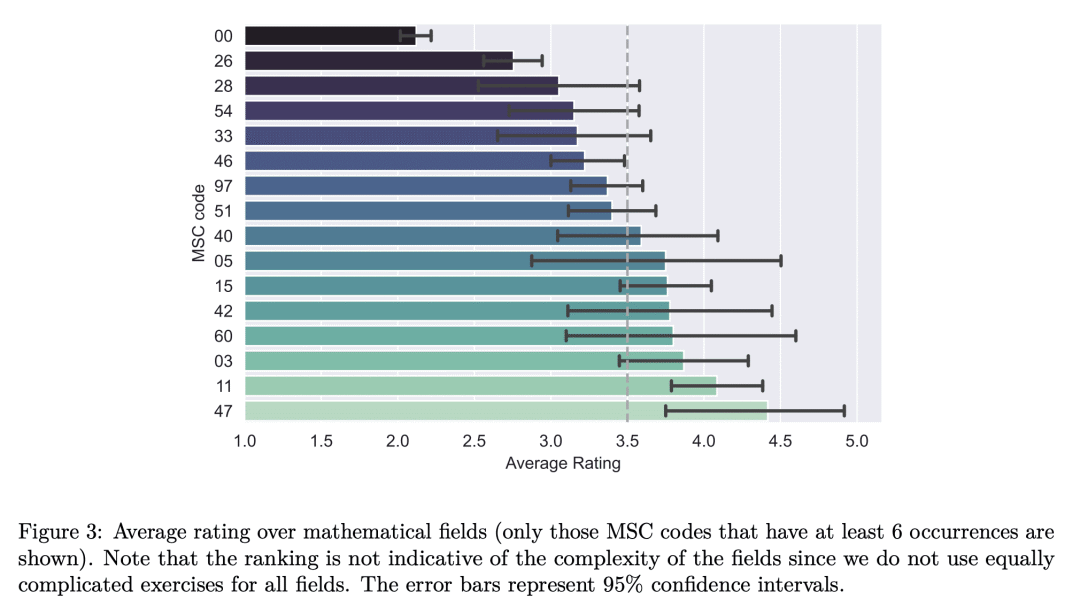

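The paper examines ChatGPT's mathematical capabilities by testing it on both publicly available and hand-crafted datasets and by comparing its performance with other models trained on a mathematical corpus, such as Minerva. It also tests whether ChatGPT can serve as a useful assistant to professional mathematicians by emulating use cases from their daily professional work (question answering, theorem searching). Formal mathematics has large databases of formal proofs (e.g., the Lean Mathematical Library), whereas the natural-language mathematics datasets currently used to benchmark language models cover only elementary mathematics. The paper addresses this gap by introducing a new dataset, GHOSTS, the first natural-language dataset made and curated by working researchers in mathematics that (1) aims to cover graduate-level mathematics and (2) provides a holistic overview of the mathematical capabilities of language models. The authors benchmark ChatGPT on GHOSTS and evaluate its performance against fine-grained criteria. Contrary to many positive reports in the media (a potential case of selection bias), ChatGPT's mathematical abilities are significantly below those of an average mathematics graduate student. The results show that ChatGPT often understands the question but fails to provide a correct solution.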

We investigate the mathematical capabilities of ChatGPT by testing it on publicly available datasets, as well as hand-crafted ones, and measuring its performance against other models trained on a mathematical corpus, such as Minerva. We also test whether ChatGPT can be a useful assistant to professional mathematicians by emulating various use cases that come up in the daily professional activities of mathematicians (question answering, theorem searching). In contrast to formal mathematics, where large databases of formal proofs are available (e.g., the Lean Mathematical Library), current datasets of natural-language mathematics, used to benchmark language models, only cover elementary mathematics. We address this issue by introducing a new dataset: GHOSTS. It is the first natural-language dataset made and curated by working researchers in mathematics that (1) aims to cover graduate-level mathematics and (2) provides a holistic overview of the mathematical capabilities of language models. We benchmark ChatGPT on GHOSTS and evaluate performance against fine-grained criteria. We make this new dataset publicly available to assist a community-driven comparison of ChatGPT with (future) large language models in terms of advanced mathematical comprehension. We conclude that contrary to many positive reports in the media (a potential case of selection bias), ChatGPT's mathematical abilities are significantly below those of an average mathematics graduate student. Our results show that ChatGPT often understands the question but fails to provide correct solutions. Hence, if your goal is to use it to pass a university exam, you would be better off copying from your average peer!

Paper link: https://arxiv.org/abs/2301.13867
