From today's 爱可可 (Aikeke) AI frontier picks

[LG] Massively Scaling Heteroscedastic Classifiers

M Collier, R Jenatton, B Mustafa, N Houlsby, J Berent, E Kokiopoulou

[Google AI]


Key points:

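- Proposes HET-XL, a heteroscedastic classifier that uses fewer parameters and, on large-scale problems, needs no temperature hyperparameter tuning;
- HET and HET-H perform consistently across three image classification benchmarks;
- Extends HET-XL to contrastive learning, improving ImageNet zero-shot classification accuracy.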
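The third point treats image-text contrastive learning as a classification problem in which each caption is its own class. The Python sketch below illustrates that view under several assumptions of ours. Only the captions in the current batch are scored, as in CLIP-style training. Gaussian noise with a per-example diagonal scale is added to the image embeddings. The loss is the negative log of the Monte Carlo-averaged softmax probability of the matching caption. The function name `het_contrastive_loss` and the `noise_scale` input are hypothetical, and this is not the paper's implementation.

```python
import numpy as np


def log_softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable log-softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))


def het_contrastive_loss(img, txt, noise_scale, temperature, num_samples, rng):
    """Image-to-text contrastive loss with Gaussian noise on image embeddings.

    img, txt: (B, D) L2-normalised embeddings; caption i is the "class" of
    image i, so each batch is a B-way classification problem.
    noise_scale: (B, D) per-example standard deviations (hypothetical input;
    a real model would predict it from the image).
    """
    eps = rng.standard_normal((num_samples,) + img.shape)  # (S, B, D)
    noisy = img[None] + noise_scale[None] * eps           # (S, B, D) noisy embeddings
    logits = noisy @ txt.T / temperature                   # (S, B, B) image-caption scores
    probs = np.exp(log_softmax(logits)).mean(axis=0)       # (B, B) MC-averaged softmax
    return -np.mean(np.log(np.diag(probs)))                # NLL of the matching captions


rng = np.random.default_rng(0)
img = rng.normal(size=(4, 16))
img /= np.linalg.norm(img, axis=1, keepdims=True)
txt = rng.normal(size=(4, 16))
txt /= np.linalg.norm(txt, axis=1, keepdims=True)
print(het_contrastive_loss(img, txt, 0.1 * np.ones((4, 16)), 0.07, 32, rng))
```

When `noise_scale` is zero, the loss reduces to the ordinary image-to-text softmax cross-entropy. That makes a convenient sanity check.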

One-sentence summary:

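HET-XL is a heteroscedastic classifier that substantially lowers deployment cost: it needs fewer parameters and no temperature hyperparameter tuning.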
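The following simplified count shows why the parameter count matters, offered as a hedged sketch. Assume a logit-space heteroscedastic head with a rank-`R` factor plus a diagonal term, both predicted linearly from `D`-dimensional pre-logits. Its extra weights then grow as `D*K*(R+1)` for `K` classes. Placing the same Gaussian on the `D`-dimensional pre-logits instead gives `D*D*(R+1)`, which does not depend on `K`. The helper names and example dimensions are hypothetical, and biases are ignored. The numbers are not meant to reproduce the paper's reported 14X figure.

```python
def het_extra_params(D: int, K: int, R: int) -> int:
    """Extra weights of a logit-space heteroscedastic head (biases ignored).

    Rank-R factor V(x): a D x (K*R) projection; diagonal d(x): a D x K projection.
    """
    return D * K * R + D * K


def prelogit_extra_params(D: int, R: int) -> int:
    """Same structure, with the Gaussian placed on the D-dim pre-logits instead."""
    return D * D * R + D * D


# Hypothetical sizes: 1024-dim pre-logits, 30k classes, rank 15.
print(het_extra_params(1024, 30_000, 15))   # 491520000
print(prelogit_extra_params(1024, 15))      # 16777216
```

In this simplified model, the ratio between the two counts is `K / D`, so the saving grows with the number of classes.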

Abstract:

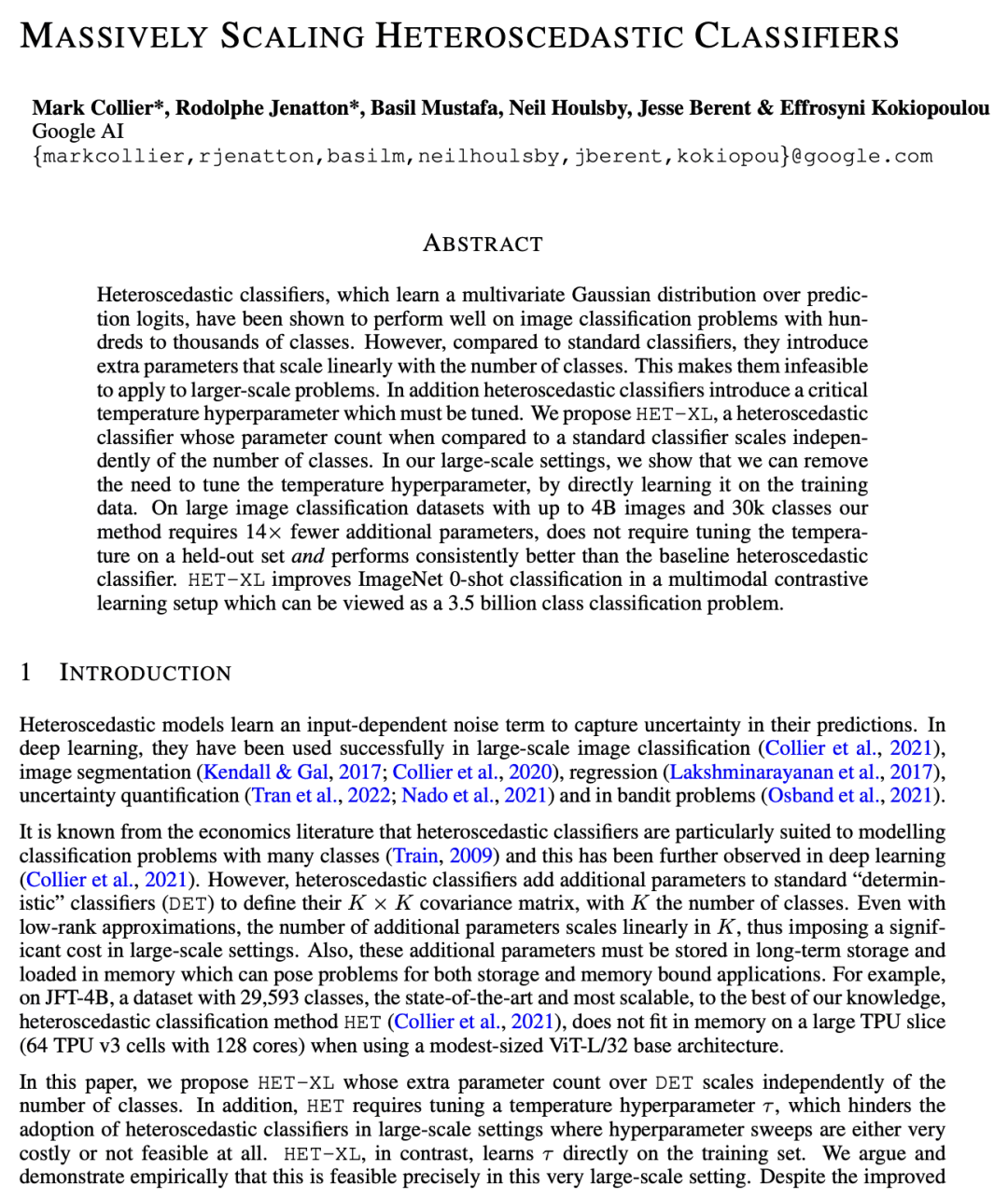

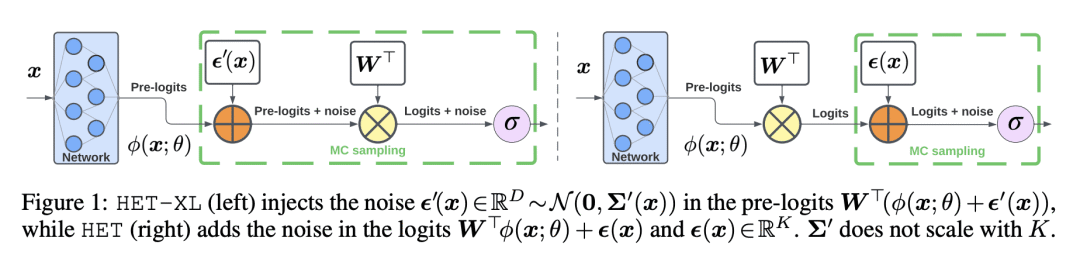


Heteroscedastic classifiers, which learn a multivariate Gaussian distribution over prediction logits, have been shown to perform well on image classification problems with hundreds to thousands of classes. However, compared to standard classifiers, they introduce extra parameters that scale linearly with the number of classes. This makes them infeasible to apply to larger-scale problems. In addition, heteroscedastic classifiers introduce a critical temperature hyperparameter which must be tuned. We propose HET-XL, a heteroscedastic classifier whose parameter count, when compared to a standard classifier, scales independently of the number of classes. In our large-scale settings, we show that we can remove the need to tune the temperature hyperparameter by directly learning it on the training data. On large image classification datasets with up to 4B images and 30k classes, our method requires 14X fewer additional parameters, does not require tuning the temperature on a held-out set and performs consistently better than the baseline heteroscedastic classifier. HET-XL improves ImageNet 0-shot classification in a multimodal contrastive learning setup which can be viewed as a 3.5 billion class classification problem.
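The sketch below gives a rough illustration of the baseline the abstract describes. It estimates a heteroscedastic classifier's predictive distribution by Monte Carlo. It assumes a low-rank-plus-diagonal Gaussian over the `K` logits, with both terms predicted linearly from the pre-logits `x`. It then averages `softmax(u / temperature)` over the sampled logits `u`. The function and weight names are our own. `W_V` and `W_d` together hold `D*K*(R+1)` weights, which is the linear growth in the number of classes that the abstract refers to. `temperature` is the hyperparameter that HET-XL learns from the training data instead of tuning it.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    """Numerically stable softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def softplus(z: np.ndarray) -> np.ndarray:
    """log(1 + exp(z)), used to keep the diagonal variances positive."""
    return np.logaddexp(0.0, z)


def het_predict(x, W_mu, W_V, W_d, rank, temperature, num_samples, rng):
    """MC estimate of p(y | x) for a logit-space heteroscedastic classifier.

    x: (D,) pre-logits; W_mu: (D, K); W_V: (D, K * rank); W_d: (D, K).
    Logits u ~ N(mu(x), V(x) V(x)^T + diag(d(x))); the prediction is the
    average over samples of softmax(u / temperature).
    """
    K = W_mu.shape[1]
    mu = x @ W_mu                                      # (K,) mean logits
    V = (x @ W_V).reshape(K, rank)                     # (K, R) low-rank factor
    d = softplus(x @ W_d)                              # (K,) diagonal variances
    eps_r = rng.standard_normal((num_samples, rank))   # (S, R) low-rank noise
    eps_k = rng.standard_normal((num_samples, K))      # (S, K) independent noise
    logits = mu + eps_r @ V.T + np.sqrt(d) * eps_k     # (S, K) sampled logits
    return softmax(logits / temperature).mean(axis=0)  # (K,) averaged probabilities


rng = np.random.default_rng(0)
D, K, R = 8, 5, 2
W_mu = rng.normal(size=(D, K))
W_V = 0.1 * rng.normal(size=(D, K * R))
W_d = 0.1 * rng.normal(size=(D, K))
x = rng.normal(size=D)
print(het_predict(x, W_mu, W_V, W_d, R, temperature=1.0, num_samples=256, rng=rng))
```

Learning the temperature on the training data could be done by making it a trainable scalar, such as `softplus(t)` for an unconstrained `t`, and updating it along with the other weights. This is our assumption about how it might be implemented, not the paper's code.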

Paper: https://arxiv.org/abs/2301.12860
