From today's AI frontier picks by 爱可可 (Aikeke)

[LG] Supervision Complexity and its Role in Knowledge Distillation

H Harutyunyan, A S Rawat, A K Menon, S Kim, S Kumar

[Google Research NYC & USC]


Key points:

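- Proposes a new theoretical framework for knowledge distillation built on supervision complexity, a measure of how well the teacher's supervision aligns with the student's neural tangent kernel;
- Highlights how the teacher's accuracy, the student's margin with respect to the teacher's predictions, and supervision complexity interact to control the student's generalization;
- Shows that online distillation is an effective way to increase the complexity of the teacher's supervision without lowering the student's margin.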
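To make "supervision complexity" concrete, here is a minimal, hypothetical sketch rather than the paper's code. It assumes the quadratic form y^T K^{-1} y, the kernel complexity that appears in NTK-style generalization bounds, as the measure (the paper's exact definition and normalization may differ). It also substitutes an RBF Gram matrix for the student's NTK. The names `rbf_kernel`, `solve`, and `supervision_complexity` are illustrative only.

```python
import math

def rbf_kernel(points, gamma=1.0):
    """RBF Gram matrix, used here as a stand-in for the student's NTK (assumption)."""
    return [[math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(p, q)))
             for q in points] for p in points]

def solve(matrix, rhs):
    """Solve matrix @ z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back-substitution on the upper-triangular system.
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * z[c] for c in range(r + 1, n))
        z[r] = (aug[r][n] - tail) / aug[r][r]
    return z

def supervision_complexity(kernel, targets):
    """Quadratic form y^T K^{-1} y: small when the targets align with the kernel."""
    z = solve(kernel, targets)
    return sum(y * zi for y, zi in zip(targets, z))

# Usage: 1-D inputs, smooth targets vs. labels that flip between neighbours.
points = [[0.0], [0.5], [1.0], [1.5], [2.0]]
K = rbf_kernel(points, gamma=1.0)
smooth = [row[2] for row in K]        # y = K e_2, so y^T K^{-1} y = K[2][2] = 1.0
noisy = [1.0, -1.0, 1.0, -1.0, 1.0]
print(supervision_complexity(K, smooth), supervision_complexity(K, noisy))
```

Targets that lie along smooth kernel directions get a low score. Labels that alternate between neighbouring points get a much higher one. This is one way to read the framework's intuition that well-aligned teacher supervision is easier for the student to fit.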

One-sentence summary:

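Proposes a new theoretical framework that uses supervision complexity to explain why knowledge distillation improves the generalization of student models.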

Abstract:

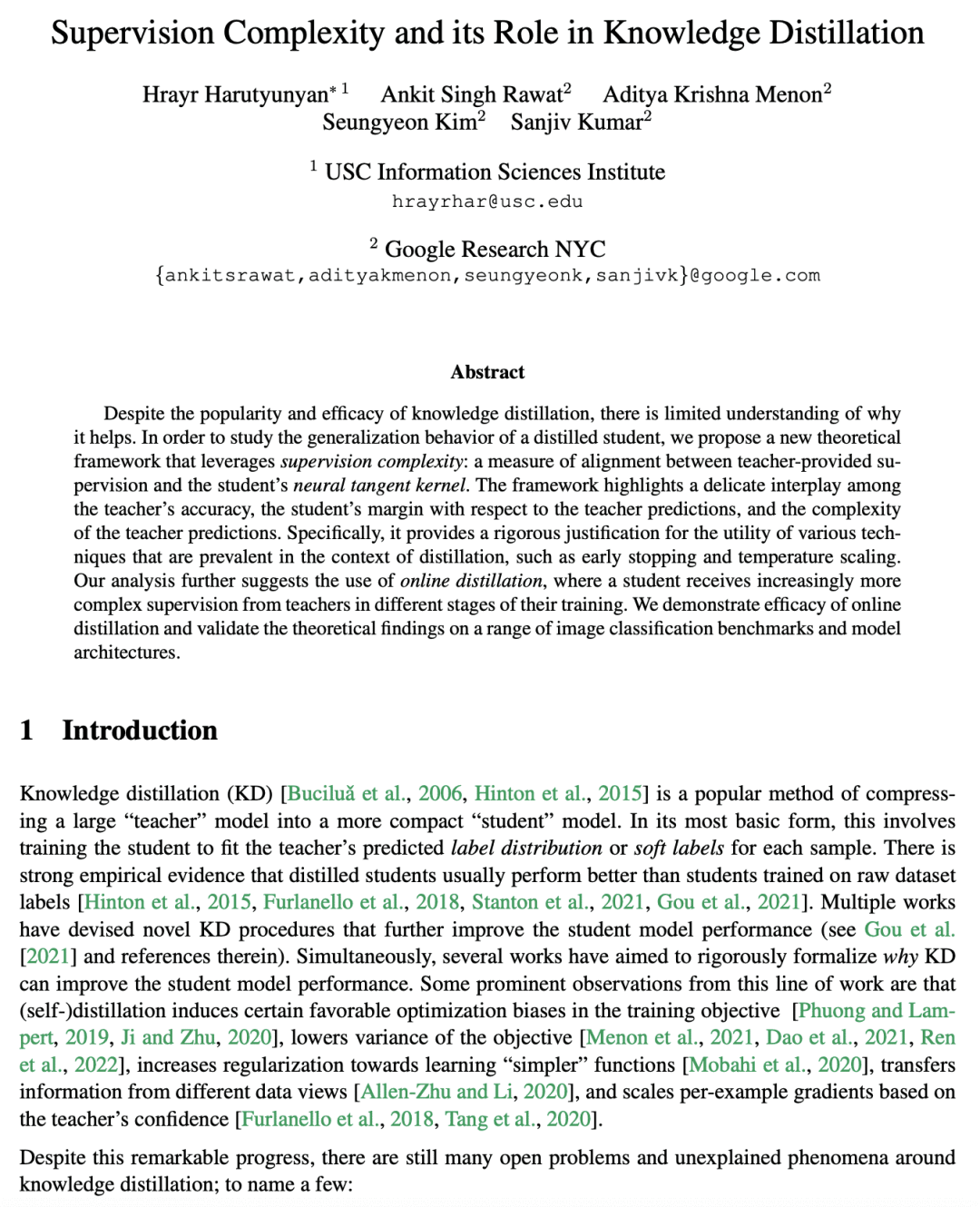

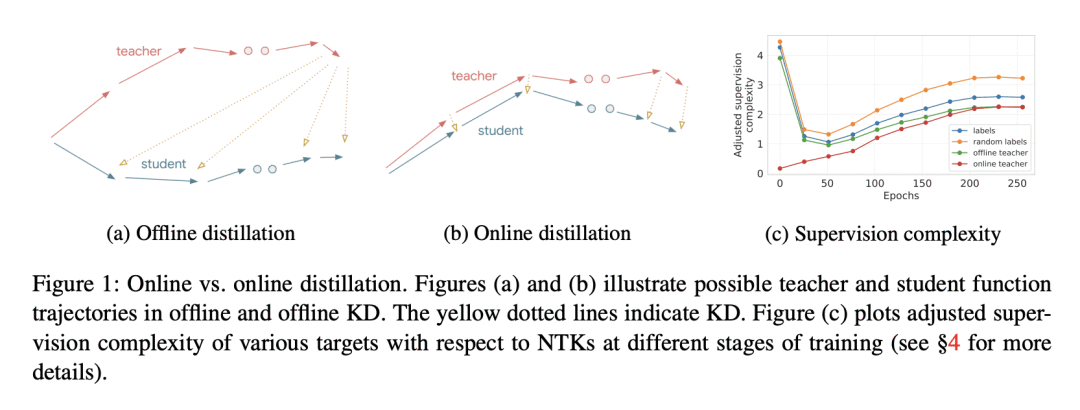

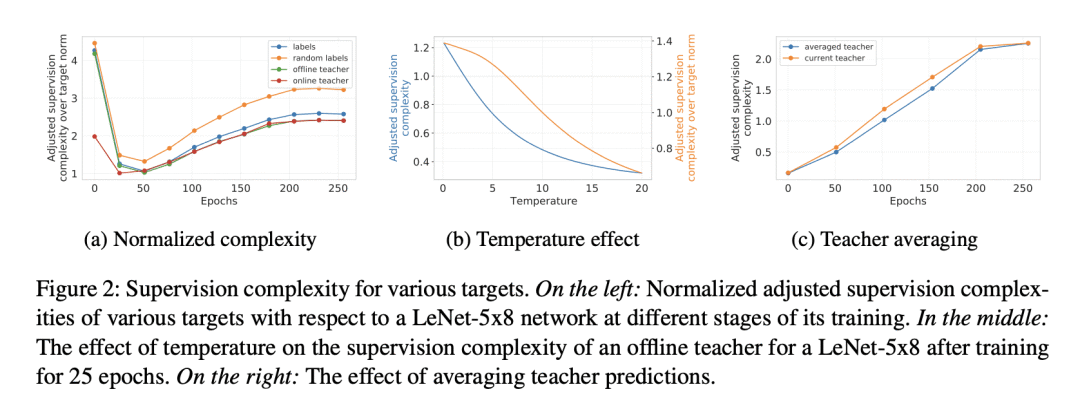


Despite the popularity and efficacy of knowledge distillation, there is limited understanding of why it helps. In order to study the generalization behavior of a distilled student, we propose a new theoretical framework that leverages supervision complexity: a measure of alignment between teacher-provided supervision and the student's neural tangent kernel. The framework highlights a delicate interplay among the teacher's accuracy, the student's margin with respect to the teacher predictions, and the complexity of the teacher predictions. Specifically, it provides a rigorous justification for the utility of various techniques that are prevalent in the context of distillation, such as early stopping and temperature scaling. Our analysis further suggests the use of online distillation, where a student receives increasingly more complex supervision from teachers in different stages of their training. We demonstrate the efficacy of online distillation and validate the theoretical findings on a range of image classification benchmarks and model architectures.
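Temperature scaling and online distillation can be sketched together. This is a hypothetical illustration under stated assumptions, not the paper's procedure. Each teacher checkpoint is reduced to a logit vector for a single example, and stages switch at fixed step boundaries. The loss is the widely used T²-scaled KL distillation objective. The helper names (`online_teacher_logits`, `distillation_loss`, etc.) are made up for this example.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax: a larger temperature yields softer targets."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for discrete distributions; assumes q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distillation_loss(teacher_logits, student_logits, temperature):
    """Common KD loss T^2 * KL(teacher_T || student_T) (assumed, not from the paper)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return temperature ** 2 * kl_divergence(p_teacher, p_student)

def online_teacher_logits(teacher_checkpoints, stage_boundaries, step):
    """Hypothetical schedule: pick the teacher checkpoint for the current stage.

    teacher_checkpoints[k] comes from a more-trained teacher as k grows, so later
    stages supervise the student with sharper, more complex targets.
    """
    stage = sum(1 for boundary in stage_boundaries if step >= boundary)
    return teacher_checkpoints[min(stage, len(teacher_checkpoints) - 1)]

# Usage: three checkpoints from early to late in the teacher's training.
checkpoints = [[0.5, 0.2, 0.0], [2.0, 0.5, 0.0], [4.0, 1.0, 0.0]]
student = [1.0, 0.5, 0.0]
for step in (50, 150, 250):
    teacher = online_teacher_logits(checkpoints, stage_boundaries=[100, 200], step=step)
    print(step, round(distillation_loss(teacher, student, temperature=2.0), 4))
```

Raising the temperature flattens the teacher's distribution. Switching to later checkpoints over time exposes the student to progressively sharper targets. In this sketch, those are the two levers the abstract refers to as temperature scaling and online distillation.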

Paper link: https://arxiv.org/abs/2301.12245

