From today's 爱可可 AI frontier picks

[CV] CHiLS: Zero-Shot Image Classification with Hierarchical Label Sets

Z Novack, S Garg, J McAuley, Z C. Lipton

[UC San Diego & CMU]

要点:

-

提出 CHiLS,一种改善类结构定义不明确和/或过于粗放的零样本 CLIP 性能的新方法; -

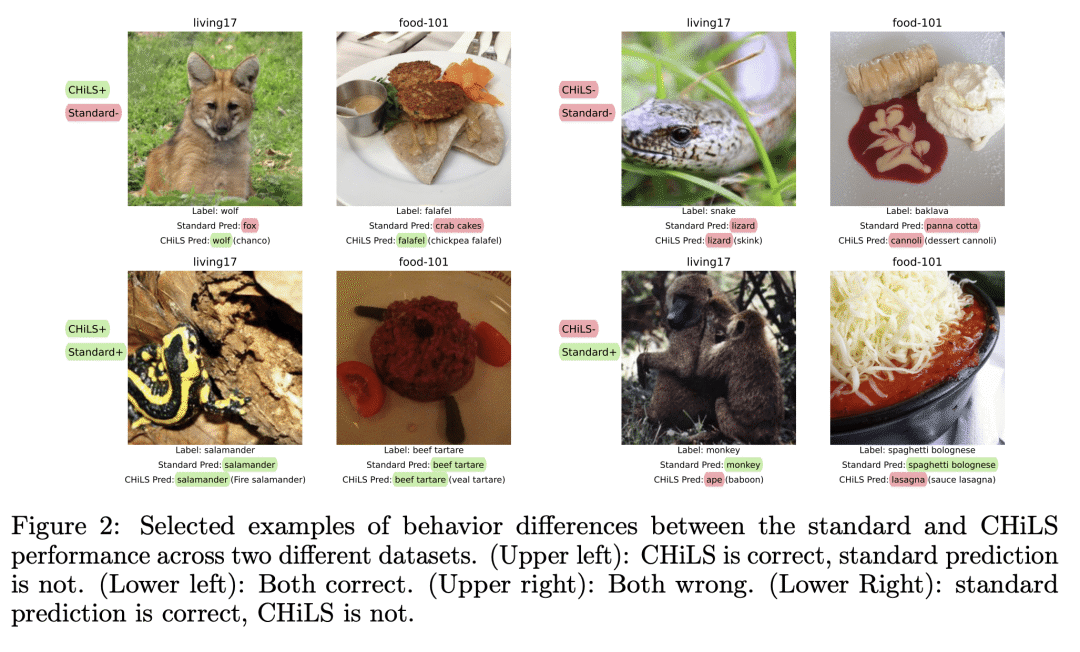

CHiLS 的表现总是很好,甚至优于标准的零样本方法,即使只有合成的层次结构; -

在有真实层次的情况下,CHiLS 实现了高达 30% 的精度提升。

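One-sentence summary:

CHiLS is a new method for zero-shot image classification with hierarchical label sets; it improves the performance of existing models and requires no additional training or fine-tuning.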

Abstract:

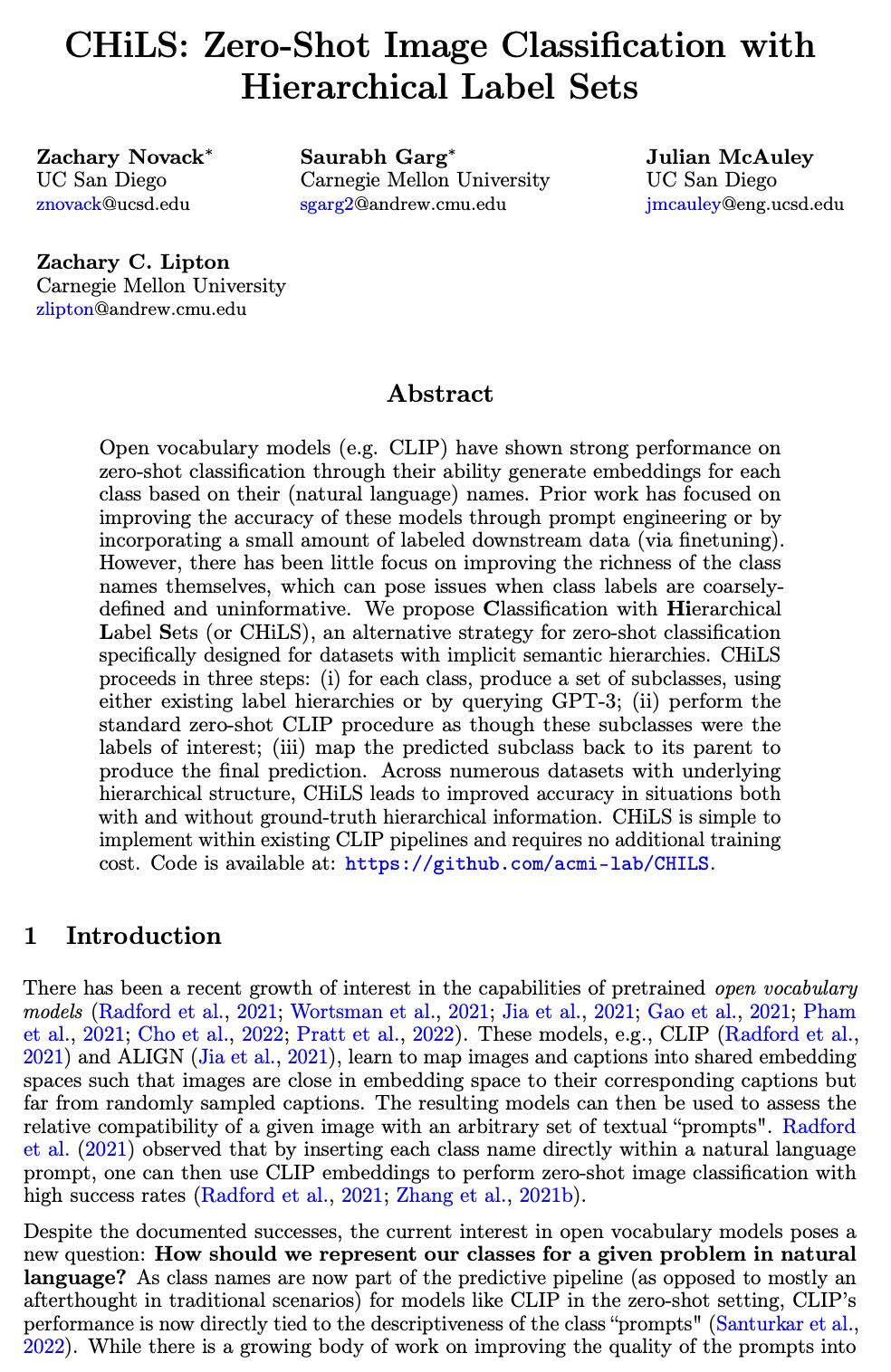

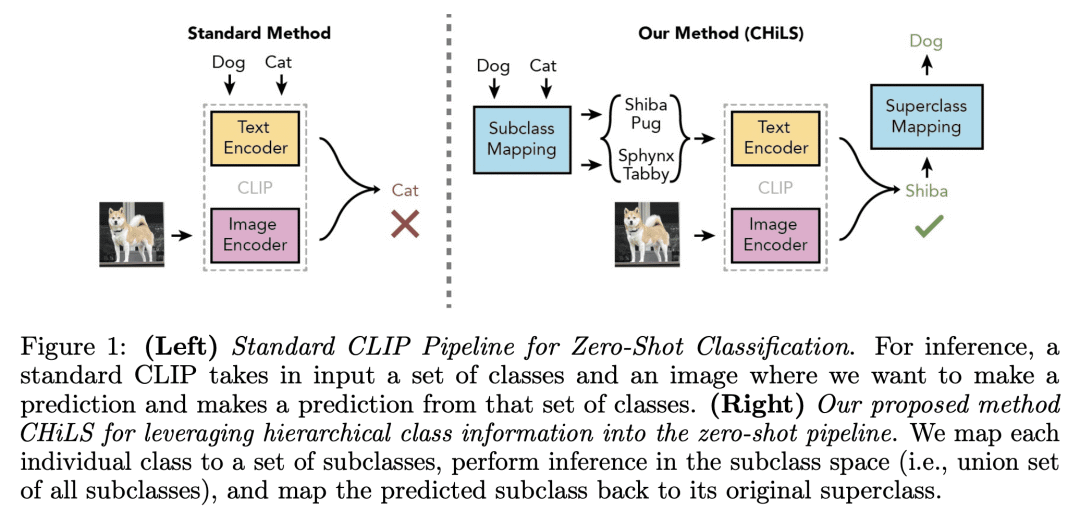

Open vocabulary models (e.g. CLIP) have shown strong performance on zero-shot classification through their ability to generate embeddings for each class based on its (natural language) name. Prior work has focused on improving the accuracy of these models through prompt engineering or by incorporating a small amount of labeled downstream data (via finetuning). However, there has been little focus on improving the richness of the class names themselves, which can pose issues when class labels are coarsely defined and uninformative. We propose Classification with Hierarchical Label Sets (or CHiLS), an alternative strategy for zero-shot classification specifically designed for datasets with implicit semantic hierarchies. CHiLS proceeds in three steps: (i) for each class, produce a set of subclasses, either from an existing label hierarchy or by querying GPT-3; (ii) perform the standard zero-shot CLIP procedure as though these subclasses were the labels of interest; (iii) map the predicted subclass back to its parent to produce the final prediction. Across numerous datasets with underlying hierarchical structure, CHiLS leads to improved accuracy in situations both with and without ground-truth hierarchical information. CHiLS is simple to implement within existing CLIP pipelines and requires no additional training cost.
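The three steps in the abstract can be illustrated with a minimal Python sketch. It follows only the procedure as stated in the abstract and is not the authors' implementation. The function name `chils_predict`, the toy 3-dimensional embeddings, and the `dog`/`bird` hierarchy are all hypothetical stand-ins: in a real pipeline the vectors would come from CLIP's image and text encoders, and the subclass lists from an existing label hierarchy or from GPT-3.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def chils_predict(image_emb, hierarchy, embed_text):
    """Return (parent_class, subclass) for one image embedding.

    hierarchy:  dict mapping each parent class to its subclass names
                (step i, assumed to be given already).
    embed_text: callable mapping a label name to a vector; a hypothetical
                stand-in for a CLIP text encoder.
    """
    best_parent, best_sub, best_score = None, None, -math.inf
    # Step (ii): score every subclass as though it were a label of interest.
    for parent, subclasses in hierarchy.items():
        for sub in subclasses:
            score = cosine(image_emb, embed_text(sub))
            if score > best_score:
                best_parent, best_sub, best_score = parent, sub, score
    # Step (iii): report the parent of the best-scoring subclass.
    return best_parent, best_sub


# Toy text embeddings (made up for illustration, not real CLIP outputs).
TOY_TEXT_EMB = {
    "poodle": [1.0, 0.1, 0.0],
    "beagle": [0.9, 0.3, 0.0],
    "sparrow": [0.0, 1.0, 0.2],
    "eagle": [0.1, 0.9, 0.4],
}
hierarchy = {"dog": ["poodle", "beagle"], "bird": ["sparrow", "eagle"]}

image_emb = [0.05, 0.95, 0.35]  # pretend image embedding of an eagle photo
print(*chils_predict(image_emb, hierarchy, TOY_TEXT_EMB.__getitem__))  # bird eagle
```

The coarse labels `dog` and `bird` are never embedded in this sketch; the prediction comes entirely from the finer subclass names, which is the intuition the abstract gives for why richer label sets can help.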

Paper link: https://arxiv.org/abs/2302.02551
