作者:K M Choromanski, S Li, V Likhosherstov, K A Dubey, S Luo, D He, Y Yang, T Sarlos, T Weingarten, A Weller [Google Research & CMU & University of Cambridge & Peking University] (2023)

要点:

-

提出 FourierLearner-Transformers(FLT),一类新的线性 Transformer,包含了一系列的相对位置编码(RPE)机制; -

最优 RPE 机制是通过在 FLT 中学习其频谱表示而隐式构建的,保持了实际的内存使用,并允许使用结构性归纳偏差技术; -

在各种任务和数据模态上对 FLT 进行了广泛的经验评估,包括语言建模、图像分类和 3D 分子特性预测。 -

与其他线性 Transformer 相比,FLT 提供了准确的结果,其中局部 RPE 变体在语言建模任务中取得了最佳性能。

总结:

提出 FLT,一类新的基于 RPE 增强的线性注意力的 Transformer,与其他线性 Transformer 相比,具有更好的准确性和多功能性,并且是第一个为 3D 数据提供这种能力的 Transformer。

https://arxiv.org/abs/2302.01925

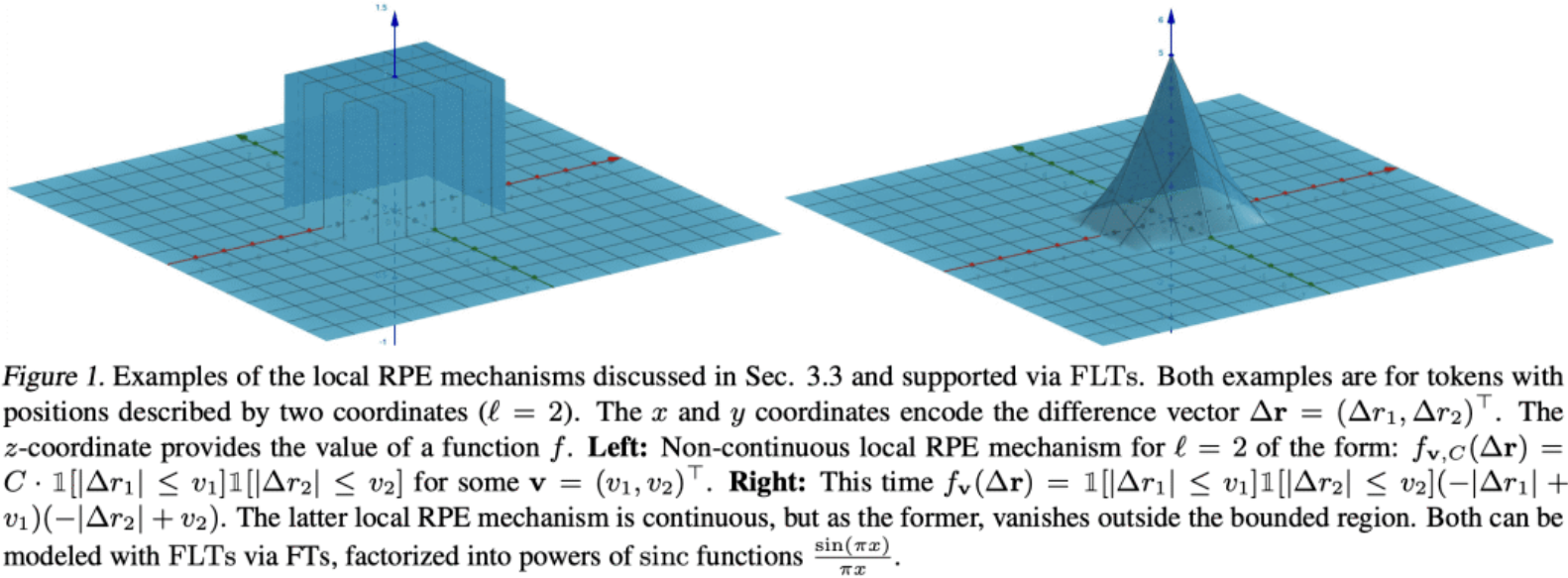

We propose a new class of linear Transformers called FourierLearner-Transformers (FLTs), which incorporate a wide range of relative positional encoding mechanisms (RPEs). These include regular RPE techniques applied for nongeometric data, as well as novel RPEs operating on the sequences of tokens embedded in higher-dimensional Euclidean spaces (e.g. point clouds). FLTs construct the optimal RPE mechanism implicitly by learning its spectral representation. As opposed to other architectures combining efficient low-rank linear attention with RPEs, FLTs remain practical in terms of their memory usage and do not require additional assumptions about the structure of the RPE-mask. FLTs allow also for applying certain structural inductive bias techniques to specify masking strategies, e.g. they provide a way to learn the so-called local RPEs introduced in this paper and providing accuracy gains as compared with several other linear Transformers for language modeling. We also thoroughly tested FLTs on other data modalities and tasks, such as: image classification and 3D molecular modeling. For 3D-data FLTs are, to the best of our knowledge, the first Transformers architectures providing RPE-enhanced linear attention.

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢