From today's 爱可可 AI frontier recommendations

[LG] Leveraging Domain Relations for Domain Generalization

H Yao, X Yang, X Pan, S Liu

[Stanford University & Shanghai Jiao Tong University & Quebec Artificial Intelligence Institute & University of Washington]

Domain generalization based on domain relations

要点:

-

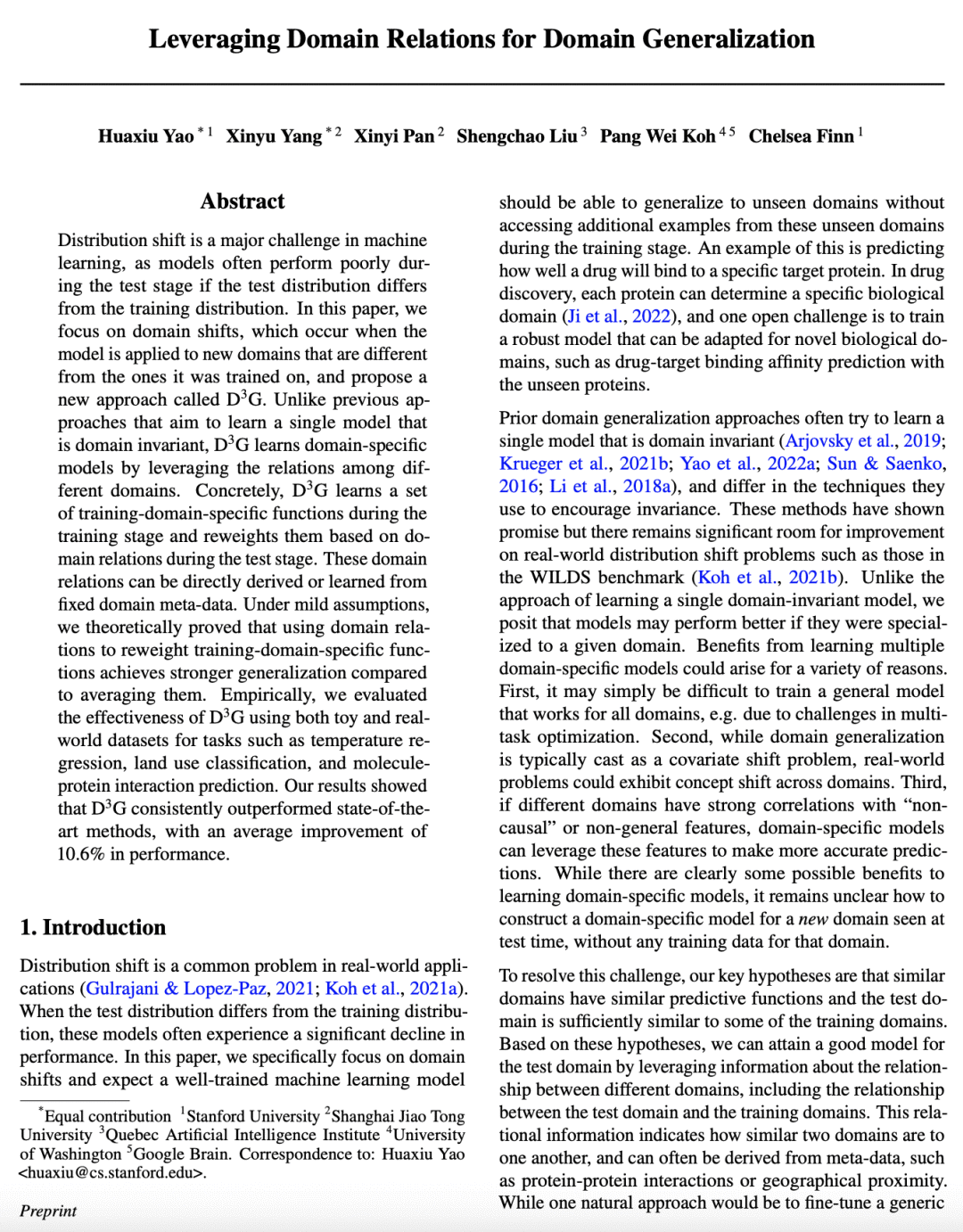

提出一种新方法D³G,用于解决机器学习中的域漂移问题; -

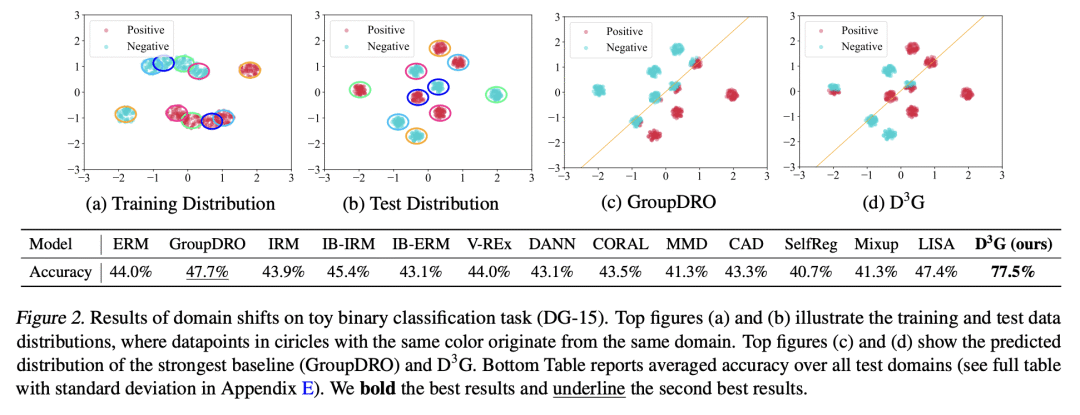

D³G 在训练阶段学习一组不同的、针对训练域的函数,并在测试阶段根据域关系对其进行重加权; -

理论分析表明,与平均相比,用域关系对训练域特定函数进行重加权会带来更好的泛化效果。 -

对各种数据集的实证评估显示,与最先进的方法相比,有一致的改进,平均改进率为10.6%。

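One-sentence summary:

D³G makes machine learning models more robust to domain shift by reweighting domain-specific functions at test time according to domain relations.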
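The test-time reweighting idea can be illustrated with a minimal sketch. This is not the paper's implementation: the helper names `relation_weights` and `reweighted_predict`, the use of clipped cosine similarity over metadata vectors as the "domain relation", and the toy linear functions are all assumptions made for illustration.

```python
import numpy as np


def relation_weights(train_meta, test_meta):
    """Hypothetical domain relation: cosine similarity between each training
    domain's metadata vector and the test domain's, clipped at zero and
    normalized to sum to 1. The paper may define relations differently."""
    train_meta = np.asarray(train_meta, dtype=float)
    test_meta = np.asarray(test_meta, dtype=float)
    norms = np.linalg.norm(train_meta, axis=1) * np.linalg.norm(test_meta)
    sims = np.clip(train_meta @ test_meta / (norms + 1e-12), 0.0, None)
    total = sims.sum()
    if total == 0.0:
        # No related training domain: fall back to plain averaging.
        return np.full(len(sims), 1.0 / len(sims))
    return sims / total


def reweighted_predict(domain_fns, weights, x):
    """Combine the per-domain predictions with the given relation weights."""
    preds = np.stack([f(x) for f in domain_fns])  # (n_domains, n_samples)
    return weights @ preds


# Toy setup (assumed): three training domains, each with its own function.
domain_fns = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0)]
train_meta = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
test_meta = [1.0, 0.0]

weights = relation_weights(train_meta, test_meta)
x = np.array([1.0, 2.0])
print("relation weights:", weights)
print("reweighted:", reweighted_predict(domain_fns, weights, x))
print("averaged:  ", np.mean([f(x) for f in domain_fns], axis=0))
```

In this toy setup the second training domain is unrelated to the test domain and receives zero weight, whereas plain averaging would give it the same say as the others.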

摘要:

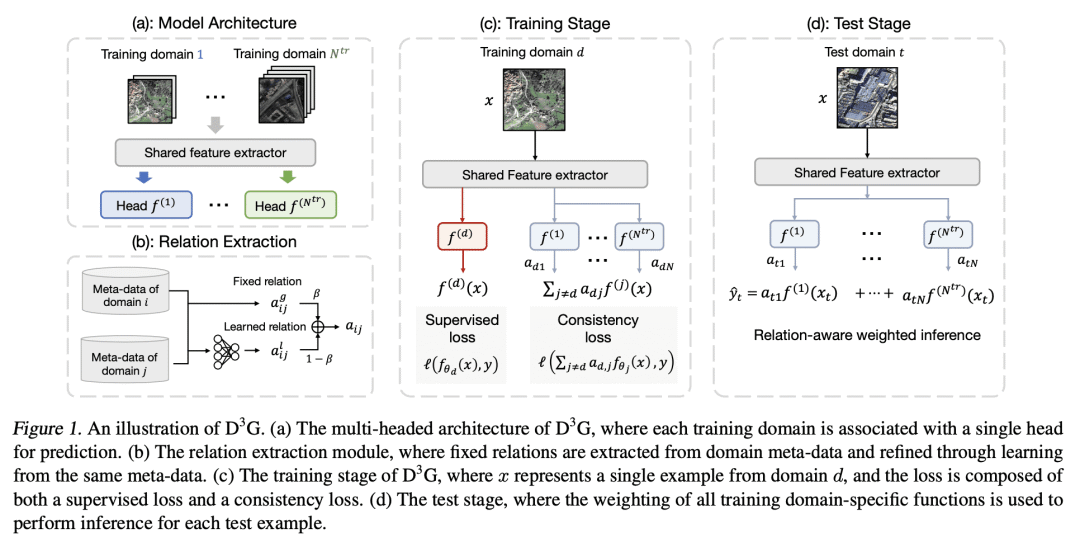

分布漂移是机器学习的一个主要挑战,如果测试分布与训练分布不同,模型在测试阶段往往表现不佳。本文专注于域漂移,即当模型被应用于与它所训练的域不同的新域时发生的漂移,并提出了一种称为 D³G 的新方法。与之前旨在学习一个域不变的单个模型的方法不同,D³G 通过利用不同域之间的关系学习特定域的模型。D³G 在训练阶段学习一组训练域特定函数,并在测试阶段基于域关系对其进行重加权。这些域关系可以直接派生或从固定的域元数据中学习。在温和假设条件下,本文从理论上证明了用域关系来重加权训练域特定函数,与平均化相比,能达到更强的泛化效果。在经验上,本文用玩具和真实世界数据集对 D³G 的有效性进行了评估,这些任务包括温度回归、土地利用分类和分子-蛋白质交互预测。结果表明,D³G 总是优于最先进的方法,其性能平均提高了10.6%。

Distribution shift is a major challenge in machine learning, as models often perform poorly during the test stage if the test distribution differs from the training distribution. In this paper, we focus on domain shifts, which occur when the model is applied to new domains that are different from the ones it was trained on, and propose a new approach called D^3G. Unlike previous approaches that aim to learn a single model that is domain invariant, D^3G learns domain-specific models by leveraging the relations among different domains. Concretely, D^3G learns a set of training-domain-specific functions during the training stage and reweights them based on domain relations during the test stage. These domain relations can be directly derived or learned from fixed domain meta-data. Under mild assumptions, we theoretically proved that using domain relations to reweight training-domain-specific functions achieves stronger generalization compared to averaging them. Empirically, we evaluated the effectiveness of D^3G using both toy and real-world datasets for tasks such as temperature regression, land use classification, and molecule-protein interaction prediction. Our results showed that D^3G consistently outperformed state-of-the-art methods, with an average improvement of 10.6% in performance.
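The contrast between reweighting and averaging can be written compactly. The notation here is an assumption for illustration and is not taken from the paper: $f_i$ is the function learned for training domain $i$, $N$ is the number of training domains, and $a_{it} \ge 0$ is some measure of the relation between training domain $i$ and test domain $t$.

$$
\hat{y}_t(x) = \sum_{i=1}^{N} \frac{a_{it}}{\sum_{j=1}^{N} a_{jt}} \, f_i(x),
\qquad
\hat{y}_{\text{avg}}(x) = \frac{1}{N} \sum_{i=1}^{N} f_i(x).
$$

Under this notation, averaging is the special case in which every $a_{it}$ is equal, so the test domain's relations to the training domains are ignored.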

Paper link: https://arxiv.org/abs/2302.02609
