From today's 爱可可 AI frontier picks

[LG] Hard Prompts Made Easy: Gradient-Based Discrete Optimization for Prompt Tuning and Discovery

Y Wen, N Jain, J Kirchenbauer, M Goldblum, J Geiping...

[University of Maryland & New York University]


要点:

-

作者提出一种通过高效的基于梯度的优化学习硬文本提示的简单方案,该方案改编自梯度重投影方案和针对量化网络的大规模离散优化文献; -

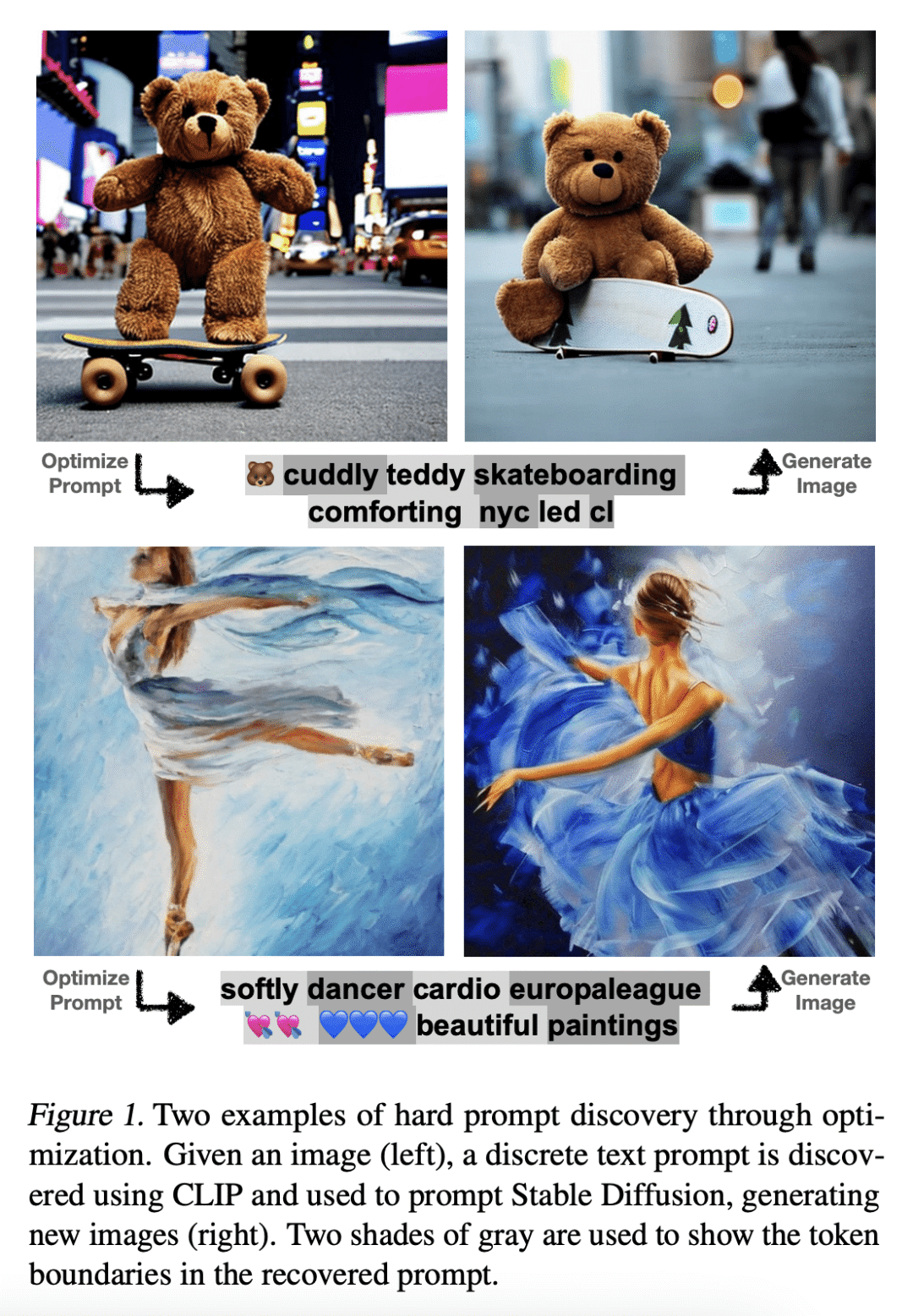

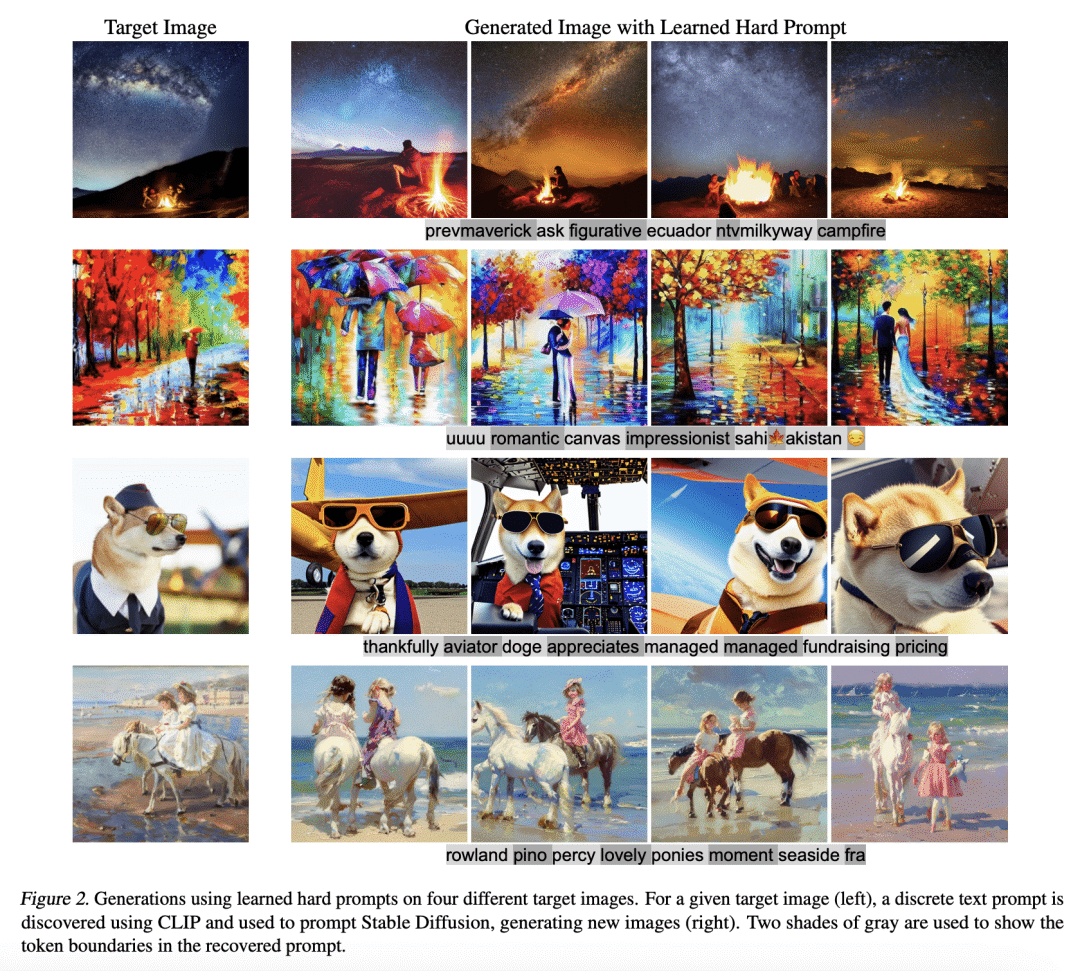

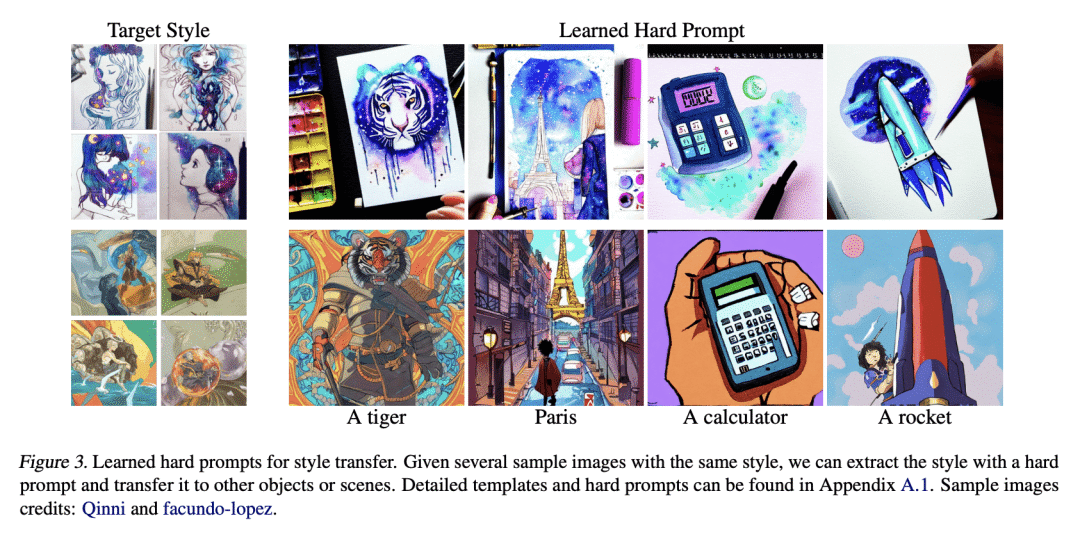

所提出的方法在优化硬提示的过程中使用连续的"软"提示作为中间变量,从而实现了鲁棒的优化并促进了提示的探索和发现; -

该方法被用于文本到图像和文本到文本的应用,学到的硬提示在图像生成和语言分类任务中都表现良好。
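
The idea in the key points (keep a continuous "soft" prompt as an intermediate variable, project it onto real tokens, and update it with the gradient measured at the projected hard prompt) can be illustrated with a minimal pure-Python sketch. This is not the authors' implementation: the four-token vocabulary, the toy loss (squared distance between the mean prompt embedding and a target vector), and all function names are hypothetical and chosen only so the example is self-contained. A real setup would take the loss from a model and would likely use an autodiff library.

```python
import random

def nearest_token(vec, vocab):
    """Index of the vocabulary embedding closest to vec (squared Euclidean)."""
    return min(range(len(vocab)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(vec, vocab[i])))

def project(soft, vocab):
    """Map each continuous prompt vector to its nearest token index."""
    return [nearest_token(v, vocab) for v in soft]

def toy_loss_and_grad(hard_vecs, target):
    """Hypothetical loss: squared distance of the mean prompt embedding to target."""
    n, d = len(hard_vecs), len(target)
    mean = [sum(v[k] for v in hard_vecs) / n for k in range(d)]
    diff = [mean[k] - target[k] for k in range(d)]
    loss = sum(x * x for x in diff)
    # d(loss)/d(v_i[k]) = 2 * diff[k] / n, identical for every position i.
    grad = [[2.0 * diff[k] / n for k in range(d)] for _ in range(n)]
    return loss, grad

def optimize_hard_prompt(vocab, target, prompt_len=2, steps=50, lr=0.5, seed=0):
    rng = random.Random(seed)
    # Initialise the soft prompt from randomly chosen token embeddings.
    soft = [list(vocab[rng.randrange(len(vocab))]) for _ in range(prompt_len)]
    best_ids, best_loss = None, float("inf")
    for _ in range(steps):
        ids = project(soft, vocab)                 # discrete (hard) prompt
        hard_vecs = [vocab[i] for i in ids]
        loss, grad = toy_loss_and_grad(hard_vecs, target)
        if loss < best_loss:                       # remember the best hard prompt
            best_ids, best_loss = ids, loss
        # Gradient is evaluated at the hard prompt but applied to the soft one.
        soft = [[s - lr * g for s, g in zip(sv, gv)]
                for sv, gv in zip(soft, grad)]
    return best_ids, best_loss

vocab = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ids, loss = optimize_hard_prompt(vocab, target=[1.0, 1.0])
print(ids, loss)
```

In this toy setting the loop settles on token 3 (embedding `[1.0, 1.0]`) at both positions, since that is the only hard prompt whose mean embedding equals the target. The point of the sketch is the update rule: the soft prompt accumulates small gradient steps that would be lost if one updated the discrete tokens directly.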

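One-sentence summary:

The paper proposes a gradient-based optimization method that generates robust and flexible hard text prompts for text-to-image and text-to-text applications.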

Abstract:

The strength of modern generative models lies in their ability to be controlled through text-based prompts. Typical "hard" prompts are made from interpretable words and tokens, and must be hand-crafted by humans. There are also "soft" prompts, which consist of continuous feature vectors. These can be discovered using powerful optimization methods, but they cannot be easily interpreted, re-used across models, or plugged into a text-based interface. We describe an approach to robustly optimize hard text prompts through efficient gradient-based optimization. Our approach automatically generates hard text-based prompts for both text-to-image and text-to-text applications. In the text-to-image setting, the method creates hard prompts for diffusion models, allowing API users to easily generate, discover, and mix and match image concepts without prior knowledge on how to prompt the model. In the text-to-text setting, we show that hard prompts can be automatically discovered that are effective in tuning LMs for classification.

Paper link: https://arxiv.org/abs/2302.03668
