From today's Aikeke (爱可可) AI Frontier Picks

[LG] Probabilistic Contrastive Learning Recovers the Correct Aleatoric Uncertainty of Ambiguous Inputs

M Kirchhof, E Kasneci, S J Oh

[University of Tübingen & TUM]


Key points:

-

将非线性 ICA 扩展到非主观非决定性生成过程,以模拟输入的模糊性; -

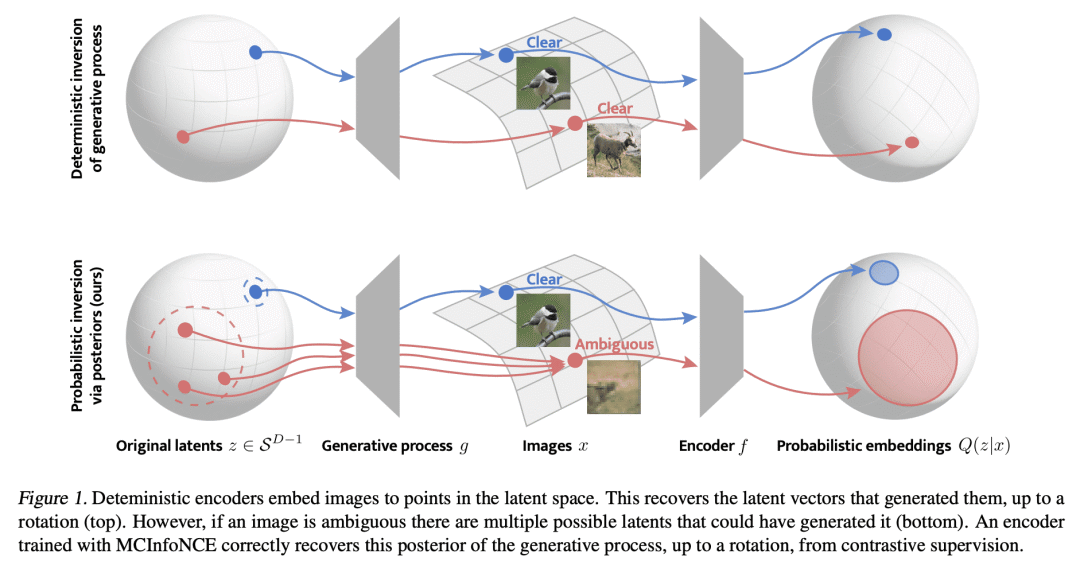

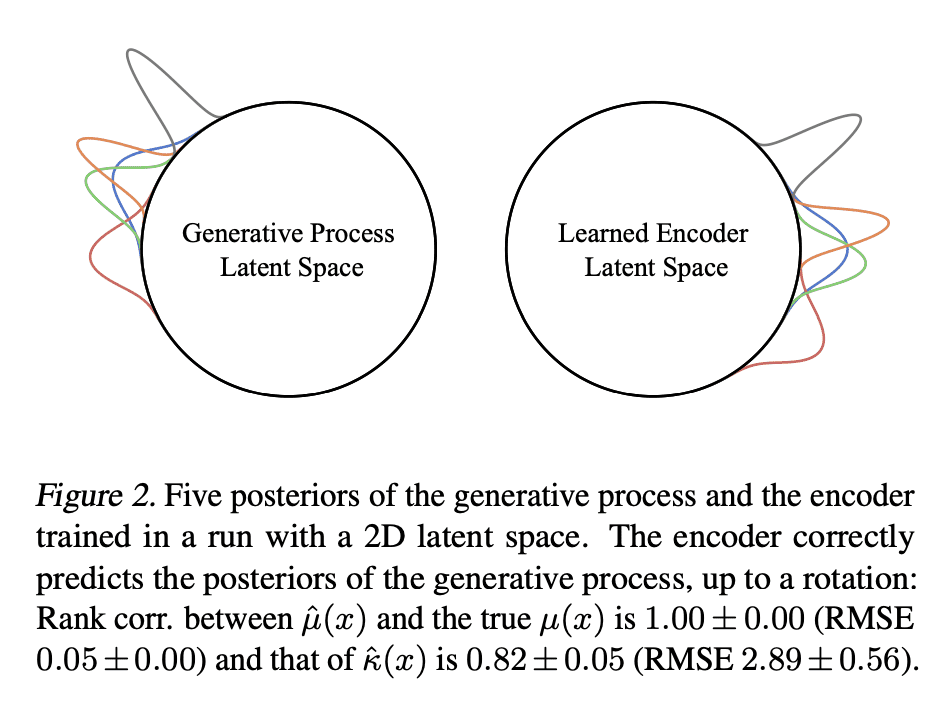

提出 MCInfoNCE,用于训练预测概率嵌入的编码器; -

理论和经验证明,预测的后验是正确的,反映了真正的不确定性。
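To make the MCInfoNCE idea in the key points concrete, here is a minimal pure-Python sketch under stated assumptions. It is not the authors' implementation. We assume, as we read the paper, that the encoder outputs a von Mises-Fisher posterior (mean direction `mu`, concentration `kappa`) for each input. We also assume the loss averages the InfoNCE probability of the positive over Monte Carlo samples before taking the log. All function names are hypothetical, and the `temperature` value is arbitrary. `sample_posterior` replaces true vMF sampling with a cruder Gaussian-perturb-and-normalize approximation.

```python
import math
import random


def normalize(v):
    """Project a vector onto the unit hypersphere."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sample_posterior(mu, kappa, rng):
    """ASSUMPTION: crude stand-in for a von Mises-Fisher sample.

    Perturbs the mean direction with isotropic Gaussian noise of std
    1/sqrt(kappa) and renormalizes; only roughly vMF-like for large kappa.
    """
    sigma = 1.0 / math.sqrt(kappa)
    return normalize([m + rng.gauss(0.0, sigma) for m in mu])


def mc_infonce(anchor, positive, negatives, temperature=0.1, n_samples=16, seed=0):
    """Hypothetical MC-InfoNCE-style loss.

    anchor, positive and each negative are (mu, kappa) pairs from an encoder.
    For every Monte Carlo draw we compute the InfoNCE probability of the
    positive among {positive} + negatives, average these probabilities over
    draws, and return the negative log of that average.
    """
    rng = random.Random(seed)
    probs = []
    for _ in range(n_samples):
        z = sample_posterior(*anchor, rng)
        z_pos = sample_posterior(*positive, rng)
        z_negs = [sample_posterior(*neg, rng) for neg in negatives]
        logits = [dot(z, z_pos) / temperature]
        logits += [dot(z, z_neg) / temperature for z_neg in z_negs]
        # Numerically stable softmax probability of the positive (index 0).
        top = max(logits)
        exps = [math.exp(logit - top) for logit in logits]
        probs.append(exps[0] / sum(exps))
    return -math.log(sum(probs) / n_samples)


# Toy usage: identical means, high vs. low predicted concentration.
mu = normalize([1.0, 0.0, 0.0])
negatives = [([0.0, 1.0, 0.0], 50.0), ([0.0, 0.0, 1.0], 50.0)]
sharp = mc_infonce((mu, 200.0), (mu, 200.0), negatives)
blurry = mc_infonce((mu, 2.0), (mu, 2.0), negatives)
print(f"sharp={sharp:.4f} blurry={blurry:.4f}")
```

In the toy usage, the same matching pair gets a lower loss when it is predicted with high `kappa` than with low `kappa`. Because the samples are drawn from the predicted distributions, the predicted uncertainty directly changes the value of the loss.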

One-sentence summary:

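Proposes MCInfoNCE, a probabilistic contrastive loss that predicts the correct posteriors of the data-generating process and provides calibrated uncertainty estimates and credible intervals in image retrieval.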
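The retrieval credible intervals in the summary can be illustrated with a hypothetical Monte Carlo construction. The paper's exact procedure may differ. The sketch makes the following assumptions:

- The query posterior is again approximated by Gaussian-perturb-and-normalize sampling around `query_mu`.
- Gallery images are represented by fixed unit embeddings.
- The "interval" is the smallest set of gallery ids that contains the nearest gallery item for at least a fraction `level` of the posterior samples.

`credible_set` and the helper functions are illustrative names only.

```python
import math
import random
from collections import Counter


def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sample_posterior(mu, kappa, rng):
    # ASSUMPTION: Gaussian-perturb-and-normalize stand-in for vMF sampling.
    sigma = 1.0 / math.sqrt(kappa)
    return normalize([m + rng.gauss(0.0, sigma) for m in mu])


def credible_set(query_mu, query_kappa, gallery, level=0.95, n_samples=1000, seed=0):
    """Hypothetical retrieval credible set.

    Draws latents from the query posterior, records which gallery item is
    nearest (highest cosine similarity) for each draw, and returns the
    smallest list of gallery ids whose combined hit frequency reaches `level`.
    """
    rng = random.Random(seed)
    hits = Counter()
    for _ in range(n_samples):
        z = sample_posterior(query_mu, query_kappa, rng)
        nearest = max(gallery, key=lambda gid: dot(z, gallery[gid]))
        hits[nearest] += 1
    chosen, mass = [], 0.0
    for gid, count in hits.most_common():
        chosen.append(gid)
        mass += count / n_samples
        if mass >= level:
            break
    return chosen


gallery = {
    "a": [1.0, 0.0, 0.0],
    "b": [0.0, 1.0, 0.0],
    "c": [0.0, 0.0, 1.0],
    "d": [0.0, -1.0, 0.0],
}
query = normalize([1.0, 0.0, 0.0])
print(credible_set(query, 500.0, gallery))  # confident query
print(credible_set(query, 1.0, gallery))    # ambiguous query
```

The design choice is that the set grows with the query's uncertainty. A confident query yields a single candidate, while an ambiguous one needs several gallery items to reach the same coverage.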

Abstract:

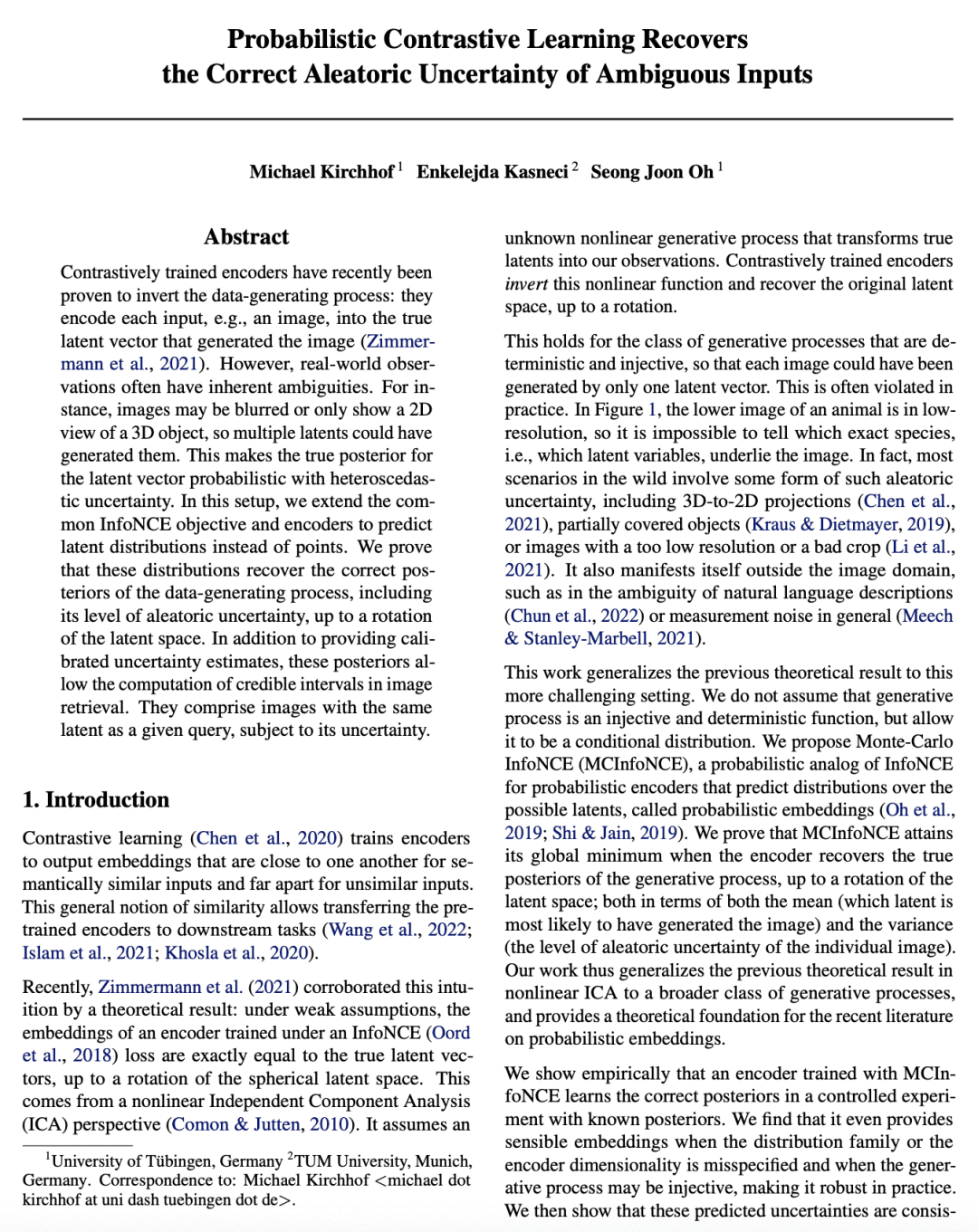


Contrastively trained encoders have recently been proven to invert the data-generating process: they encode each input, e.g., an image, into the true latent vector that generated the image (Zimmermann et al., 2021). However, real-world observations often have inherent ambiguities. For instance, images may be blurred or only show a 2D view of a 3D object, so multiple latents could have generated them. This makes the true posterior for the latent vector probabilistic with heteroscedastic uncertainty. In this setup, we extend the common InfoNCE objective and encoders to predict latent distributions instead of points. We prove that these distributions recover the correct posteriors of the data-generating process, including its level of aleatoric uncertainty, up to a rotation of the latent space. In addition to providing calibrated uncertainty estimates, these posteriors allow the computation of credible intervals in image retrieval. They comprise images with the same latent as a given query, subject to its uncertainty.
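The phrase "up to a rotation of the latent space" has a simple sanity check. InfoNCE-style losses see embeddings only through dot products, and an orthogonal rotation applied to every embedding leaves those dot products unchanged. A contrastive objective of this form therefore cannot pin down the rotation. The sketch below assumes a standard temperature-scaled InfoNCE on unit vectors (not the paper's MCInfoNCE) and a rotation about the z-axis. It is an illustration, not a proof of the paper's identifiability result, and the helper names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def rotate_z(v, theta):
    """Rotate a 3-D vector by angle theta about the z-axis (an orthogonal map)."""
    c, s = math.cos(theta), math.sin(theta)
    x, y, z = v
    return [c * x - s * y, s * x + c * y, z]


def infonce_loss(anchor, positive, negatives, temperature=0.1):
    """Standard temperature-scaled InfoNCE for one anchor on unit vectors."""
    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, neg) / temperature for neg in negatives]
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(logit - top) for logit in logits))
    return log_sum_exp - logits[0]


anchor = [0.6, 0.8, 0.0]
positive = [0.8, 0.6, 0.0]
negatives = [[0.0, 0.0, 1.0], [-0.6, 0.8, 0.0]]

theta = 1.234  # any angle works


def rot(v):
    return rotate_z(v, theta)


original = infonce_loss(anchor, positive, negatives)
rotated = infonce_loss(rot(anchor), rot(positive), [rot(n) for n in negatives])
print(original, rotated)
```

The two printed losses agree up to floating-point error. The same argument covers von Mises-Fisher posteriors, whose density depends on a latent only through its dot product with the mean direction.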

Paper link: https://arxiv.org/abs/2302.02865

