From today's 爱可可 AI frontier picks

[LG] A modern look at the relationship between sharpness and generalization

M Andriushchenko, F Croce, M Müller, M Hein, N Flammarion

[EPFL & Tubingen AI Center]

A modern perspective on the relationship between sharpness and generalization

要点:

-

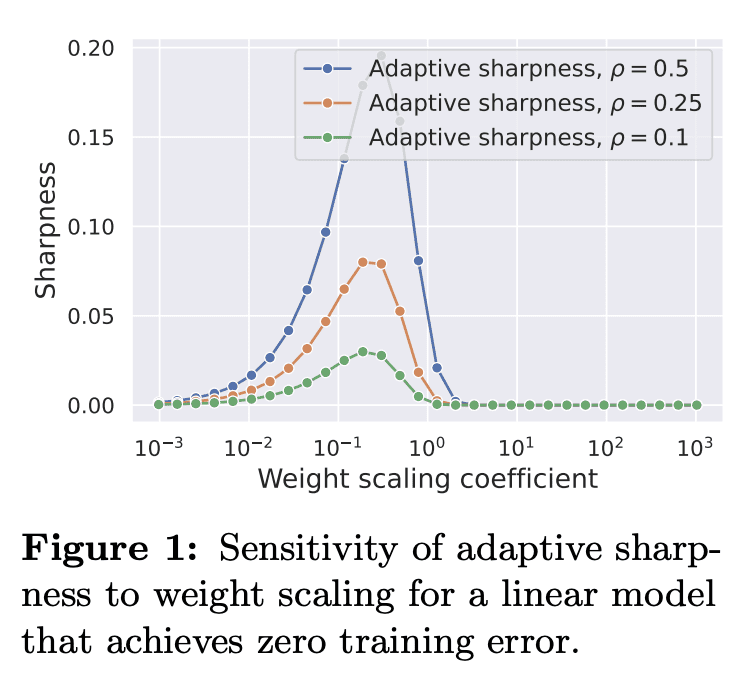

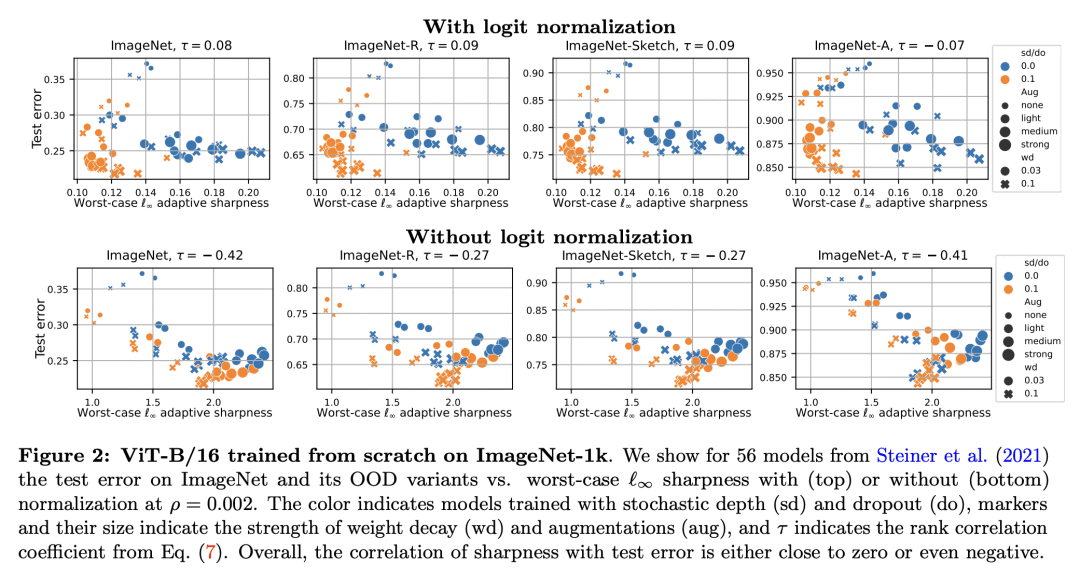

在 ImageNet 和 MNLI 上,从从头开始训练到微调 Transformer 的各种设置中,评估了多种重参数化不变锐度措施; -

锐度与泛化没有很好的关联,而是与一些训练参数如学习率相关; -

在多种情况下,锐度与 OOD 泛化存在一致的负相关关系,这意味着更锐利的最小值可以更好地泛化。 -

正确的锐度测量是高度依赖于数据的。

One-sentence summary:

In modern deep neural networks, reparametrization-invariant sharpness may not be a good indicator of generalization.

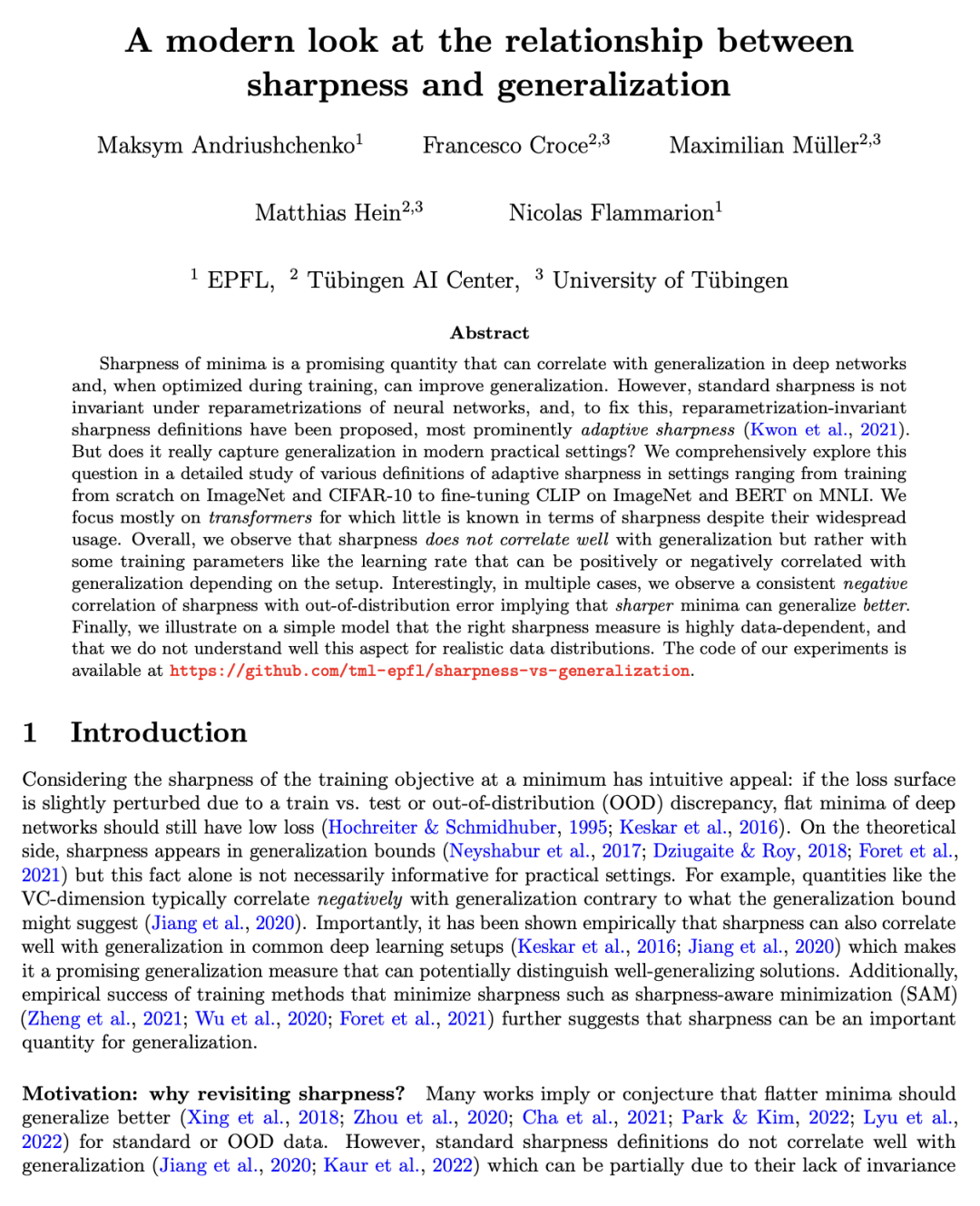

Sharpness of minima is a promising quantity that can correlate with generalization in deep networks and, when optimized during training, can improve generalization. However, standard sharpness is not invariant under reparametrizations of neural networks, and, to fix this, reparametrization-invariant sharpness definitions have been proposed, most prominently adaptive sharpness (Kwon et al., 2021). But does it really capture generalization in modern practical settings? We comprehensively explore this question in a detailed study of various definitions of adaptive sharpness in settings ranging from training from scratch on ImageNet and CIFAR-10 to fine-tuning CLIP on ImageNet and BERT on MNLI. We focus mostly on transformers for which little is known in terms of sharpness despite their widespread usage. Overall, we observe that sharpness does not correlate well with generalization but rather with some training parameters like the learning rate that can be positively or negatively correlated with generalization depending on the setup. Interestingly, in multiple cases, we observe a consistent negative correlation of sharpness with out-of-distribution error implying that sharper minima can generalize better. Finally, we illustrate on a simple model that the right sharpness measure is highly data-dependent, and that we do not understand well this aspect for realistic data distributions. The code of our experiments is linked from the arXiv page.
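To make the reparametrization point in the abstract concrete, here is a minimal sketch, not the authors' code. It assumes a toy two-layer ReLU network with scalar input, a mean-squared-error loss, and one common elementwise form of adaptive sharpness in which each coordinate of the perturbation is scaled by |w_i|. The worst case is approximated by a maximum over a fixed set of random unit directions, which is only a proxy for a true maximization. All names (`loss`, `sampled_sharpness`, `rho`, `alpha`) are hypothetical.

```python
import math
import random


def loss(w1, w2, xs, ys):
    # Toy two-layer ReLU net with scalar input:
    # f(x) = sum_j w2[j] * relu(w1[j] * x), trained with mean squared error.
    total = 0.0
    for x, y in zip(xs, ys):
        pred = sum(b * max(0.0, a * x) for a, b in zip(w1, w2))
        total += (pred - y) ** 2
    return total / len(xs)


def sampled_sharpness(w1, w2, xs, ys, directions, rho, adaptive):
    # Largest loss increase over a fixed set of unit directions
    # (a sampling proxy, not an exact worst-case maximization).
    base = loss(w1, w2, xs, ys)
    w = list(w1) + list(w2)
    h = len(w1)
    worst = 0.0
    for d in directions:
        # Adaptive variant: scale each perturbation coordinate by |w_i|.
        p = [wi + rho * (abs(wi) if adaptive else 1.0) * di
             for wi, di in zip(w, d)]
        worst = max(worst, loss(p[:h], p[h:], xs, ys) - base)
    return worst


def unit_vector(rng, n):
    v = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


rng = random.Random(0)
h = 4
w1 = [rng.gauss(0.0, 1.0) for _ in range(h)]
w2 = [rng.gauss(0.0, 1.0) for _ in range(h)]
xs = [rng.gauss(0.0, 1.0) for _ in range(32)]
ys = [rng.gauss(0.0, 1.0) for _ in range(32)]
directions = [unit_vector(rng, 2 * h) for _ in range(256)]

# Reparametrization: scale layer 1 by alpha and layer 2 by 1/alpha.
# ReLU is positively homogeneous, so the network function is unchanged.
alpha = 8.0
w1_s = [alpha * a for a in w1]
w2_s = [b / alpha for b in w2]

rho = 0.1
std_a = sampled_sharpness(w1, w2, xs, ys, directions, rho, adaptive=False)
std_b = sampled_sharpness(w1_s, w2_s, xs, ys, directions, rho, adaptive=False)
ada_a = sampled_sharpness(w1, w2, xs, ys, directions, rho, adaptive=True)
ada_b = sampled_sharpness(w1_s, w2_s, xs, ys, directions, rho, adaptive=True)

print("standard:", std_a, std_b)  # generally differ under rescaling
print("adaptive:", ada_a, ada_b)  # agree up to floating-point error
```

In this sketch the two networks compute the same function, yet the standard measure changes with the rescaling while the adaptive one does not. Invariance alone says nothing about whether the measure tracks generalization, which is the question the paper examines.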

Paper link: https://arxiv.org/abs/2302.07011
