来自今天的爱可可AI前沿推介

[CL] UniMax: Fairer and More Effective Language Sampling for Large-Scale Multilingual Pretraining

H W Chung, X Garcia, A Roberts, Y Tay, O Firat, S Narang, N Constant

[Google]

UniMax: 面向大规模多语言预训练的更公平更有效的语言抽样

要点:

-

在不同模型规模下,UniMax在多语言预训练中的表现优于基于温度的采样; -

UniMax 对高资源语言的覆盖更均匀,并减轻了对低资源语言的过拟合; -

提出一种改进和刷新的 mC4 多语言语料库变体,包括107种语言的29万亿字符。

一句话总结:

UniMax 是一种用于大规模多语言预训练的新的语言抽样方法,能更均匀地覆高资源语言,同时减轻低资源语言的过拟合。

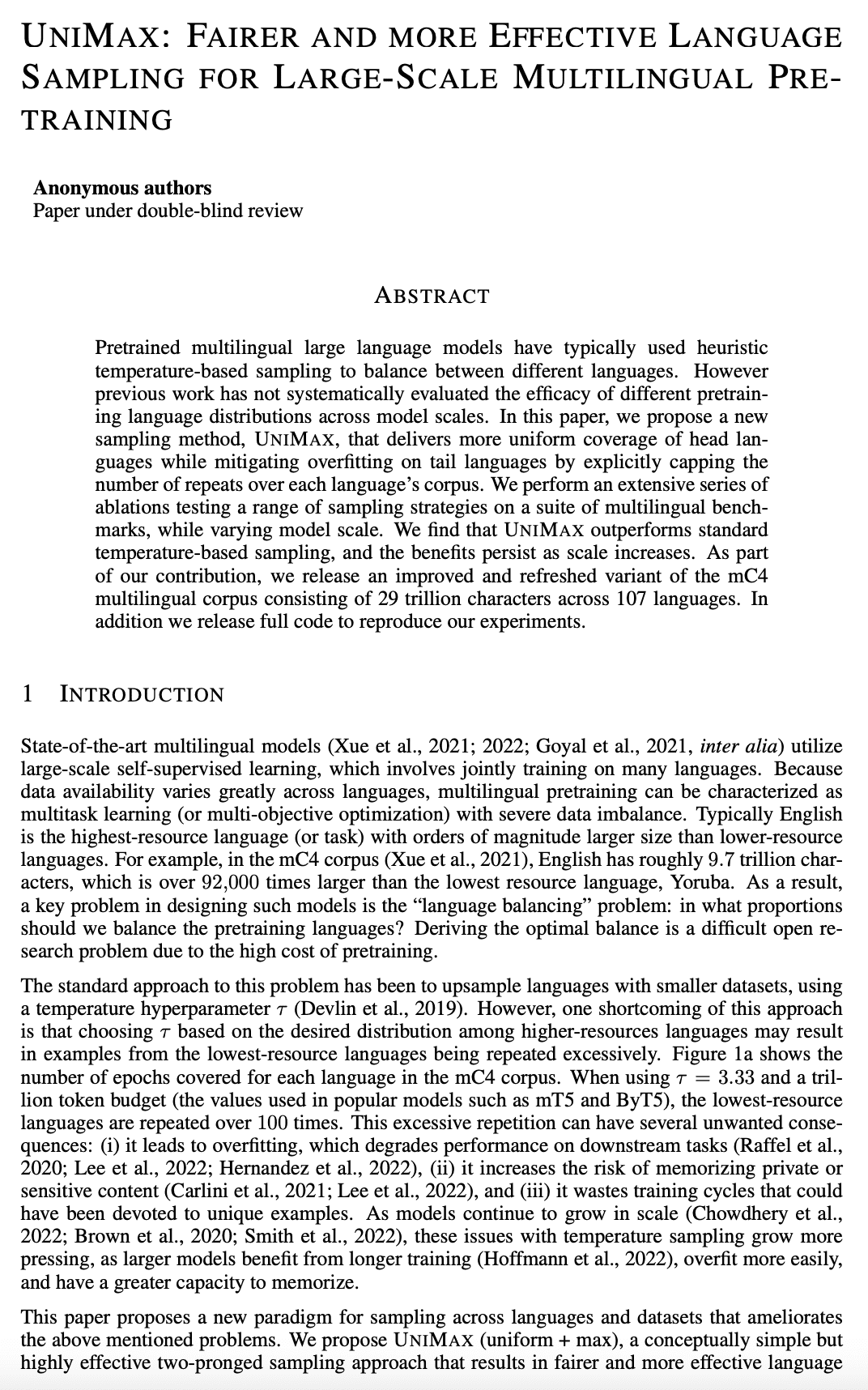

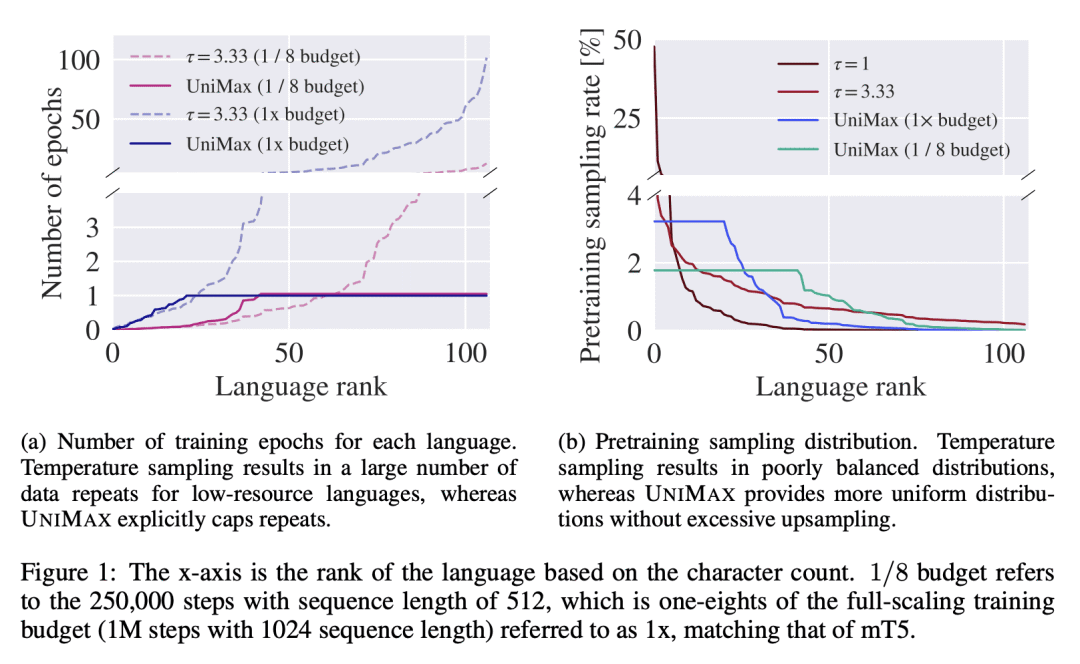

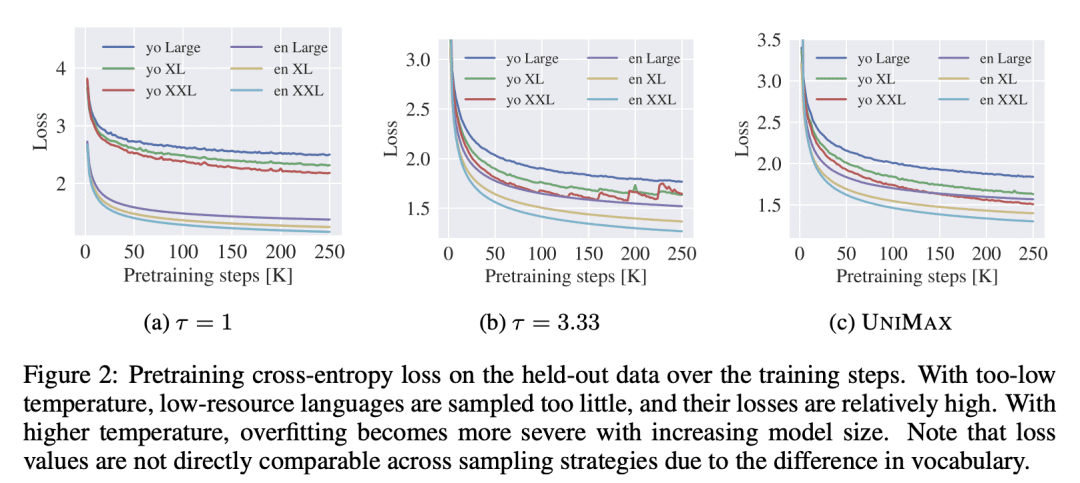

Pretrained multilingual large language models have typically used heuristic temperature-based sampling to balance between different languages. However previous work has not systematically evaluated the efficacy of different pretraining language distributions across model scales. In this paper, we propose a new sampling method, UniMax, that delivers more uniform coverage of head languages while mitigating overfitting on tail languages by explicitly capping the number of repeats over each languages corpus. We perform an extensive series of ablations testing a range of sampling strategies on a suite of multilingual benchmarks, while varying model scale. We find that UniMax outperforms standard temperature-based sampling, and the benefits persist as scale increases. As part of our contribution, we release an improved and refreshed variant of the mC4 multilingual corpus consisting of 29 trillion characters across 107 languages. In addition we release full code to reproduce our experiments.

论文链接:https://openreview.net/forum?id=kXwdL1cWOAi

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢