Transformers are Sample-Efficient World Models

V Micheli, E Alonso, F Fleuret

[University of Geneva]


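Key points:

- Deep reinforcement learning agents are sample inefficient, which limits their application to real-world problems;
- IRIS is a data-efficient agent that learns in a world model composed of a discrete autoencoder and an autoregressive Transformer;
- IRIS achieves a mean human-normalized score of 1.046 on the Atari 100k benchmark and outperforms humans on 10 of its 26 games;
- IRIS opens a new path toward efficiently solving problems in complex environments.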
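The 1.046 figure is a mean over per-game human-normalized scores. Below is a minimal sketch of how such a summary is computed. It assumes the usual Atari normalization, where 0 corresponds to a random policy and 1 to the human reference. The game names and raw scores in the usage lines are made up purely for illustration.

```python
from statistics import mean
from typing import Dict, Tuple


def human_normalized_score(agent: float, random_score: float, human: float) -> float:
    """Usual Atari normalization: 0.0 = random policy, 1.0 = human reference."""
    return (agent - random_score) / (human - random_score)


def summarize(results: Dict[str, Tuple[float, float, float]]) -> Tuple[float, int]:
    """Return (mean normalized score, number of games where the agent beats the human).

    `results` maps a game name to raw (agent, random, human) scores.
    A normalized score above 1.0 means the agent outscored the human reference.
    """
    scores = [human_normalized_score(a, r, h) for a, r, h in results.values()]
    superhuman = sum(1 for s in scores if s > 1.0)
    return mean(scores), superhuman


# Hypothetical raw scores, for illustration only.
results = {
    "game_a": (150.0, 50.0, 250.0),  # (150-50)/(250-50) = 0.5
    "game_b": (900.0, 100.0, 500.0),  # (900-100)/(500-100) = 2.0
}
print(summarize(results))  # (1.25, 1)
```

Because the mean is taken over unbounded per-game ratios, a few games with very large normalized scores can dominate it. This is one reason the count of games above human level is often reported alongside the mean.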

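One-sentence summary:

IRIS is an agent trained in the imagination of a world model composed of a discrete autoencoder and an autoregressive Transformer, and it sets a new state of the art on the Atari 100k benchmark for methods without lookahead search.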
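Training "in the imagination" of a world model means that the policy collects experience from the learned model instead of the real environment. The following is a schematic sketch of that control flow only, not the IRIS implementation. `imagine_rollout`, `toy_policy`, and `toy_world_model` are hypothetical stand-ins. As a simplifying assumption, each frame is represented directly as a list of discrete tokens, and the world model is any callable that conditions on the full (tokens, action) history, as an autoregressive sequence model would.

```python
from typing import Callable, List, Tuple

Tokens = List[int]
Step = Tuple[Tokens, int, float, bool]  # (observation tokens, action, reward, done)


def imagine_rollout(
    start_tokens: Tokens,
    policy: Callable[[Tokens], int],
    world_model: Callable[[List[Tuple[Tokens, int]]], Tuple[Tokens, float, bool]],
    horizon: int,
) -> List[Step]:
    """Roll the policy out inside the world model for at most `horizon` steps.

    No real environment is queried: every next observation, reward, and
    termination flag is predicted by `world_model` from the history so far.
    """
    history: List[Tuple[Tokens, int]] = []
    trajectory: List[Step] = []
    tokens = start_tokens
    for _ in range(horizon):
        action = policy(tokens)
        history.append((tokens, action))
        next_tokens, reward, done = world_model(history)
        trajectory.append((tokens, action, reward, done))
        if done:
            break
        tokens = next_tokens
    return trajectory


# Hypothetical toy components, only to make the sketch executable.
def toy_policy(tokens: Tokens) -> int:
    return tokens[0] % 2


def toy_world_model(history: List[Tuple[Tokens, int]]) -> Tuple[Tokens, float, bool]:
    tokens, action = history[-1]
    next_tokens = [(t + action) % 8 for t in tokens]
    return next_tokens, float(action), len(history) >= 3


traj = imagine_rollout([1, 2], toy_policy, toy_world_model, horizon=10)
print(len(traj), sum(r for _, _, r, _ in traj))  # 3 1.0
```

In an actual agent, trajectories like `traj` would feed the actor-critic update, while only the world model itself is trained on real environment data.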

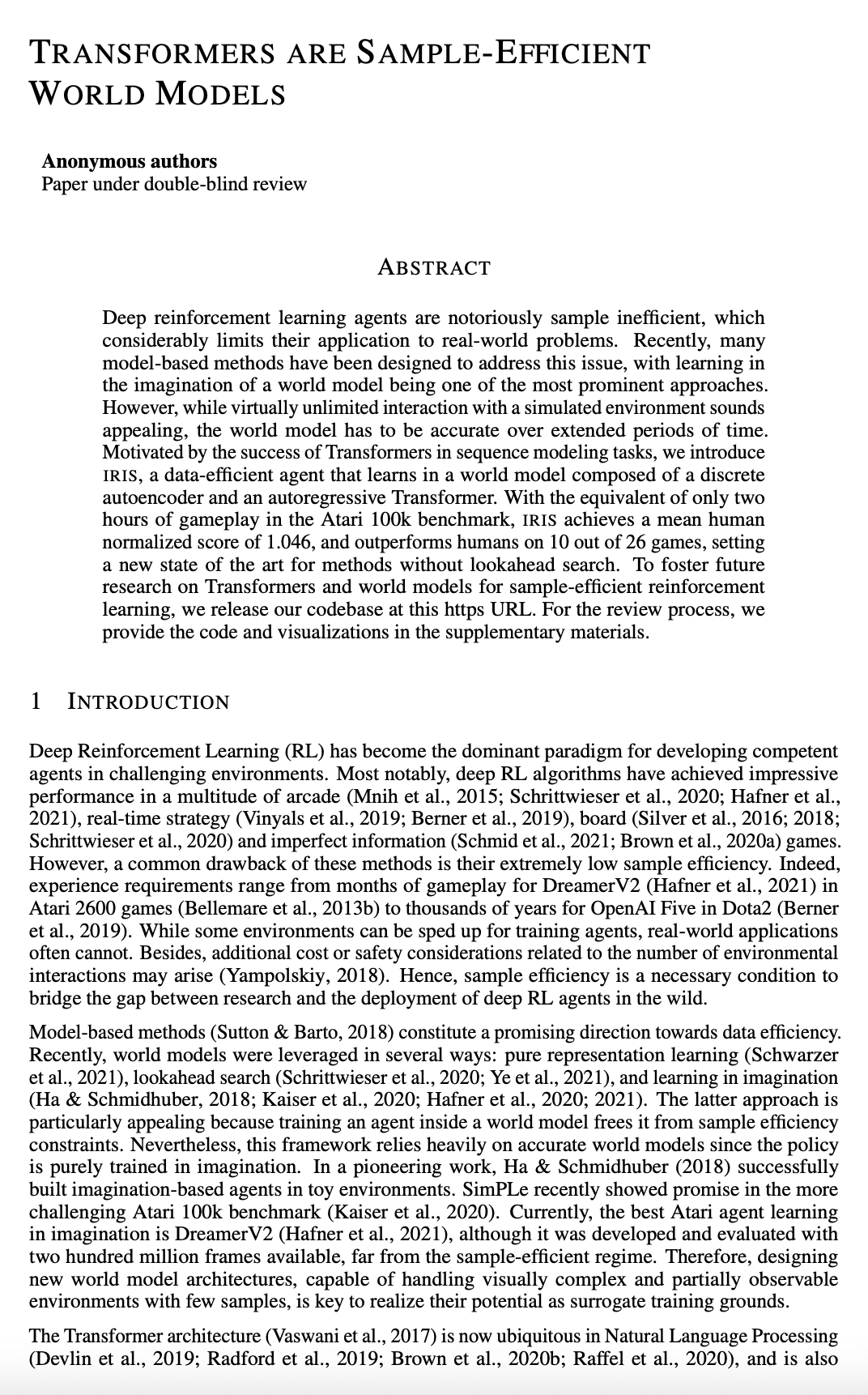

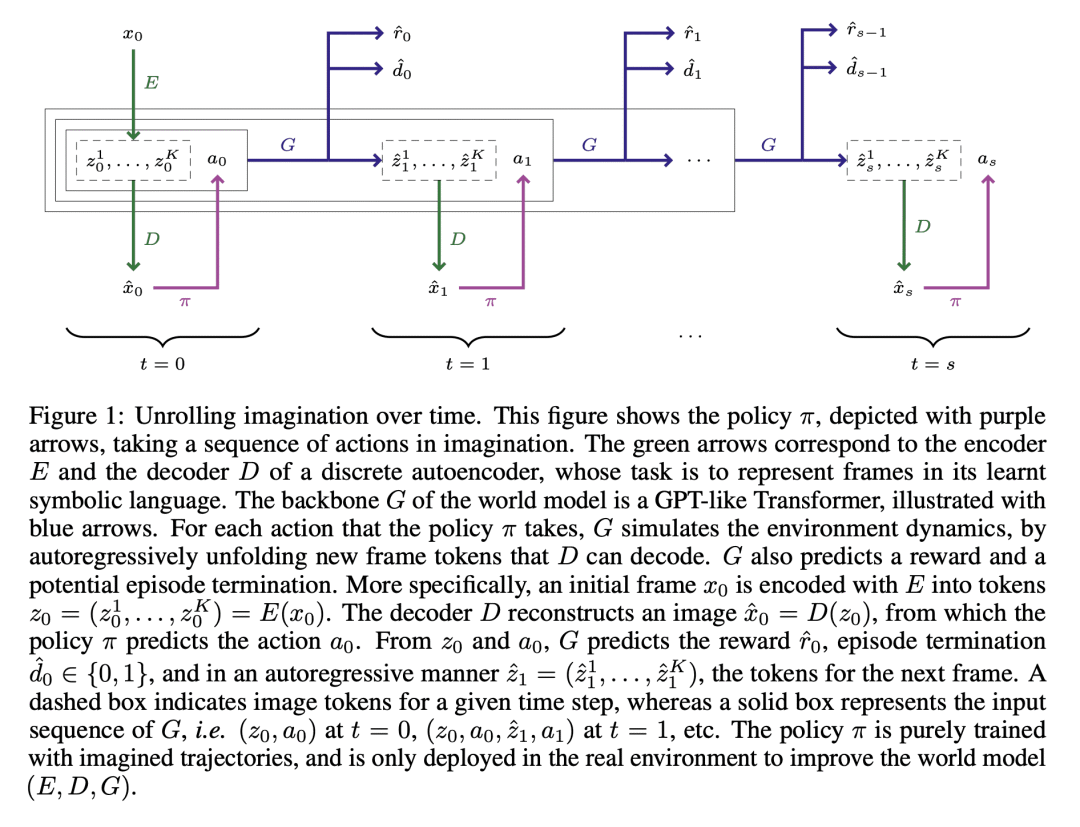

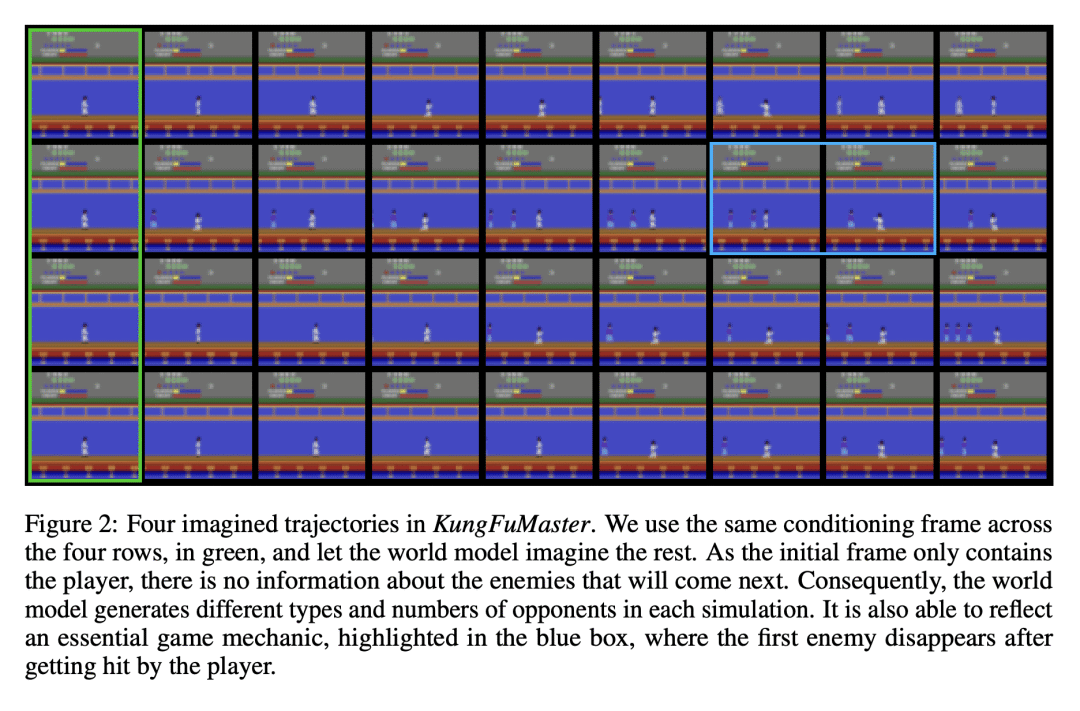

Deep reinforcement learning agents are notoriously sample inefficient, which considerably limits their application to real-world problems. Recently, many model-based methods have been designed to address this issue, with learning in the imagination of a world model being one of the most prominent approaches. However, while virtually unlimited interaction with a simulated environment sounds appealing, the world model has to be accurate over extended periods of time. Motivated by the success of Transformers in sequence modeling tasks, we introduce IRIS, a data-efficient agent that learns in a world model composed of a discrete autoencoder and an autoregressive Transformer. With the equivalent of only two hours of gameplay in the Atari 100k benchmark, IRIS achieves a mean human-normalized score of 1.046, and outperforms humans on 10 out of 26 games, setting a new state of the art for methods without lookahead search. To foster future research on Transformers and world models for sample-efficient reinforcement learning, we release our codebase at this https URL. For the review process, we provide the code and visualizations in the supplementary materials.
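A discrete autoencoder turns each frame into a short sequence of integer tokens, which the Transformer can then model autoregressively. Here is a minimal sketch of the quantization step, assuming a VQ-VAE-style nearest-neighbour codebook lookup. The encoder and decoder networks are omitted, and the 2-D latents and three-entry codebook are toy values chosen for illustration.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(latents: List[Vector], codebook: List[Vector]) -> List[int]:
    """Map each continuous encoder output to the index of its nearest codebook vector."""
    return [
        min(range(len(codebook)), key=lambda k: squared_distance(z, codebook[k]))
        for z in latents
    ]


def dequantize(tokens: List[int], codebook: List[Vector]) -> List[Vector]:
    """Replace each token with its codebook vector (what a decoder would consume)."""
    return [codebook[t] for t in tokens]


# Toy codebook with three 2-D entries and three encoder outputs.
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
latents = [(0.9, 0.1), (0.1, 0.8), (-0.2, 0.1)]
tokens = quantize(latents, codebook)
print(tokens)  # [1, 2, 0]
```

The integer sequence `tokens` is what a sequence model sees. Predicting the next frame then becomes predicting the next tokens, which is the setting where Transformers are known to work well.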

https://openreview.net/forum?id=vhFu1Acb0xb
