Neural Algorithmic Reasoning with Causal Regularisation

B Bevilacqua, K Nikiforou, B Ibarz, I Bica, M Paganini, C Blundell, J Mitrovic, P Veličković

[DeepMind & Purdue University]


Key points:

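- The proposed self-supervised learning objective uses augmentations derived from the existing hints, which represent the algorithm's intermediate steps, to ground the execution of the GNN-based algorithmic reasoner in the computations performed by the target algorithm;
- The causal graph is designed to capture the observation that the algorithm's execution at a given step is determined by only a subset of the inputs;
- The representations learned through the self-supervised objective are invariant to changes in the subset of inputs that does not affect the current computation step;
- The Hint-ReLIC model, built on the proposed self-supervised objective, makes the predicted outputs of the target algorithm more robust, particularly compared with autoregressive hint prediction.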
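The invariance idea in the points above can be illustrated with a simplified ReLIC-style loss. This is a minimal sketch under stated assumptions, not the paper's Hint-ReLIC objective: it assumes each example (for instance, a node's representation at one step) has already been encoded under two augmentations that leave the next algorithm step unchanged. Every pair is scored by temperature-scaled cosine similarity; a contrastive term matches each representation to its own counterpart, and a KL penalty asks the two similarity distributions to agree across augmentations. The function name `relic_style_loss` and the parameters `tau` and `alpha` are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity, with a small epsilon to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + 1e-12)


def log_softmax(row: List[float]) -> List[float]:
    """Numerically stable log-softmax over one row of scores."""
    m = max(row)
    lse = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - lse for x in row]


def relic_style_loss(view_a: List[Vector], view_b: List[Vector],
                     tau: float = 0.1, alpha: float = 1.0) -> float:
    """Hypothetical simplified ReLIC-style loss (not the paper's exact objective).

    view_a[i] and view_b[i] are representations of the same example under two
    augmentations assumed to leave the next algorithm step unchanged.
    """
    n = len(view_a)
    # sims[i][j]: similarity of example i under view a to example j under view b.
    sims = [[cosine(view_a[i], view_b[j]) / tau for j in range(n)] for i in range(n)]
    # sims_t[i][j]: example i under view b compared against example j under view a.
    sims_t = [[sims[j][i] for j in range(n)] for i in range(n)]
    contrastive = 0.0
    invariance = 0.0
    for i in range(n):
        log_p_ab = log_softmax(sims[i])
        log_p_ba = log_softmax(sims_t[i])
        # Contrastive term: the positive pair is the same index in the other view.
        contrastive -= 0.5 * (log_p_ab[i] + log_p_ba[i])
        # Invariance term: KL(p_ab || p_ba) between the two similarity distributions.
        invariance += sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p_ab, log_p_ba))
    return (contrastive + alpha * invariance) / n
```

When both views map examples to the same well-separated directions, the loss is close to zero; when the views disagree about which example is which, the contrastive term dominates and the loss grows.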

One-sentence summary:

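Neural algorithmic reasoning with causal regularisation improves the out-of-distribution generalisation of the reasoner by using data augmentations derived from the algorithm's intermediate computations together with a self-supervised objective based on a causal graph.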

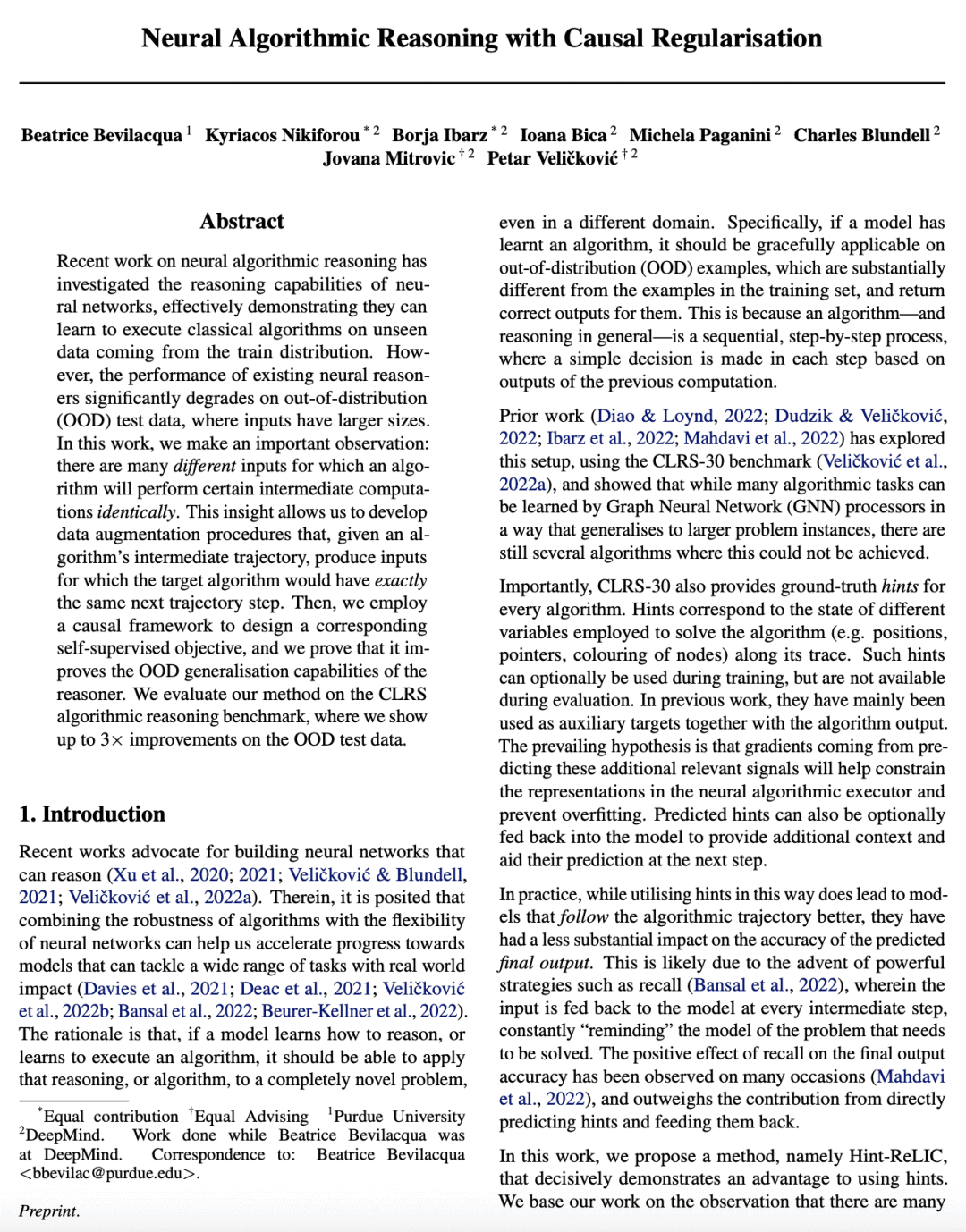

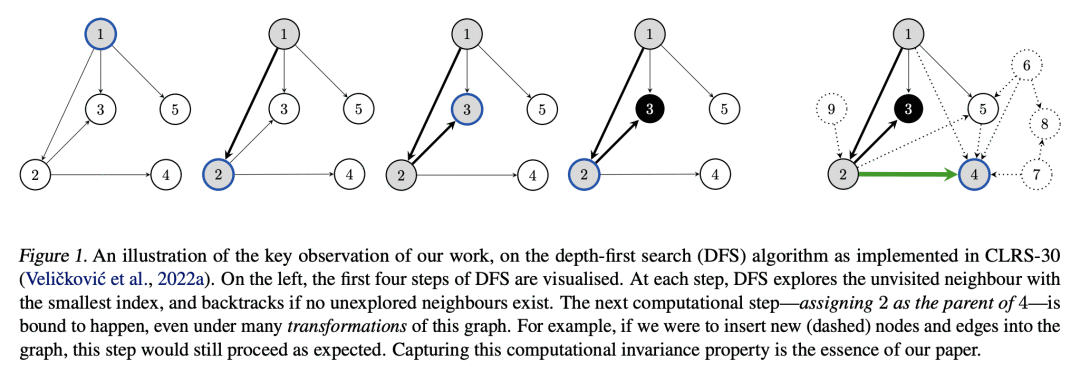

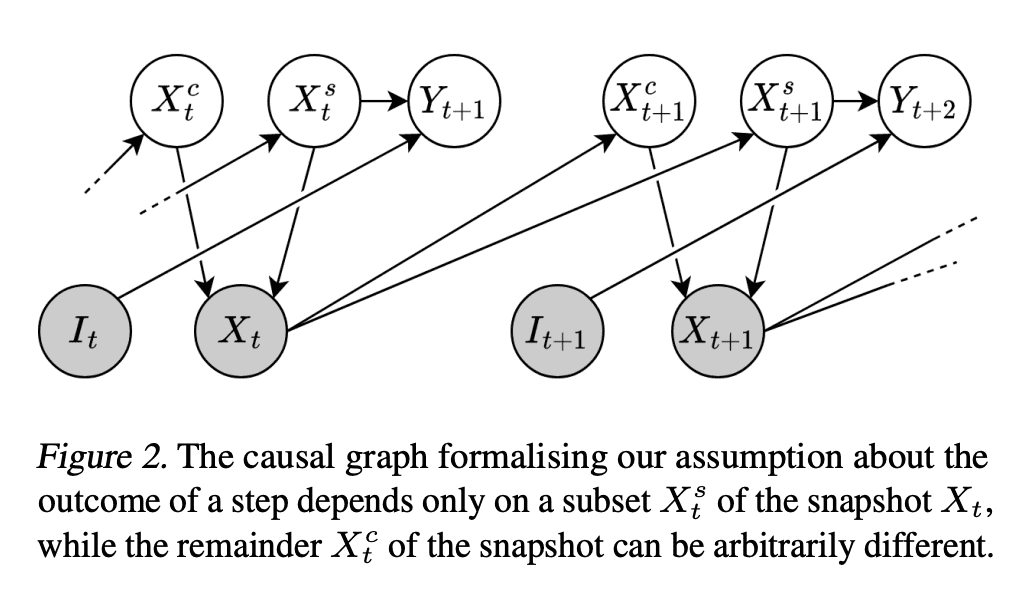

Recent work on neural algorithmic reasoning has investigated the reasoning capabilities of neural networks, effectively demonstrating that they can learn to execute classical algorithms on unseen data drawn from the training distribution. However, the performance of existing neural reasoners degrades significantly on out-of-distribution (OOD) test data, where inputs are larger. In this work, we make an important observation: there are many *different* inputs for which an algorithm will perform certain intermediate computations *identically*. This insight allows us to develop data augmentation procedures that, given an algorithm's intermediate trajectory, produce inputs for which the target algorithm would have *exactly* the same next trajectory step. We then employ a causal framework to design a corresponding self-supervised objective, and we prove that it improves the OOD generalisation capabilities of the reasoner. We evaluate our method on the CLRS algorithmic reasoning benchmark, where we show up to 3× improvements on the OOD test data.
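The augmentation idea in the abstract can be illustrated on one step of parallel breadth-first search. The sketch below is a hypothetical example, not one of the paper's CLRS-specific augmentations: it assumes a simple undirected graph with no self-loops, and relies on the fact that the next reached set depends only on edges that touch the currently reached nodes, so edges between two unreached nodes can be resampled freely without changing the next step. `bfs_step`, `augment_preserving_step` and the edge probability `p` are illustrative names, not the paper's API.

```python
import random
from typing import FrozenSet, Iterable, Set

Edge = FrozenSet[int]  # an undirected edge {u, v}; assumes u != v (no self-loops)


def bfs_step(edges: Iterable[Edge], reached: FrozenSet[int]) -> FrozenSet[int]:
    """One parallel BFS step: a node is reached if it already was,
    or if it shares an edge with a reached node."""
    nxt = set(reached)
    for e in edges:
        u, v = tuple(e)
        if u in reached or v in reached:
            nxt.update((u, v))
    return frozenset(nxt)


def augment_preserving_step(nodes: Iterable[int], edges: Set[Edge],
                            reached: FrozenSet[int], rng: random.Random,
                            p: float = 0.5) -> Set[Edge]:
    """Hypothetical augmentation: keep every edge touching the reached set,
    then resample all edges whose endpoints are both unreached.
    The next BFS step is unchanged because those edges never influence it."""
    outside = sorted(n for n in nodes if n not in reached)
    kept = {e for e in edges if e & reached}  # edges incident to reached nodes
    for i, u in enumerate(outside):
        for v in outside[i + 1:]:
            if rng.random() < p:
                kept.add(frozenset((u, v)))
    return kept
```

For example, on the path 0-1-2-3-4-5 with `reached = {0, 1}`, every augmented graph still yields the next reached set `{0, 1, 2}`, even though the edges among nodes 2 to 5 change from sample to sample.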

https://arxiv.org/abs/2302.10258
