Towards Universal Fake Image Detectors that Generalize Across Generative Models

U Ojha, Y Li, Y J Lee

[University of Wisconsin-Madison]

A study of universal real/fake image detectors that work across generative models

Key points:

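- Existing deep-learning-based methods are limited in their ability to detect fake images produced by new generative models.
- The limitation arises because the classifier is asymmetrically tuned to detect fake patterns. As a result, the real class ends up holding every sample that is not fake.
- The paper proposes real-vs-fake classification without learning. It uses a feature space that was not explicitly trained to distinguish real from fake images.
- Nearest neighbor and linear probing in this space markedly improve generalization in detecting fake images, especially from newer methods such as diffusion and autoregressive models.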
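Below is a minimal NumPy sketch of the nearest-neighbor idea. It assumes image features have already been extracted by a frozen pretrained encoder. The paper draws on a large vision-language model; here, random vectors clustered around two hypothetical centers stand in for real and fake embeddings. The function name `nn_real_fake` and the use of cosine similarity are illustrative assumptions, not the authors' code.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def nn_real_fake(bank_feats, bank_labels, query_feats):
    """1-nearest-neighbor real/fake labels in a frozen feature space.

    bank_labels uses 0 = real, 1 = fake. Returns the predicted labels and a
    score (best fake similarity minus best real similarity; > 0 means fake).
    """
    bank = l2_normalize(bank_feats)
    queries = l2_normalize(query_feats)
    sims = queries @ bank.T                        # (num_queries, num_bank) cosine similarities
    best_real = sims[:, bank_labels == 0].max(axis=1)
    best_fake = sims[:, bank_labels == 1].max(axis=1)
    scores = best_fake - best_real
    return (scores > 0).astype(int), scores


# Toy stand-ins for encoder outputs: two hypothetical clusters in a 16-d space.
rng = np.random.default_rng(0)
dim = 16
real_center, fake_center = rng.normal(size=dim), rng.normal(size=dim)
bank_feats = np.vstack([real_center + 0.1 * rng.normal(size=(50, dim)),
                        fake_center + 0.1 * rng.normal(size=(50, dim))])
bank_labels = np.array([0] * 50 + [1] * 50)
query_feats = np.vstack([real_center + 0.1 * rng.normal(size=(5, dim)),
                         fake_center + 0.1 * rng.normal(size=(5, dim))])

preds, scores = nn_real_fake(bank_feats, bank_labels, query_feats)
print(preds)
```

No training happens in this sketch. The only "model" is the memory bank of labeled features, which is what classifying without learning amounts to here.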

One-sentence summary:

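Existing deep-learning-based methods fail to detect fake images from newer generative models. A simple fix is to use an informative feature space that has not been trained for real-vs-fake classification.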
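Linear probing is the second option named in the key points. It keeps the feature extractor frozen and trains only a single linear layer on its outputs. The sketch below assumes precomputed, unit-normalized features and fits a plain gradient-descent logistic regression. The helper names, learning rate, step count, and synthetic clusters are all hypothetical choices, not the paper's training setup.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    """Scale each row to unit length."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def train_linear_probe(feats, labels, lr=0.5, steps=500):
    """Logistic regression on frozen features: only w and b are learned."""
    n, d = feats.shape
    w, b = np.zeros(d), 0.0
    for _ in range(steps):
        probs = 1.0 / (1.0 + np.exp(-(feats @ w + b)))  # predicted P(fake)
        err = probs - labels                             # d(BCE)/d(logit) per sample
        w -= lr * feats.T @ err / n                      # mean-gradient step on weights
        b -= lr * err.mean()                             # and on the bias
    return w, b


def predict_fake(feats, w, b):
    """Return 1 (fake) where the probe's logit is positive, else 0 (real)."""
    return (feats @ w + b > 0).astype(int)


rng = np.random.default_rng(1)
dim = 16
real_center, fake_center = rng.normal(size=dim), rng.normal(size=dim)


def sample(center, count):
    # Hypothetical "frozen encoder" outputs: noisy copies of a cluster center.
    return l2_normalize(center + 0.3 * rng.normal(size=(count, dim)))


train_feats = np.vstack([sample(real_center, 100), sample(fake_center, 100)])
train_labels = np.array([0] * 100 + [1] * 100)
test_feats = np.vstack([sample(real_center, 20), sample(fake_center, 20)])
test_labels = np.array([0] * 20 + [1] * 20)

w, b = train_linear_probe(train_feats, train_labels)
accuracy = (predict_fake(test_feats, w, b) == test_labels).mean()
print(f"test accuracy: {accuracy:.2f}")
```

Because the encoder never receives gradients, the probe cannot reshape the feature space around GAN-specific artifacts. It can only draw one hyperplane in a space that was learned for other purposes.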

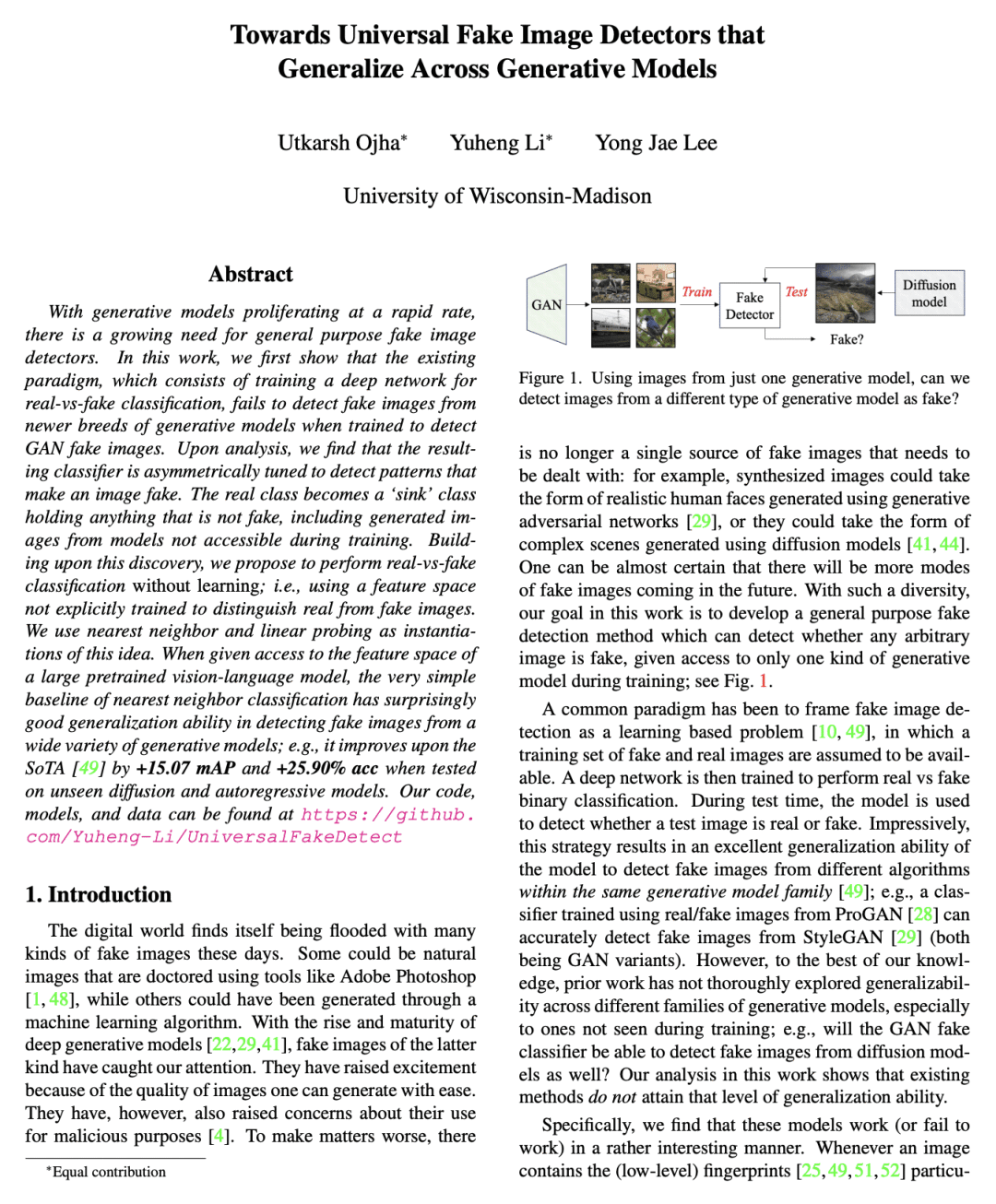

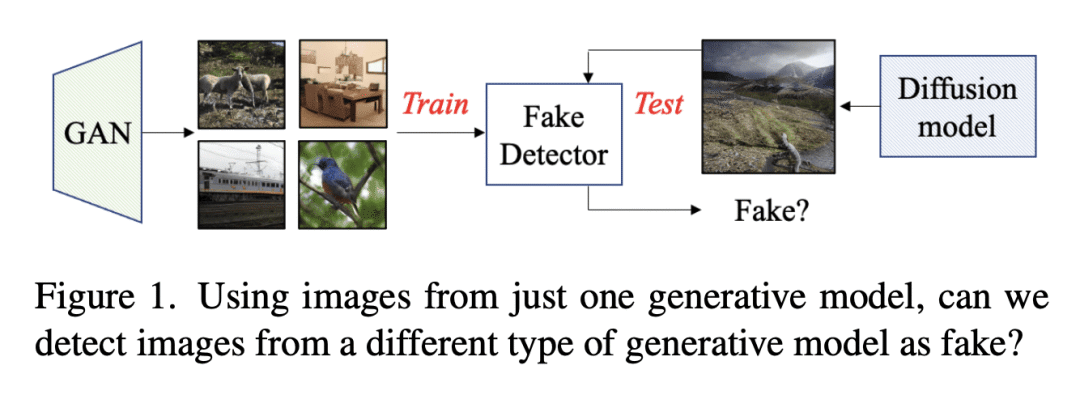

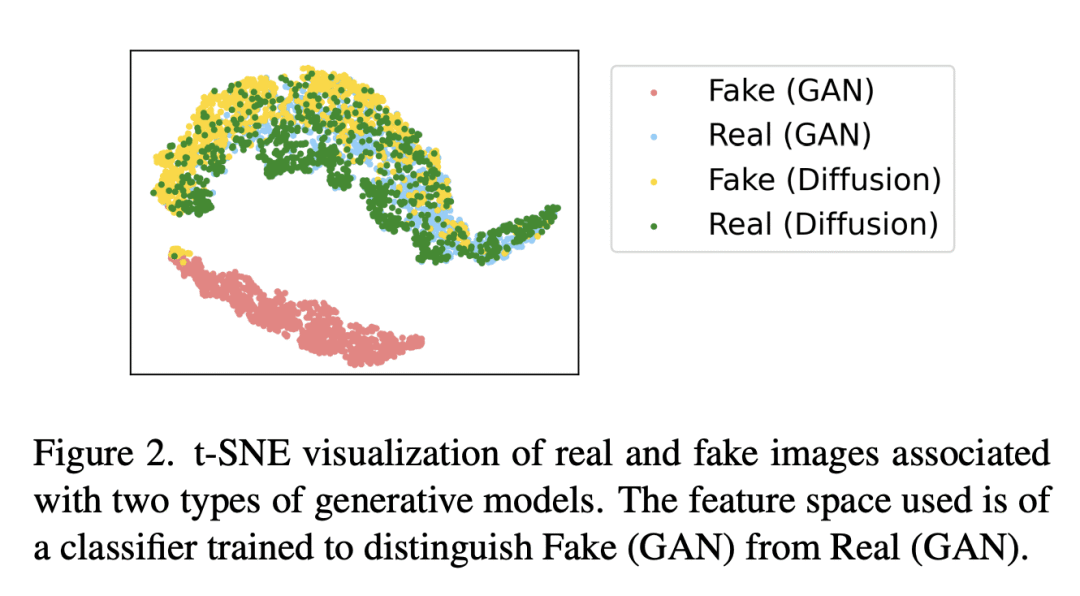

With generative models proliferating at a rapid rate, there is a growing need for general purpose fake image detectors. In this work, we first show that the existing paradigm, which consists of training a deep network for real-vs-fake classification, fails to detect fake images from newer breeds of generative models when trained to detect GAN fake images. Upon analysis, we find that the resulting classifier is asymmetrically tuned to detect patterns that make an image fake. The real class becomes a sink class holding anything that is not fake, including generated images from models not accessible during training. Building upon this discovery, we propose to perform real-vs-fake classification without learning; i.e., using a feature space not explicitly trained to distinguish real from fake images. We use nearest neighbor and linear probing as instantiations of this idea. When given access to the feature space of a large pretrained vision-language model, the very simple baseline of nearest neighbor classification has surprisingly good generalization ability in detecting fake images from a wide variety of generative models; e.g., it improves upon the SoTA by +15.07 mAP and +25.90% acc when tested on unseen diffusion and autoregressive models.
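The abstract reports gains in mAP and accuracy. This sketch assumes, as is common in this line of work, that mAP is the mean of the average precision (AP) computed separately on each test generator, with fake as the positive class. Unlike accuracy, AP does not depend on a single decision threshold. The generator keys and scores below are made-up toy values, not results from the paper.

```python
import numpy as np


def average_precision(scores, labels):
    """Non-interpolated AP with fake (label 1) as the positive class.

    Rank images by descending fake score, then average the precision
    measured at the rank of each fake image. Ties keep input order.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    ranked = np.asarray(labels)[order]
    hits = np.cumsum(ranked)                        # fakes found up to each rank
    precision_at_k = hits / np.arange(1, len(ranked) + 1)
    return float(precision_at_k[ranked == 1].mean())


def mean_average_precision(per_generator):
    """Mean of per-generator AP values (assumed definition of mAP)."""
    return float(np.mean([average_precision(s, y) for s, y in per_generator.values()]))


# Made-up scores for two hypothetical test sets; not numbers from the paper.
per_generator = {
    "generator_a": ([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]),  # AP = (1/1 + 2/3) / 2
    "generator_b": ([0.9, 0.1], [1, 0]),                  # perfect ranking, AP = 1
}
print(mean_average_precision(per_generator))
```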

Paper link: https://arxiv.org/abs/2302.10174

