Reduce, Reuse, Recycle: Compositional Generation with Energy-Based Diffusion Models and MCMC

Y Du, C Durkan, R Strudel, J B. Tenenbaum, S Dieleman, R Fergus...

[MIT & DeepMind & Google Brain & INRIA]


Key points:

-

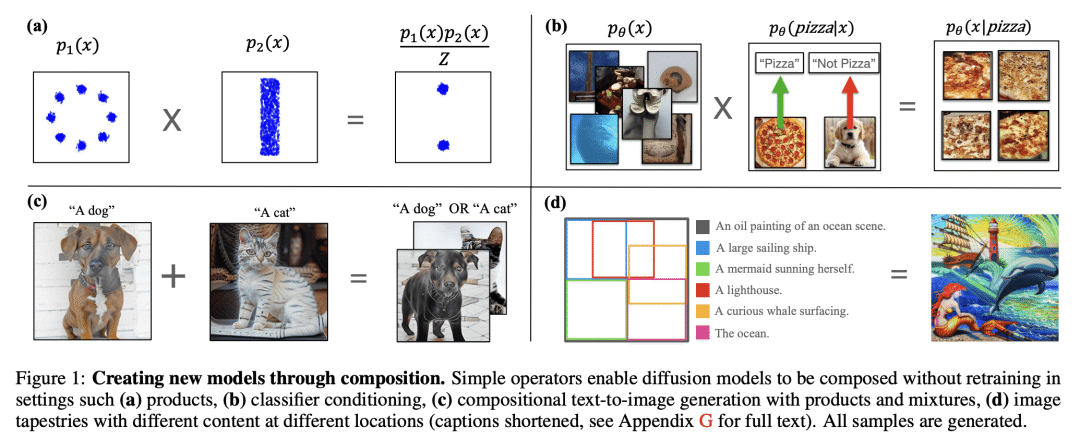

扩散模型在生成式建模中很受欢迎,但在组合生成任务中可能会失败; -

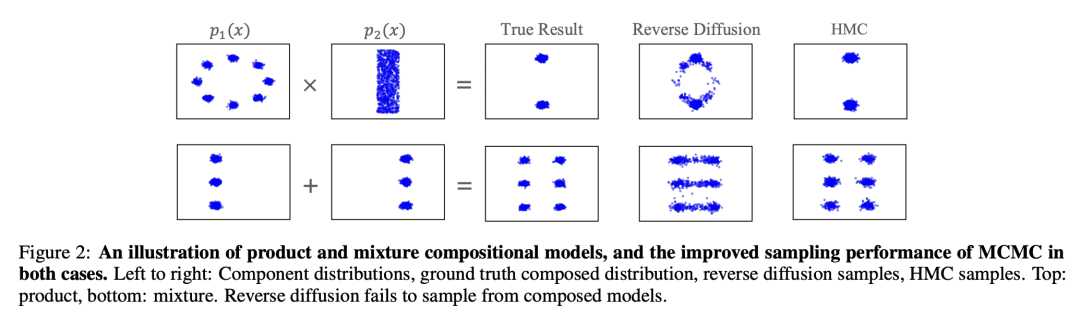

MCMC 衍生采样和基于能量的参数化,可以通过启用新方法来组合扩散模型和更强大的采样器来改善组合生成; -

该方法导致在各种域、规模和组合运算符中的明显改进; -

该方法的有效性在从 2D 数据到高分辨率文本到图像生成的设置中得到了证明。
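
To make "composition" concrete, here is a short worked identity for one common operator, the product of two distributions. The symbols $p^1$, $p^2$, $E^1$, $E^2$ are notation assumed here for illustration and are not taken from this post; the identity itself is standard.

$$
p^{\text{prod}}(x) \propto p^{1}(x)\,p^{2}(x)
\quad\Longrightarrow\quad
\nabla_x \log p^{\text{prod}}(x) = \nabla_x \log p^{1}(x) + \nabla_x \log p^{2}(x)
$$

$$
p^{i}(x) \propto e^{-E^{i}(x)}
\quad\Longrightarrow\quad
E^{\text{prod}}(x) = E^{1}(x) + E^{2}(x) + \text{const}
$$

Adding scores is enough to run a gradient-based sampler on the product. A Metropolis accept/reject step additionally needs the energy difference $E^{\text{prod}}(x') - E^{\text{prod}}(x)$, which is only available when the model exposes an energy and not just its gradient.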

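One-sentence summary:

Proposes new methods for composing and reusing diffusion models for compositional generation and guidance, improving performance through MCMC-derived sampling and an energy-based parameterization.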

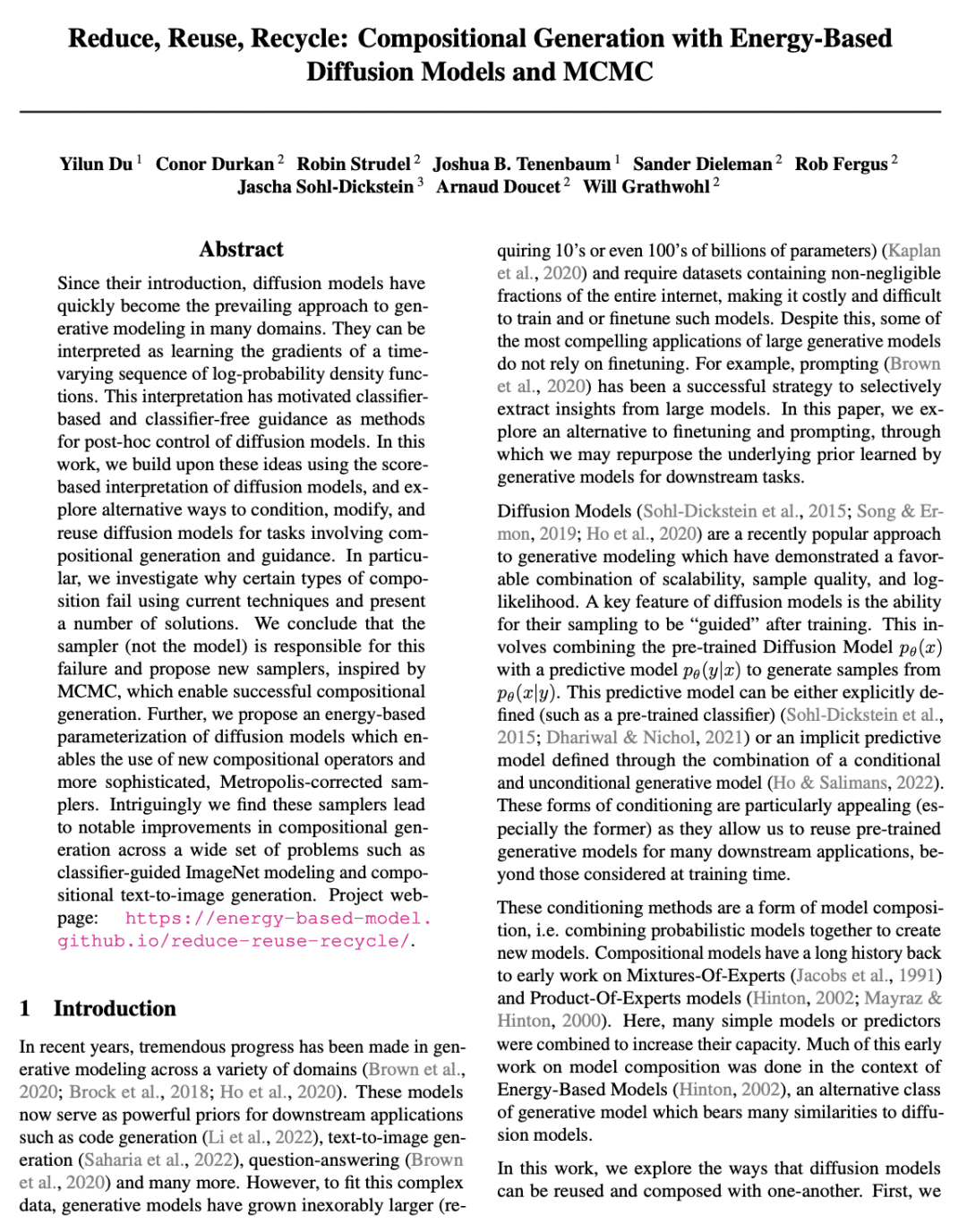

Since their introduction, diffusion models have quickly become the prevailing approach to generative modeling in many domains. They can be interpreted as learning the gradients of a time-varying sequence of log-probability density functions. This interpretation has motivated classifier-based and classifier-free guidance as methods for post-hoc control of diffusion models. In this work, we build upon these ideas using the score-based interpretation of diffusion models, and explore alternative ways to condition, modify, and reuse diffusion models for tasks involving compositional generation and guidance. In particular, we investigate why certain types of composition fail using current techniques and present a number of solutions. We conclude that the sampler (not the model) is responsible for this failure and propose new samplers, inspired by MCMC, which enable successful compositional generation. Further, we propose an energy-based parameterization of diffusion models which enables the use of new compositional operators and more sophisticated, Metropolis-corrected samplers. Intriguingly we find these samplers lead to notable improvements in compositional generation across a wide set of problems such as classifier-guided ImageNet modeling and compositional text-to-image generation.
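
The abstract mentions composing models through energies and sampling with Metropolis-corrected samplers. The sketch below illustrates that idea on a toy problem only; it is not the authors' code. It assumes two hand-written 2D Gaussian energies in place of learned diffusion models, works at a single noise level (no annealing over diffusion time), and uses the Metropolis-adjusted Langevin algorithm (MALA) as the corrected sampler. The function names `gaussian_energy`, `compose_product`, and `mala` are hypothetical.

```python
import numpy as np

def gaussian_energy(mean, std):
    """Toy stand-in for a learned energy: E(x) = ||x - mean||^2 / (2 std^2)."""
    mean = np.asarray(mean, dtype=float)
    def energy(x):
        return 0.5 * np.sum((x - mean) ** 2, axis=-1) / std**2
    def grad(x):
        return (x - mean) / std**2
    return energy, grad

def compose_product(components):
    """Product of distributions: energies add, and so do their gradients."""
    def energy(x):
        return sum(e(x) for e, _ in components)
    def grad(x):
        return sum(g(x) for _, g in components)
    return energy, grad

def mala(energy, grad, x0, step, n_steps, rng):
    """Metropolis-adjusted Langevin sampling, run on a batch of chains."""
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        # Langevin proposal: gradient step on the energy plus Gaussian noise.
        prop = x - step * grad(x) + np.sqrt(2 * step) * rng.standard_normal(x.shape)
        # Log proposal densities (up to a shared constant), forward and reverse.
        log_q_fwd = -np.sum((prop - x + step * grad(x)) ** 2, axis=-1) / (4 * step)
        log_q_rev = -np.sum((x - prop + step * grad(prop)) ** 2, axis=-1) / (4 * step)
        # The acceptance test needs energy values, not only gradients.
        log_accept = energy(x) - energy(prop) + log_q_rev - log_q_fwd
        accept = np.log(rng.uniform(size=x.shape[0])) < log_accept
        x[accept] = prop[accept]
    return x

rng = np.random.default_rng(0)
energy, grad = compose_product([
    gaussian_energy([-1.0, 0.0], 1.0),
    gaussian_energy([1.0, 0.0], 0.5),
])
samples = mala(energy, grad, rng.standard_normal((2000, 2)),
               step=0.05, n_steps=500, rng=rng)
# Analytic product of the two Gaussians: mean (0.6, 0), variance 0.2 per axis.
print(samples.mean(axis=0), samples.var(axis=0))
```

Because the product of two Gaussians is itself Gaussian, the sample statistics can be checked against the closed-form mean and variance. With learned models one would, presumably, replace the toy energies with network outputs and repeat the sampling at each noise level.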

Paper link: https://arxiv.org/abs/2302.11552
