Revisiting Activation Function Design for Improving Adversarial Robustness at Scale

C Xie, M Tan, B Gong, A Yuille, Q V Le

[University of California, Santa Cruz & Google & Johns Hopkins University]

Improving activation function design for better adversarial robustness at scale

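Key points:

- Smooth activation functions improve adversarial robustness without sacrificing accuracy in large-scale image classification;
- Replacing ReLU with smooth alternatives substantially improves adversarial robustness on ImageNet. EfficientNet-L1 achieves state-of-the-art results, and smooth activation functions scale well to larger networks;
- Using smooth activation functions in adversarial training makes it better at finding harder adversarial examples and at computing gradient updates for network optimization, which improves robustness at scale;
- The work highlights the importance of activation function design for adversarial robustness and encourages more researchers to explore this direction in large-scale image classification.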
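The two mechanisms in the adversarial-training point match the standard PGD-based min-max formulation of adversarial training. It is written here in generic notation: the L∞ budget ε, step size α, number of attack steps T, learning rate η and loss 𝓛 are placeholders, not the paper's hyperparameters. The inner maximization produces the adversarial examples, and the outer minimization is the network's gradient update. Both gradients flow through the activation derivative φ′, which is a step function for ReLU and a smooth function for SILU.

$$
\min_{\theta}\; \mathbb{E}_{(x,y)\sim\mathcal{D}}\Big[\max_{\|\delta\|_\infty\le\epsilon} \mathcal{L}\big(f_\theta(x+\delta),\,y\big)\Big]
$$

$$
\delta^{(t+1)} = \Pi_{\|\delta\|_\infty\le\epsilon}\Big(\delta^{(t)} + \alpha\,\operatorname{sign}\big(\nabla_x \mathcal{L}(f_\theta(x+\delta^{(t)}),\,y)\big)\Big),
\qquad
\theta \leftarrow \theta - \eta\,\nabla_\theta \mathcal{L}\big(f_\theta(x+\delta^{(T)}),\,y\big)
$$

$$
\mathrm{ReLU}'(z) = \mathbf{1}[z>0],
\qquad
\mathrm{SILU}'(z) = \sigma(z)\big(1 + z\,(1-\sigma(z))\big)
$$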

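One-sentence summary:

Replacing ReLU with a smooth activation function such as SILU improves adversarial robustness without sacrificing accuracy, and the approach works well in large networks such as EfficientNet-L1.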
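The sketch below makes "smooth" concrete. It implements ReLU and SILU, where SILU(x) = x·σ(x), together with their derivatives in plain Python. The function names are illustrative and are not taken from the released models. ReLU's derivative jumps from 0 to 1 at x = 0; this sketch uses 0 at exactly x = 0, a common convention. SILU's derivative, σ(x)(1 + x(1 − σ(x))), is continuous and equals 0.5 at x = 0.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relu(x: float) -> float:
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Derivative of ReLU: 0 for x < 0, 1 for x > 0 (0 is used at x == 0 by convention).
    return 1.0 if x > 0 else 0.0


def silu(x: float) -> float:
    # SILU (also written SiLU; Swish with beta = 1): x * sigmoid(x).
    return x * sigmoid(x)


def silu_grad(x: float) -> float:
    # d/dx [x * s(x)] = s(x) + x * s(x) * (1 - s(x)) = s(x) * (1 + x * (1 - s(x)))
    s = sigmoid(x)
    return s * (1.0 + x * (1.0 - s))


if __name__ == "__main__":
    # Compare the two derivatives just left and right of 0.
    for x in (-1e-3, 1e-3):
        print(f"x={x:+.0e}  ReLU'={relu_grad(x):.4f}  SILU'={silu_grad(x):.4f}")
```

Because SILU′ varies smoothly, a small change in a pre-activation produces only a small change in the gradient. This is the smoothness property that the abstract contrasts with ReLU's non-smooth behavior.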

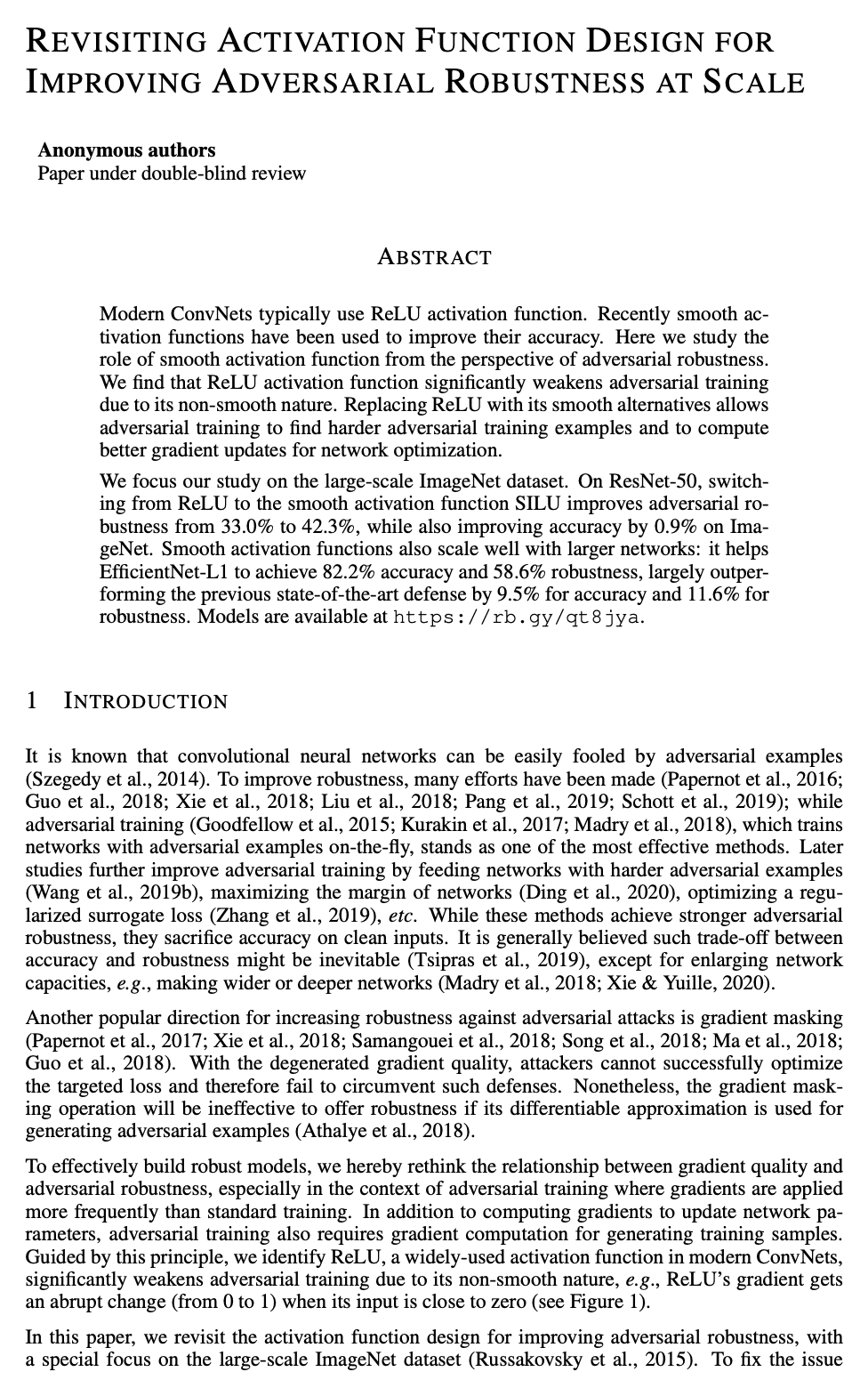

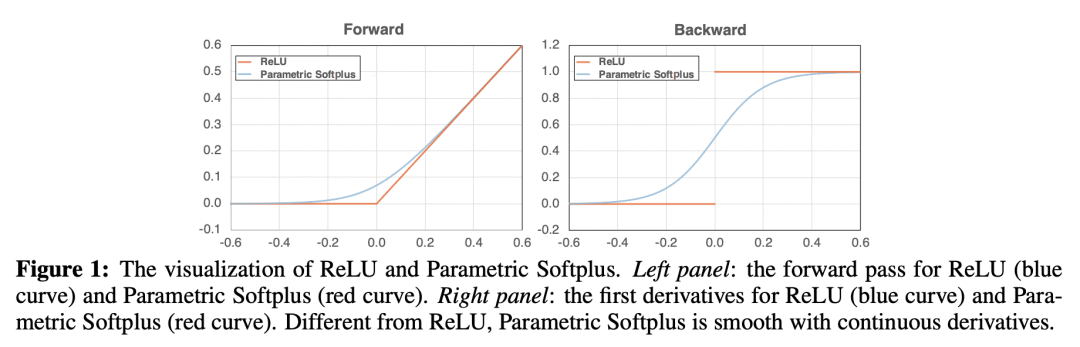

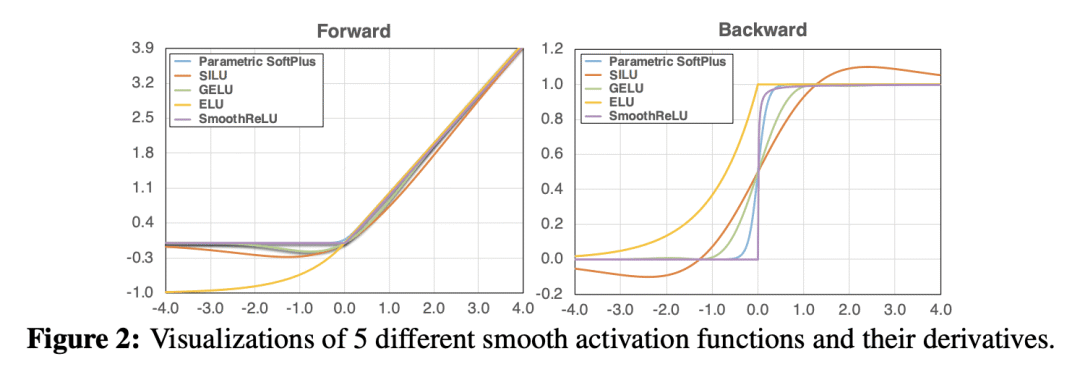

Modern ConvNets typically use the ReLU activation function. Recently, smooth activation functions have been used to improve their accuracy. Here we study the role of smooth activation functions from the perspective of adversarial robustness. We find that the ReLU activation function significantly weakens adversarial training due to its non-smooth nature. Replacing ReLU with its smooth alternatives allows adversarial training to find harder adversarial training examples and to compute better gradient updates for network optimization. We focus our study on the large-scale ImageNet dataset. On ResNet-50, switching from ReLU to the smooth activation function SILU improves adversarial robustness from 33.0% to 42.3%, while also improving accuracy by 0.9% on ImageNet. Smooth activation functions also scale well with larger networks: they help EfficientNet-L1 achieve 82.2% accuracy and 58.6% robustness, outperforming the previous state-of-the-art defense by large margins of 9.5% in accuracy and 11.6% in robustness. Models are available at https://rb.gy/qt8jya.
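The following toy, standard-library-only sketch shows where the activation enters the inner step of adversarial training. It runs an L∞ PGD attack against a hypothetical one-hidden-layer binary classifier. `TinyNet`, `pgd_linf`, the weights, ε, α and the step count are all illustrative assumptions, not the paper's ResNet-50 or EfficientNet setup. The weights are contrived so that both hidden ReLU units are inactive at the clean input. As a result, the ReLU input gradient is exactly zero and PGD cannot move. The SILU version still receives a nonzero gradient and finds a higher-loss point. This is an extreme case chosen for clarity, not a reproduction of the paper's experiments.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softplus(z: float) -> float:
    # log(1 + exp(z)) computed without overflow.
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def relu(z: float) -> float:
    return max(0.0, z)


def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0


def silu(z: float) -> float:
    return z * sigmoid(z)


def silu_grad(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 + z * (1.0 - s))


class TinyNet:
    """Hypothetical one-hidden-layer binary classifier: logit = v . act(W x + b) + c."""

    def __init__(self, W: List[Vector], b: Vector, v: Vector, c: float,
                 act: Callable[[float], float], act_grad: Callable[[float], float]):
        self.W, self.b, self.v, self.c = W, b, v, c
        self.act, self.act_grad = act, act_grad

    def _pre(self, x: Vector) -> Vector:
        # Hidden pre-activations W x + b.
        return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(self.W, self.b)]

    def loss_and_input_grad(self, x: Vector, y: int) -> Tuple[float, Vector]:
        """Binary cross-entropy (label y in {0, 1}) and its gradient w.r.t. the input x."""
        pre = self._pre(x)
        logit = sum(vi * self.act(p) for vi, p in zip(self.v, pre)) + self.c
        loss = softplus(logit) - y * logit  # BCE expressed in terms of the logit
        dlogit = sigmoid(logit) - y         # dLoss/dlogit
        # Chain rule: dlogit/dx_j = sum_i v_i * act'(pre_i) * W_ij
        grad = [
            dlogit * sum(self.v[i] * self.act_grad(pre[i]) * self.W[i][j] for i in range(len(pre)))
            for j in range(len(x))
        ]
        return loss, grad


def pgd_linf(net: TinyNet, x: Vector, y: int, eps: float, alpha: float, steps: int) -> Vector:
    """L-infinity PGD: step along sign(grad), then project delta back into [-eps, eps]."""
    delta = [0.0] * len(x)
    for _ in range(steps):
        x_adv = [xi + di for xi, di in zip(x, delta)]
        _, g = net.loss_and_input_grad(x_adv, y)
        delta = [
            max(-eps, min(eps, d + alpha * (0.0 if gi == 0 else math.copysign(1.0, gi))))
            for d, gi in zip(delta, g)
        ]
    return [xi + di for xi, di in zip(x, delta)]


if __name__ == "__main__":
    # Contrived weights: at x = (0, 0) both hidden pre-activations equal -1,
    # so every ReLU unit is inactive there.
    W, b, v, c = [[1.0, 0.5], [0.5, 1.0]], [-1.0, -1.0], [1.0, 1.0], 0.0
    x, y = [0.0, 0.0], 1
    for name, act, grad in [("ReLU", relu, relu_grad), ("SILU", silu, silu_grad)]:
        net = TinyNet(W, b, v, c, act, grad)
        clean, _ = net.loss_and_input_grad(x, y)
        adv, _ = net.loss_and_input_grad(pgd_linf(net, x, y, eps=0.1, alpha=0.025, steps=10), y)
        print(f"{name}: clean loss {clean:.4f}, PGD loss {adv:.4f}")
```

Swapping `act`/`act_grad` is the only difference between the two runs, mirroring a drop-in replacement of ReLU. In a real pipeline an autodiff framework would compute these gradients, and the adversarial examples would then feed the outer weight update.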

https://openreview.net/forum?id=BrKY4Wr6dk2
