Fair Diffusion: Instructing Text-to-Image Generation Models on Fairness

Felix Friedrich, Patrick Schramowski, Manuel Brack, Lukas Struppek, Dominik Hintersdorf, Sasha Luccioni, Kristian Kersting

Technical University of Darmstadt, Hugging Face


Key points:

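1. Generative AI models have recently achieved astonishing results in quality and are consequently employed in a fast-growing number of applications. However, because they are highly data-driven and rely on billion-sized datasets randomly scraped from the internet, they also suffer from degenerated and biased human behavior. They may even reinforce such biases.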

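2. To not only uncover but also combat these undesired effects, the authors propose a novel strategy called Fair Diffusion, which attenuates biases after a generative text-to-image model has been deployed. The paper:

(i) examines and measures the sources of (gender-occupation) bias, using Stable Diffusion as an example;

(ii) proposes and evaluates a novel strategy, Fair Diffusion, to overcome and mitigate unfair model outcomes;

(iii) discusses future directions for fair diffusion models, in particular how they can be integrated into society to directly promote fairness, with the user in control.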
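Point (i) boils down to counting how often each group appears among the images generated for an occupation prompt. The Python sketch below shows only that bookkeeping. It assumes each generated image has already received a (binary) gender label from some classifier or annotator, which the summary does not specify; the helper names `group_shares` and `parity_gap`, the 50% parity target, and the toy labels are hypothetical illustrations, not data or metrics from the paper.

```python
from collections import Counter


def group_shares(labels):
    """Fraction of generated images assigned to each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one labelled image")
    return {label: n / total for label, n in counts.items()}


def parity_gap(labels, group, target=0.5):
    """Absolute distance of one group's share from a target share (default: 50% parity)."""
    return abs(group_shares(labels).get(group, 0.0) - target)


# Toy labels for two occupation prompts; invented for illustration, not results
toy = {
    "firefighter": ["male"] * 9 + ["female"] * 1,
    "nurse": ["female"] * 8 + ["male"] * 2,
}
for occupation, labels in toy.items():
    share = group_shares(labels).get("female", 0.0)
    print(f"{occupation}: female share {share:.2f}, gap to parity {parity_gap(labels, 'female'):.2f}")
# firefighter: female share 0.10, gap to parity 0.40
# nurse: female share 0.80, gap to parity 0.30
```

A larger gap means the prompt's outputs deviate further from the chosen target; the target can be set to something other than parity if a different reference distribution is wanted.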

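One-sentence summary:

Specifically, the paper demonstrates shifting a bias in any direction based on human instructions, yielding arbitrary new proportions for, e.g., identity groups. As the empirical evaluation shows, this introduced control makes it possible to instruct generative image models on fairness, without requiring data filtering or additional training.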
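One simple way to picture "arbitrary new proportions" is to decide, per generated image, which instruction to apply, drawing each instruction with probability equal to its target share. The sketch below assumes exactly that scheme; the function name `assign_edit_instructions` and the instruction strings are hypothetical, and the summary does not say how Fair Diffusion actually schedules its instructions. Over many images the instruction frequencies approach the requested proportions, which would carry over to the generated identity groups only if each instruction reliably shifts its image.

```python
import random
from collections import Counter


def assign_edit_instructions(target, n, seed=None):
    """Draw one edit instruction per image (hypothetical scheduling sketch).

    target: mapping instruction -> desired proportion (weights are normalised,
            so they need not sum to 1)
    n:      number of images to generate
    """
    total = sum(target.values())
    if total <= 0 or any(w < 0 for w in target.values()):
        raise ValueError("target proportions must be non-negative and not all zero")
    rng = random.Random(seed)
    instructions = list(target)
    weights = [target[name] / total for name in instructions]
    # Independent weighted draws: the expected share of each instruction equals its weight
    return rng.choices(instructions, weights=weights, k=n)


# Request a 30/70 split between two illustrative instructions
plan = assign_edit_instructions({"female person": 0.3, "male person": 0.7}, n=1000, seed=42)
counts = Counter(plan)
print({name: counts[name] / len(plan) for name in ("female person", "male person")})
```

Independent draws keep the scheme stateless, at the cost of some sampling noise for small batches; a deterministic quota (e.g. exactly 300 of 1000) would be the alternative if exact proportions per batch mattered.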


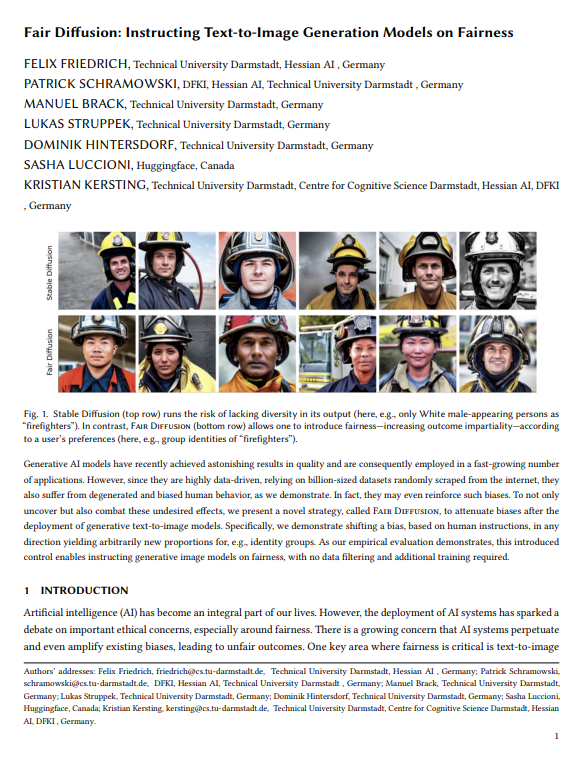

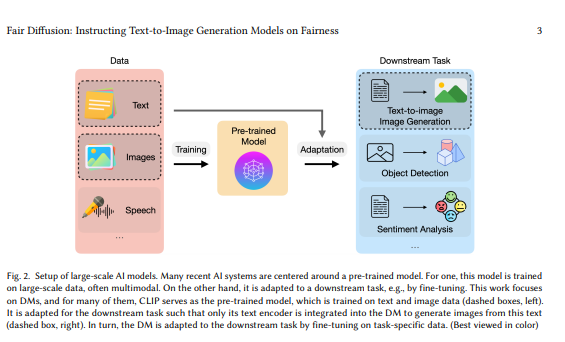

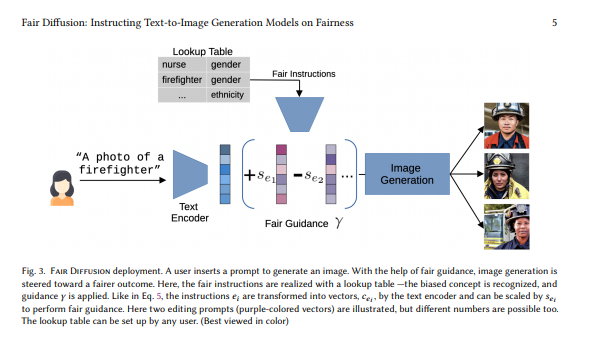

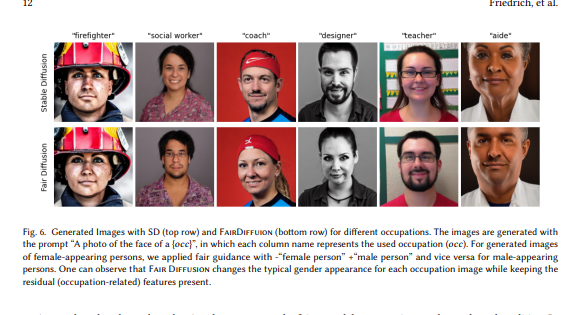

Generative AI models have recently achieved astonishing results in quality and are consequently employed in a fast-growing number of applications. However, since they are highly data-driven, relying on billion-sized datasets randomly scraped from the internet, they also suffer from degenerated and biased human behavior, as we demonstrate. In fact, they may even reinforce such biases. To not only uncover but also combat these undesired effects, we present a novel strategy, called Fair Diffusion, to attenuate biases after the deployment of generative text-to-image models. Specifically, we demonstrate shifting a bias, based on human instructions, in any direction, yielding arbitrary new proportions for, e.g., identity groups. As our empirical evaluation demonstrates, this introduced control enables instructing generative image models on fairness, with no data filtering or additional training required.
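The abstract does not describe how an instruction enters the generation process. As a hedged illustration only, the sketch below assumes it is applied at each denoising step as an extra guidance term on top of classifier-free guidance, in the spirit of semantic guidance (SEGA): the edit concept's noise estimate pulls the prediction toward that concept, or pushes it away when the direction is reversed. Plain Python lists stand in for the model's noise tensors, SEGA's warm-up, thresholding and momentum are omitted, and the function `guided_noise` and its default scales are hypothetical.

```python
def guided_noise(eps_uncond, eps_prompt, eps_edit,
                 guidance_scale=7.5, edit_scale=5.0, reverse=False):
    """Combine noise estimates element-wise (hypothetical sketch).

    eps_uncond: prediction for the empty prompt
    eps_prompt: prediction for the user prompt, e.g. "a photo of a firefighter"
    eps_edit:   prediction for the edit concept, e.g. "female person"
    reverse:    if True, steer away from the edit concept instead of toward it
    """
    if not (len(eps_uncond) == len(eps_prompt) == len(eps_edit)):
        raise ValueError("noise estimates must have the same length")
    sign = -1.0 if reverse else 1.0
    return [
        # classifier-free guidance term + signed edit-guidance term
        u + guidance_scale * (p - u) + sign * edit_scale * (e - u)
        for u, p, e in zip(eps_uncond, eps_prompt, eps_edit)
    ]


# Toy 2-element "noise" vectors, purely illustrative
print(guided_noise([0.0, 0.0], [1.0, 0.0], [0.0, 1.0], guidance_scale=2.0, edit_scale=3.0))
# [2.0, 3.0]
print(guided_noise([0.0, 0.0], [1.0, 0.0], [0.0, 1.0], guidance_scale=2.0, edit_scale=3.0, reverse=True))
# [2.0, -3.0]
```

In this sketch, setting `edit_scale=0` recovers plain classifier-free guidance, so the edit is purely an inference-time adjustment; that is consistent with the abstract's point that no data filtering or retraining is needed, though the paper's actual guidance may differ in detail.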

https://arxiv.org/pdf/2302.10893.pdf
