Edgeformers: Graph-Empowered Transformers for Representation Learning on Textual-Edge Networks

Bowen Jin, Yu Zhang, Yu Meng, Jiawei Han

Department of Computer Science, University of Illinois at Urbana-Champaign

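Key points:

1. Edges in many real-world social/information networks are associated with rich text information (e.g., user-user communications or user-product reviews). However, mainstream network representation learning models focus on propagating and aggregating node attributes and lack specific designs for exploiting the text semantics on edges. Edge-aware graph neural networks do exist, but they directly initialize edge attributes as a feature vector, which cannot fully capture the contextualized text semantics of edges.

2. The paper proposes Edgeformers, a framework built upon graph-enhanced Transformers, which performs edge and node representation learning by modeling the texts on edges in a contextualized way.

- Conceptually, the paper identifies the importance of modeling text information on network edges and formulates the problem of representation learning on textual-edge networks.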

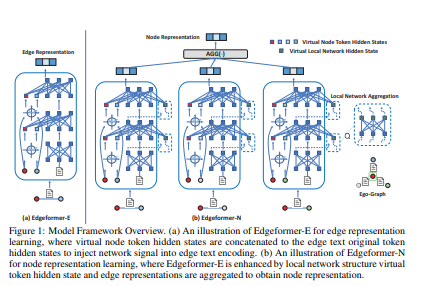

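- Methodologically, it proposes Edgeformers (i.e., Edgeformer-E and Edgeformer-N), two graph-enhanced Transformer architectures that deeply couple network and text information in a contextualized way for edge and node representation learning.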

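- Empirically, experiments on five public datasets from different domains show that Edgeformers outperform a variety of baselines, including node-centric GNNs, edge-aware GNNs, and PLM-GNN cascaded architectures.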

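One-sentence summary:

Specifically, for edge representation learning, network information is injected into each Transformer layer while the edge text is encoded; for node representation learning, edge representations are aggregated through an attention mechanism within each node's ego-graph. On five public datasets from three different domains, Edgeformers consistently outperform state-of-the-art baselines in edge classification and link prediction, demonstrating their effectiveness at learning edge and node representations, respectively.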
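To make the idea of injecting network information into each Transformer layer more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the post does not say how the injection is done, so the sketch assumes that the embeddings of an edge's two endpoint nodes are added as two extra "virtual" tokens that the text tokens can attend to. The function name `network_aware_attention`, the single-head formulation, and the weights shared across layers are all hypothetical simplifications.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def network_aware_attention(text_hidden, node_u, node_v, w_q, w_k, w_v):
    """Single-head attention in which edge-text tokens also attend to two
    virtual tokens built from the endpoint node embeddings (an assumption).

    text_hidden: (seq_len, d) hidden states of the edge-text tokens
    node_u, node_v: (d,) embeddings of the edge's two endpoint nodes
    """
    # Keys and values cover [node_u, node_v, text tokens];
    # queries come from the text tokens only.
    context = np.vstack([node_u, node_v, text_hidden])  # (seq_len + 2, d)
    q = text_hidden @ w_q
    k = context @ w_k
    v = context @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)                  # (seq_len, seq_len + 2)
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d = 5, 8
text_hidden = rng.normal(size=(seq_len, d))
node_u, node_v = rng.normal(size=d), rng.normal(size=d)
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))

hidden = text_hidden
for _ in range(2):  # the node tokens are re-injected at every layer
    hidden, weights = network_aware_attention(hidden, node_u, node_v, w_q, w_k, w_v)
```

The point of the sketch is only that the node information enters inside every layer's attention, so the text encoding is conditioned on the network at each step rather than being combined with it after the text has already been encoded.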

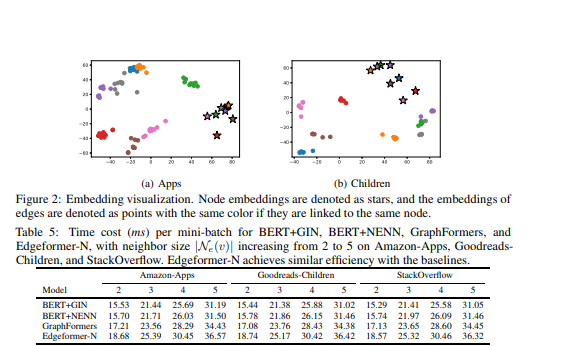

Edges in many real-world social/information networks are associated with rich text information (e.g., user-user communications or user-product reviews). However, mainstream network representation learning models focus on propagating and aggregating node attributes, lacking specific designs to utilize text semantics on edges. While there exist edge-aware graph neural networks, they directly initialize edge attributes as a feature vector, which cannot fully capture the contextualized text semantics of edges. In this paper, we propose Edgeformers, a framework built upon graph-enhanced Transformers, to perform edge and node representation learning by modeling texts on edges in a contextualized way. Specifically, in edge representation learning, we inject network information into each Transformer layer when encoding edge texts; in node representation learning, we aggregate edge representations through an attention mechanism within each node's ego-graph. On five public datasets from three different domains, Edgeformers consistently outperform state-of-the-art baselines in edge classification and link prediction, demonstrating the efficacy in learning edge and node representations, respectively.
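The node-side step in the abstract, aggregating edge representations through attention within a node's ego-graph, can be sketched in a few lines of NumPy. This is a hedged illustration only: the abstract does not specify the form of the attention, so the sketch assumes simple dot-product attention pooling with a single query vector. The names `aggregate_ego_graph` and `query` are hypothetical.

```python
import numpy as np

def aggregate_ego_graph(edge_reprs, query):
    """Attention-pool the representations of the edges incident to one node.

    edge_reprs: (num_edges, d) representations of the node's incident edges
    query: (d,) attention query vector (assumed; learnable in a real model)
    """
    scores = edge_reprs @ query / np.sqrt(edge_reprs.shape[-1])
    scores = scores - scores.max()             # numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()
    node_repr = weights @ edge_reprs           # weighted sum, shape (d,)
    return node_repr, weights

rng = np.random.default_rng(0)
edge_reprs = rng.normal(size=(4, 8))           # 4 incident edges, dimension 8
query = rng.normal(size=8)
node_repr, weights = aggregate_ego_graph(edge_reprs, query)
```

With an all-zero query every edge receives the same weight and the result reduces to mean pooling, which makes clear what the attention adds: edges that are more relevant to the node can contribute more to its representation.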

https://arxiv.org/abs/2302.11050
