Tuning computer vision models with task rewards

André Susano Pinto, Alexander Kolesnikov, Yuge Shi, Lucas Beyer, Xiaohua Zhai

Google Research,Brain Team Zurich

使用任务奖励调整计算机视觉模型

要点:

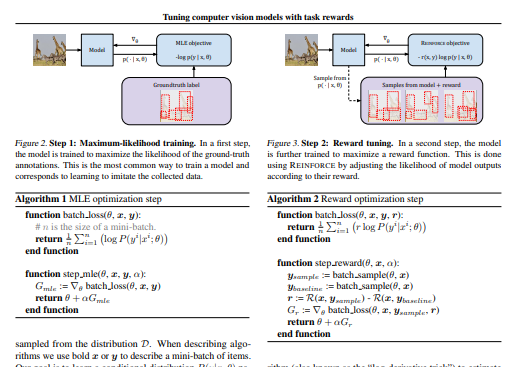

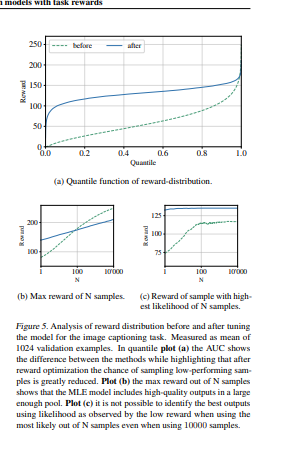

1.模型预测和预期用途之间的不一致可能对计算机视觉模型的部署不利。当任务涉及复杂的结构化输出时,问题就更加严重,因为设计解决这种不一致的程序变得更加困难。在自然语言处理中,这通常是使用强化学习技术来解决的,该技术将模型与任务奖励相匹配。

2.文章证明,使用REAR调整具有奖励函数的预训练模型对于广泛的计算机视觉任务来说是开箱即用的。

3.强调目标检测、全景分割和图像着色的奖励优化带来的定量和定性改进。在一组不同的计算机视觉任务上的方法的简单性和有效性证明了它的通用性和适应性。

4.尽管在这项工作中,主要以评估指标的形式使用奖励,这些初步结果显示了优化计算机视觉模型的有希望的途径,具有更复杂和更难指定的奖励,例如人类反馈或整体系统性能。

一句话总结:

采用这种方法,并在多个计算机视觉任务中显示了其惊人的有效性,如目标检测、全景分割、彩色化和图像字幕。这种方法有可能广泛适用于更好地将模型与各种计算机视觉任务对齐。

Misalignment between model predictions and intended usage can be detrimental for the deployment of computer vision models. The issue is exacerbated when the task involves complex structured outputs, as it becomes harder to design procedures which address this misalignment. In natural language processing, this is often addressed using reinforcement learning techniques that align models with a task reward. We adopt this approach and show its surprising effectiveness across multiple computer vision tasks, such as object detection, panoptic segmentation, colorization and image captioning. We believe this approach has the potential to be widely useful for better aligning models with a diverse range of computer vision tasks.

https://arxiv.org/pdf/2302.08242.pdf

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢