Improving Adaptive Conformal Prediction Using Self-Supervised Learning

Nabeel Seedat, Alan Jeffares, Fergus Imrie, Mihaela van der Schaar

University of Cambridge & University of California

Key points:

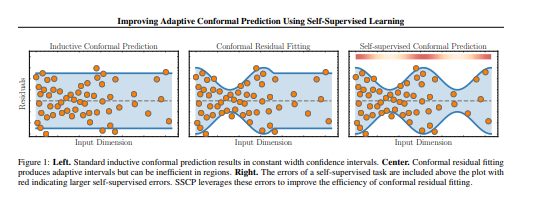

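Conformal prediction is a powerful distribution-free tool for uncertainty quantification that yields valid prediction intervals with finite-sample guarantees. To produce valid intervals that also adapt to the difficulty of each instance, a common approach is to compute normalized nonconformity scores on a separate calibration set. Self-supervised learning has been used effectively in many domains to learn general representations for downstream predictors, but its use beyond model pretraining and representation learning remains largely unexplored. This work investigates how self-supervised pretext tasks can improve the quality of conformal regressors, specifically by improving the adaptivity of conformal intervals.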
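To make the idea of normalized nonconformity scores concrete, here is a minimal sketch of split conformal regression in Python. It assumes a point predictor and a positive per-instance difficulty estimate are already available; the function name `normalized_conformal_intervals` and its arguments are illustrative and do not come from the paper.

```python
import numpy as np

def normalized_conformal_intervals(mu_cal, y_cal, sigma_cal,
                                   mu_test, sigma_test, alpha=0.1):
    """Split conformal intervals from normalized nonconformity scores.

    mu_*    : point predictions of an already-fitted regressor
    sigma_* : positive per-instance difficulty estimates (for example,
              predicted absolute residuals); both are assumed inputs here.
    """
    mu_cal, y_cal, sigma_cal = map(np.asarray, (mu_cal, y_cal, sigma_cal))
    mu_test, sigma_test = np.asarray(mu_test), np.asarray(sigma_test)

    # Normalized nonconformity score: absolute error scaled by difficulty.
    scores = np.abs(y_cal - mu_cal) / sigma_cal

    # Finite-sample quantile: the k-th smallest score, k = ceil((n+1)(1-alpha)).
    n = len(scores)
    k = int(np.ceil((n + 1) * (1 - alpha)))
    q = np.inf if k > n else np.sort(scores)[k - 1]

    # Interval half-width scales with the difficulty estimate of each test point.
    return mu_test - q * sigma_test, mu_test + q * sigma_test
```

Because the calibrated quantile is multiplied by `sigma_test`, test points judged harder receive wider intervals and easier points receive narrower ones, which is what adaptivity refers to here.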

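Contributions:

- Self-supervision improves residual estimation, especially in challenging and sparse regions: we show empirically that a self-supervised loss improves the residual estimates, and thereby the adaptivity of the prediction intervals, particularly for more challenging examples and sparser regions.

- Labeled data can be repurposed: we show empirically that labeled data can be reused for the self-supervised task to improve the quality of conformal prediction intervals.

- Unlabeled data improves CP: we demonstrate empirically the value of unlabeled data for improving the quality of conformal prediction intervals, beyond the standard use of self-supervision for representation learning.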

One-sentence summary:

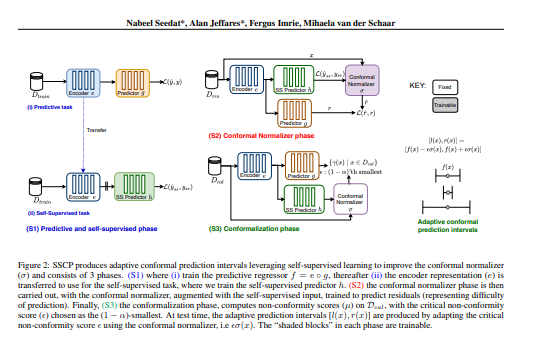

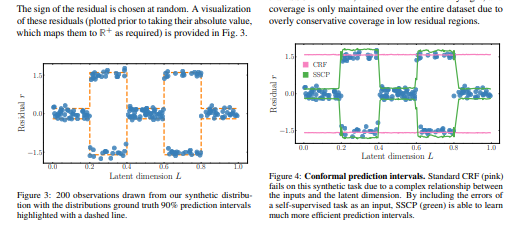

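An auxiliary model with a self-supervised pretext task is trained on top of an existing predictive model, and its self-supervised error is used as an additional feature for estimating nonconformity scores. Experiments on both synthetic and real data demonstrate the benefit of this additional information in terms of the efficiency (width), deficit, and excess of the conformal prediction intervals.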
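The pipeline described above can be sketched roughly as follows. This is not the authors' implementation: the pretext task (linearly reconstructing one masked feature from the others), the use of least-squares linear models throughout, and all names such as `sscp_intervals` and `X_pretext` are assumptions made for illustration.

```python
import numpy as np

def fit_linear(X, y):
    """Least-squares linear model with intercept; returns a predict function."""
    A = np.column_stack([X, np.ones(len(X))])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return lambda Xn: np.column_stack([Xn, np.ones(len(Xn))]) @ w

def fit_self_supervised_error(X_fit, masked_col=0):
    """Assumed pretext task: reconstruct one masked feature from the rest.

    Needs no labels, so X_fit may include unlabeled data.
    Returns a function giving the absolute reconstruction error per row.
    """
    rest = [j for j in range(X_fit.shape[1]) if j != masked_col]
    recon = fit_linear(X_fit[:, rest], X_fit[:, masked_col])
    return lambda Xn: np.abs(Xn[:, masked_col] - recon(Xn[:, rest]))

def sscp_intervals(X_tr, y_tr, X_cal, y_cal, X_te,
                   X_pretext=None, alpha=0.1, eps=1e-3):
    # 1. Existing predictive model (here simply a linear regressor).
    mu = fit_linear(X_tr, y_tr)

    # 2. Auxiliary self-supervised model; its error becomes an extra feature.
    ss_err = fit_self_supervised_error(X_tr if X_pretext is None else X_pretext)
    augment = lambda Xn: np.column_stack([Xn, ss_err(Xn)])

    # 3. Residual model on the augmented features estimates |y - mu(x)|.
    sigma_raw = fit_linear(augment(X_tr), np.abs(y_tr - mu(X_tr)))
    sigma = lambda Xn: np.maximum(sigma_raw(augment(Xn)), eps)  # keep positive

    # 4. Calibrate normalized nonconformity scores on the held-out set.
    scores = np.abs(y_cal - mu(X_cal)) / sigma(X_cal)
    n = len(scores)
    k = int(np.ceil((n + 1) * (1 - alpha)))
    q = np.inf if k > n else np.sort(scores)[k - 1]

    # 5. Adaptive intervals for the test points.
    return mu(X_te) - q * sigma(X_te), mu(X_te) + q * sigma(X_te)
```

In this sketch the self-supervised error only changes how the difficulty estimate `sigma` is formed; the calibration step is standard split conformal prediction, so the validity argument does not rely on the pretext task being informative. Passing extra rows through the hypothetical `X_pretext` argument shows where unlabeled data could enter.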

Conformal prediction is a powerful distribution-free tool for uncertainty quantification, establishing valid prediction intervals with finite-sample guarantees. To produce valid intervals which are also adaptive to the difficulty of each instance, a common approach is to compute normalized nonconformity scores on a separate calibration set. Self-supervised learning has been effectively utilized in many domains to learn general representations for downstream predictors. However, the use of self-supervision beyond model pretraining and representation learning has been largely unexplored. In this work, we investigate how self-supervised pretext tasks can improve the quality of the conformal regressors, specifically by improving the adaptability of conformal intervals. We train an auxiliary model with a self-supervised pretext task on top of an existing predictive model and use the self-supervised error as an additional feature to estimate nonconformity scores. We empirically demonstrate the benefit of the additional information using both synthetic and real data on the efficiency (width), deficit, and excess of conformal prediction intervals.

https://arxiv.org/pdf/2302.12238.pdf
