MultiViz: Towards Visualizing and Understanding Multimodal Models

Paul Pu Liang, Yiwei Lyu, Gunjan Chhablani, Nihal Jain, Zihao Deng, Xingbo Wang, Louis-Philippe Morency, Ruslan Salakhutdinov

Carnegie Mellon University, University of Michigan

Key points:

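1. The promise of multimodal models for real-world applications has motivated research into visualizing and understanding their internal mechanics, with the end goal of enabling stakeholders to visualize model behavior, debug models, and build trust in machine learning models. However, modern multimodal models are typically black-box neural networks, which makes their internal mechanics hard to understand. How can we visualize the internal modeling of multimodal interactions in these models?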

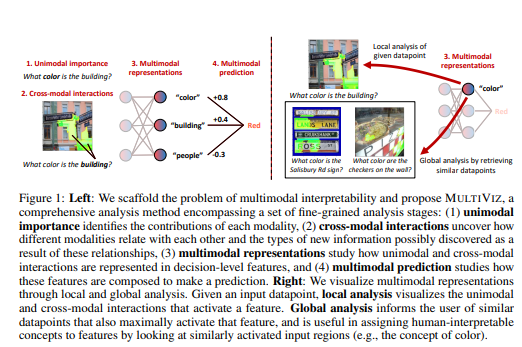

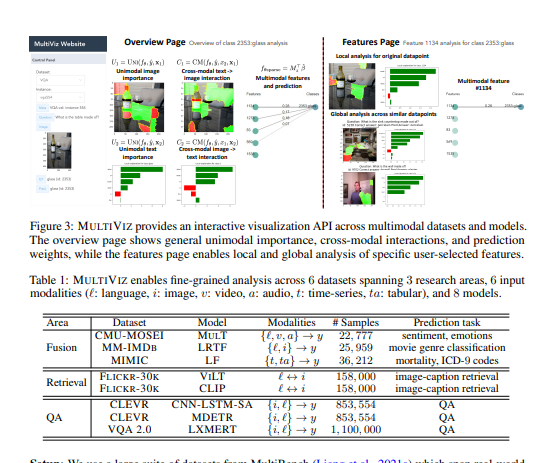

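2. The paper aims to fill this gap by proposing MultiViz, a method for analyzing the behavior of multimodal models that scaffolds the problem of interpretability into 4 stages: (1) unimodal importance: how each modality contributes to downstream modeling and prediction; (2) cross-modal interactions: how different modalities relate to each other; (3) multimodal representations: how unimodal and cross-modal interactions are represented in decision-level features; and (4) multimodal prediction: how decision-level features are composed to make a prediction. MultiViz is designed to operate across diverse modalities, models, tasks, and research areas.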
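To make stages (1) and (2) more concrete, here is a minimal Python sketch on a hypothetical toy bimodal model. It is not MultiViz's API and not necessarily the paper's estimators: all names (`toy_model`, `unimodal_importance`, `cross_modal_interaction`) are hypothetical, unimodal importance is approximated by replacing one modality with an assumed zero baseline, and the cross-modal interaction is approximated by a mixed finite difference.

```python
from typing import Callable, Dict

# A model here is any function of two scalar modality features (text t, image v).
Model = Callable[[float, float], float]


def toy_model(t: float, v: float) -> float:
    """Hypothetical bimodal model: two unimodal terms plus one cross-modal term (3*t*v)."""
    return 2.0 * t + 1.0 * v + 3.0 * t * v


def unimodal_importance(f: Model, t: float, v: float,
                        t_base: float = 0.0, v_base: float = 0.0) -> Dict[str, float]:
    """Stage (1) stand-in: drop in the prediction when one modality is set to a baseline."""
    full = f(t, v)
    return {
        "text": full - f(t_base, v),   # remove the text modality, keep the image
        "image": full - f(t, v_base),  # remove the image modality, keep the text
    }


def cross_modal_interaction(f: Model, t: float, v: float,
                            t_base: float = 0.0, v_base: float = 0.0) -> float:
    """Stage (2) stand-in: what the pair contributes beyond each modality alone.

    The mixed difference cancels every term that depends on only one modality,
    so it is zero for purely additive models.
    """
    return f(t, v) - f(t_base, v) - f(t, v_base) + f(t_base, v_base)


if __name__ == "__main__":
    print(unimodal_importance(toy_model, 1.0, 2.0))      # {'text': 8.0, 'image': 8.0}
    print(cross_modal_interaction(toy_model, 1.0, 2.0))  # 6.0
```

For the toy model the interaction score is exactly the `3*t*v` term (6.0), while each ablation score (8.0) also folds that interaction in. This is one reason to treat cross-modal interactions as a separate stage rather than reading them off unimodal scores.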

One-sentence summary:

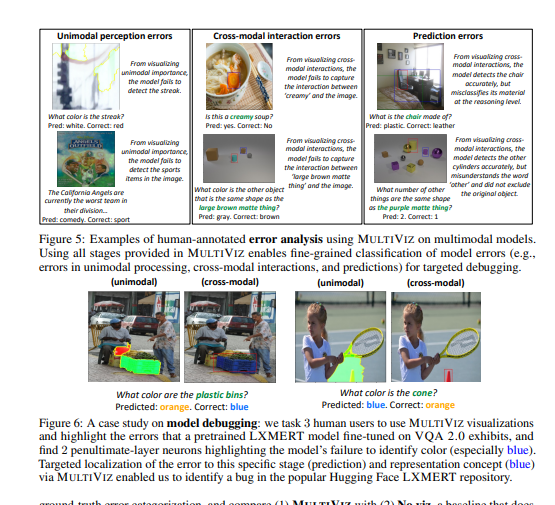

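Experiments on 8 trained models across 6 real-world tasks show that the complementary stages in MultiViz together enable users to (1) simulate model predictions, (2) assign interpretable concepts to features, (3) perform error analysis on model misclassifications, and (4) use insights from error analysis to debug models. MultiViz is publicly available, will be regularly updated with new interpretation tools and metrics, and welcomes input from the community.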

The promise of multimodal models for real-world applications has inspired research in visualizing and understanding their internal mechanics with the end goal of empowering stakeholders to visualize model behavior, perform model debugging, and promote trust in machine learning models. However, modern multimodal models are typically black-box neural networks, which makes it challenging to understand their internal mechanics. How can we visualize the internal modeling of multimodal interactions in these models? Our paper aims to fill this gap by proposing MultiViz, a method for analyzing the behavior of multimodal models by scaffolding the problem of interpretability into 4 stages: (1) unimodal importance: how each modality contributes towards downstream modeling and prediction, (2) cross-modal interactions: how different modalities relate with each other, (3) multimodal representations: how unimodal and cross-modal interactions are represented in decision-level features, and (4) multimodal prediction: how decision-level features are composed to make a prediction. MultiViz is designed to operate on diverse modalities, models, tasks, and research areas. Through experiments on 8 trained models across 6 real-world tasks, we show that the complementary stages in MultiViz together enable users to (1) simulate model predictions, (2) assign interpretable concepts to features, (3) perform error analysis on model misclassifications, and (4) use insights from error analysis to debug models. MultiViz is publicly available, will be regularly updated with new interpretation tools and metrics, and welcomes inputs from the community.

https://arxiv.org/pdf/2207.00056.pdf
