Learning Neural Volumetric Representations of Dynamic Humans in Minutes

Chen Geng, Sida Peng, Zhen Xu, Hujun Bao, Xiaowei Zhou

Zhejiang University

Key points:

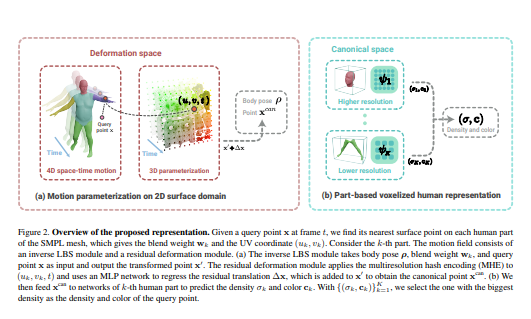

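1. This paper addresses the challenge of quickly reconstructing free-viewpoint videos of dynamic humans from sparse multi-view videos. Some recent works represent the dynamic human as a canonical neural radiance field (NeRF) and a motion field, both learned from videos through differentiable rendering, but per-scene optimization generally takes hours. Other generalizable NeRF models leverage priors learned from datasets and cut optimization time by only fine-tuning on new scenes, at the cost of visual fidelity.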

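2. The paper proposes a new method for learning neural volumetric videos of dynamic humans from sparse-view videos in minutes, with competitive visual quality. Specifically, it defines a novel part-based voxelized human representation to better distribute the network's representational power across different body parts. It also proposes a novel 2D motion parameterization scheme to speed up the convergence of deformation field learning.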
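To make the idea of a part-based voxelized representation more concrete, here is a toy sketch in plain Python. It is not the authors' implementation, and this summary does not describe their architecture. The class `PartGrid`, the function `query_body`, the bounding boxes, the resolutions, and the scalar random features are all assumptions made for illustration. The only point it shows is that each body part can own its own voxel grid, so more grid vertices (capacity) can be spent on detailed parts such as the head than on simpler ones such as the torso.

```python
import random


class PartGrid:
    """Hypothetical dense feature grid covering one body part's bounding box."""

    def __init__(self, name, lo, hi, res, seed=0):
        # lo / hi: opposite corners of an axis-aligned box; res >= 2 vertices per axis.
        self.name, self.lo, self.hi, self.res = name, lo, hi, res
        rng = random.Random(seed)
        # One scalar per vertex; a real model would store learned feature vectors.
        self.data = [[[rng.random() for _ in range(res)]
                      for _ in range(res)] for _ in range(res)]

    def contains(self, p):
        return all(l <= x <= h for x, l, h in zip(p, self.lo, self.hi))

    def query(self, p):
        """Trilinearly interpolate the stored feature at point p inside the box."""
        idx, frac = [], []
        for x, l, h in zip(p, self.lo, self.hi):
            t = (x - l) / (h - l) * (self.res - 1)  # continuous grid coordinate
            i = min(int(t), self.res - 2)           # lower corner of the cell
            idx.append(i)
            frac.append(t - i)
        out = 0.0
        for dx in (0, 1):
            for dy in (0, 1):
                for dz in (0, 1):
                    w = ((frac[0] if dx else 1 - frac[0])
                         * (frac[1] if dy else 1 - frac[1])
                         * (frac[2] if dz else 1 - frac[2]))
                    out += w * self.data[idx[0] + dx][idx[1] + dy][idx[2] + dz]
        return out


def query_body(parts, p):
    """Return (part name, feature) from the first part whose box contains p."""
    for part in parts:
        if part.contains(p):
            return part.name, part.query(p)
    return None, 0.0


# Assumed layout: the head gets a finer grid than the torso.
head = PartGrid("head", (-0.1, 1.5, -0.1), (0.1, 1.8, 0.1), res=8, seed=1)
torso = PartGrid("torso", (-0.25, 0.9, -0.15), (0.25, 1.5, 0.15), res=4, seed=2)
parts = [head, torso]

name, feature = query_body(parts, (0.0, 1.65, 0.0))
print(name, feature)
```

In this sketch a query point is routed to a single part by a bounding-box test. How the actual method assigns points to parts and blends them is not stated in this summary.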

One-sentence summary:

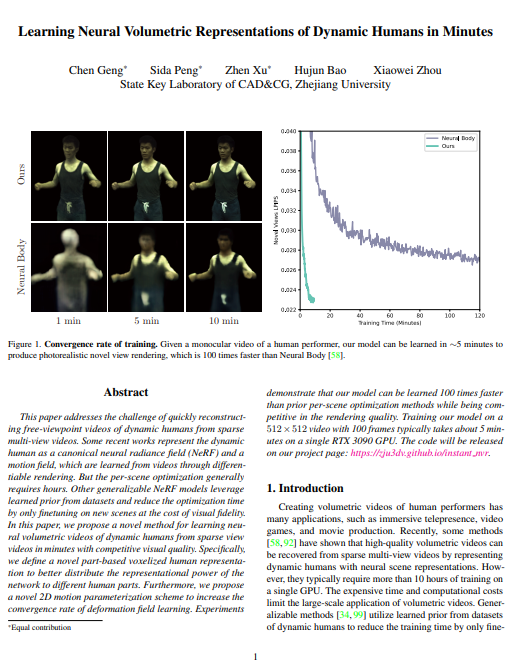

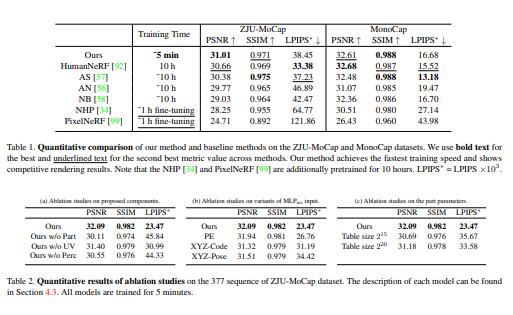

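Experiments show that the model can be learned 100 times faster than prior per-scene optimization methods while remaining competitive in rendering quality. Training the model on a 512×512 video with 100 frames typically takes about 5 minutes on a single RTX 3090 GPU. The code will be released on the project page: Learning Neural Volumetric Representations of Dynamic Humans in Minutes (zju3dv.github.io) [machine translation + human summary]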

This paper addresses the challenge of quickly reconstructing free-viewpoint videos of dynamic humans from sparse multi-view videos. Some recent works represent the dynamic human as a canonical neural radiance field (NeRF) and a motion field, which are learned from videos through differentiable rendering. But the per-scene optimization generally requires hours. Other generalizable NeRF models leverage learned prior from datasets and reduce the optimization time by only finetuning on new scenes at the cost of visual fidelity. In this paper, we propose a novel method for learning neural volumetric videos of dynamic humans from sparse view videos in minutes with competitive visual quality. Specifically, we define a novel part-based voxelized human representation to better distribute the representational power of the network to different human parts. Furthermore, we propose a novel 2D motion parameterization scheme to increase the convergence rate of deformation field learning. Experiments demonstrate that our model can be learned 100 times faster than prior per-scene optimization methods while being competitive in the rendering quality. Training our model on a 512×512 video with 100 frames typically takes about 5 minutes on a single RTX 3090 GPU.

https://arxiv.org/pdf/2302.12237.pdf
