Modulating Pretrained Diffusion Models for Multimodal Image Synthesis

C Ham, J Hays, J Lu, K K Singh, Z Zhang, T Hinz

[Georgia Institute of Technology & Adobe Research]


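Key points:

- Proposes multimodal conditioning modules (MCM), a method for conditional image synthesis with pretrained diffusion models that avoids the computationally expensive process of training a network from scratch or fine-tuning a pretrained one;

- MCM is a small trained module that modulates the diffusion network's predictions during sampling, using 2D modalities that were unseen during the diffusion model's original training;

- The method lets users control the spatial layout of the image and gives them more control over the generation process, while preserving high image quality;

- MCM is roughly 100 times smaller than the base diffusion model and is trained on only a limited number of paired examples of the new target modality to modulate the diffusion model's output during sampling, which makes it cheaper and less memory-intensive than training a large model from scratch or fine-tuning one.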

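One-sentence summary:

Pretrained diffusion models can be modulated with multimodal conditioning modules (MCM) to enable cheap and effective conditional image synthesis.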

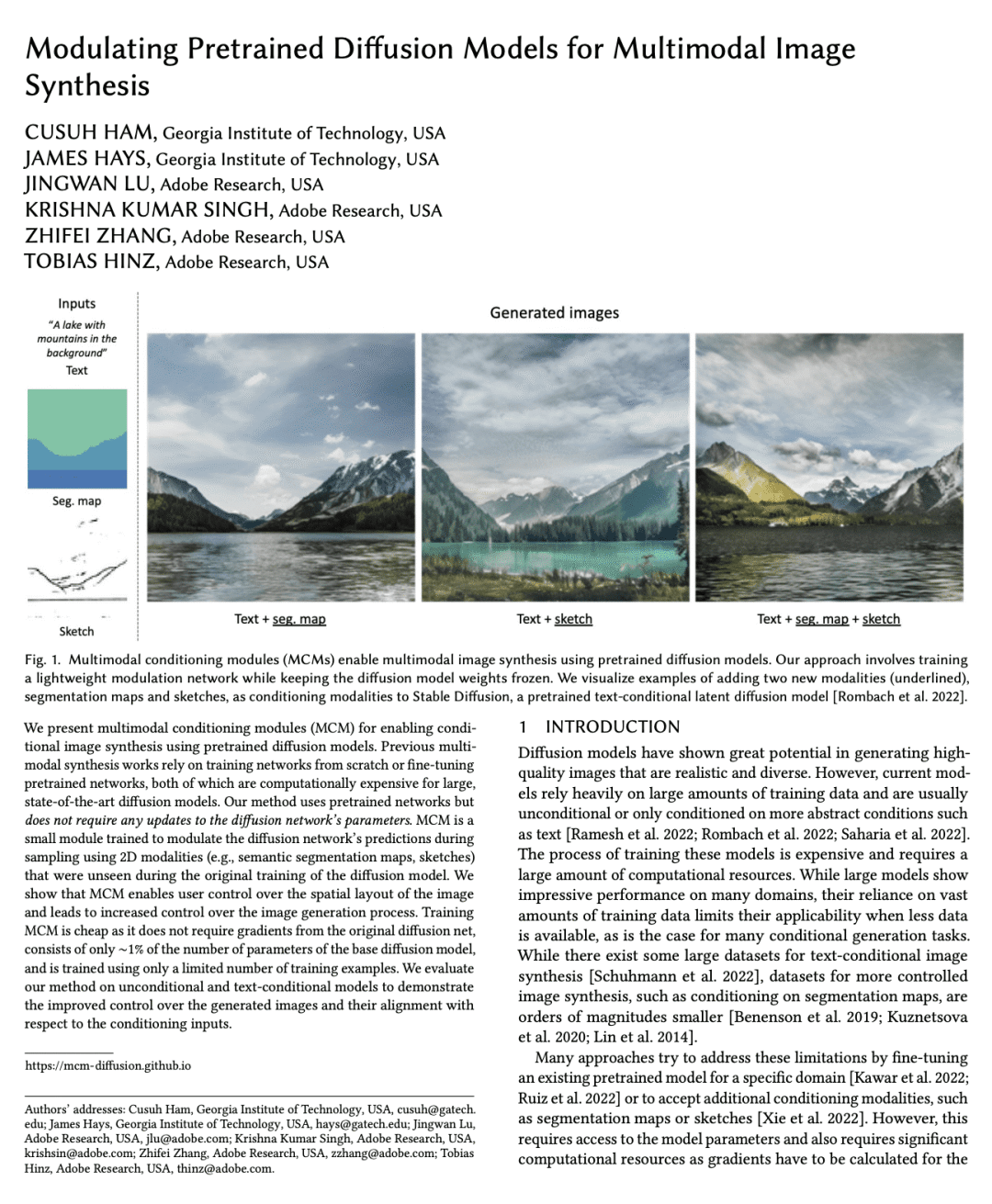

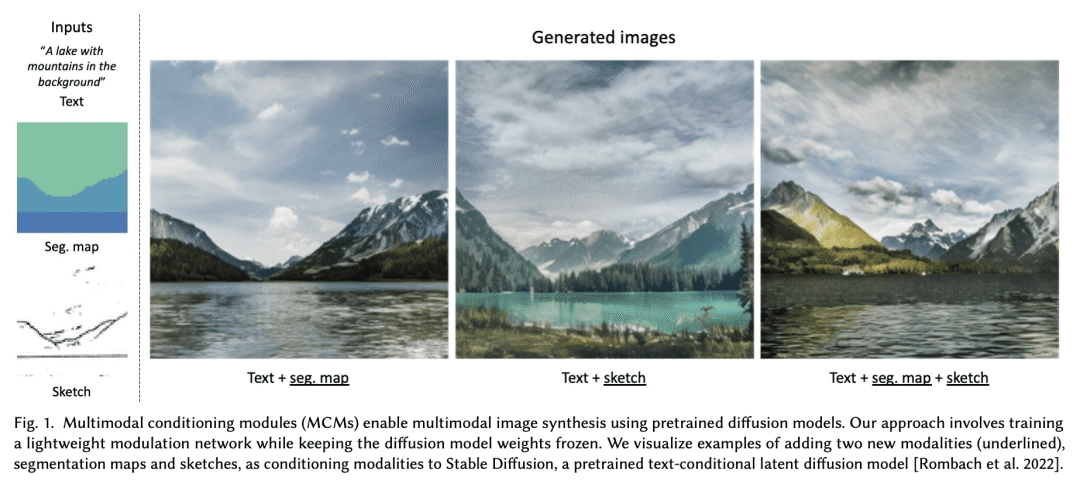

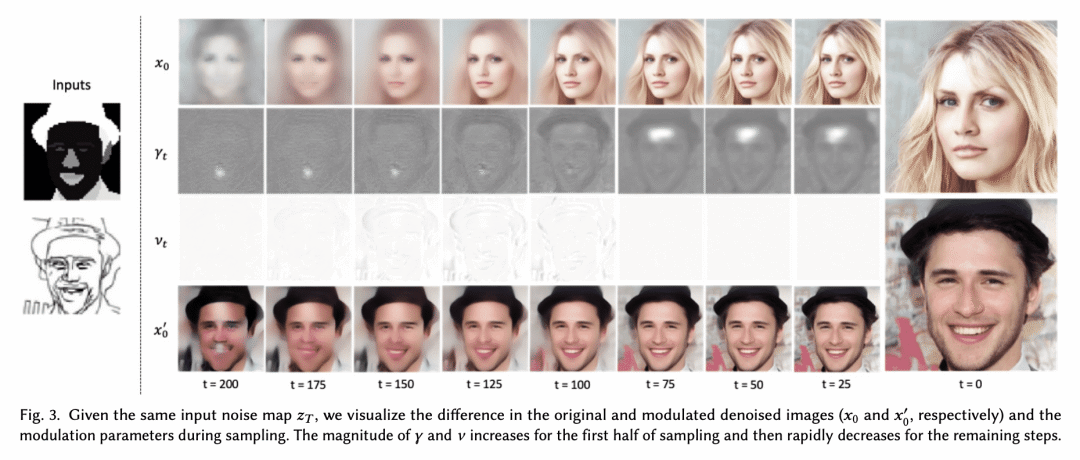

We present multimodal conditioning modules (MCM) for enabling conditional image synthesis using pretrained diffusion models. Previous multimodal synthesis works rely on training networks from scratch or fine-tuning pretrained networks, both of which are computationally expensive for large, state-of-the-art diffusion models. Our method uses pretrained networks but does not require any updates to the diffusion network's parameters. MCM is a small module trained to modulate the diffusion network's predictions during sampling using 2D modalities (e.g., semantic segmentation maps, sketches) that were unseen during the original training of the diffusion model. We show that MCM enables user control over the spatial layout of the image and leads to increased control over the image generation process. Training MCM is cheap as it does not require gradients from the original diffusion net, consists of only ∼1% of the number of parameters of the base diffusion model, and is trained using only a limited number of training examples. We evaluate our method on unconditional and text-conditional models to demonstrate the improved control over the generated images and their alignment with respect to the conditioning inputs.
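The abstract's central idea, a small trainable module that adjusts a frozen network's predictions without needing gradients from that network, can be illustrated with a toy sketch. This is not the paper's architecture: `frozen_denoiser`, `ToyMCM`, the additive form of the modulation, and the synthetic training target are all hypothetical stand-ins chosen only to show where gradients do and do not flow.

```python
import random


def frozen_denoiser(x_t, t):
    # Hypothetical stand-in for the pretrained diffusion network.
    # It is used as a black box: never differentiated, never updated.
    return [0.5 * v for v in x_t]


class ToyMCM:
    """Toy module (assumption): eps_mod = eps_base + w * cond, one weight."""

    def __init__(self):
        self.w = 0.0

    def modulate(self, eps_base, cond):
        # Adjust the frozen prediction using the conditioning signal.
        return [e + self.w * c for e, c in zip(eps_base, cond)]

    def train_step(self, x_t, t, cond, eps_target, lr=0.1):
        eps_base = frozen_denoiser(x_t, t)  # a constant with respect to w
        eps_mod = self.modulate(eps_base, cond)
        n = len(eps_mod)
        loss = sum((m - y) ** 2 for m, y in zip(eps_mod, eps_target)) / n
        # Gradient of the mean squared error with respect to w only;
        # nothing is backpropagated through frozen_denoiser.
        grad_w = sum(2 * (m - y) * c
                     for m, y, c in zip(eps_mod, eps_target, cond)) / n
        self.w -= lr * grad_w
        return loss


def train_toy_mcm(steps=200, seed=0):
    rng = random.Random(seed)
    cond = [1.0, 0.0, 1.0, 0.0]  # flattened toy "segmentation mask"
    mcm = ToyMCM()
    for _ in range(steps):
        x_t = [rng.gauss(0.0, 1.0) for _ in cond]
        # Synthetic target (assumption): frozen prediction plus 0.3 * cond.
        eps_target = [e + 0.3 * c
                      for e, c in zip(frozen_denoiser(x_t, 0), cond)]
        mcm.train_step(x_t, 0, cond, eps_target)
    return mcm
```

Calling `train_toy_mcm()` drives the single weight toward the synthetic offset of 0.3 while the stand-in denoiser stays untouched. In a real setting the module would be a small neural network and the frozen model a large pretrained one, but the division of labor would be the same: only the module's parameters receive updates.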

https://arxiv.org/abs/2302.12764
