Check Your Facts and Try Again: Improving Large Language Models with External Knowledge and Automated Feedback

B Peng, M Galley, P He, H Cheng, Y Xie, Y Hu, Q Huang, L Liden, Z Yu, W Chen, J Gao

[Microsoft Research]

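Key points:

- LLM-Augmenter augments a black-box LLM with plug-and-play modules that supply external knowledge and automated feedback;
- It greatly reduces hallucinations without sacrificing the fluency or informativeness of responses;
- Empirical validation shows it is effective in task-oriented dialog and open-domain question answering;
- By grounding ChatGPT's responses in consolidated external knowledge and automated feedback, LLM-Augmenter improves ChatGPT's factuality score.

One-sentence summary:

The LLM-Augmenter framework improves black-box LLMs such as ChatGPT by incorporating external knowledge and automated feedback, raising their factuality scores.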

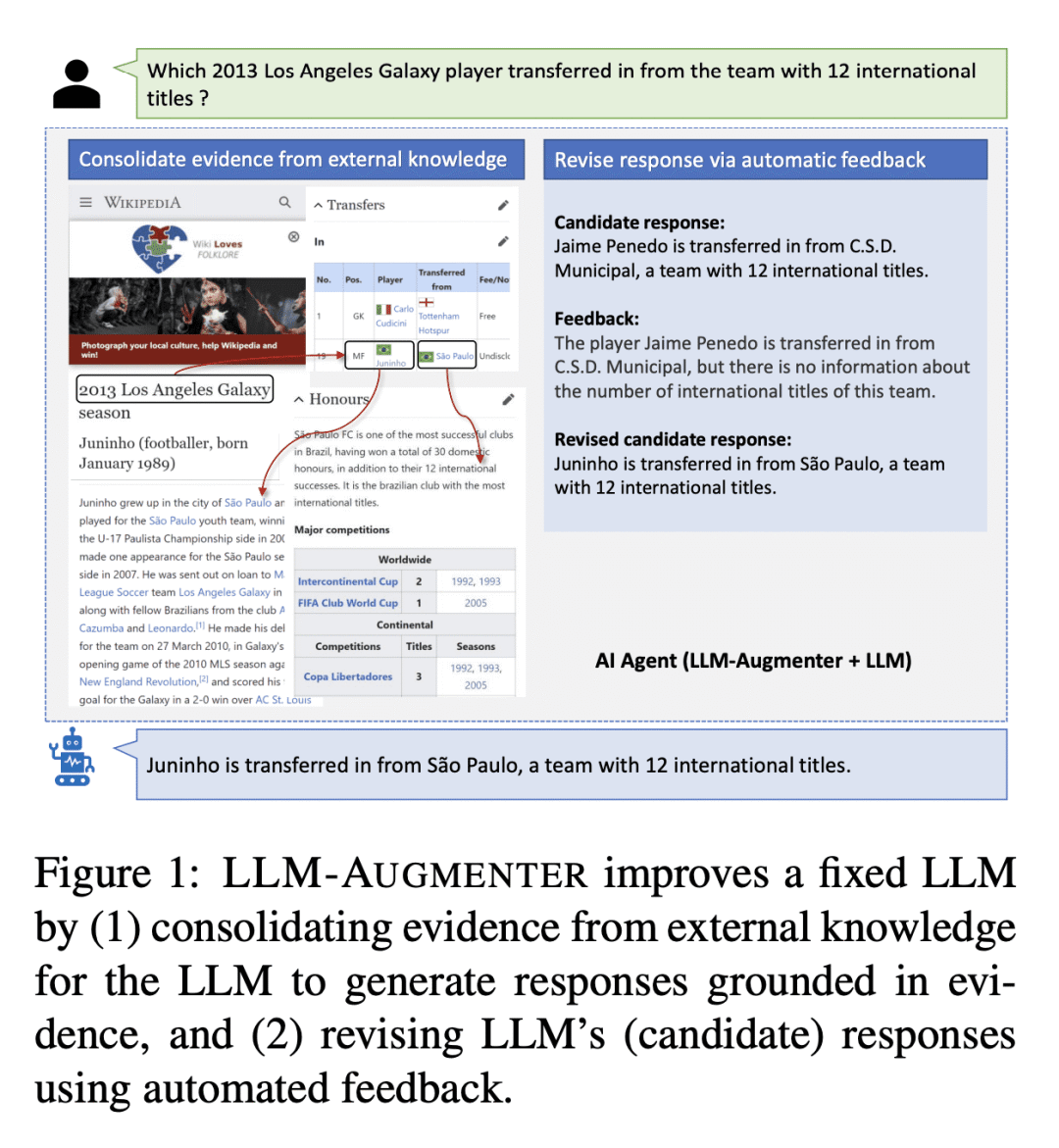

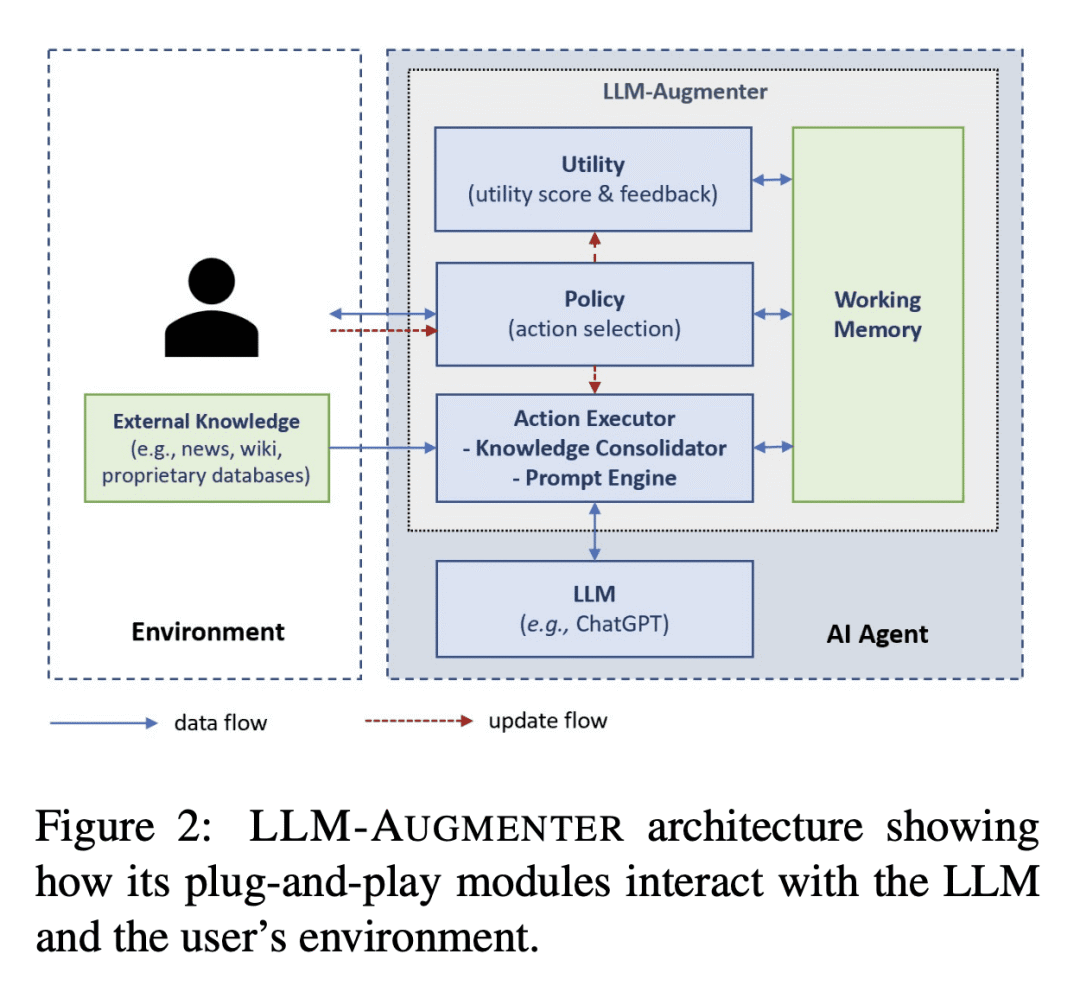

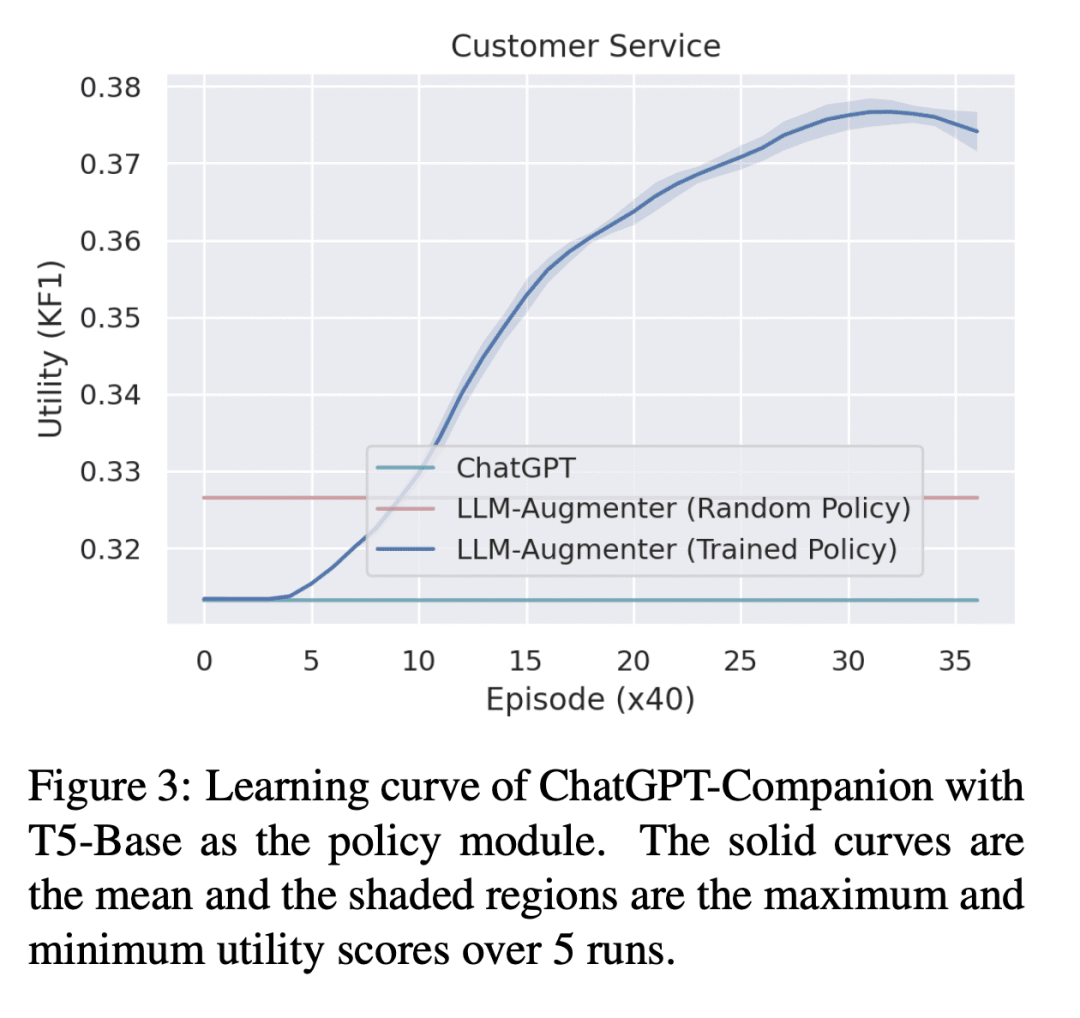

Large language models (LLMs), such as ChatGPT, are able to generate human-like, fluent responses for many downstream tasks, e.g., task-oriented dialog and question answering. However, applying LLMs to real-world, mission-critical applications remains challenging, mainly due to their tendency to generate hallucinations and their inability to use external knowledge. This paper proposes an LLM-Augmenter system, which augments a black-box LLM with a set of plug-and-play modules. Our system makes the LLM generate responses grounded in consolidated external knowledge, e.g., stored in task-specific databases. It also iteratively revises LLM prompts to improve model responses using feedback generated by utility functions, e.g., the factuality score of an LLM-generated response. The effectiveness of LLM-Augmenter is empirically validated on two types of mission-critical scenarios: task-oriented dialog and open-domain question answering. LLM-Augmenter significantly reduces ChatGPT's hallucinations without sacrificing the fluency and informativeness of its responses. We make the source code and models publicly available.

https://arxiv.org/abs/2302.12813
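
The abstract describes a loop: retrieve external knowledge, prompt the black-box LLM with it, score the response with a utility function, and revise the prompt with the resulting feedback. A minimal sketch of that loop might look like the following. Everything here is a hypothetical stand-in rather than the authors' released code: the word-overlap `retrieve`, the word-level `utility` score, the `threshold` and `max_rounds` parameters, and the `stub_llm` that replaces a real model call are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple


def retrieve(query: str, knowledge_base: List[str]) -> List[str]:
    """Toy retriever (assumption): keep facts sharing a word with the query."""
    query_words = set(query.lower().split())
    return [fact for fact in knowledge_base
            if query_words & set(fact.lower().split())]


def utility(response: str, evidence: List[str]) -> Tuple[float, str]:
    """Toy factuality score (assumption): share of response words in evidence."""
    evidence_words = set(" ".join(evidence).lower().split())
    words = response.lower().split()
    if not words:
        return 0.0, "The response is empty."
    unsupported = [w for w in words if w not in evidence_words]
    score = 1.0 - len(unsupported) / len(words)
    feedback = ("Unsupported words: " + ", ".join(unsupported)
                if unsupported else "")
    return score, feedback


def augmented_answer(query: str,
                     knowledge_base: List[str],
                     llm: Callable[[str], str],
                     threshold: float = 0.8,
                     max_rounds: int = 3) -> str:
    """Query the LLM, re-prompting with feedback until the score is high enough."""
    evidence = retrieve(query, knowledge_base)
    feedback = ""
    response = ""
    for _ in range(max_rounds):
        prompt = f"Evidence: {' | '.join(evidence)}\nQuestion: {query}\n"
        if feedback:
            prompt += f"Feedback on previous answer: {feedback}\n"
        response = llm(prompt)
        score, feedback = utility(response, evidence)
        if score >= threshold:
            break
    return response


def stub_llm(prompt: str) -> str:
    """Stand-in for a black-box LLM: hallucinates until it receives feedback."""
    if "Feedback" in prompt:
        return "the hotel has free parking"
    return "the hotel has a pool"


kb = ["the hotel has free parking", "the restaurant serves italian food"]
answer = augmented_answer("does the hotel have parking", kb, stub_llm)
print(answer)
```

The LLM is only ever called through `llm(prompt)`, which reflects the black-box setting: the loop changes the prompt, never the model.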

A few other papers worth noting:

[LG] Physics-Constrained Deep Learning for Climate Downscaling

P Harder, V Ramesh, A Hernandez-Garcia, Q Yang, P Sattigeri, D Szwarcman, C Watson, D Rolnick

[Fraunhofer ITWM & Mila Quebec AI Institute & IBM Research]

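Key points:

- Proposes a new method that builds physics-based constraints into neural network architectures for climate downscaling;
- The method improves the predictive performance of different deep learning architectures across a variety of climate datasets while guaranteeing physical constraints such as conservation of mass and energy;
- It can also improve super-resolution accuracy in other domains (e.g., standard images and satellite imagery), and it introduces a new deep learning architecture for downscaling along both the spatial and temporal dimensions;
- The constraint layers help with common problems in deep learning for downscaling: coastal effects can be suppressed, errors in critical regions shrink, out-of-distribution generalization improves, and training can become more stable.

One-sentence summary:

A new method that builds physics-based constraints into neural network architectures for climate downscaling, improving predictive performance while guaranteeing conservation laws.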

The availability of reliable, high-resolution climate and weather data is important to inform long-term decisions on climate adaptation and mitigation and to guide rapid responses to extreme events. Forecasting models are limited by computational costs and, therefore, often generate coarse-resolution predictions. Statistical downscaling, including super-resolution methods from deep learning, can provide an efficient method of upsampling low-resolution data. However, despite achieving visually compelling results in some cases, such models frequently violate conservation laws when predicting physical variables. In order to conserve physical quantities, we develop methods that guarantee physical constraints are satisfied by a deep learning downscaling model while also improving their performance according to traditional metrics. We compare different constraining approaches and demonstrate their applicability across different neural architectures as well as a variety of climate and weather datasets. Besides enabling faster and more accurate climate predictions, we also show that our novel methodologies can improve super-resolution for satellite data and standard datasets.

https://arxiv.org/abs/2208.05424
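
The abstract does not spell out how the constraints are enforced, so the following is only one simple possibility and not necessarily the authors' layer. It is an additive correction that shifts every high-resolution block so that its mean equals the corresponding low-resolution value, which conserves the coarse-grid quantity exactly. The function name `additive_constraint`, the nested-list grid representation, and the square `factor` x `factor` blocks are assumptions made for this sketch.

```python
from typing import List

Grid = List[List[float]]


def additive_constraint(pred: Grid, low_res: Grid, factor: int) -> Grid:
    """Shift each factor x factor block of `pred` so its mean matches `low_res`.

    Hypothetical post-processing step: `pred` is an unconstrained
    high-resolution prediction, `low_res` is the coarse input it must
    stay consistent with.
    """
    out = [row[:] for row in pred]  # copy so the input is left untouched
    for i, lr_row in enumerate(low_res):
        for j, target in enumerate(lr_row):
            rows = range(i * factor, (i + 1) * factor)
            cols = range(j * factor, (j + 1) * factor)
            block_mean = sum(pred[r][c] for r in rows for c in cols) / factor**2
            shift = target - block_mean  # same offset for every pixel in the block
            for r in rows:
                for c in cols:
                    out[r][c] += shift
    return out


low_res = [[2.0]]
pred = [[1.0, 2.0],
        [3.0, 6.0]]
constrained = additive_constraint(pred, low_res, factor=2)
block_mean = sum(sum(row) for row in constrained) / 4
print(constrained, block_mean)
```

Because the shift is applied uniformly, the fine-scale pattern predicted by the network is preserved and only the block average changes. An additive shift can produce negative values, so a non-negative quantity would need a different form of correction.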
