Inseq: An Interpretability Toolkit for Sequence Generation Models

Gabriele Sarti, Nils Feldhus, Ludwig Sickert, Oskar van der Wal

University of Groningen & University of Amsterdam & German Research Center for Artificial Intelligence (DFKI)

Key points:

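1. Past work on interpretability in natural language processing has focused mainly on popular classification tasks and has largely overlooked generation settings, partly because dedicated tools were lacking.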

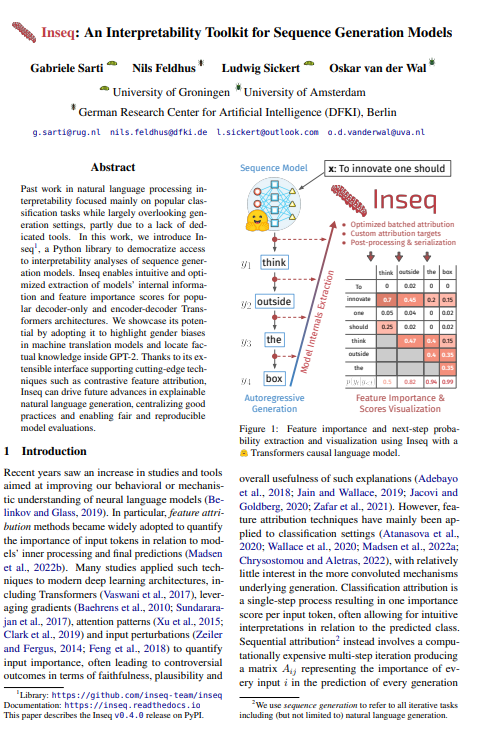

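2. This work introduces Inseq, a Python library that aims to democratize access to interpretability analyses of sequence generation models. Inseq supports intuitive and optimized extraction of model internals and feature importance scores for popular decoder-only and encoder-decoder Transformers architectures.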

One-sentence summary:

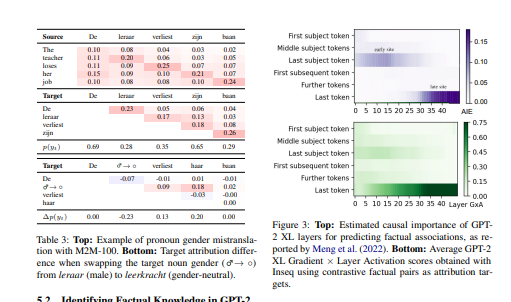

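The authors showcase Inseq's potential by using it to highlight gender biases in machine translation models and to locate factual knowledge inside GPT-2. Because its extensible interface supports cutting-edge techniques such as contrastive feature attribution, Inseq can drive future advances in explainable natural language generation, centralizing good practices and enabling fair and reproducible model evaluations.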

Past work in natural language processing interpretability focused mainly on popular classification tasks while largely overlooking generation settings, partly due to a lack of dedicated tools. In this work, we introduce Inseq, a Python library to democratize access to interpretability analyses of sequence generation models. Inseq enables intuitive and optimized extraction of models' internal information and feature importance scores for popular decoder-only and encoder-decoder Transformers architectures. We showcase its potential by adopting it to highlight gender biases in machine translation models and locate factual knowledge inside GPT-2. Thanks to its extensible interface supporting cutting-edge techniques such as contrastive feature attribution, Inseq can drive future advances in explainable natural language generation, centralizing good practices and enabling fair and reproducible model evaluations.
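To make "contrastive feature attribution" more concrete, here is a minimal sketch on a toy linear model. It is not Inseq's API and not the authors' implementation. It assumes the logits are computed as `W @ x`, so the gradient of the logit difference between a target token and a contrast ("foil") token with respect to input feature `j` is simply `W[target][j] - W[foil][j]`; multiplying by the input gives an input-times-gradient score. The names `logits`, `contrastive_attribution`, `W`, `x`, and the two-word vocabulary are all hypothetical.

```python
from typing import List


def logits(W: List[List[float]], x: List[float]) -> List[float]:
    """Toy linear model (assumption): one logit per vocabulary row of W."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def contrastive_attribution(
    W: List[List[float]], x: List[float], target: int, foil: int
) -> List[float]:
    """Input-times-gradient scores for logit[target] - logit[foil].

    For a linear model the gradient of the logit difference with respect
    to feature j is W[target][j] - W[foil][j].
    """
    return [(wt - wf) * xi for wt, wf, xi in zip(W[target], W[foil], x)]


# Hypothetical two-word vocabulary and three input features.
W = [
    [2.0, 0.0, 1.0],  # row 0: "he"
    [0.5, 1.5, 1.0],  # row 1: "she"
]
x = [1.0, 2.0, 3.0]

# Which features push the model toward "she" rather than "he"?
scores = contrastive_attribution(W, x, target=1, foil=0)
print(scores)
```

A positive score means the feature favors the target over the foil, and a negative score means the opposite. In this linear toy case the scores sum exactly to the logit difference; for real Transformer models that property does not hold for plain input-times-gradient.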

https://arxiv.org/pdf/2302.13942.pdf
