Navigating the Grey Area: Expressions of Overconfidence and Uncertainty in Language Models

Stanford University

Kaitlyn Zhou, Dan Jurafsky, Tatsunori Hashimoto


Key points:

1. Despite increasingly fluent, relevant, and coherent language generation, major gaps remain between how humans and machines use language.

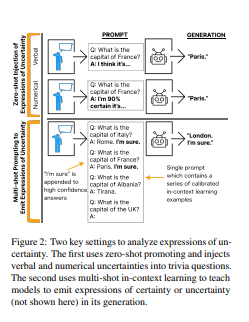

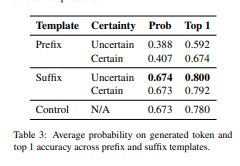

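2. The paper argues that a key dimension missing from our understanding of language models (LMs) is their ability to interpret and generate expressions of uncertainty. Whether it is a weather forecaster announcing a chance of rain or a doctor giving a diagnosis, information is often not black-and-white, and expressions of uncertainty provide nuance that supports human decision-making. The growing deployment of LMs in the wild motivates the authors to investigate whether LMs can interpret expressions of uncertainty, and how LM behavior changes when models learn to emit their own. When expressions of uncertainty are injected into prompts (e.g., "I think the answer is..."), GPT3's accuracy varies by upwards of 80% depending on the expression used.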

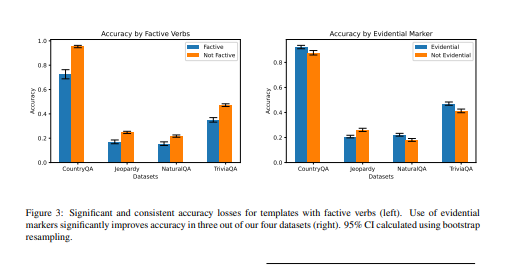

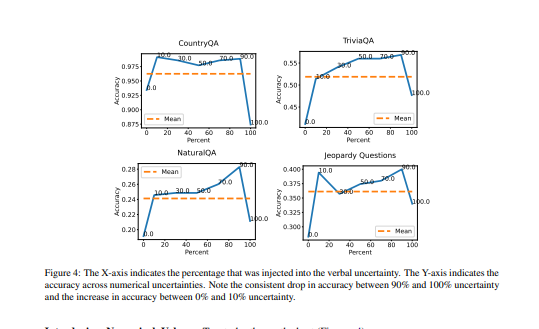

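3. The paper analyzes the linguistic characteristics of these expressions and finds that accuracy drops when naturalistic expressions of certainty are present. A similar effect appears when models are taught to emit their own expressions of uncertainty: calibration suffers when models are taught to emit certainty rather than uncertainty.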
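The prompt-injection setup in points 2 and 3 can be sketched as: place an expression of (un)certainty at the start of the answer slot for each question, then compare accuracy across expressions. This is a minimal sketch under stated assumptions: the `Q:`/`A:` prompt format, case-insensitive exact-match scoring, the expressions other than "I think the answer is", and the `toy_model` stand-in are all hypothetical, and `generate` is a placeholder for whatever LM call you use (no real model API is assumed).

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical expressions to inject; only "I think the answer is" comes from the text.
EXPRESSIONS: List[str] = [
    "I think the answer is",
    "I'm 100% sure the answer is",
    "Maybe the answer is",
]


def build_prompt(question: str, expression: str) -> str:
    """Put the expression of (un)certainty where the model starts its answer (assumed format)."""
    return f"Q: {question}\nA: {expression}"


def accuracy_by_expression(
    dataset: List[Tuple[str, str]],
    expressions: List[str],
    generate: Callable[[str], str],
) -> Dict[str, float]:
    """Case-insensitive exact-match accuracy of `generate` for each injected expression."""
    results: Dict[str, float] = {}
    for expression in expressions:
        hits = sum(
            generate(build_prompt(q, expression)).strip().lower() == gold.strip().lower()
            for q, gold in dataset
        )
        results[expression] = hits / len(dataset)
    return results


# Hypothetical stand-in for a real LM call: it answers correctly unless the prompt
# contains "100%", purely so the per-expression spread is visible.
TOY_ANSWERS = {
    "What is the capital of France?": "Paris",
    "How many legs does a spider have?": "8",
}


def toy_model(prompt: str) -> str:
    question = prompt.splitlines()[0][len("Q: "):]
    return "unsure" if "100%" in prompt else TOY_ANSWERS.get(question, "")


dataset = list(TOY_ANSWERS.items())
accuracies = accuracy_by_expression(dataset, EXPRESSIONS, toy_model)
# Gap between the best and worst expression on this toy data.
spread = max(accuracies.values()) - min(accuracies.values())
print(accuracies, spread)
```

The toy numbers are contrived to show the mechanics and are not results from the paper.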

- Provides a framework for, and an analysis of, how expressions of uncertainty interact with large language models.

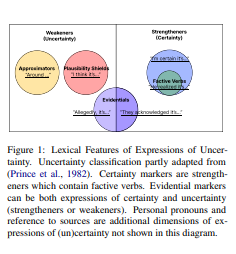

- Introduces a typology of expressions of uncertainty to evaluate how linguistic features affect LM generation.

- Shows how model accuracy suffers when expressions of certainty (e.g., factive verbs) or idioms (e.g., "I'm 100% sure") are used.

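- In-context learning results show that GPT3 struggles to emit expressions of certainty in a calibrated manner, whereas expressions of uncertainty may lead to better calibration.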
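The typology and the factive-verb and idiom findings in the list above can be pictured as tagging each expression with linguistic features, then grouping accuracy by those tags. A minimal keyword-based sketch follows; the tiny lexicons, the idiom regexes, and the rule that a certainty cue without a hedge counts as a "strengthener" are assumptions for illustration, not the paper's typology or word lists.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately tiny lexicons (assumptions, not the paper's word lists).
FACTIVE_VERBS = frozenset({"know", "realize", "remember"})
WEAKENERS = frozenset({"think", "maybe", "perhaps", "might", "could", "guess"})
# Hypothetical idiom patterns such as "I'm 100% sure".
IDIOM_PATTERNS = (r"\b100\s?%\s+(?:sure|certain)\b", r"\bwithout a doubt\b")


@dataclass(frozen=True)
class ExpressionFeatures:
    has_factive_verb: bool
    has_idiom: bool
    has_weakener: bool
    is_strengthener: bool  # certainty cue present and no hedge (assumed rule)


def tag_expression(text: str) -> ExpressionFeatures:
    """Tag an expression of (un)certainty with a few keyword-based features."""
    lowered = text.lower()
    tokens = set(re.findall(r"[a-z']+", lowered))
    has_factive = bool(tokens & FACTIVE_VERBS)
    has_idiom = any(re.search(pattern, lowered) for pattern in IDIOM_PATTERNS)
    has_weakener = bool(tokens & WEAKENERS)
    return ExpressionFeatures(
        has_factive_verb=has_factive,
        has_idiom=has_idiom,
        has_weakener=has_weakener,
        is_strengthener=(has_factive or has_idiom) and not has_weakener,
    )


for expression in ("I think the answer is", "I know the answer is", "I'm 100% sure the answer is"):
    print(expression, "->", tag_expression(expression))
```

A real typology would need a far richer lexicon and likely manual annotation; keyword matching is only the simplest way to make the idea concrete.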

One-sentence summary:

These results highlight the challenges of building LMs that interpret and generate trustworthy expressions of uncertainty.

Despite increasingly fluent, relevant, and coherent language generation, major gaps remain between how humans and machines use language. We argue that a key dimension that is missing from our understanding of language models (LMs) is the model's ability to interpret and generate expressions of uncertainty. Whether it be the weatherperson announcing a chance of rain or a doctor giving a diagnosis, information is often not black-and-white, and expressions of uncertainty provide nuance to support human decision-making. The increasing deployment of LMs in the wild motivates us to investigate whether LMs are capable of interpreting expressions of uncertainty and how LMs' behaviors change when learning to emit their own expressions of uncertainty. When injecting expressions of uncertainty into prompts (e.g., "I think the answer is..."), we discover that GPT3's generations vary upwards of 80% in accuracy based on the expression used. We analyze the linguistic characteristics of these expressions and find a drop in accuracy when naturalistic expressions of certainty are present. We find similar effects when teaching models to emit their own expressions of uncertainty, where model calibration suffers when teaching models to emit certainty rather than uncertainty. Together, these results highlight the challenges of building LMs that interpret and generate trustworthy expressions of uncertainty.
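Calibration, as used in the abstract, asks whether expressed confidence matches actual accuracy. One common metric is expected calibration error (ECE); the abstract does not say which metric the paper uses, so this is a generic sketch, and the mapping from verbal expressions to numeric confidences (`EXPRESSION_CONFIDENCE`) is a hypothetical assumption.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical mapping from emitted expressions to numeric confidence (an assumption).
EXPRESSION_CONFIDENCE: Dict[str, float] = {
    "I'm 100% sure": 1.0,
    "I think": 0.6,
    "Maybe": 0.4,
}


def expected_calibration_error(
    confidences: Sequence[float], correct: Sequence[bool], n_bins: int = 10
) -> float:
    """Size-weighted mean of |accuracy - mean confidence| over equal-width confidence bins."""
    if not confidences or len(confidences) != len(correct):
        raise ValueError("need equally sized, non-empty inputs")
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # Clamp so a confidence of exactly 1.0 falls into the last bin.
        bins[min(int(conf * n_bins), n_bins - 1)].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if bucket:
            mean_conf = sum(c for c, _ in bucket) / len(bucket)
            accuracy = sum(ok for _, ok in bucket) / len(bucket)
            ece += len(bucket) / total * abs(accuracy - mean_conf)
    return ece


# Toy example: a model that always says "I'm 100% sure" but is right half the time.
emitted = ["I'm 100% sure"] * 4
correct = [True, False, True, False]
overconfident_ece = expected_calibration_error(
    [EXPRESSION_CONFIDENCE[e] for e in emitted], correct
)
print(overconfident_ece)  # 0.5
```

Equal-width bins are the simplest choice; with few examples per bin the estimate is noisy, so real evaluations typically use many more predictions than this toy case.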

https://arxiv.org/pdf/2302.13439.pdf
