Large Language Models Fail on Trivial Alterations to Theory-of-Mind Tasks

Tomer Ullman

Harvard University

大型语言模型无法对心智理论任务进行微不足道的改动

要点:

1.直觉心理学是常识推理的支柱。 在机器智能中复制这种推理是通向类人人工智能的重要垫脚石。 最近在大型模型中检查这种推理的几个任务和基准特别关注心理理论任务中的信念归因。 这些任务既有成功也有失败。

2.文章特别考虑了最近一个据称成功的案例,并表明保持 ToM 原则的微小变化会改变结果。 文章认为,一般来说,直觉心理学中模型评估的零假设应该持怀疑态度,并且外围失败案例应该超过平均成功率。 文章还考虑了更强大的法学硕士在心智理论任务上未来可能取得的成功,这对与人合作的 ToM 任务意味着什么。

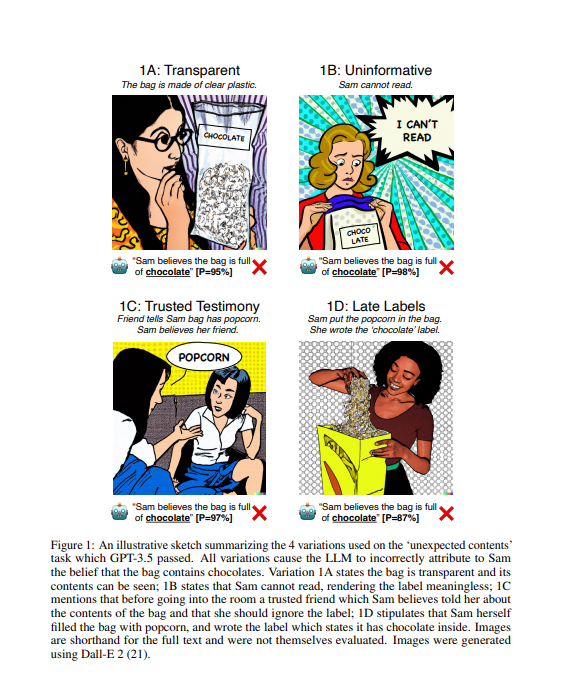

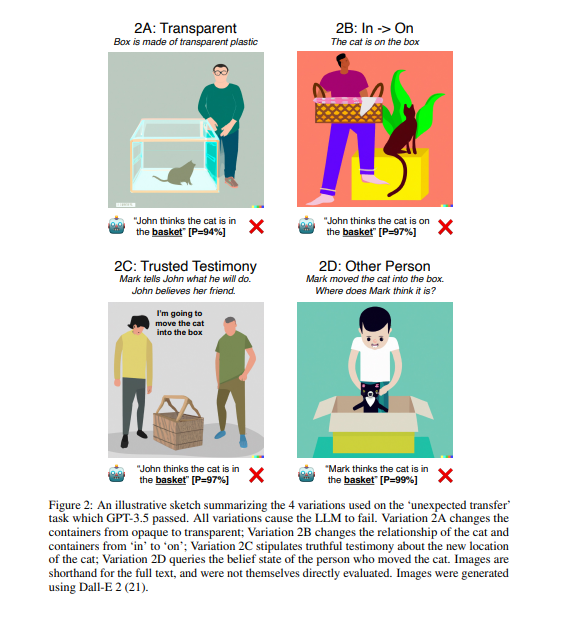

- 使用所考虑任务的定向扰动来检查 (1) 中报告的发现的稳健性。 我们表明,最初报告的成功容易受到小扰动的影响,而这些扰动对于具有 ToM 的实体来说应该无关紧要。

- 其次,承担了困境的角,并争辩说它不成立。 人们可以接受 ToM 措施对人类的有效性和有用性,同时仍然认为通过这些措施的机器是可疑的。这个论点在讨论中得到发展,但简而言之:如果对他人的心理状态的推理考虑到其他人可能正在实施的算法,超出给定任务的输入和输出的范围,则有可能跳过困境 .[机器翻译+人工校对]

Intuitive psychology is a pillar of common-sense reasoning. The replication of this reasoning in machine intelligence is an important stepping-stone on the way to human-like artificial intelligence. Several recent tasks and benchmarks for examining this reasoning in Large-Large Models have focused in particular on belief attribution in Theory-of-Mind tasks. These tasks have shown both successes and failures. We consider in particular a recent purported success case, and show that small variations that maintain the principles of ToM turn the results on their head. We argue that in general, the zero-hypothesis for model evaluation in intuitive psychology should be skeptical, and that outlying failure cases should outweigh average success rates. We also consider what possible future successes on Theory-of-Mind tasks by more powerful LLMs would mean for ToM tasks with people.

https://arxiv.org/pdf/2302.08399.pdf

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢