Permutation Equivariant Neural Functionals

A Zhou, K Yang, K Burns, Y Jiang, S Sokota, J. Z Kolter, C Finn

[Stanford University & CMU]


Key points:

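- Proposes a framework, called neural functional networks (NFNs), for designing neural networks that process weight-space objects;
- Focuses on the permutation symmetries that arise in weight space from the structure of neural networks;
- Introduces two kinds of equivariant NF-Layers as the building blocks of NFNs, which differ in their underlying symmetry assumptions and in parameter efficiency;
- Experimental results show that permutation equivariant neural functionals outperform previous methods and can effectively solve weight-space tasks.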
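The permutation symmetry mentioned in the key points can be checked numerically. The sketch below uses NumPy; the network sizes, the random weights and the `mlp` helper are illustrative assumptions, not taken from the paper. It reorders the hidden neurons of a two-layer ReLU MLP by permuting the rows of the first weight matrix and bias together with the columns of the second weight matrix, then confirms that the network still computes the same function.

```python
import numpy as np

def mlp(x, W1, b1, W2, b2):
    """Hypothetical two-layer ReLU MLP: x -> W2 @ relu(W1 @ x + b1) + b2."""
    h = np.maximum(W1 @ x + b1, 0.0)
    return W2 @ h + b2

rng = np.random.default_rng(0)
n_in, n_hidden, n_out = 4, 8, 3
W1 = rng.standard_normal((n_hidden, n_in))
b1 = rng.standard_normal(n_hidden)
W2 = rng.standard_normal((n_out, n_hidden))
b2 = rng.standard_normal(n_out)

# A random reordering of the hidden neurons.
perm = rng.permutation(n_hidden)

# Apply the same permutation to each hidden unit's incoming weights
# (rows of W1, entries of b1) and outgoing weights (columns of W2).
W1_p, b1_p = W1[perm], b1[perm]
W2_p = W2[:, perm]

x = rng.standard_normal(n_in)
y = mlp(x, W1, b1, W2, b2)
y_p = mlp(x, W1_p, b1_p, W2_p, b2)
print(np.allclose(y, y_p))  # True: same function, different weight arrays
```

A model that treated `(W1, b1, W2, b2)` as a flat vector would see the original and permuted weights as different inputs, even though they describe the same network; building this symmetry into the architecture is the inductive bias the work is about.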

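One-sentence summary:

Proposes a framework for designing neural networks that process weight-space objects, with a focus on permutation symmetry, and demonstrates experimentally that it is effective on a variety of weight-space tasks.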

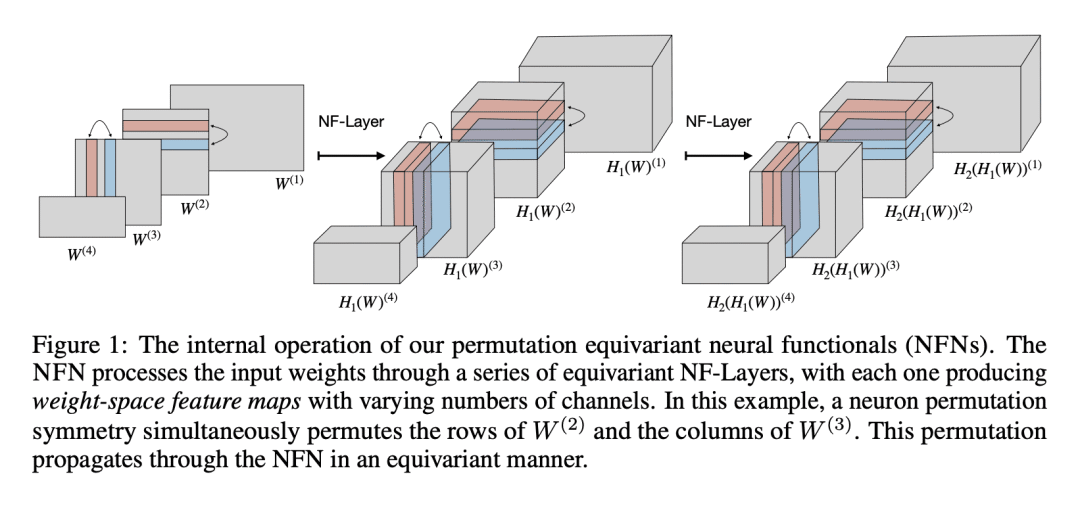

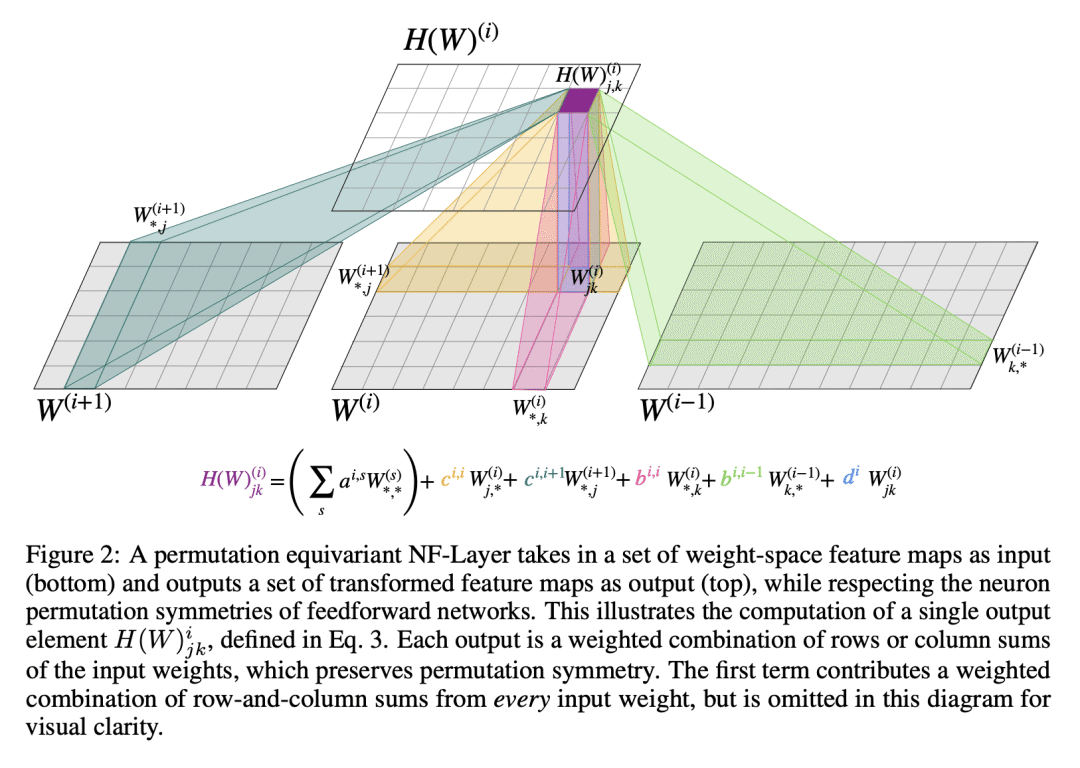

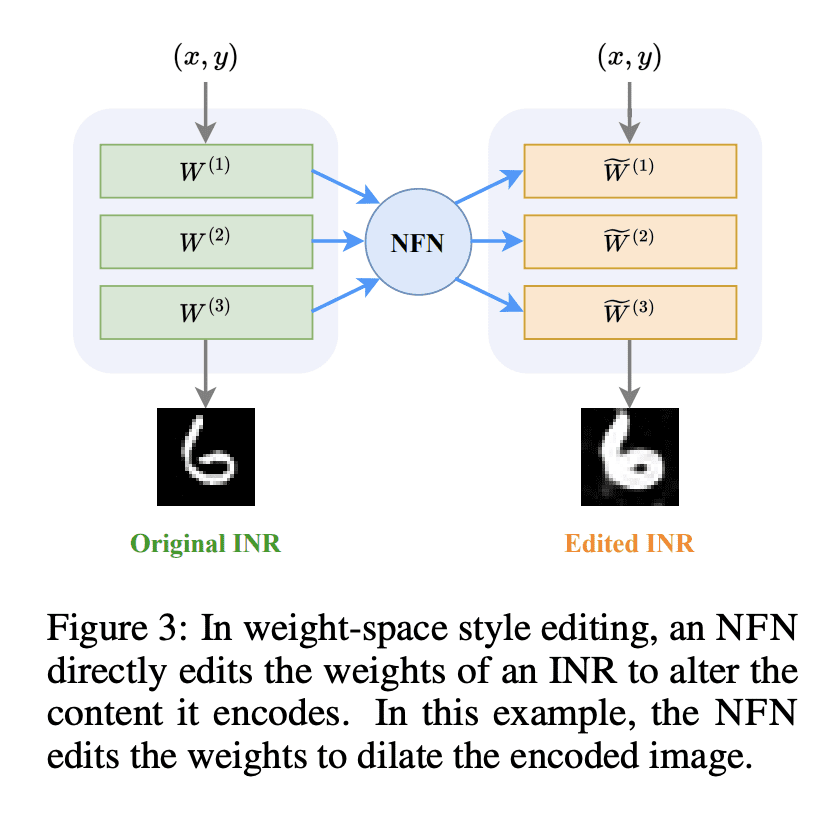

This work studies the design of neural networks that can process the weights or gradients of other neural networks, which we refer to as neural functional networks (NFNs). Despite a wide range of potential applications, including learned optimization, processing implicit neural representations, network editing, and policy evaluation, there are few unifying principles for designing effective architectures that process the weights of other networks. We approach the design of neural functionals through the lens of symmetry, in particular by focusing on the permutation symmetries that arise in the weights of deep feedforward networks because hidden layer neurons have no inherent order. We introduce a framework for building permutation equivariant neural functionals, whose architectures encode these symmetries as an inductive bias. The key building blocks of this framework are NF-Layers (neural functional layers) that we constrain to be permutation equivariant through an appropriate parameter sharing scheme. In our experiments, we find that permutation equivariant neural functionals are effective on a diverse set of tasks that require processing the weights of MLPs and CNNs, such as predicting classifier generalization, producing "winning ticket" sparsity masks for initializations, and editing the weights of implicit neural representations (INRs). In addition, we provide code for our models and experiments at this https URL.
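The idea of enforcing permutation equivariance through parameter sharing can be illustrated on a single weight matrix. The following is a minimal, hypothetical sketch, not the paper's NF-Layer: it treats one (m, n) matrix whose rows and columns may each be permuted independently, and uses only four shared scalars (`a`, `b`, `c`, `d`, assumed names) that combine each entry with its row mean, its column mean and the global mean. The paper's layers additionally handle several weight matrices, biases and channels, where a hidden-layer permutation acts on the rows of one matrix and the columns of the next.

```python
import numpy as np

def equivariant_matrix_layer(W, a, b, c, d):
    """Hypothetical simplified layer mapping an (m, n) matrix to an (m, n) matrix.

    Output = a*W + b*(row means) + c*(column means) + d*(global mean),
    with the means broadcast back to shape (m, n). Only four scalars are
    learned, independent of m and n (parameter sharing).
    """
    row_mean = W.mean(axis=1, keepdims=True)  # shape (m, 1), unchanged by column permutations
    col_mean = W.mean(axis=0, keepdims=True)  # shape (1, n), unchanged by row permutations
    all_mean = W.mean()                       # scalar, unchanged by any permutation
    return a * W + b * row_mean + c * col_mean + d * all_mean

rng = np.random.default_rng(1)
W = rng.standard_normal((5, 7))
p, q = rng.permutation(5), rng.permutation(7)  # independent row / column permutations
params = (0.5, -1.0, 2.0, 0.3)

# Equivariance: permuting the input permutes the output in the same way.
lhs = equivariant_matrix_layer(W[p][:, q], *params)
rhs = equivariant_matrix_layer(W, *params)[p][:, q]
print(np.allclose(lhs, rhs))  # True
```

Because pointwise nonlinearities also commute with permutations, stacking such layers with elementwise activations in between keeps the whole network equivariant, and the parameter count stays fixed regardless of the matrix size.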

Paper link: https://arxiv.org/abs/2302.14040

