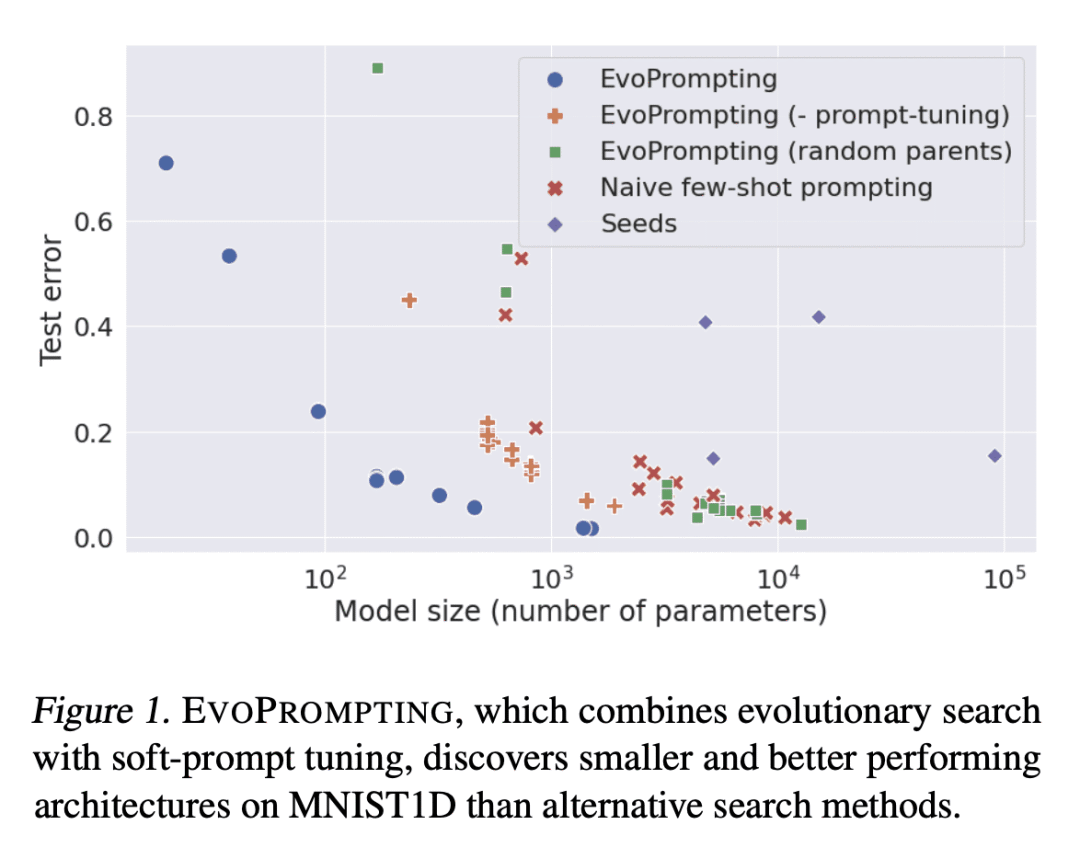

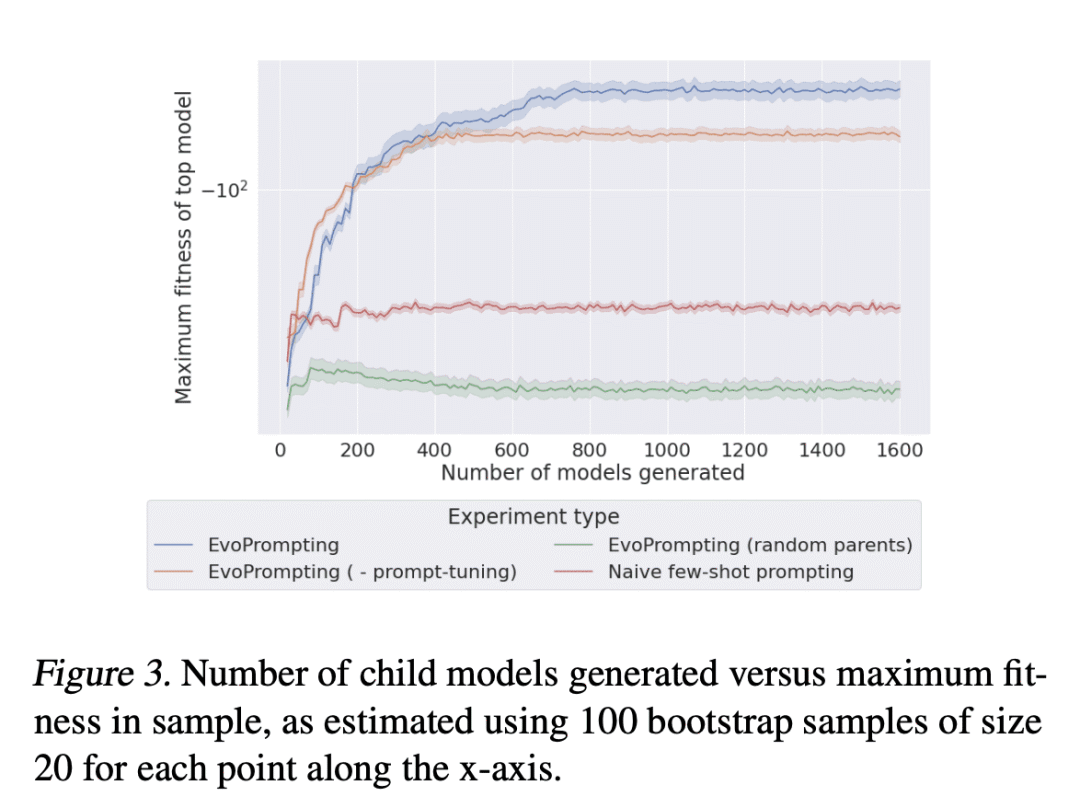

EvoPrompting combines evolutionary search with soft prompt-tuning to create accurate and efficient neural network architectures across a variety of machine learning tasks.

EvoPrompting: Language Models for Code-Level Neural Architecture Search

A. Chen, D. M. Dohan, D. R. So

[Google Brain & New York University]


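Key points:

- EvoPrompting improves the few-shot/in-context capabilities of language models and discovers novel, competitive neural architectures;
- On MNIST-1D and the CLRS Algorithmic Reasoning Benchmark, EvoPrompting outperforms both human-designed architectures and naive few-shot prompting;
- EvoPrompting is general enough to be adapted easily to searching for solutions to other kinds of reasoning tasks beyond NAS;
- Future work could scale EvoPrompting up and compare it against more competitive large-scale architectures, such as Transformers.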

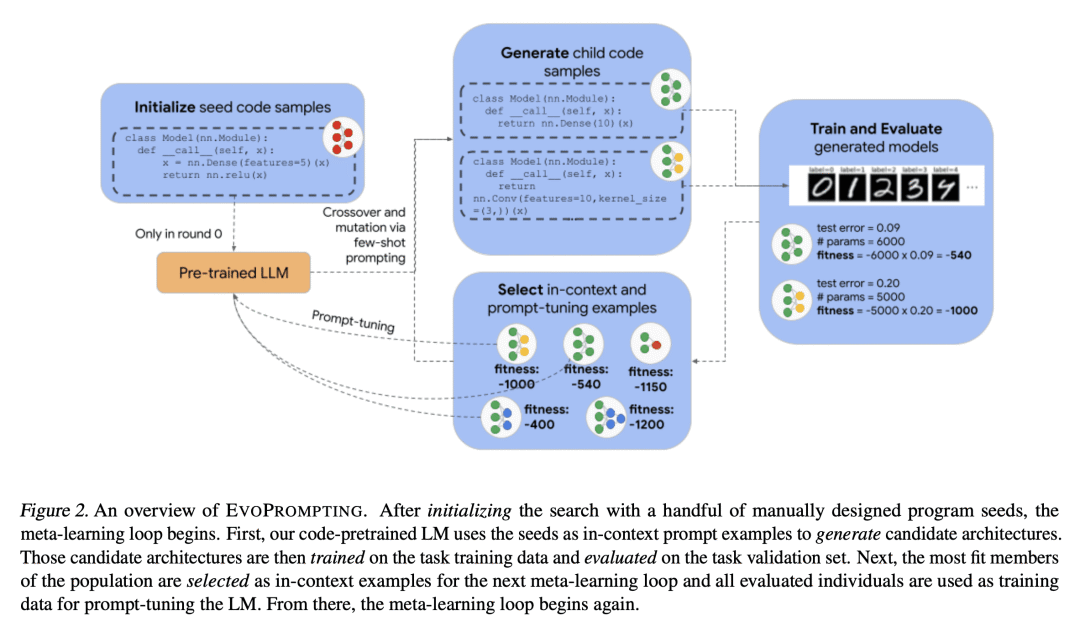

Given the recent impressive accomplishments of language models (LMs) for code generation, we explore the use of LMs as adaptive mutation and crossover operators for an evolutionary neural architecture search (NAS) algorithm. While NAS still proves too difficult a task for LMs to succeed at solely through prompting, we find that the combination of evolutionary prompt engineering with soft prompt-tuning, a method we term EvoPrompting, consistently finds diverse and high performing models. We first demonstrate that EvoPrompting is effective on the computationally efficient MNIST-1D dataset, where EvoPrompting produces convolutional architecture variants that outperform both those designed by human experts and naive few-shot prompting in terms of accuracy and model size. We then apply our method to searching for graph neural networks on the CLRS Algorithmic Reasoning Benchmark, where EvoPrompting is able to design novel architectures that outperform current state-of-the-art models on 21 out of 30 algorithmic reasoning tasks while maintaining similar model size. EvoPrompting is successful at designing accurate and efficient neural network architectures across a variety of machine learning tasks, while also being general enough for easy adaptation to other tasks beyond neural network design.
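The core idea in the abstract, using a language model as the mutation and crossover operator inside an evolutionary loop, can be illustrated with a minimal sketch. Everything below is an assumption made for illustration, not the authors' implementation: candidates are encoded as lists of layer widths rather than code, `stub_operator` is a hypothetical stand-in for the LM call, `toy_fitness` is a made-up score, selection is simple elitism, and the soft prompt-tuning step is omitted entirely.

```python
import random
from typing import Callable, List, Sequence

# Hypothetical encoding: a candidate "architecture" is a list of layer widths.
Candidate = List[int]


def toy_fitness(candidate: Candidate) -> float:
    """Made-up score (assumption): prefer widths near 32 and small total size."""
    closeness = -sum(abs(w - 32) for w in candidate)
    size_penalty = 0.1 * sum(candidate)
    return closeness - size_penalty


def stub_operator(parents: Sequence[Candidate], rng: random.Random) -> Candidate:
    """Stand-in for the LM: splice two parents, then perturb one width.

    In the setting described above, this is where a few-shot prompt built
    from parent programs would be sent to a language model.
    """
    a, b = rng.sample(list(parents), 2)
    cut = rng.randint(1, min(len(a), len(b)) - 1)
    child = a[:cut] + b[cut:]  # crossover
    i = rng.randrange(len(child))
    child[i] = max(1, child[i] + rng.choice([-8, -4, 4, 8]))  # mutation
    return child


def evolve(
    seeds: Sequence[Candidate],
    operator: Callable[[Sequence[Candidate], random.Random], Candidate],
    fitness: Callable[[Candidate], float],
    rounds: int = 10,
    children_per_round: int = 8,
    keep: int = 4,
    seed: int = 0,
) -> List[Candidate]:
    """Run a simple elitist evolutionary loop and return the survivors."""
    rng = random.Random(seed)
    population = [list(c) for c in seeds]
    for _ in range(rounds):
        children = [operator(population, rng) for _ in range(children_per_round)]
        # Keep the best candidates among parents and children.
        ranked = sorted(population + children, key=fitness, reverse=True)
        population = ranked[:keep]
    return population


if __name__ == "__main__":
    seeds = [[64, 64, 64], [16, 16, 16], [8, 48, 96]]
    for cand in evolve(seeds, stub_operator, toy_fitness):
        print(cand, round(toy_fitness(cand), 1))
```

Because the operator is passed in as a plain callable, the stub could in principle be swapped for a function that prompts a real model with the parent candidates, which is the role the abstract assigns to the LM.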

Paper link: https://arxiv.org/abs/2302.14838
