Monocular Depth Estimation using Diffusion Models

S Saxena, A Kar, M Norouzi, D J. Fleet

[Google Research]

基于扩散模型的单目深度估计

要点:

-

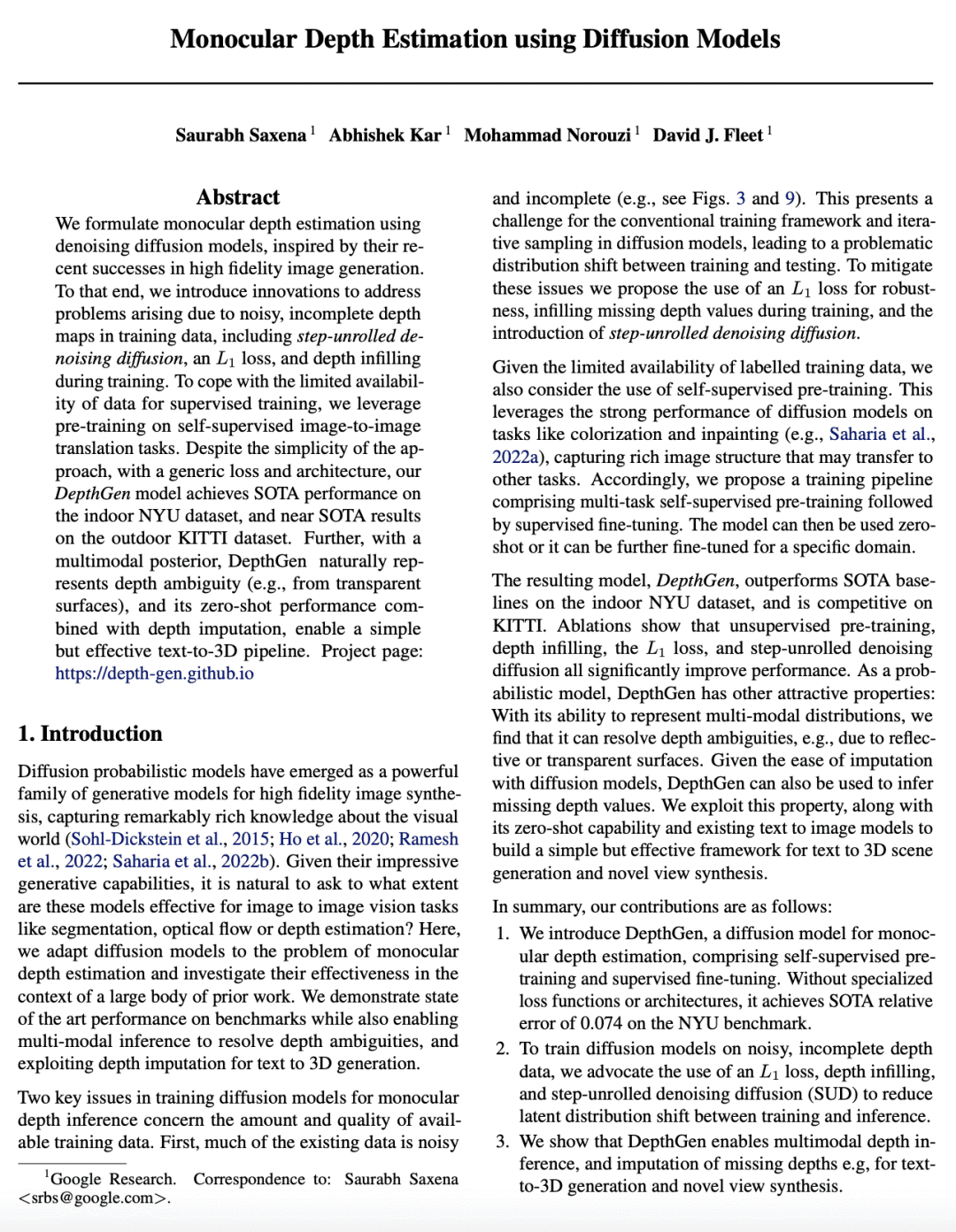

DepthGen 是一种用于单目深度估计的扩散模型,基于自监督预训练和监督微调,在NYU基准上达到SOTA相对误差0.074; -

使用L1损失、深度填充和分步去噪扩散,在含噪、不完整的深度数据上训练扩散模型; -

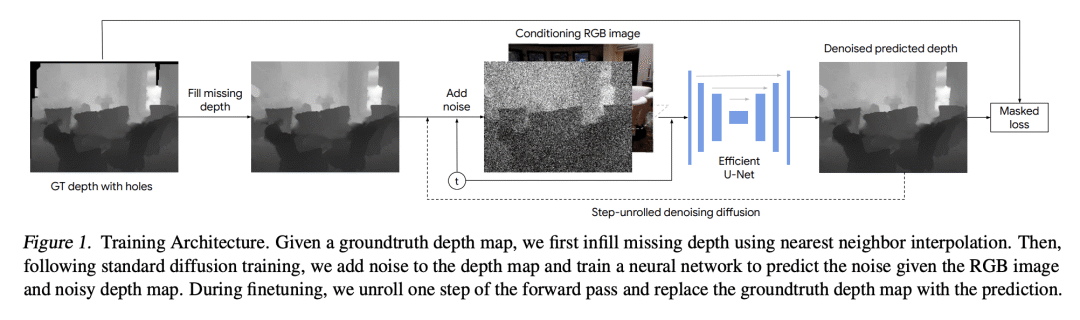

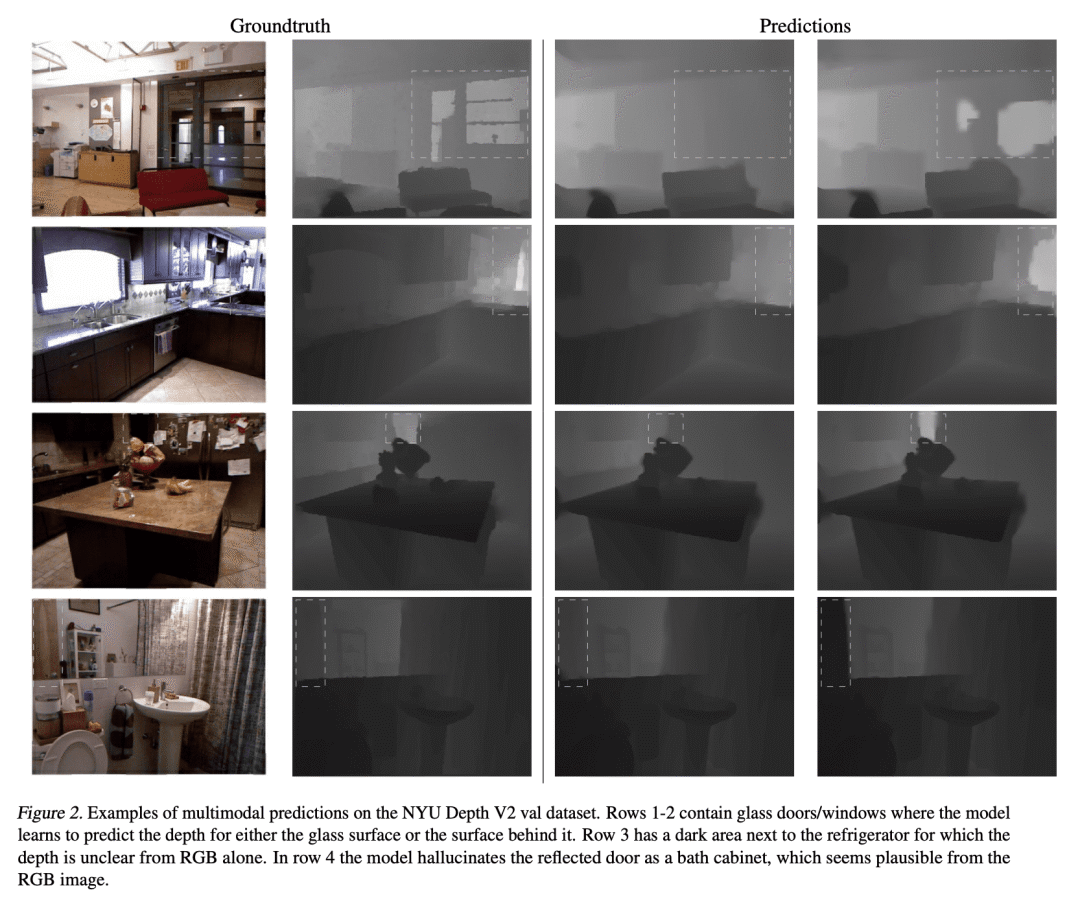

展示了多模态深度推断和缺失深度分配,用于文本到3D生成和新视图合成。

一句话总结:

用去噪扩散模型进行单目深度估计的新方法,在具有挑战性的深度估计基准上取得了最先进的结果,实现了多模态深度推断和归纳。

We formulate monocular depth estimation using denoising diffusion models, inspired by their recent successes in high fidelity image generation. To that end, we introduce innovations to address problems arising due to noisy, incomplete depth maps in training data, including step-unrolled denoising diffusion, an L1 loss, and depth infilling during training. To cope with the limited availability of data for supervised training, we leverage pre-training on self-supervised image-to-image translation tasks. Despite the simplicity of the approach, with a generic loss and architecture, our DepthGen model achieves SOTA performance on the indoor NYU dataset, and near SOTA results on the outdoor KITTI dataset. Further, with a multimodal posterior, DepthGen naturally represents depth ambiguity (e.g., from transparent surfaces), and its zero-shot performance combined with depth imputation, enable a simple but effective text-to-3D pipeline. Project page: this https URL

论文链接:https://arxiv.org/abs/2302.14816

内容中包含的图片若涉及版权问题,请及时与我们联系删除

评论

沙发等你来抢